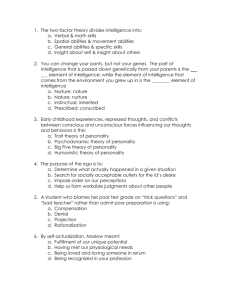

Current Issues in the Assessment of Intelligence and Personality November, 1993
