Lecture 6

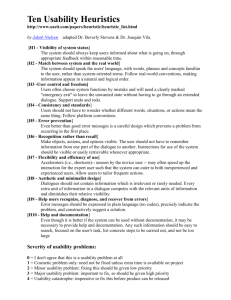

CS 575 Spring 2012 CSULA Bapa Rao Lecture 6

Agenda for today
• Review of previous meeting
• Student Comments
• Heuristic Evaluation Presentation
• Team reports
• Heuristic Evaluation Task Assignment
• In-class heuristic evaluations [time permitting]
• Alan Kay video

Review of previous meeting
• App Ideas / Use Cases
• Team Finalization
• Each team should own one app idea they will pursue

Team Reports
• Red: Armando, Albert, Phu
• Orange: Phanti, Rain, Long
• Green: Ali, Behin
• Blue: Yin yin, Sina, Amir
• Pink: Raudel, Dinesh, Tony
• Purple: Gavik, Hardik, Mohammed
• Brown: Shweta, Sowmya
• Grey: Harshil, Haresh, Vishrut

Work assignment from the previous week
• (Revise & re-)write the high-level use case spec for your assigned app.
  – For some, this will be catch-up
• Goal is to have a paper prototype
• Point of view:
  – What experience do you want to provide for the user?
  – Relate to needfinding exercise results for validation
• Storyboard two alternative design ideas
  – Use web, library or bookstore resources along with Aziz’s guide/sketch
  – Generate paper storyboards & upload pics to your wiki page
• Discuss & choose one of the ideas
• Make an Oz-style paper prototype of the chosen idea
  – (see HanMail)
• Make a video of use of paper prototype, upload & link
• Bring paper prototype to next class
• Refer: Assignment 3 in Stanford course link

Heuristic Evaluation
• Motivation
• Nielsen’s Usability heuristics
• Nielsen’s Severity Ratings
• How many evaluators?
• How to conduct a heuristic evaluation

Motivation
• Usability engineering is costly
• Heuristic Evaluation uses guidelines rather than hard criteria
  – “Discount User Testing”
• Most of the value for low investment
• http://www.useit.com/papers/guerrilla_hci.html

The Scenario Concept (e.g., Wizard of Oz)

Cost-Benefit Analysis

Nielsen’s Usability Heuristics
• Visibility of System Status
• Match Between System and the real world
• User control and freedom
• Consistency and standards
• Error prevention
• Recognition rather than recall
• Flexibility and efficiency of use
• Aesthetic and minimalist design
• Help users recognize, diagnose, and recover from errors
• Help and documentation

Usability Heuristics
• Visibility of system status: The system should always keep users informed about what is going on, through appropriate feedback within reasonable time.
• Match between system and the real world: The system should speak the users' language, with words, phrases and concepts familiar to the user, rather than system-oriented terms. Follow real-world conventions, making information appear in a natural and logical order.
• User control and freedom: Users often choose system functions by mistake and will need a clearly marked "emergency exit" to leave the unwanted state without having to go through an extended dialogue. Support undo and redo.
• Consistency and standards: Users should not have to wonder whether different words, situations, or actions mean the same thing. Follow platform conventions.
• Error prevention: Even better than good error messages is a careful design which prevents a problem from occurring in the first place. Either eliminate error-prone conditions or check for them and present users with a confirmation option before they commit to the action.
• Recognition rather than recall: Minimize the user's memory load by making objects, actions, and options visible. The user should not have to remember information from one part of the dialogue to another. Instructions for use of the system should be visible or easily retrievable whenever appropriate.
• Flexibility and efficiency of use: Accelerators -- unseen by the novice user -- may often speed up the interaction for the expert user such that the system can cater to both inexperienced and experienced users. Allow users to tailor frequent actions.
• Aesthetic and minimalist design: Dialogues should not contain information which is irrelevant or rarely needed. Every extra unit of information in a dialogue competes with the relevant units of information and diminishes their relative visibility.
• Help users recognize, diagnose, and recover from errors: Error messages should be expressed in plain language (no codes), precisely indicate the problem, and constructively suggest a solution.
• Help and documentation: Even though it is better if the system can be used without documentation, it may be necessary to provide help and documentation. Any such information should be easy to search, focused on the user's task, list concrete steps to be carried out, and not be too large.

Nielsen’s Severity Ratings
• Factors:
  – Frequency
  – Impact
  – Persistence
• 0: Not a problem
• 1: Cosmetic
• 2: Minor usability problem
• 3: Major usability problem
• 4: Usability catastrophe

Number of evaluators vs. problems found

How Many Evaluators? Cost-benefit
• Number of evaluators: 3-5

Not Every Evaluator Catches Every Problem

How Many Evaluators, contd.
• Taking the mean of 3 evaluations usually works

How to do Heuristic Testing
• Do “walkthroughs” first
• Each individual evaluator inspects the interface alone
• After, evaluators can communicate and findings can be aggregated
• Record:
  – Written reports from each evaluator or
  – Observer notes
• 1-2 hrs
  – Probably closer to 30 min for our app
• Evaluator goes through the interface several times and inspects the various dialogue elements and compares them with a list of recognized usability principles (the heuristics).
• Also allowed to consider any additional usability principles or results that come to mind that may be relevant for any specific dialogue element or category
• Go through the interface at least twice
  – Get a feel for the flow of the interaction and the general scope of the system
  – Focus on specific interface elements while knowing how they fit into the larger whole.
• Observer can assist
http://www.useit.com/papers/heuristic/heuristic_evaluation.html

Heuristic eval followup: Design Advice
• Debriefing Session
  – Evaluators
  – Observer
  – Design team
• Brainstorming mode
  – Address design problems
  – Identify positive aspects

Output from heuristic testing process
• A list of usability problems in the interface with references to those usability principles that were violated by the design in each case in the opinion of the evaluator.
• Not enough to say “I don’t like this”
  – Explain why with respect to heuristic criteria

In-class heuristic evaluation
• Pick a team to be the evaluators for a paper design

Assignment for the coming week
• Self-evaluation of your work from the previous week (storyboard / paper prototype)
• Start a journal on your personal page documenting the work you have personally done, and your personal thoughts & ideas
  – Back-fill with work you have already done
  – Include self-evaluation
  – For every activity done by your team, you should post documentation of your personal contribution and self-evaluation
    • Not everyone has to do every task, but everyone has to do significant tasks in the team activity
  – This will, in part, determine your personal final grade
    • I can’t grade you if I don’t know what you have done
    • I will provide evaluation templates as appropriate

Assignment for the coming week (contd)
• Read the papers on Heuristic Evaluation posted on the wiki.
• Complete heuristic evaluation of your paper prototype and post your evaluation report on the team wiki page.
  – Be sure to include your personal (course-related) activity and contribution on your personal wiki page
• Do a debriefing and make needed design changes
• Post a self-evaluation of your heuristic evaluation.
• Put an implementation proposal and plan together
  – See the sample on wiki
  – You have 2 weeks to build the prototype, starting May 18th, so limit your scope accordingly
    • + 1 week for testing
  – Identify platform
  – Decide what backend functions you will “fake” and how
  – Should be demo-able in class on June 9th 2012
• No coding till you finish heuristic evaluation step
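
Sketch: recording findings for the evaluation report

The evaluation report asked for above is a list of usability problems, each tied to the heuristic it violates and rated on the 0-4 severity scale, with ratings from several evaluators averaged. The Python sketch below shows one possible way to keep such a record. It is only an illustration: the names Finding, rate, mean_severity and report are hypothetical and not part of the course materials, and a wiki table or spreadsheet would serve just as well.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List

# Severity scale as listed on the "Severity Ratings" slide.
SEVERITY_LABELS = {
    0: "Not a problem",
    1: "Cosmetic",
    2: "Minor usability problem",
    3: "Major usability problem",
    4: "Usability catastrophe",
}


@dataclass
class Finding:
    """One usability problem (hypothetical record layout, for illustration)."""
    description: str   # what the evaluator observed
    heuristic: str     # which heuristic the evaluator believes is violated
    ratings: Dict[str, int] = field(default_factory=dict)  # evaluator -> severity

    def rate(self, evaluator: str, severity: int) -> None:
        """Store one evaluator's severity rating, rejecting values outside 0-4."""
        if severity not in SEVERITY_LABELS:
            raise ValueError(f"severity must be 0-4, got {severity}")
        self.ratings[evaluator] = severity

    def mean_severity(self) -> float:
        """Mean of all ratings so far; raises StatisticsError if there are none."""
        return float(mean(self.ratings.values()))


def report(findings: List[Finding]) -> List[Finding]:
    """Return the findings ordered from most to least severe (by mean rating)."""
    return sorted(findings, key=lambda f: f.mean_severity(), reverse=True)


if __name__ == "__main__":
    # Made-up example problem and evaluator names.
    problem = Finding("No feedback after tapping Submit",
                      "Visibility of system status")
    for evaluator, severity in [("Evaluator A", 3), ("Evaluator B", 4), ("Evaluator C", 2)]:
        problem.rate(evaluator, severity)
    for f in report([problem]):
        print(f"{f.mean_severity():.1f}  {f.heuristic}: {f.description}")
```

Keeping the violated heuristic as a required field is a deliberate choice here: it forces each entry to explain why something is a problem, rather than just "I don't like this".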