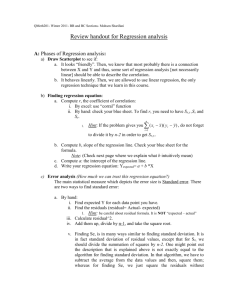

Least-squares regression line

• A regression line is a straight line that describes how a response variable y changes as an explanatory variable x changes. We often use it to predict the value of the response variable y for a given value of the explanatory variable x.
• A straight line relating a response variable y to an explanatory variable x has an equation of the form $y = a + b x$, where b is the slope and a is the intercept.
• The least-squares regression line of y on x is the line that makes the sum of the squares of the vertical distances of the data points from the line as small as possible.
• The equation of the least-squares regression line of y on x is $\hat{y} = a + b x$, with slope $b = r \, s_y / s_x$ and intercept $a = \bar{y} - b \bar{x}$.

week4

• The slope of the least-squares regression line, b, is the amount by which y changes when x increases by one unit.
• So if the regression line equation is $y = a + b x$ and we change x to x + 1 (increasing x by 1 unit), the resulting y is $y^* = a + b(x + 1) = a + b x + b$. If b > 0 then y increases, and if b < 0 then y decreases.
• The change in the response variable y corresponding to a change of k units in the explanatory variable x is equal to k·b.

Example

A grocery store conducted a study to determine the relationship between the amount of money x spent on advertising and the weekly volume y of sales. Six different levels of advertising expenditure were tried in a random order over a six-week period. The accompanying data were observed (in units of \$100).

| Week | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| Weekly sales, y | 10.2 | 11.5 | 16.1 | 20.3 | 25.6 | 28.0 |
| Amount spent on advertising, x | 1.0 | 1.25 | 1.5 | 2.0 | 2.5 | 3.0 |

• Below is the scatterplot and Minitab output.

[Scatterplot of sales (vertical axis, 10 to 30) versus ad. cost (horizontal axis, 1 to 3)]

| Variable | N | Mean | StDev |
|---|---|---|---|
| ad.cost | 6 | 1.875 | 0.771 |
| sales | 6 | 18.62 | 7.31 |

Correlation of sales and ad.cost = 0.990, P-Value = 0.000

$b = r \dfrac{s_y}{s_x} = 0.99 \times \dfrac{7.31}{0.771} = 9.39$

$a = \bar{y} - b \bar{x} = 18.62 - 9.39 \times 1.875 = 1.01$

• MINITAB commands: Stat > Regression > Regression

The regression equation is
sales = 1.00 + 9.39 ad. cost

| Predictor | Coef | StDev | T | P |
|---|---|---|---|---|
| Constant | 1.004 | 1.363 | 0.74 | 0.502 |
| ad. cost | 9.3937 | 0.6807 | 13.80 | 0.000 |

S = 1.173   R-Sq = 97.9%   R-Sq(adj) = 97.4%

• The output above gives the prediction equation sales = 1.00 + 9.39 ad. cost. This can be used (after some diagnostic checks) for predicting sales. For example, the predicted sales when the amount spent on advertising is 15 is $\widehat{\text{sales}} = 1.00 + 9.39 \times 15 = 141.85$.

Extrapolation

• Extrapolation is the use of the regression line for prediction outside the range of values of the explanatory variable x. Such predictions are often not accurate.
• For example, predicting the weekly sales when the amount spent on advertising is \$600 would not be accurate.

Interpreting the regression line

• The slope and intercept of the least-squares line depend on the units of measurement; you cannot conclude anything from their size.
• The least-squares regression line always passes through the point $(\bar{x}, \bar{y})$ on the graph of y against x.

Coefficient of determination, $r^2$

• The square of the correlation, $r^2$, is the fraction of the variation in the values of y that is explained by the least-squares regression of y on x.
• The use of $r^2$ to describe the success of regression in explaining the response y is very common.
• In the above example, $r^2 = 0.979 = 97.9\%$, i.e. 97.9% of the variation in sales is explained by the regression of sales on ad. cost.

Example from Term test, Summer ’99

• MINITAB analyses of data on math and verbal SAT scores are given below.
Correlations (Pearson)

| | Verbal | Math |
|---|---|---|
| Math | 0.275 (0.000) | |
| GPA | 0.322 (0.000) | 0.194 (0.006) |

Cell contents: correlation (P-value)

The regression equation is
GPA = 1.11 + 0.00256 Verbal

| Predictor | Coef | StDev | T | P |
|---|---|---|---|---|
| Constant | 1.1075 | 0.3200 | 3.46 | 0.001 |
| Verbal | 0.0025560 | 0.0005333 | 4.79 | 0.000 |

S = 0.5507   R-Sq = 10.4%   R-Sq(adj) = 9.9%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 6.9682 | 6.9682 | 22.98 | 0.00 |
| Residual Error | 198 | 60.0518 | 0.3033 | | |
| Total | 199 | 67.0200 | | | |

a) Which of SAT verbal or SAT math is the better predictor of GPA?
b) What percent of the variation in GPA is explainable by the verbal scores?
c) By the math scores?
d) Indicate directly on the scatterplot below what it is that is minimized when we regress GPA on verbal SAT score. Give its actual numerical value for this regression.

[Scatterplot of GPA (0 to 4) versus Verbal (400 to 800)]

e) In each case below, either make the prediction, or indicate any reservations about making a prediction, or indicate what should be done in order to make a prediction.
i) Predict the GPA of someone with a verbal SAT score of 700.
ii) Predict the GPA of someone with a verbal SAT score of 250.
iii) Predict the verbal score of someone with a GPA of 3.15.

Residuals

• A residual is the difference between an observed value of the response variable and the value predicted by the regression line. That is, residual = observed y - predicted y = $y - \hat{y}$. Residuals are also called ‘errors’ and denoted by e.
• A negative residual for a particular value of the explanatory variable x means that the predicted value overestimates the true value, i.e. $y - \hat{y} < 0 \Rightarrow y < \hat{y}$.
• Similarly, when a residual is positive, the predicted value underestimates the true value of the response, i.e. $y - \hat{y} > 0 \Rightarrow y > \hat{y}$.

Example

• For the sales data above, the fitted line is sales = 1.00 + 9.39 ad. cost. When x = 1.0, y = 10.2 and $\hat{y} = 10.39$, so the residual is $y - \hat{y} = 10.2 - 10.39 = -0.19$.
• MINITAB commands: Stat > Regression > Regression, then click Storage and choose Fits and Residuals. The output is given below.

| Row | ad. cost | sales | FITS1 | RESI1 |
|---|---|---|---|---|
| 1 | 1.00 | 10.2 | 10.3972 | -0.19719 |
| 2 | 1.25 | 11.5 | 12.7456 | -1.24561 |
| 3 | 1.50 | 16.1 | 15.0940 | 1.00596 |
| 4 | 2.00 | 20.3 | 19.7909 | 0.50912 |
| 5 | 2.50 | 25.6 | 24.4877 | 1.11228 |
| 6 | 3.00 | 28.0 | 29.1846 | -1.18456 |

Residuals and Model Assumptions

• The mean of the least-squares residuals is always zero.
• A model that allows for the possibility that the observations do not lie exactly on a straight line is $y = a + b x + e$, where e is a random error.
• For inferences about the model, we make the following assumptions about the random errors.
– Errors are normally distributed with mean 0 and constant variance.
– Errors are independent.

Residual plots

• Residual plots help us assess the model assumptions and the fit of a regression line.
• Recommended residual plots include:
i) Normal probability plot of residuals, and some other plots such as a histogram, box-plot etc. Check for normality. If the residuals are skewed, a transformation of the response variable may help.
ii) Plot of residuals versus the predictor or the fitted values. Look for curvature suggesting the need for a higher-order model or transformations, as shown in the following plot.

[Residual plot showing a curved pattern]

Also look for trends in dispersion, e.g. an increasing dispersion as the fitted values increase, in which case the assumption of constant variance of the errors is not valid and a transformation of the response may help, e.g. log or square root.
iii) Plot of residuals versus time order (if the data were taken in some sequence) and versus any other excluded variable that you think might be relevant. Look for patterns suggesting that this variable has an influence on the relationship among the other variables.
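The formulas for the slope and intercept and the Fits and Residuals output for the sales example can be reproduced in a few lines. The following is a minimal sketch in Python with NumPy, not the MINITAB procedure used in these notes; the variable names are illustrative assumptions. It computes the slope as b = r·s_y/s_x and the intercept as a = ȳ - b·x̄, then the fitted values and residuals, and checks that the residuals average to zero.

```python
import numpy as np

# Sales data from the advertising example (units of $100).
x = np.array([1.0, 1.25, 1.5, 2.0, 2.5, 3.0])        # amount spent on advertising
y = np.array([10.2, 11.5, 16.1, 20.3, 25.6, 28.0])   # weekly sales

# Summary statistics; ddof=1 gives the sample standard deviation.
r = np.corrcoef(x, y)[0, 1]
s_x = x.std(ddof=1)
s_y = y.std(ddof=1)

# Least-squares slope and intercept from the formulas in the notes.
b = r * s_y / s_x
a = y.mean() - b * x.mean()

# Fitted values and residuals (observed y minus predicted y).
fits = a + b * x
residuals = y - fits

print(f"slope b = {b:.4f}, intercept a = {a:.4f}, r^2 = {r**2:.3f}")
print("fits:     ", np.round(fits, 4))
print("residuals:", np.round(residuals, 5))
print("mean of residuals:", residuals.mean())  # zero up to rounding error
```

Up to rounding, the slope, intercept, fits and residuals should agree with the Coef, FITS1 and RESI1 columns of the MINITAB output above.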
Outliers and influential observations

• An outlier is an observation that lies outside the overall pattern of the other observations.
• Points that are outliers in the y direction of a scatterplot have large residuals, but other outliers need not have large residuals.
• An observation is influential for a statistical calculation if removing it would markedly change the result of the calculation.
• Points that are outliers in the x direction of a scatterplot are often influential for the least-squares regression line.

Example: Term test, Summer ’99

• MINITAB analyses of data on math and verbal SAT scores are given below.

The regression equation is
GPA = 1.11 + 0.00256 Verbal

| Predictor | Coef | StDev | T | P |
|---|---|---|---|---|
| Constant | 1.1075 | 0.3200 | 3.46 | 0.001 |
| Verbal | 0.0025560 | 0.0005333 | 4.79 | 0.000 |

S = 0.5507   R-Sq = 10.4%   R-Sq(adj) = 9.9%

Unusual Observations

| Obs | Verbal | GPA | Fit | StDev Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 15 | 405 | 1.9000 | 2.1427 | 0.1089 | -0.2427 | -0.45 X |
| 40 | 490 | 1.2000 | 2.3600 | 0.0685 | -1.1600 | -2.12R |
| 89 | 361 | 2.4000 | 2.0302 | 0.1310 | 0.3698 | 0.69 X |
| 108 | 759 | 2.8000 | 3.0475 | 0.0954 | -0.2475 | -0.46 X |
| 113 | 547 | 1.4000 | 2.5056 | 0.0468 | -1.1056 | -2.01R |
| 121 | 780 | 1.3000 | 3.1012 | 0.1057 | -1.8012 | -3.33RX |
| 127 | 544 | 1.4000 | 2.4980 | 0.0477 | -1.0980 | -2.00R |
| 131 | 578 | 0.3000 | 2.5849 | 0.0401 | -2.2849 | -4.16R |
| 132 | 430 | 2.4000 | 2.2066 | 0.0965 | 0.1934 | 0.36 X |
| 136 | 760 | 1.1000 | 3.0501 | 0.0959 | -1.9501 | -3.60RX |

a) In the scatterplot below, circle the observations possessing the 3 largest residuals.

[Scatterplot of GPA (0 to 4) versus Verbal (400 to 800)]

b) Circle below all the values that are outliers in the x-direction, and hence potentially the most influential observations.

[Scatterplot of GPA (0 to 4) versus Verbal (400 to 800)]

Association and causation

• In many studies of the relationship between two variables, the goal is to establish that changes in the explanatory variable cause changes in the response variable.
• An association between an explanatory variable x and a response variable y, even if it is very strong, is not by itself good evidence that changes in x actually cause changes in y.
• Some explanations for an observed association: the dashed double-arrow lines show an association, and the solid arrows show a cause-and-effect link. The variable x is explanatory, y is the response and z is a lurking variable.

Lurking Variables

• A lurking variable is a variable that is not among the explanatory or response variables in a study and yet may influence the interpretation of relationships among those variables.
• Lurking variables can make a correlation or regression misleading.
• In the sales example above, a possible lurking variable is the type of advertising being used, e.g. radio, TV, street promotion etc.

Confounding

• Two variables are confounded when their effects on a response variable cannot be distinguished from each other.
• The confounded variables may be either explanatory variables or lurking variables.
• Examples 2.42 and 2.43 on page 157 in IPS.

Example (Term test May 98)

• MINITAB analyses of data (Reading.mtw) on pre1 and post1 scores are given below.

The regression equation is
Post1 = 1.85 + 0.636 Pre1

| Predictor | Coef | StDev | T | P |
|---|---|---|---|---|
| Constant | 1.852 | 1.185 | 1.56 | 0.123 |
| Pre1 | 0.6358 | 0.1158 | 5.49 | 0.000 |

S = 2.820   R-Sq = 32.0%   R-Sq(adj) = 31.0%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 239.74 | 239.74 | 30.15 | 0.000 |
| Residual Error | 64 | 508.88 | 7.95 | | |
| Total | 65 | 748.62 | | | |

Unusual Observations

| Obs | Pre1 | Post1 | Fit | StDev Fit | Residual | StResid |
|---|---|---|---|---|---|---|
| 30 | 8.0 | 13.000 | 6.939 | 0.404 | 6.061 | 2.17R |

[Scatterplot of Post1 (0 to 15) versus Pre1 (5 to 15), with points plotted as the letters B, D and S]

a) Does it make sense to use the equation found by MINITAB’s regression procedure for predicting post1 scores from pre1 scores? Also, circle the most unusual value in the data set. Is this an influential observation?
b) Comment on the distribution of residuals based on the following plots.

[Histogram of the Residuals (response is Post1)]
[Normal Probability Plot of the Residuals (response is Post1)]

c) What do you learn from the following plot?

[Residuals Versus Pre1 (response is Post1)]

d) Describe one problem that can be spotted from a plot like the one above, and then draw below what the corresponding plot would have to look like.
e) From the above plots, would you say that ‘group’ is an important lurking variable in our regression of post1 on pre1? Why (not)?

Here are some more MINITAB outputs.

The regression equation is
Pre1 = 5.72 + 0.504 Post1

| Predictor | Coef | StDev | T | P |
|---|---|---|---|---|
| Constant | 5.7203 | 0.8026 | 7.13 | 0.000 |
| Post1 | 0.50367 | 0.09173 | 5.49 | 0.000 |

S = 2.510   R-Sq = 32.0%   R-Sq(adj) = 31.0%

Correlations (Pearson)

| | Pre1 | Pre2 | Post1 | Post2 |
|---|---|---|---|---|
| Pre2 | 0.335 (0.006) | | | |
| Post1 | 0.566 (0.000) | 0.345 (0.005) | | |
| Post2 | 0.089 (0.478) | 0.206 (0.097) | 0.064 (0.607) | |
| Post3 | -0.037 (0.766) | 0.181 (0.146) | 0.470 (0.000) | -0.042 (0.738) |

Cell contents: correlation (P-value)

Stem-and-leaf of Post3, N = 66
Leaf Unit = 1.0

| Depth | Stem | Leaves |
|---|---|---|
| 2 | 3 | 01 |
| 7 | 3 | 22333 |
| 9 | 3 | 45 |
| 12 | 3 | 667 |
| 15 | 3 | 899 |
| 22 | 4 | 0001111 |
| 30 | 4 | 22222333 |
| (7) | 4 | 4455555 |
| 29 | 4 | 6677 |
| 25 | 4 | 88888899999999 |
| 11 | 5 | 001 |
| 8 | 5 | 333 |
| 5 | 5 | 4455 |
| 1 | 5 | 7 |

f) For each of the following, make a prediction if you can, and if you cannot, explain why. (Assume that the variable ‘group’ may be ignored.)
i) Predict the post1 score of someone with pre1 score = 45.
ii) Predict the pre1 score of someone with post1 score = 10.
iii) Predict the post1 score of someone with pre1 score = 11.
g) If we regressed post3 on pre2, what proportion of the total variation in post3 scores will be explained by the linear relation?
h) If the std. dev. of post3 is 50% bigger than the std. dev. of pre2, estimate how much of an increase there is in post3 score corresponding to an increase of 1.0 in pre2, on average.

$\text{slope} = 0.181 \times \dfrac{s_{\text{post3}}}{s_{\text{pre2}}} = 0.181 \times 1.5 = 0.27$

i) Is the std. dev. of post3 scores closest to 0.1, 0.5, 1, 5, 20 or 50?

Cautions about regression and correlation

• Correlation measures only linear association, and fitting a straight line makes sense only when the overall pattern of the relationship is linear. Always plot the data before calculating.
• Extrapolation (using the regression line to predict values far outside the range of the data that we used to fit it) often produces unreliable predictions.
• Correlations and least-squares regressions are not resistant. Always plot the data and look for potentially influential points.
• Lurking variables can make a correlation or regression misleading. Plot residuals against time and against other variables that may influence the relationship between x and y.
• High correlation does not imply causation.
• A correlation based on averages over many individuals is usually higher than the correlation between the same variables based on data for individuals.

Question from Term Test Oct 2000

a) On the plot below, draw in with bars exactly what is minimized (after squaring and summing) if we fit a least-squares line to predict W from Z.
b) Here is a scatterplot with a positive association between x and y. Explain how you can change this into a negative association without changing the x or y values, but by introducing new information about the data.
c) Suppose that in a regression study, the observations are taken one per week, over many weeks. What type of diagnostic should you examine? Draw an example of what you would not like to see in this diagnostic plot, if you want to use your simple regression of y on x. Explain briefly what the problem is.
d) Consider the scatterplot and the possible fitted line below.
For the line drawn above, draw a rough picture of the following residual plots.
i) Residuals vs x.
ii) Histogram of residuals.

Question from Term Test Oct 2000

In a study of car engine exhaust emissions, nitrogen oxide (NOX) and carbon monoxide (CO), in grams per mile, were measured for a sample of 46 similar light-duty engines.

a) On the graph below, circle the two values that likely had the biggest influence on the slope of the l.s. line fitted to all the data.

[Scatterplot of NOX (1 to 3) versus CO (0 to 20)]

b) If we were to remove the two most influential observations mentioned above, would the slope of the l.s. line increase or decrease?

Here are some MINITAB outputs:

The regression equation is
NOX = 1.83 - 0.0631 CO

| Predictor | Coef | StDev | T | P |
|---|---|---|---|---|
| Constant | 1.83087 | 0.09616 | 19.04 | 0.000 |
| CO | -0.06309 | 0.01011 | -6.24 | 0.000 |

S = 0.3568   R-Sq = 46.9%   R-Sq(adj) = 45.7%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 4.9562 | 4.9562 | 38.92 | 0.000 |
| Residual Error | 44 | 5.6027 | 0.1273 | | |
| Total | 45 | 10.5589 | | | |

Unusual Observations

| Obs | CO | NOX | Fit | StDev Fit | Residual | St.Resid |
|---|---|---|---|---|---|---|
| 22 | 23.5 | 0.8600 | 0.3465 | 0.1660 | 0.5135 | 1.63 X |
| 24 | 22.9 | 0.5700 | 0.3849 | 0.1602 | 0.1851 | 0.58 X |
| 32 | 4.3 | 2.9400 | 1.5602 | 0.0644 | 1.3798 | 3.93 R |

c) What percent of the total variation in NOX is still left unexplained even after taking into account the CO values?
d) How much does the NOX emission change when CO decreases by 10 grams?
e) Tom predicts a CO of 13.15 if NOX = 1.0 (using the fitted regression equation in the above output). Do you agree? Explain.
f) Jim predicts a NOX of 0.06 if CO = 28. Do you agree? Explain.
g) The sum of squared deviations of the NOX measurements about their mean is 10.56. The sum of squared deviations of the NOX values from the l.s. line is less than 10.56. The latter is what percent of the former?

We continue with the pursuit of a good prediction equation for NOX measurements. Have a look at the MINITAB outputs and answer the following.
A) First we try deleting the 3 most unusual observations.

The regression equation is
NOX = 1.85 - 0.0724 CO

| Predictor | Coef | StDev | T | P |
|---|---|---|---|---|
| Constant | 1.85109 | 0.08663 | 21.37 | 0.000 |
| CO | -0.07243 | 0.01026 | -7.06 | 0.000 |

S = 0.2809   R-Sq = 54.8%   R-Sq(adj) = 53.7%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 3.9287 | 3.9287 | 49.79 | 0.000 |
| Residual Error | 41 | 3.2352 | 0.0789 | | |
| Total | 42 | 7.1639 | | | |

Unusual Observations

| Obs | CO | NOX | Fit | StDev Fit | Residual | StResid |
|---|---|---|---|---|---|---|
| 22 | 19.0 | 0.7800 | 0.4749 | 0.1272 | 0.3051 | 1.22 X |
| 25 | 3. | 2.2000 | 1.6012 | 0.0585 | 0.5988 | 2.18R |
| 26 | 1.9 | 2.2700 | 1.7171 | 0.0708 | 0.5529 | 2.03R |

[Scatterplot of NOX versus CO]
[Residuals Versus CO (response is NOX)]
[Histogram of the Residuals (response is NOX)]
[Normal Probability Plot of the Residuals (response is NOX)]

i) What improvements, if any, result (ignoring any residual plots for now)? Explain.

B) As an alternative to deleting some observations, maybe we should try a transformation. Let’s try a (natural) log transformation of NOX.
[Scatterplots of NOX versus CO and of logNOX versus CO]

The regression equation is
logNOX = 0.656 - 0.0552 CO

| Predictor | Coef | StDev | T | P |
|---|---|---|---|---|
| Constant | 0.65565 | 0.06916 | 9.48 | 0.000 |
| CO | -0.055232 | 0.007272 | -7.59 | 0.000 |

S = 0.2566   R-Sq = 56.7%   R-Sq(adj) = 55.7%

Analysis of Variance

| Source | DF | SS | MS | F | P |
|---|---|---|---|---|---|
| Regression | 1 | 3.7989 | 3.7989 | 57.68 | 0.000 |
| Residual Error | 44 | 2.8980 | 0.0659 | | |
| Total | 45 | 6.6970 | | | |

Unusual Observations

| Obs | CO | logNOX | Fit | StDev Fit | Residual | St Resid |
|---|---|---|---|---|---|---|
| 16 | 15.0 | -0.6733 | -0.1712 | 0.0635 | -0.5022 | -2.02R |
| 17 | 15.1 | -0.7133 | -0.1800 | 0.0644 | -0.5333 | -2.15R |
| 22 | 23.5 | -0.1508 | -0.6440 | 0.1194 | 0.4931 | 2.17RX |
| 24 | 22.9 | -0.5621 | -0.6103 | 0.1152 | 0.0481 | 0.21 X |
| 32 | 4.3 | 1.0784 | 0.4187 | 0.0463 | 0.6597 | 2.61R |

[Histogram of the Residuals (response is logNOX)]
[Residuals Versus CO (response is logNOX)]

i) Comparing the scatterplots using NOX and logNOX with no data deleted, what do you conclude?
ii) Compare the regression using logNOX with the regression of NOX on CO minus the three unusual observations. Which approach works best? (Discuss the residual plots and any other relevant info.)

Question

Refer to Exercise 2.73, page 175, IPS. Some useful MINITAB outputs are given below.

| | Coef | Stdev | t value | p |
|---|---|---|---|---|
| Constant | -2.5801 | 2.7277 | -0.9459 | 0.3536 |
| Rural | 1.0935 | 0.0506 | 21.6120 | 0.0000 |

| | Df | SS | MS | F | p |
|---|---|---|---|---|---|
| Regression | 1 | 9371.099 | 9371.099 | 467.0797 | 0 |
| Error | 24 | 481.516 | 20.063 | | |

State whether the following statements are true or false.
I. 95.1% of the variation in city particulate level has been explained by the model.
II. An increase of 10 g in the rural particulate level is accompanied by an expected increase of 15 g in the city particulate level.
III. The estimated city particulate level when the rural particulate level is 50 g is approximately 52 g.
IV. The correlation between city and rural particulate levels is 0.951.
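The numeric statements above can be checked against the output with a little arithmetic. The following is a sketch, assuming the fitted line is city = -2.5801 + 1.0935 · rural and that the total sum of squares is the sum of the Regression and Error sums of squares in the table; the symbols SSR and SSE are labels introduced here, not part of the MINITAB output.

```latex
\begin{align*}
r^2 &= \frac{SSR}{SSR + SSE} = \frac{9371.099}{9371.099 + 481.516} \approx 0.951, \\
r &= \sqrt{r^2} \approx 0.975 \quad \text{(positive, because the slope is positive)}, \\
\Delta \hat{y} &= 10 \times 1.0935 \approx 10.9 \quad \text{(for an increase of 10 g in the rural level)}, \\
\hat{y}(50) &= -2.5801 + 1.0935 \times 50 \approx 52.1 .
\end{align*}
```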
Question

Examine the following regression Minitab output from a study of the relation between freshman year grade point average, denoted "GPA", and verbal Scholastic Aptitude Test score, denoted "VERBAL":

The regression equation is
GPA = 0.539 + 0.00362 VERBAL

| Predictor | Coef | Stdev | t-ratio | p |
|---|---|---|---|---|
| Constant | 0.5386 | 0.3982 | 1.35 | 0.179 |
| VERBAL | 0.0036214 | 0.0006600 | 5.49 | 0.000 |

s = 0.4993   R-sq = 23.5%   R-sq(adj) = 22.7%

Analysis of Variance

| SOURCE | DF | SS | MS | F | p |
|---|---|---|---|---|---|
| Regression | 1 | 7.5051 | 7.5051 | 30.10 | 0.000 |
| Error | 98 | 24.4313 | 0.2493 | | |
| Total | 99 | 31.9364 | | | |

[Character plot of the residuals, resids (-1.5 to 1.5), versus VERBAL (320 to 720)]

State whether the following statements are true or false.
I) 23.5% of the variation in GPAs has been explained by the verbal scores.
II) An increase of 100 in the verbal SAT score is accompanied by an expected increase of 0.362 in GPA.
III) The estimated GPA for someone attaining a verbal SAT score of 600 is 2.71.
IV) Going by the residual plot, we might be better served by using a non-linear model to relate SAT and GPA.
V) Since the residual plot shows no pattern, this model will give us very accurate predictions.
VI) If the data are normally distributed, the plot above should show a linear pattern.
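The numeric parts of statements I to III can be checked directly from the regression output. The following is a small sketch in Python, assuming the coefficients and sums of squares are copied from the output above; the names `predict_gpa`, `r_squared` and `change_per_100` are hypothetical helpers introduced for illustration. It does not address statements IV to VI, which depend on reading the residual plot.

```python
# Values copied from the GPA-on-VERBAL regression output above.
intercept = 0.5386
slope = 0.0036214
ss_regression = 7.5051
ss_total = 31.9364


def predict_gpa(verbal):
    """Predicted GPA from the fitted line GPA = intercept + slope * VERBAL."""
    return intercept + slope * verbal


# Fraction of the variation in GPA explained by the regression.
r_squared = ss_regression / ss_total

# Expected change in GPA for a 100-point increase in VERBAL (k * b with k = 100).
change_per_100 = 100 * slope

# Predicted GPA at VERBAL = 600, which lies inside the plotted range 320 to 720.
gpa_at_600 = predict_gpa(600)

print(f"R-sq = {r_squared:.3f}")
print(f"change in GPA per 100 points of VERBAL = {change_per_100:.3f}")
print(f"predicted GPA at VERBAL = 600: {gpa_at_600:.2f}")
```

The same three lines of arithmetic apply to any of the simple regression outputs in these notes once the intercept, slope and sums of squares are substituted.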
