Nature gives us correlations

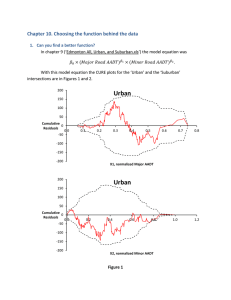

Evaluation Research (8521)
Prof. Jesse Lecy
Lecture 0

The Program Evaluation Mindset

[Diagram: Input → Policy / Program (black box: something happens here) → Outcome]

The outcome is some function of the program and the amount of inputs into the process. It can sometimes be represented by a simple input-output equation:

$\text{Outcome} = f(\text{program}, \text{input level})$

$\text{Outcome} = b_0 + b_1 \cdot \text{Input} + \varepsilon$

The slope $b_1$ tells us how much impact we expect a program to have when we spend one additional unit of input.

Dosage and Response

[Figure: scatterplot of Heart Rate (per min) against Caffeine (mm), annotated "Effect"]

$\text{Heartrate} = b_0 + b_1 \cdot \text{Caffeine} + \varepsilon$

[Figure: Heart Rate for the Treatment (Caffeine) and Control (No caffeine) groups]

[Figure: "Dosage and Response" scatterplot of Heart Rate (per min) against # of Potato Chips]

http://www.radiolab.org/2010/oct/08/its-alive/ 4:15-

How do we know when the interpretation is causal?

[Figure, two panels: "Dosage and Response", Heart Rate (per min) against Caffeine (mm), annotated "Effect?"; "City Density and Productivity", Number of Patents Per 10000 Residents against Walking Speed, annotated "Effect?"]

NATURE GIVES US CORRELATIONS

[Figures: Example #1, Example #2, and Example #3, each a set of pairwise scatterplots of the variables x, y, and z, followed by further scatterplots of x and y]

Examples of Poor Causal Inference

1. Ice cream consumption causes polio
2. Investments in public buildings create economic growth
3. Early retirement and health decline
4. Hormone replacement therapy and heart disease: In a widely studied example, numerous epidemiological studies showed that women who were taking combined hormone replacement therapy (HRT) also had a lower-than-average incidence of coronary heart disease (CHD), leading doctors to propose that HRT was protective against CHD. But randomized controlled trials showed that HRT caused a small but statistically significant increase in risk of CHD. Re-analysis of the data from the epidemiological studies showed that women undertaking HRT were more likely to be from higher socio-economic groups (ABC1), with better than average diet and exercise regimes. The use of HRT and decreased incidence of coronary heart disease were coincident effects of a common cause (i.e. the benefits associated with a higher socioeconomic status), rather than cause and effect as had been supposed. (Wikipedia)

Examples of Complex Causal Inference

MODERN PROGRAM EVAL

To Experiment or Not Experiment
http://www.youtube.com/watch?v=exBEFCiWyW0

CASE STUDY – EDUCATION REFORM

Classroom Size and Performance
http://www.publicschoolreview.com/articles/19

State Laws Limiting Class Size

Notwithstanding the ongoing debate over the pros and cons of reducing class sizes, a number of states have embraced the policy of class size reduction. States have approached class size reduction in a variety of ways. Some have started with pilot programs rather than state-wide mandates. Some states have specified optimum class sizes while other states have enacted mandatory maximums. Some states have limited class size reduction initiatives to certain grades or certain subjects. Here are three examples of the diversity of state law provisions respecting class size reduction.
California – The state of California became a leader in promoting class size reduction in 1996, when it commenced a large-scale class size reduction program with the goal of reducing class size in all kindergarten through third grade classes from 30 to 20 students or fewer. The cost of the program was $1 billion annually.

Florida – Florida residents in 2002 voted to amend the Florida Constitution to set the maximum number of students in a classroom. The maximum number varies according to the grade level. For prekindergarten through third grade, fourth grade through eighth grade, and ninth grade through 12th grade, the constitutional maximums are 18, 22, and 25 students, respectively. Schools that are not already in compliance with the maximum levels are required to make progress in reducing class size so that the maximum is not exceeded by 2010. The Florida legislature enacted corresponding legislation, with additional rules and guidelines for schools to achieve the goals by 2010.

Georgia – Maximum class sizes depend on the grade level and the class subject. For kindergarten, the maximum class size is 18 or, if there is a full-time paraprofessional in the classroom, 20. Funding is available to reduce kindergarten class sizes to 15 students. For grades one through three, the maximum is 21 students; funding is available to reduce the class size to 17 students. For grades four through eight, 28 is the maximum for English, math, science, and social studies. For fine arts and foreign languages in grades K through eight, however, the maximum is 33 students. Maximums of 32 and 35 students are set for grades nine through 12, depending on the subject matter of the course. Local school boards that do not comply with the requirements are subject to losing funding for the entire class or program that is out of compliance.

Class Size Case Study - The Theory:

[Diagram: Scenario 1 and Scenario 2, showing possible relationships among Class Size, SES, and Test Scores, with one link marked "?"]

[Figure: scatterplot of Test Score against Class Size]

Note changes in slopes and standard errors when you add variables.

Example: Classroom Size

[Figure: Test Score Residuals against Class Size, with ∆X and ∆Y marked on the fitted line]

$\text{Slope} = \frac{\Delta Y}{\Delta X}$

The regression coefficient represents a slope. In policy we think of the slope as an input-output formula: if I decrease class size (input), standardized test scores increase (output).

The Naïve Model:

$\text{TestScore} = b_0 + b_1 \cdot \text{ClassSize} + e$

[Figure: Test Score against Class Size]

With Teacher Skill as a Control:

$\text{TestScore} = b_0 + b_1 \cdot \text{ClassSize} + b_2 \cdot \text{TeachSkill} + e$

[Figure: Test Score Residuals against Class Size]

Add SES to the Model:

$\text{TestScore} = b_0 + b_1 \cdot \text{ClassSize} + b_2 \cdot \text{TeachSkill} + b_3 \cdot \text{SES} + e$

[Figure: Test Score Residuals against Class Size]

Example: Classroom Size

Why are slopes and standard errors changing when we add “control” variables?

[Figure: Test Score Residuals against Class Size]

How do we interpret results causally?

[Diagram: Teacher Skill, Class Size, X, SES, and Test Scores]

COURSE OUTLINE

The Origins of Modern Program Evaluation
• The “Great Society” introduced unprecedented levels of spending on social services, marking the dawn of the modern welfare state.
• Econometrics also came of age, creating tools that provide the opportunity for rigorous analysis of social programs.

We need effective programs, not expensive programs

Modern Program Evaluation
Course Objectives:

1. Understand why regressions are biased
   Seven Deadly Sins of Regression:
   1. Multicollinearity
   2. Omitted variable bias
   3. Measurement error
   4. Selection / attrition
   5. Misspecification
   6. Population heterogeneity
   7. Simultaneity
2. Understand tools of program evaluation
   – Fixed effect models
   – Instrumental variables
   – Matching
   – Regression discontinuity
   – Time series
   – Survival analysis
3. How to talk to economists (and other bullies)
4. Correctly apply and critique evaluation designs
   Experiments
   • Pretest-posttest control group
   • Posttest only control group
   Quasi-Experiments
   • Pretest-posttest comparison group
   • Posttest only comparison group
   • Interrupted time series with comparison group
   Reflexive Design
   • Pretest-posttest design
   • Simple time series

Course Structure

First half: Understanding bias
– No text, course notes online
– Weekly homework
Second half: Evaluation design
– Text is required
– Campbell Scores

policy-research.net/programevaluation

Evaluating Internal Validity: The Campbell Scores
A Competing Hypothesis Framework

Omitted Variable Bias
• Selection
• Nonrandom Attrition
Trends in the Data
• Maturation
• Secular Trends
Study Calibration
• Testing
• Regression to the Mean
• Seasonality
• Study Time-Frame
Contamination Factors
• Intervening Events
• Measurement Error

Homework Policy

• Homework problems each week during the 1st half of the semester
  – Graded pass/fail
  – Submit via D2L please
  – Work in groups is strongly encouraged
• Campbell Scores are due each class for the second half of the semester

No late homework accepted! 50% of final grade. See syllabus for policy on turning in by email.

• Midterm Exam (30%)
  – Confidence intervals, standard error of regression, bias
• Final Exam (20%)
  – Covers evaluation design and internal validity

[Figure: two histograms of Student Count against Grade, "Midterm Fall 2011" and "Midterm Spring 2012", each with a summary of grades; the two summaries are listed here in the order they appear on the slide]

1st Qu.  Median  Mean   3rd Qu.
82       86      87.21  97.5
75.5     89      83.47  96

[Figure: two bar charts, "Average Time Spent on Class by Students: Spring 2012" and "Average Time Spent on Class by Students: Fall 2011", showing the share of students (0% to 50%) in each Hours Per Week category: 0-2, 3-4, 4-8, 9-14, 15+]
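
The class-size case study asks why the slope on class size changes as teacher skill and SES are added to the model. A minimal sketch of that effect, assuming a made-up data-generating process: the sample size, every coefficient, the variable names, and the helper `ols` are hypothetical and are not taken from the slides. It fits the naïve model and the two controlled models on simulated data in which SES and teacher skill both lower class size and raise test scores.

```python
import numpy as np


def ols(y, *regressors):
    """Least-squares fit with an intercept; returns [b0, b1, b2, ...]."""
    X = np.column_stack([np.ones_like(y), *regressors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


rng = np.random.default_rng(0)
n = 5000

# Hypothetical data-generating process (all numbers are invented).
ses = rng.normal(size=n)
teach_skill = rng.normal(size=n)

# Assumption: wealthier schools and better teachers go with smaller classes.
class_size = 25 - 3 * ses - 3 * teach_skill + rng.normal(scale=2, size=n)

# Assumption: one extra student lowers the test score by exactly 1 point.
TRUE_EFFECT = -1.0
test_score = (
    500
    + TRUE_EFFECT * class_size
    + 20 * ses
    + 20 * teach_skill
    + rng.normal(scale=10, size=n)
)

# Slope on class size (b1) under the three models from the slides.
b_naive = ols(test_score, class_size)[1]
b_skill = ols(test_score, class_size, teach_skill)[1]
b_full = ols(test_score, class_size, teach_skill, ses)[1]

print(f"true effect of class size:      {TRUE_EFFECT:.2f}")
print(f"naive model b1:                 {b_naive:.2f}")
print(f"with teacher skill control b1:  {b_skill:.2f}")
print(f"with teacher skill and SES b1:  {b_full:.2f}")
```

Under these assumptions the naïve slope overstates the benefit of small classes, because it absorbs the effects of the omitted variables, and the estimate only approaches the true effect once both confounders are in the model. With real data the true effect is unknown, so this recovery cannot be checked directly.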