Malloc ETL Presentation - Malloc Corporation

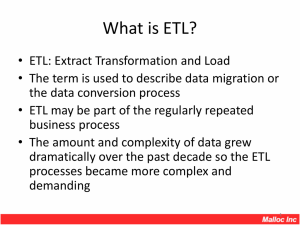

ETL (Extract, Transform and Load) is the term used to describe a data migration or data conversion process. ETL may also be part of a business process that is repeated regularly. The amount and complexity of data grew dramatically over the past decade, so ETL processes have become more complex and demanding.

ETL project phases:

1. Requirements
2. Analysis
3. Design
4. Proof of Concept
5. Development
6. Testing
7. Execution
8. Verification

Requirements

- Scope of the data migration: what data is required in the target system?
- Execution requirements: timeframe, sequence, geographic location, repeatability, acceptable system downtime, etc.
- Source data retention period, backup and restore requirements

Requirements should be written with this in mind: data is a company's most valuable asset, and the consequences of corrupted data are usually very costly.

Analysis

- Understanding the source data
- A Data Dictionary usable for designing the ETL process has to be created
- Mission-critical task
- Frequently underestimated (in both importance and time)
- All available resources should be used to do the analysis properly:
  - available system documentation, including the Data Model and Data Dictionary
  - people
  - reverse engineering

Design

- Choice of methodology
- Choice of technology
- Design of the target database
- Design of the ETL process
- Data Mapping Document, which:
  - maps source data to the target database
  - specifies transformation rules
  - specifies generated data (not taken from the source)
- Design of the ETL verification process
- Ensuring that all requirements are addressed

Proof of Concept

- Helps to determine or estimate:
  - feasibility of the concept
  - development time
  - performance, capacity and execution time
  - whether the requirements can be met
- Builds knowledge of the technology
- Code produced in this phase can usually be reused during the development phase

Development

Includes:

- Producing code and processes as per the Design and the Data Mapping Document
- Data verification scripts or programs as per the Test Plan
- Execution scripts as per the Execution Plan
- Unit testing, performed and documented by developers
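Both the development phase (data verification scripts per the Test Plan) and the verification phase call for automated checks. Below is a minimal sketch of one common check. It assumes the source and target rows have already been fetched and rendered into the same canonical text form, for example pipe-delimited with the source rows already passed through the mapping rules. The check compares row counts and an order-independent checksum. The class, record and method names are hypothetical. This is a generic reconciliation technique, not Malloc's tooling.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.List;

public class MigrationVerifier {

    /** Outcome of comparing one source table with its migrated target table. */
    public record Result(long sourceRows, long targetRows, boolean checksumsMatch) {
        public boolean passed() {
            return sourceRows == targetRows && checksumsMatch;
        }
    }

    private static MessageDigest sha256() {
        try {
            return MessageDigest.getInstance("SHA-256");
        } catch (NoSuchAlgorithmException e) {
            // Every Java platform is required to support SHA-256.
            throw new IllegalStateException(e);
        }
    }

    /**
     * Order-independent checksum: each canonical row string is hashed with
     * SHA-256, and the first 8 bytes of every digest are summed. Addition is
     * commutative, so the row order returned by each database does not matter.
     */
    static long tableChecksum(List<String> rows) {
        MessageDigest sha = sha256();
        long sum = 0;
        for (String row : rows) {
            byte[] d = sha.digest(row.getBytes(StandardCharsets.UTF_8)); // digest() also resets
            long h = 0;
            for (int i = 0; i < 8; i++) {
                h = (h << 8) | (d[i] & 0xFF);
            }
            sum += h; // overflow simply wraps around, which is fine for a checksum
        }
        return sum;
    }

    /** Compares row counts and checksums of the (hypothetical) canonical row lists. */
    public static Result verify(List<String> source, List<String> target) {
        return new Result(source.size(), target.size(),
                tableChecksum(source) == tableChecksum(target));
    }

    public static void main(String[] args) {
        List<String> source = List.of("1|SMITH|2004-01-15", "2|JONES|2005-07-30");
        List<String> target = List.of("2|JONES|2005-07-30", "1|SMITH|2004-01-15");
        Result r = verify(source, target);
        System.out.println("rows " + r.sourceRows() + "/" + r.targetRows()
                + ", checksum match: " + r.checksumsMatch()
                + ", passed: " + r.passed());
    }
}
```

Summing per-row hashes keeps the check independent of row order. It detects changed, missing or extra rows with high probability, but it is not a proof of equality. Tables that fail the check would still need a row-by-row comparison to locate the differences.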
Typical challenges:

- Inadequate requirements and design documents
- Developers unfamiliar with the technology

Testing

- Ensures that requirements are met
- A Test Plan is highly recommended
- Types of testing: functional, stress, load, integration, connectivity, regression
- Challenges:
  - automation and repeatability (testing and verification scripts)
  - creation of test data
  - extracting small data sets from large data volumes
  - confidential data may not be made available for testing

Execution

The execution plan should include:

- Sequence of tasks
- Time of execution and expected duration of execution
- Checkpoints and success criteria
- Back-out plan and business continuity
- Resources involved

For mission-critical systems, downtime may be limited or even entirely unacceptable. Execution should be controlled and verified.

Verification

- Confirms that the data migration was successful
- Determined during the design phase
- Various methodologies and technologies can be used
- Automated verification is highly recommended (driven by requirements)

Common pitfalls

- Underestimated complexity of the project
- Overlooked or neglected phases of the project
- Wrong choice of technology
- Common misconceptions, such as:
  - "Expensive ETL tools will solve all problems"
  - "No or very little programming will be required"
  - "We don't need plans, or don't have time for them, but we know exactly what we need to do"

Drawbacks of mainstream ETL tools

- Maintaining licenses and consultants is very expensive
- Significant time is required to learn them
- They usually require dedicated hardware
- They cannot take advantage of database vendors' proprietary technologies optimized for the fastest data migration
- Complex tasks very often require integration with other technologies
- Very limited performance
- Only a small part of the provided functionality is actually required for an ETL project
- Very limited application for data analysis
- Huge discrepancy between marketing promises and actual performance

Malloc ETL methodology and technology

Over 15 years in the IT consulting business, Malloc developed a proprietary ETL methodology and technology. It consists of two major modules:

- Database Analyzer
- G-DAO Framework

Major advantages:

- Inexpensive, easier to learn and better performing than mainstream ETL software
- Any Java developer can master it and start using it within several days
- It is proven and it works

Database Analyzer

Produces ETL data analysis reports in various formats.

Major usage:

- Analyze and understand the source data and the database attributes
- Create data mapping and transformation documents
- Create a data dictionary
- Suggest ways to improve database design

The reports are a valuable source of information for business analysts, data architects, developers and database administrators. They are:

- intuitive, descriptive and easy to read
- in HTML format
- importable into and editable in major document editors such as MS Word

Database Analyzer has a user-friendly GUI and can also run in batch mode for lengthy analysis of large data sources.

G-DAO Framework

Java code generator:

- Eliminates much of the legwork of developing the code required for the ETL process
- Uses the analysis performed by Database Analyzer to produce code optimized for a particular database
- The generated code may be used for purposes other than ETL (any kind of database access and data manipulation)
- Takes advantage of the almost unlimited world of Java libraries (no proprietary languages or interfaces)
- Data can flow directly from source to target (no need for intermediate storage in files)
- XA transactions are supported for all major databases
- Functionality is limited only by the JDBC driver and the Java language
- Easy to learn and implement
- No dedicated hardware required
- Provides a platform for any kind of business application that requires data access

Malloc Corporation was incorporated in 1993 in Toronto, Canada. It has provided IT consulting to Oracle Corporation, General Electric, Citibank, Royal Bank, Bank of Montreal, The Prudential, Standard Life and many more. Most recent implementations of Database Analyzer and G-DAO Framework: Citibank and Royal Bank of Canada.

http://www.mallocinc.com
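The G-DAO Framework description above notes that data can flow directly from source to target, with no intermediate files, driven by a data mapping document. The sketch below illustrates that idea generically in plain Java. It is not the G-DAO API. In-memory lists stand in for a JDBC `ResultSet` and a batched insert. The column names, the legacy `dd/MM/yyyy` date format and the batch ID are all hypothetical. Each `ColumnMapping` plays the role of one line of a data mapping document: a direct copy, a transformation rule, or generated data that is not taken from the source.

```java
import java.time.LocalDate;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;
import java.util.function.Function;

public class MappingPipeline {

    /** One line of a hypothetical data mapping document: target column plus rule. */
    public record ColumnMapping(String targetColumn, Function<Map<String, String>, Object> rule) {}

    /** Applies every mapping rule to one source row, producing one target row. */
    static Map<String, Object> transform(Map<String, String> src, List<ColumnMapping> mappings) {
        Map<String, Object> out = new LinkedHashMap<>(); // keeps target column order
        for (ColumnMapping m : mappings) {
            out.put(m.targetColumn(), m.rule().apply(src));
        }
        return out;
    }

    /** Streams rows one at a time from source to target; nothing is staged in files. */
    static long copy(Iterator<Map<String, String>> source, List<ColumnMapping> mappings,
                     Consumer<Map<String, Object>> target) {
        long count = 0;
        while (source.hasNext()) {
            target.accept(transform(source.next(), mappings));
            count++;
        }
        return count;
    }

    static final DateTimeFormatter LEGACY_DATE = DateTimeFormatter.ofPattern("dd/MM/yyyy");

    /** Hypothetical mapping for a customer table. */
    static List<ColumnMapping> customerMappings(String batchId) {
        return List.of(
            // direct copy with a type conversion
            new ColumnMapping("CUSTOMER_ID", r -> Long.parseLong(r.get("CUST_NO").trim())),
            // transformation rule: normalise the name
            new ColumnMapping("FULL_NAME", r -> r.get("NAME").trim().toUpperCase()),
            // transformation rule: legacy text date to a real date
            new ColumnMapping("OPENED_ON", r -> LocalDate.parse(r.get("OPEN_DT"), LEGACY_DATE)),
            // generated data, not taken from the source
            new ColumnMapping("MIGRATION_BATCH", r -> batchId));
    }

    public static void main(String[] args) {
        List<Map<String, String>> sourceRows = List.of(
            Map.of("CUST_NO", " 42", "NAME", "smith ", "OPEN_DT", "15/01/2004"));
        List<Map<String, Object>> targetRows = new ArrayList<>();
        long n = copy(sourceRows.iterator(), customerMappings("BATCH-001"), targetRows::add);
        System.out.println(n + " row(s): " + targetRows);
    }
}
```

Keeping the rules as data (a list of mappings) rather than hard-coding them in the copy loop mirrors the idea that the mapping document drives the code. The same `copy` loop can then be reused for every table.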