D2.1 Measuring UX Final

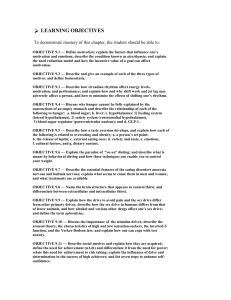
