Comparing Bug Finding Tools with Reviews and Tests

advertisement

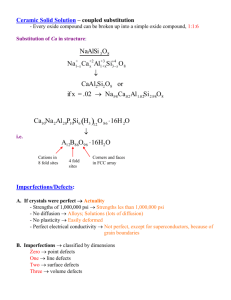

Stefan Wagner, Jan Jürjens, Claudia Koller, and Peter Trischberger
By Shankar Kumar

1. Motivation
2. Overview
3. Bug Finding Tools
4. Projects
5. Approach
6. Analysis
7. Conclusions

Motivation

Defect-detection techniques (reviews, tests, ...) are still the most important methods for improving software quality.
The costs of these techniques are significant. According to Myers (1979), testing causes 50% of the total development costs; Jones (1987) assigns 30 to 40% to quality assurance and defect removal.
Optimization in this area can save money!
One way is automation; another is the optimal combination of techniques.
Bug finding tools offer the possibility of automatic defect finding.
How do such tools compare to reviews and tests?

Overview

Comparison of bug finding tools for Java with reviews and tests
Based on several industrial projects at O2: different backend applications with a web interface
Analysis of defect categories (severity), defect types, and defect removal efficiency

Failures are perceived deviations of the output values from the expected values; faults are the causes of failures in code or other documents. Both are also referred to as defects. We mainly use the term defect in our analyses when no failures are involved, as with defects that relate only to maintenance.

We address the question of how automated static analysis using bug finding tools relates to other types of defect-detection techniques, and whether such tools make it possible to reduce the effort for defect detection. In detail, this amounts to three questions.
1. Which types and classes of defects are found by the different techniques?
2. Is there any overlap among the defects found?
3. How large is the ratio of false positives from the tools?

The main findings are summarized in the following.
1. Bug finding tools detect a subset of the defect types that can be found by a review.
2. The types of defects that the tools can find are analyzed more thoroughly; that is, the tools are better with respect to the bug patterns they are programmed for.
3. Dynamic tests find completely different defects than bug finding tools.
4. Bug finding tools have a significant ratio of false positives.
5. The bug finding tools show very different results in different projects.

The results have four major implications.
1. Bug finding tools cannot substitute for dynamic tests or reviews, because those techniques find significantly more, and different, defect types.
2. Bug finding tools can be a good pre-stage to a review, because some of the defects then do not have to be detected manually. One possibility would be to mark problematic code so that it cannot be overlooked in the review.
3. Bug finding tools can only provide a significant reduction in the effort necessary for defect detection if their false positive ratios can be reduced. (From our case studies, we find the current ratios not yet completely acceptable.)
4. The tools have to be more tolerant of programming style and design to provide more uniform results across projects.

Bug Finding Tools

A class of programs that aim to find defects in code
By static analysis, similar to a compiler
The outcome is warnings that a piece of code is critical in some way
Identification using typical bug patterns from experience and published common pitfalls of a programming language
Coding guidelines and standards can be checked
Also more sophisticated analysis based on data and control flow

Three representatives of bug finding tools for Java:
FindBugs: uses mainly bug patterns but also data flow analysis; operates on the byte code; from the University of Maryland
PMD: operates on source code; can enforce coding standards; customizable by using XPath expressions on the parse tree
QJ Pro: operates on source code; uses over 200 rules; additional rules can be defined; also checks based on code metrics

Projects

Four done at O2 and one at TU Munich
Project A: online shop, in use for six months, 1066 classes, 58 KLOC
Project B: Internet payment via SMS, 215 classes, 24 KLOC
Project C: web frontend for a management system, 3 KLOC
Project D: client data management, 572 classes, 34 KLOC
EstA: textual requirements editor, 28 classes, 4 KLOC

Approach

Analyze interrelations between bug finding tools, reviews, and tests
Tools and tests were applied to all projects; a review only in project C
Each warning from the tools was checked to determine whether it is really a defect (true positives vs. false positives). True positives are warnings that are confirmed as actual defects in the code; false positives are wrong identifications of problems.
Techniques were applied independently

For the comparison, we use a five-level categorization of the defects by severity. Hence, the categorization is based on the effects of the defects rather than on their cause or on how they occur in the code.
We use a standard categorization for severity that is slightly adapted to the defects found in the projects. Defects in category 1 are the most severe; those in category 5 have the lowest severity.
1. Defects that lead to a crash of the application. These are the most severe defects; they stop the whole application from reacting to any user input.
2. Defects that cause a logical failure. This category consists of all defects that cause a logical failure of the application without crashing it, for example a wrong result value.
3. Defects with insufficient error handling. Defects in this category are only minor: they neither crash the application nor result in logical failures, but errors are not handled properly.
4. Defects that violate the principles of structured programming. These defects normally do not impact the software but could result in performance bottlenecks, etc.
5. Defects that reduce the maintainability of the code. This category contains all defects that only affect the readability or changeability of the software.
This classification helps us compare the various defect-detection techniques by the severity of the defects they find and analyze the types of defects that they find.

Defect types are orthogonal to the defect categorization
Similar to standard defect taxonomies (e.g., IEEE Std 1044-1993)
Partly a unification of the warning types of the tools
Examples: "Stream is not closed", "Input is not checked for special characters"

Analysis

Bug finding tools
Bug finding tools vs. reviews
Bug finding tools vs. testing
Defect removal efficiency

Bug finding tools
The number before the slash denotes the number of true positives, the number after the slash the number of all positives. Most of the true positives can be assigned to the category Maintainability of the code. It is noticeable that the different tools predominantly find different positives.
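Because each result is written as true positives / all positives, the false-positive ratio of a result is (all - true) / all. The following Java sketch computes it from that notation. The class name FalsePositiveRatio and the sample cells in main() are hypothetical and are not values from the study's table.

```java
import java.util.Locale;

// Hypothetical helper for the "true/all" notation; the sample cells in main()
// are made-up values, not numbers from the study.
final class FalsePositiveRatio {

    private FalsePositiveRatio() {}

    /** Share of warnings that are false positives: (all - truePositives) / all. */
    static double falsePositiveRatio(int truePositives, int allPositives) {
        if (allPositives <= 0) {
            throw new IllegalArgumentException("allPositives must be positive");
        }
        if (truePositives < 0 || truePositives > allPositives) {
            throw new IllegalArgumentException("need 0 <= truePositives <= allPositives");
        }
        return (double) (allPositives - truePositives) / allPositives;
    }

    /** Parses a result written as "true/all", for example "3/12". */
    static double falsePositiveRatio(String cell) {
        String[] parts = cell.trim().split("/");
        if (parts.length != 2) {
            throw new IllegalArgumentException("expected \"true/all\" but got: " + cell);
        }
        int truePositives = Integer.parseInt(parts[0].trim());
        int allPositives = Integer.parseInt(parts[1].trim());
        return falsePositiveRatio(truePositives, allPositives);
    }

    public static void main(String[] args) {
        String[] cells = {"3/12", "5/5", "0/4"};
        for (String cell : cells) {
            // Locale.ROOT keeps the decimal point independent of the JVM's default locale.
            System.out.printf(Locale.ROOT, "%s -> %.2f false positives%n",
                    cell, falsePositiveRatio(cell));
        }
    }
}
```

For a result such as 3/12, this gives (12 - 3) / 12 = 0.75, meaning three quarters of the warnings would be false positives. Computing the ratio per tool and per project is one way to make the variation across projects visible.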
Only a single defect type was found by all tools, and four types by two tools each.
Across the different systems, FindBugs finds positives from all categories. PMD finds positives only from the categories Failure of the application, Insufficient error handling, and Maintainability of the code. QJ Pro reveals only Failure of the application, Insufficient error handling, and Violation of structured programming.
The number of defect types varies from tool to tool: FindBugs detects defects of 13 different types, PMD of 10 types, and QJ Pro only of 4 types.
Ratio of false positives: large variation over different projects
Recommendation: use FindBugs and PMD in combination; use PMD or QJ Pro to enforce coding standards

Bug finding tools vs. reviews
Review only on project C
Revealed 19 different types of defects
All defects found by the tools were also found by the review
However, the tools sometimes found more defects of a type
But also the other way round
The review can also reveal faults in the logic
Using a tool before reviewing can improve thoroughness

Defects found by reviews
The review is more successful than the bug finding tools, because it is able to detect far more defect types. However, it seems to be beneficial to use a bug finding tool before inspecting the code, so that the defects found by both are already removed. This is because automated detection of these defects is cheaper and more thorough than a manual review. On the downside, we also notice a high number of false positives from all tools. This results in significant non-productive work for the developers that could in some cases exceed the improvement achieved by the automation.
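Question 2 of the study asks about the overlap among the defects found. The relations reported here can be expressed with plain set operations: every defect type the tools found in project C was also found by the review, and the testing comparison reports no defect in common between tools and tests. The following minimal Java sketch checks such relations. The class DefectOverlap and all defect-type labels are hypothetical, invented only to mirror those reported relations.

```java
import java.util.Set;
import java.util.TreeSet;

// Hypothetical sketch: the defect-type labels in main() are invented to mirror
// the reported relations, not the study's actual defect types.
final class DefectOverlap {

    private DefectOverlap() {}

    /** Defect types found by both techniques (set intersection). */
    static Set<String> overlap(Set<String> a, Set<String> b) {
        Set<String> result = new TreeSet<>(a);
        result.retainAll(b);
        return result;
    }

    /** Defect types found only by the first technique (set difference). */
    static Set<String> onlyIn(Set<String> a, Set<String> b) {
        Set<String> result = new TreeSet<>(a);
        result.removeAll(b);
        return result;
    }

    public static void main(String[] args) {
        Set<String> tools = Set.of("stream not closed", "unused local variable");
        Set<String> review = Set.of("stream not closed", "unused local variable",
                "wrong calculation");
        Set<String> tests = Set.of("wrong calculation", "crash on empty input");

        // Tools vs. review: the tool findings form a subset of the review findings.
        System.out.println("tools subset of review: " + review.containsAll(tools));
        System.out.println("found only by review: " + onlyIn(review, tools));
        // Tools vs. tests: no common defect type.
        System.out.println("tools and tests in common: " + overlap(tools, tests));
    }
}
```

TreeSet is used only so that the printed sets come out in a stable, sorted order. Any Set implementation would give the same membership results.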
Bug finding tools vs. testing
Black box and glass box tests, no stress tests
Only 8 defect types were found
Completely distinct from the ones found by the tools
Only in categories 1 to 3
Stress tests might change this picture

Defects found by tests
For the software systems in which defects were revealed, no defect was found by both testing and the bug finding tools. Dynamic testing is good at finding logical defects that are best visible when executing the software, whereas bug finding tools have their strength in finding defects related to maintainability. We therefore again recommend using both techniques in a project.

Bug finding tools (BFTs) mainly find maintainability-related defects, which complies with expectations
Only reviews and tests are able to verify and validate the logic of the software
The limitation of the tools lies in what is expressible by bug patterns and how good and generic these patterns are
It is still surprising that there is not a single overlapping defect
Positive: the combination is beneficial
Negative: the amount of (costly) testing cannot be reduced
However, external validity is limited by the sample size and the maturity of the analyzed software
The high ratio of false positives is disillusioning
The expected benefit of automation is diminished by the necessary human intervention
However, the tools are sometimes more thorough

Conclusions

Not a comprehensive empirical study, but a first indication from a series of projects
Only knowledge about the relation of bug finding tools to reviews and tests
BFTs revealed completely different defects than dynamic tests
But they reveal a subset of the defects found by reviews
The tools are typically more thorough
Their effectiveness depends on personal programming style and software design
The high number of false positives makes the benefits questionable

References
1. T. Ball and S.K. Rajamani. The SLAM Project: Debugging System Software via Static Analysis. In Proc.
29th Annual ACM SIGPLAN-SIGACT Symposium on Principles of Programming Languages, 2002.
2. B. Beizer. Software Testing Techniques. Thomson Learning, 2nd edition, 1990.
3. W.R. Bush, J.D. Pincus, and D.J. Sielaff. A static analyzer for finding dynamic programming errors. Softw. Pract. Exper., 30:775–802, 2000.
4. R. Chillarege. Orthogonal Defect Classification. In Michael R. Lyu, editor, Handbook of Software Reliability Engineering, chapter 9. IEEE Computer Society Press and McGraw-Hill, 1996.
5. C. Csallner and Y. Smaragdakis. CnC: Combining Static Checking and Testing. In Proc. 27th International Conference on Software Engineering (ICSE'05), 2005. To appear.
6. D. Engler and M. Musuvathi. Static Analysis versus Model Checking for Bug Finding. In Proc. Verification, Model Checking and Abstract Interpretation (VMCAI'04), volume 2937 of LNCS, pages 191–210. Springer, 2004.

QUESTIONS?