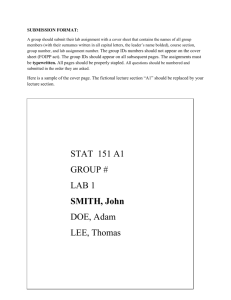

Document

advertisement

Testing IDS Overview • • • • • Introduction Measurable IDS characteristics Challenges of IDS testing Measuring IDS performances Test data sets 2/108 Introduction • Despite enormous investment in IDS technology, no comprehensive and scientifically rigorous methodology is available to test IDS. • Quantitative IDS performance measurement results are essential in order to compare different systems. 3/108 Introduction • Quantitative results are needed by: – Acquisition managers – to improve the process of system selection. – Security analysts – to know the likelihood that the alerts produced by IDS are caused by real attacks that are in progress. – Researchers and developers – to understand the strengths and weaknesses of IDS in order to focus research efforts on improving systems and measuring their progress. 4/108 Measurable IDS characteristics • • • • • • • Coverage Probability of false alarms Probability of detection Resistance to attacks directed at the IDS Ability to handle high bandwidth traffic Ability to correlate events Ability to detect new attacks 5/108 Measurable IDS characteristics • Ability to identify an attack • Ability to determine attack success • Capacity verification (NIDS). 6/108 Coverage • Determines which attacks an IDS can detect under ideal conditions. • For misuse (signature based) systems – Counting the number of signatures and mapping them to a standard naming scheme. • For anomaly detection systems – Determining which attacks out of the set of all known attacks could be detected by a particular methodology. 7/108 Coverage • The problem with determining coverage of an IDS lies in the fact that various researchers characterize the attacks by different numbers of parameters. 8/108 Coverage • These characterizations may take into account the particular goal of the attack (DoS, penetration, scanning, etc.), the software, protocol and/or OS against which it is targeted, the victim type, the data to be collected in order to obtain the evidence of the attack, the use or not of IDS evasion techniques, etc. • Combinations of these parameters are also possible. 9/108 Coverage • The consequence of these differences are coarse granularity attack definitions and finer granularity attack definitions. • Because of the disparity in granularity, it is difficult to determine attack coverage of an IDS precisely. 10/108 Coverage • CVE is an attempt to alleviate this problem. • But the CVE approach does not work either, if multiple attacks are used to exploit the same vulnerability using different approach (for example to evade IDS systems). 11/108 Coverage • Determining the importance of different attack types is also a problem when determining coverage. • Different environments may assign different costs and importance to detecting different types of attacks. 12/108 Coverage • Example: – An e-commerce site may not be interested in surveillance attacks, but may be very interested in detecting DDoS attacks. – A military site may be especially interested in detecting surveillance attacks in order to prevent more serious attacks by acting in their early phases. 13/108 Coverage • Another problem with coverage is in determining which attacks to cover regarding system updates. – Example: • It is worthless to test IDS coverage of the attacks against the defended system in which the measures against these attacks have already been applied (patching, hardening, etc.) 14/108 Probability of false alarms • Suppose that we have N IDS decisions, of which: – – – – In TP cases: intrusion – alarm. In TN cases: no intrusion – no alarm. In FP cases: no intrusion – alarm. In FN cases: intrusion – no alarm. 15/108 Probability of false alarms • • • • Total intrusions: TP+FN Total no-intrusions: FP+TN N=TP+FN+FP+TN Base-rate – the probability of an attack: TP FN P I N 16/108 Probability of false alarms • Events: Alarm A, Intrusion I – The following rates are defined: • True positive rate TPR TP TPR PA I TP FN • True negative rate TNR TN TNR PA I FP TN 17/108 Probability of false alarms • False positive rate FPR FP FPR P A I FP TN • False negative rate FNR FN FNR PA I TP FN 18/108 Probability of false alarms • This measure determines the rate of false positives produced by an IDS in a given environment during a particular time frame. 19/108 Probability of false alarms • Typical causes of false positives: – Weak signatures (alert on all traffic to a specific port, search for the occurrence of a common word such as ”help” in the first 100 bytes of SNMP or other TCP connections, alert on common violations of the TCP protocol, etc.) – Normal network monitoring and maintenance traffic. 20/108 Probability of false alarms • Difficulties regarding measuring of false alarm rate (1) – An IDS may have a different false positive rate in different network environments, and “standard network” does not exist. – It is difficult to determine aspects of network traffic or host activity that will cause false alarms. 21/108 Probability of false alarms • Difficulties regarding measuring of false alarm rate (2) – Consequence: it is difficult to guarantee that it is possible to produce the same number and type of false alarms in a test network as in a real network. – IDS can be configured in many ways and it is difficult to determine which configuration of an IDS should be used for a particular false positive test. 22/108 Probability of detection • This measurement determines the rate of attacks detected correctly by an IDS in a given environment during a particular time frame. • Difficulties in measuring probability of detection (1) – The success of an IDS is largely dependent upon the set of the attacks used during the test. 23/108 Probability of detection • Difficulties in measuring probability of detection (2) – The probability of detection varies with the false positive rate – the same configuration of the IDS must be used for testing for false positives and hit rates. 24/108 Probability of detection • Difficulties in measuring probability of detection (3) – A NIDS can be evaded by using the stealthy versions of attacks (fragmenting packets, using data encoding, using unusual TCP flags, enciphering attack packets, spreading attacks over multiple network sessions, launching attacks from multiple sources, etc.) – This reduces the probability of detection, even though the same attack would be detected if no stealthy version would be applied. 25/108 Resistance to attacks against IDS • This measurement demonstrates how resistant an IDS is to an attacker’s attempt to disrupt the correct operation of the IDS. • Some typical attacks against IDS (1) – Sending a large amount of non-attack traffic with volume exceeding the IDS processing capability – this causes dropping packets by the IDS. 26/108 Resistance to attacks against IDS • Some typical attacks against IDS (2) – Sending to the IDS non-attack packets that are specially crafted to trigger many signatures within the IDS – the human operator is overwhelmed with false positives, or an automated analysis tools crashes. 27/108 Resistance to attacks against IDS • Some typical attacks against IDS (3) – Sending to the IDS a large number of attack packets intended to distract the human operator, while the attacker launches a real attack hidden among these “false attacks”. – Sending to the IDS packets containing data that exploit a vulnerability within the very IDS processing algorithms. Such vulnerabilities may be consequence of coding errors. 28/108 Ability to handle high bandwidth traffic • This measurement demonstrates how well an IDS will function when presented with a large volume of traffic. • Most NIDS start to drop packets as the traffic volume increases – false negatives. • At certain threshold, most IDS will stop detecting any attacks. 29/108 Ability to correlate events • This measurement demonstrates how well an IDS correlates attack events. • These events may be gathered from IDS, routers, firewalls, application logs, etc. • One of the primary goals of event correlation is to identify penetration attacks. • Currently, IDS have limited capabilities in this area. 30/108 Ability to detect new attacks • This measurement demonstrates how well an IDS can detect attacks that have not occurred before. • Signature-only based systems will have 0 score here. • Anomaly-based systems may be suitable for this type of measurement. However, they in general produce more false alarms than the signature-based systems. 31/108 Ability to identify an attack • This measurement demonstrates how well an IDS can identify the attack that it has detected. • Each attack should be labelled with a common name or vulnerability name, or by assigning the attack to a category. 32/108 Ability to determine attack success • This measurement demonstrates if the IDS can determine the success of attacks from remote sites that give the attacker higher-level privileges on the attacked system. • Many remote privilege-gaining attacks (probes) fail and do not damage the attacked system. • Many IDS do not distinguish between unsuccessful and successful attacks. 33/108 Ability to determine attack success • For the same attack, some IDS can detect the evidence of damage and some IDS detect only the signature of attack actions. • The ability to determine the attack success is essential for the analysis of attack correlation and the attack scenario. • Measuring this capability requires the information about both successful and unsuccessful attacks. 34/108 Capacity verification for NIDS • The NIDS demand higher-level protocol awareness than other network devices (switches, routers, etc.) • NIDS inspect more deeply the network packets than the other devices do. • Therefore, it is important to measure the ability of a NIDS to capture, process and perform at the same level of accuracy under a given network load as it does on a quiescent network. 35/108 Capacity verification for NIDS • There exists a standardized capacity benchmarking methodology for NIDS (e.g. CISCO has its own methodology). • The NIDS customers can use the standardized capacity test results for each metric and a profile of their networks to determine if the NIDS is capable of inspecting their traffic. 36/108 Challenges of IDS testing • The following problems (at least) make IDS testing a challenging task: – Collecting attack scripts and victim software is difficult. – Requirements for testing signature-based and anomaly-based IDS are different. – Requirements for testing host-based and network-based IDS are different. – Using background traffic in IDS testing is not standardized. 37/108 Challenges of IDS testing • Collecting attack scripts and victim software (1) – It is difficult and expensive to collect a large number of attack scripts. – The attack scripts are available in various repositories, but it takes time to find relevant scripts to a particular testing environment. 38/108 Challenges of IDS testing • Collecting attack scripts and victim software (2) – Once an adequate script is identified, it takes approx. one person-week to review the code, test the exploit, determine where the attack leaves evidence, automate the attack and integrate it into a testing environment. 39/108 Challenges of IDS testing • Different requirements for testing signaturebased and anomaly-based IDS (1) – Most commercial systems are signature-based. – Many research systems are anomaly based. 40/108 Challenges of IDS testing • Different requirements for testing signaturebased and anomaly-based IDS (2) – An ideal IDS testing methodology would be applicable to both signature-based and anomaly-based systems. – This is important because the research anomaly-based systems should be compared to the commercial signature-based systems. 41/108 Challenges of IDS testing • Different requirements for testing signaturebased and anomaly-based IDS (3) – The problems with creating a single test to cover both type of systems (1) • Anomaly based systems with learning require normal traffic for training that does not include attacks. • Anomaly based systems with learning may learn behaviour of the testing methodology and perform well without detecting real attacks at all. 42/108 Challenges of IDS testing • Different requirements for testing signaturebased and anomaly-based IDS (4) – The problems with creating a single test to cover both type of systems (2) • This may happen when all the attacks in a test are launched from a particular user, IP address, subnet, or MAC address. 43/108 Challenges of IDS testing • Different requirements for testing signaturebased and anomaly-based IDS (5) – The problems with creating a single test to cover both type of systems (3) • Anomaly-based systems with learning can also learn subtle characteristics difficult to predetermine (packet window size, ports, typing speed, command set used, TCP flags, connection duration, etc.) – artificially perform well in the test environment. 44/108 Challenges of IDS testing • Different requirements for testing signaturebased and anomaly-based IDS (6) – The problems with creating a single test to cover both type of systems (4) • The performance of a signature based system in a test will, to a large degree, depend on the set of attacks used in the test. • Then the decision about which attacks to include in a test may be in favour of a particular IDS – not objective. 45/108 Challenges of IDS testing • Different requirements for testing hostbased and network-based IDS (1) – Testing host-based IDS presents some difficulties not present when testing networkbased IDS (1) • Network-based IDS can be tested off-line by creating a log file containing TCP traffic and replaying that traffic to IDS – this is convenient, because there is no need to test all the IDS at the same time. • Repeatability of the test is easy to achieve. 46/108 Challenges of IDS testing • Different requirements for testing hostbased and network-based IDS (2) – Testing host-based IDS presents some difficulties not present when testing networkbased IDS (2) • Host-based IDS use a variety of system inputs in order to determine whether or not a system is under attack. • This set of inputs is not the same for all IDS. 47/108 Challenges of IDS testing • Different requirements for testing hostbased and network-based IDS (3) – Testing host-based IDS presents some difficulties not present when testing networkbased IDS (3) • Host-based IDS monitor a host, not a single data feed. • Then it is difficult to replay activity from log files. • Since it is difficult to test a host-based IDS off-line, an on-line test should be performed. • Consequence: problems of repeatability. 48/108 Challenges of IDS testing • Using Background traffic in IDS testing (1) – Four approaches: • • • • Testing using no background traffic/logs Testing using real traffic/logs Testing using sanitized traffic/logs Testing using simulated traffic/logs. – It is not clear which approach is the most effective for testing IDS. – Each of the four approaches has unique advantages and disadvantages. 49/108 Challenges of IDS testing • Using Background traffic in IDS testing (2) – Testing using no background traffic/logs (1) • This testing may be used as a reference condition. • An IDS is set up on a host/network on which there is no activity. • Then, computer attacks are launched on this host/network to determine whether or not the IDS can detect them. • This technique can determine the probability of detection (hit rate) under no load, but it cannot determine the false positive rate. 50/108 Challenges of IDS testing • Using Background traffic in IDS testing (3) – Testing using no background traffic/logs (2) • Useful for verifying that an IDS has signatures for a set of attacks and that the IDS can properly label each attack. • Often much less costly than other approaches. • Drawback: tests using this technique are based on the assumption that an IDS ability to detect an attack is the same regardless of the background activity. 51/108 Challenges of IDS testing • Using Background traffic in IDS testing (4) – Testing using no background traffic/logs (3) • At low levels of background activity, that assumption is probably true. • At high levels of background activity, the assumption is often false since the IDS performances degrade at high traffic intensities. 52/108 Challenges of IDS testing • Using Background traffic in IDS testing (5) – Testing using real traffic/logs (1) • The attacks are injected into a stream of real background activity. • Very effective for determining the hit rate of an IDS given a particular level of background activity. • Background activity is real – contains all the anomalies and subtleties – realistic hit rates. • Enables comparison of IDS hit rates at different levels of activity. 53/108 Challenges of IDS testing • Using Background traffic in IDS testing (6) – Testing using real traffic/logs (2) • Drawbacks (1) – Repeatable test using real traffic is problematic – it is difficult to store and replay large amounts of real traffic at rates higher than 100 Mb/s (currently). Possible solution: parallelization – packet sequencing problems. – The experiments of this kind usually use a small number of victim machines, set up only to be attacked during the test. Some anomaly detection IDS can then artificially elevate their performances during the test. 54/108 Challenges of IDS testing • Using Background traffic in IDS testing (7) – Testing using real traffic/logs (3) • Drawbacks (2) – The real background activity used may contain anomalies unique to the network, which favour one IDS over another. Example: a test network may heavily use a particular protocol that was processed more deeply by a particular IDS. – The major problem with testing using real background traffic/logs: it is very difficult to determine false positive rates correctly, because it is virtually impossible to guarantee the identification of all the attacks that naturally occur in the background activity. 55/108 Challenges of IDS testing • Using Background traffic in IDS testing (8) – Testing using real traffic/logs (4) • Drawbacks (3) – It is difficult to publicly distribute the test, since there are privacy concerns related to the use of real background activity. – Replay may damage the timings – timestamps should also be kept. 56/108 Challenges of IDS testing • Using Background traffic in IDS testing (9) – Testing using sanitized traffic/logs (1) • Sanitizing – removing sensitive information from real data. • The goal – to overcome the privacy problems of using, analyzing, and distributing real background activity. • Example: TCP packet headers may be cleansed, and packet payloads may be hashed. • Real background activity is pre-recorded and then all the sensitive data are removed. 57/108 Challenges of IDS testing • Using Background traffic in IDS testing (10) – Testing using sanitized traffic/logs (2) • Then, attack data are injected within the sanitized data stream: – By replaying the sanitized data and running attacks concurrently, or – By separately creating attack data and then inserting these into the sanitized data. • Advantages: – Test data are freely distributable – The test is repeatable. 58/108 Challenges of IDS testing • Using Background traffic in IDS testing (11) – Testing using sanitized traffic/logs (3) • Disadvantages (1) – Sanitization attempts may end up removing much of the content of the background activity – very unrealistic environment. – The major problem: Sanitization attempts may fail – accidental release of sensitive data. It is infeasible for a human to verify the sanitization of a large volume of data. – The injected attacks do not interact realistically with the sanitized background activity. Example: an injected buffer overflow attack may cause a web server to crash, but background activity still requests the web server. 59/108 Challenges of IDS testing • Using Background traffic in IDS testing (12) – Testing using sanitized traffic/logs (4) • Disadvantages (2) – When sanitizing real traffic, it may be difficult to remove the attacks that existed in the data stream – this causes problems with the false positive rate testing. – Sanitizing data may remove information needed to detect attacks. 60/108 Challenges of IDS testing • Using Background traffic in IDS testing (13) – Testing using simulated traffic/logs (1) • The most common approach to testing IDS. • A test bed network with hosts and network infrastructure is created. • Background traffic is generated on this network, as well as the attacks. • The test bed network includes victims of interest with background traffic generated by means of complex traffic generators that model the actual network traffic statistics. 61/108 Challenges of IDS testing • Using Background traffic in IDS testing (14) – Testing using simulated traffic/logs (2) • There is also a possibility to employ simpler traffic generators to create a small number of packet types at a high rate. • Network traffic and host audit logs can be recorded in such a test bed network for later playback. • There is also possibility to perform evaluations in real time. 62/108 Challenges of IDS testing • Using Background traffic in IDS testing (15) – Testing using simulated traffic/logs (3) • Advantages: – Data can be distributed freely – they do not contain any private or sensitive information. – There is a guarantee that the background activity does not contain any unknown attacks. – IDS testing using simulated traffic is easily repeatable. 63/108 Challenges of IDS testing • Using Background traffic in IDS testing (16) – Testing using simulated traffic/logs (4) • Disadvantages: – It is very costly and difficult to create a simulation. – It is difficult to simulate a high bandwidth environment – resource constraints. – Different traffic is needed for different types of networks – academic, e-commerce, military, etc. 64/108 Measuring IDS performances • In order to compare different IDS, measures of their performances are needed. • Of all the measurable characteristics mentioned before, the true positive rate and the false positive rate are the most important for comparing IDS. • The true positive rate and the false positive rate are included in various sublimation metrics for comparing IDS. 65/108 Measuring IDS performances • It is important to determine the probability of intrusion, if an alert has been generated. • This gives rise to a Bayesian probabilistic measure for characterising IDS performances. • We need the total probability of an alert in order to determine the probability of intrusion given the alert. 66/108 Measuring IDS performances TP FN A TN I, I A FP I I mutually exclusive A=(IA)(IA) P A PI P A I PI P A I Total probability of an alert 67/108 Measuring IDS performances The base rate: TP FN P I N PI 1 PI The true positive rate The false positive rate TP P A I TPR TP FN FP P A I FPR FP TN 68/108 Measuring IDS performances • A performance measure: Bayesian detection rate: P( I )P( A | I ) P( I | A ) P( I )P( A | I ) P( I )P( A | I ) • The greater the detection rate, the better the IDS, but… 69/108 Measuring IDS performances • Base rate fallacy – Even if the false alarm rate P(A|¬I) is very low, the Bayesian detection rate P(I |A) is still low if the base rate P(I) is low – Example 1: if P(A|I) = 1, P(A|¬I) = 10-5, P(I) = 2×10-5, P(I |A) = 66%, very low! – Example 2: if P(A|I) = 1, P(A|¬I) = 10-5, P(I) = 10-1, P(I |A) = 99.99% – Example 3: if P(A|I) = 1, P(A|¬I) = 10-9, P(I) = 2×10-5, P(I |A) = 99.99% 70/108 Measuring IDS performances • Conclusion: – If the base rate is low, the false alarm rate must be extremely low. • Example: – KDD cup data set without filtering has a very high base rate – no base rate fallacy. – What is good for the military, it is sometimes very bad for a non-military environment. 71/108 Measuring IDS performances • Another performance measure: ROC – Receiver Operating Characteristic – Used widely in systems for detection of signals in noise (radars, etc.) – TPR vs. FPR curve – An ideal system has TPR=1 and FPR=0. 72/108 Measuring IDS performances • Example of a ROC curve: % TPR IDS1 IDS2 % FPR 73/108 Measuring IDS performances • The use of ROC curves for assessing IDS has suffered criticism (McHugh, 2000): – Normally, an IDS would be characterised by a single point in the coordinates FPR-TPR (However, if a parameter of an IDS is varied, the ROC curve is obtained, instead of a single point). 74/108 Measuring IDS performances • Example – ROC of the IDS with the relabeling algorithm in which DB index and centroid diameters are implemented. • The parameter: DeltaDB – varied between 0.2 and 0.45. 75/108 Measuring IDS performances 76/108 Test data sets • For testing using simulated traffic/logs, a source of simulated traffic in which attacks are injected is needed. • A widely used simulated traffic data set is the KDD cup ’99 data set. 77/108 KDD cup 1999 • It corresponds to testing IDS carried out by MIT Lincoln Laboratory in 1998 and 1999. • In 1999, the KDD organized a contest in data mining and the data base used was that generated by Lincoln Laboratory. 78/108 KDD cup 1999 • KDD (SIGKDD) – ACM special interest group on knowledge discovery and data mining. • The purpose of the KDD CUP ’99 contest was to classify the given data in order to differentiate attack records from the normal traffic records. 79/108 KDD cup 1999 • The KDD Cup 1999 Data (1) – Various intrusions simulated in a military airbase network environment – 9 weeks of raw tcpdump data for a LAN simulating a typical U.S. Air Force LAN. – 4,900,000 data instances – vectors of extracted feature values from connection records. 80/108 KDD cup 1999 • The KDD Cup 1999 Data (2) – Data were split into 2 parts: • The raw training data (4Gb of compressed binary tcpdump – 7 weeks of network traffic – approx. 5 million connection records). • Test data – 2 weeks – approx. 2 million connection records. – A connection: • A sequence of TCP packets starting and ending at some well defined time instants, between which data flow to and from a source IP address to a target IP address under a well defined protocol. 81/108 KDD cup 1999 • The KDD Cup 1999 Data (3) – Each connection is labelled as either normal or as an attack, with exactly one specified attack type. – Each connection record consists of about 100 bytes. 82/108 KDD cup 1999 • Four categories of simulated attacks – DoS – denial of service (e.g. Syn flood). – R2L – unauthorized access from a remote machine (e.g. guessing password). – U2R – unauthorized access to superuser or root functions (e.g. various “buffer overflow” attacks). – Probing – surveillance and other probing for vulnerabilities (e.g. port scanning). 83/108 KDD cup 1999 • The test data do not have the same probability distribution as the training data. • They include specific attack types not in the training data. • This made the data mining task more realistic – the distribution of real data and types of possible attacks are normally not known during the training of the learning system. 84/108 KDD cup 1999 • The training data set contains 22 training attack types (1) – – – – – – back DoS buffer_overflow u2r ftp_write r2l guess_passwd r2l imap r2l ipsweep probe 85/108 KDD cup 1999 • The training data set attack types (2) – – – – – – land dos loadmodule u2r multihop r2l neptune dos nmap probe perl u2r 86/108 KDD cup 1999 • The training data set attack types (3) – – – – – – phf r2l pod dos portsweep probe rootkit u2r satan probe smurf dos 87/108 KDD cup 1999 • The training data set attack types (4) – – – – spy r2l teardrop dos warezclient r2l warezmaster r2l. • The test data set contains 14 additional attack types. 88/108 KDD cup 1999 • 41 higher level traffic features were defined in order to help distinguishing normal connections from attacks. • These features are divided into 3 categories: – Basic features of individual connections. – Content features within a connection suggested by domain knowledge. – Traffic features computed using a 2-second time window. 89/108 KDD cup 1999 • Basic features of individual connections (host-based traffic features): – Connection records were sorted by destination host. – Features were constructed using a window of 100 connections to the same host instead of a time window. – This is useful since some probing attacks scan the hosts (or ports) using a long time interval. 90/108 KDD cup 1999 • Content features within a connection suggested by domain knowledge: – These features look for suspicious behaviour in the data portions, such as the number of failed login attempts. 91/108 KDD cup 1999 • Traffic features computed using a twosecond time window (time based traffic features): – The same host features examine only the connections in the past two seconds that have the same destination host as the current connection, and calculate statistics related to protocol behaviour, service, etc. – The same service features examine only the connections in the past two seconds that have the same service as the current connection. 92/108 KDD cup 1999 • Basic features of individual connections (1) Feature name Description Type duration Length (in sec) of the connection Continuous protocol_type Type of the protocol, e.g. tcp, udp, etc. Discrete service Network service on the destination, e.g. http, telnet, etc. Discrete src_bytes Number of data bytes from source to destination Continuous dst_bytes Number of data bytes from destination to source Continuous 93/108 KDD cup 1999 • Basic features of individual connections (2) Feature name Description Type flag Normal or error status of the connection Discrete land 1 if connection is from/to the same host/port; 0 otherwise Discrete wrong_fragment Number of “wrong” fragments Continuous urgent Continuous Number of urgent packets 94/108 KDD cup 1999 • Content features (1) Feature name Description Type hot Number of “hot” indicators Continuous num_failed_logins Number of failed login attempts Continuous logged_in 1 if successfully logged in; 0 otherwise Discrete num_compromised Number of “compromised” conditions Continuous root_shell Discrete 1 if root shell is obtained; 0 otherwise 95/108 KDD cup 1999 • Content features (2) Feature name Description Type su_attempted 1 if “su root” command attempted; 0 otherwise Discrete num_root Number of “root” accesses Continuous num_file_creations Number of file creation operations Continuous num_shells Number of shell prompts Continuous num_access_files Number of operations on access control files Continuous 96/108 KDD cup 1999 • Content features (3) Feature name Description Type num_outbound_cmds Number of outbound commands Continuous in an ftp session is_hot_login 1 if the login belongs to the “hot” Discrete list; 0 otherwise is_guest_login 1 if the login is a “guest” login; 0 Discrete otherwise 97/108 KDD cup 1999 • Time-based traffic features (1) Feature name Description Type count Number of connections to the same host as the current connection in the past 2 seconds Continuous The following features refer to so called “same host” connections serror_rate % of connections that have “SYN” errors Continuous rerror_rate % of connections that have “REJ” errors Continuous 98/108 KDD cup 1999 • Time-based traffic features (2) Feature name Description Type “same host” connections (cont.) same_srv_rate % of connections to the same service Continuous diff_srv_rate % of connections to different services Continuous srv_count Number of connections to the same service as the current connection in the past 2 seconds Continuous 99/108 KDD cup 1999 • Time-based traffic features (3) Feature name Description Type The following features refer to so called “same service” connections srv_serror_rate % of connections that have “SYN” errors Continuous srv_rerror_rate % of connections that have “REJ” errors Continuous srv_diff_host_rate % of connections to different hosts Continuous 100/108 KDD cup 1999 • Selecting the right set of system features is a critical step when formulating the classification tasks (in this case – intrusion detection algorithm). 101/108 KDD cup 1999 • The 41 features were obtained by means of the following process: – Frequent sequential patterns (frequent episodes) from the network audit data were identified. – These patterns were used as guidelines to select and construct temporal statistical features. 102/108 KDD cup 1999 • Weaknesses of the KDD Cup data set: – Simulated data must be similar to real data – there is no proof that KDD cup data are similar to real data. – No anomalous packets that appear in real data. – No failure modes. – Synthetic attacks are not distributed realistically in the background normal data. – Simulated TCP traffic is not diverse enough (only 9 types of TCP traffic in KDD cup data set). 103/108 Stide • Stide (Sequence Time Delay Embedding) data set – collections of system calls – Instead of high-level features used in the KDD CUP ’99 database, low level features are used in order to identify potential intrusions – sequences of system calls. – In the training phase, Stide builds a database of all unique, contiguous system call sequences of a predetermined fixed length occurring in the traces. 104/108 Stide • During testing, Stide compares sequences in the new traces to those in the database, and reports an anomaly measure indicating how much the new traces differ from the normal training data. • 13726 traces of normal data were collected at the Computer Science Department, University of New Mexico. 105/108 PESIM 2005 • PESIM 2005 dataset – Fraunhofer Institute Berlin, Germany – Goal: to overcome the problem with the KDD Cup 1999 dataset. – A combination of 5 servers in a virtual machine environment (2 Windows, 2 Linux and 1 Solaris). – HTTP, FTP, and SMTP services. – To achieve realistic traffic characteristics, news sites were mirrored on the HTTP servers. – File sharing facilities offered on FTP servers. 106/108 PESIM 2005 • SMTP traffic injected artificially: – 70% mails from personal communication and mailing lists. – 30% spam mails received by 5 individuals. • Normal data pre-processed in the following way: – Random selection of 1000 TCP connections from each protocol. – Attachments removed from the TCP traffic. 107/108 PESIM 2005 • Attacks against the simulated services generated by penetration testing tools. – Multiple instances of 27 different attacks were launched against the HTTP, FTP, and SMTP services. – The origin of the major part of the attacks is from the Metasploit environment. – Some of the attacks were taken from the Bugtraq and Packet Storm Security lists. • The problem: not publicly available! 108/108