04 - School of Computing

advertisement

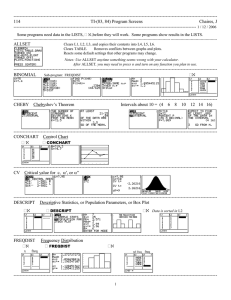

School of Computing, Faculty of Engineering

Word-counts and N-grams
COMP3310 Natural Language Processing
Eric Atwell, Language Research Group
(with thanks to Katja Markert, Marti Hearst, and other contributors)

Reminder
• Tokenization: by whitespace, or by regular expressions
• Problems: It's, data-base, New York …
• Jabberwocky shows we can break words into morphemes
• Morpheme types: root/stem, affix, clitic
• Derivational vs. inflectional
• Regular vs. irregular
• Concatenative vs. templatic (root-and-pattern)
• Morphological analysers: Porter stemmer, Morphy, PC-Kimmo
• Morphology by lookup: CatVar, CELEX, OALD++
• MorphoChallenge: unsupervised machine learning of morphology

Counting Token Distributions
Useful for lots of things. One cute application: see who talks where in a novel.
• The idea comes from Eick et al., who did it with The Jungle Book by Kipling.
[Figure: SeeSoft visualization of Jungle Book characters, from Eick, Steffen, and Sumner '92]

The FreqDist Data Structure
Purpose: collect counts and frequencies for some phenomenon.
• Initialize a new FreqDist:
    >>> import nltk
    >>> from nltk.probability import FreqDist
    >>> fd = FreqDist()
• When in a counting loop:
    fd.inc('item of interest')
• After done counting:
    fd.N()               # total number of tokens counted (N = number)
    fd.B()               # number of unique tokens (types; B = buckets)
    fd.samples()         # list of all the distinct tokens seen (there are B)
    fd.Nr(10)            # number of samples that occurred 10 times
    fd.count('red')      # number of times the token 'red' was seen
    fd.freq('red')       # relative frequency of 'red'; that is, fd.count('red')/fd.N()
    fd.max()             # which token had the highest count
    fd.sorted_samples()  # the samples in decreasing order of frequency
These method names follow older NLTK releases. In NLTK 3, FreqDist is a subclass of Python's collections.Counter: count with fd['item of interest'] += 1, read a count with fd['red'], list the samples with fd.keys(), and sort by frequency with fd.most_common(); N(), B(), Nr(), freq() and max() are still available.

[Figure: FreqDist() in action]

[Figures: Word Lengths by Language]

How to determine the characters?
Who are the main characters in a story? Simple solution: look for words that begin with capital letters and count how often each occurs. Then show the most frequent.

[Figure: Who are the main characters?]
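The FreqDist operations and the capital-letter heuristic for finding main characters can be tried without installing NLTK. The block below is a minimal sketch, assuming Python 3 and using only the standard library's collections.Counter (which NLTK 3's FreqDist subclasses). The helper names n_tokens, n_types, n_r, freq, max_item, main_characters and word_length_dist are hypothetical stand-ins for FreqDist's N(), B(), Nr(), freq() and max(), and the toy sentence is invented to stand in for a Gutenberg novel.

```python
from collections import Counter

# Hypothetical toy text standing in for a novel; tokenized by whitespace below.
TEXT = "Alice met Bob . Bob gave Alice a book and Alice read the book"


def n_tokens(fd):
    """Total number of tokens counted (like FreqDist.N())."""
    return sum(fd.values())


def n_types(fd):
    """Number of distinct tokens, i.e. types (like FreqDist.B())."""
    return len(fd)


def n_r(fd, r):
    """Number of types that occurred exactly r times (like FreqDist.Nr(r))."""
    return sum(1 for count in fd.values() if count == r)


def freq(fd, item):
    """Relative frequency of item: count / N (like FreqDist.freq())."""
    return fd[item] / n_tokens(fd)


def max_item(fd):
    """Most frequent token (like FreqDist.max())."""
    return fd.most_common(1)[0][0]


def main_characters(tokens, k=3):
    """Capital-letter heuristic: count capitalized tokens and return the top k.
    Caveat: sentence-initial words (e.g. 'The') would be counted too."""
    caps = Counter(tok for tok in tokens if tok[:1].isupper())
    return caps.most_common(k)


def word_length_dist(tokens):
    """Distribution of word lengths, ignoring non-alphabetic tokens."""
    return Counter(len(tok) for tok in tokens if tok.isalpha())


tokens = TEXT.split()
fd = Counter()
for tok in tokens:
    fd[tok] += 1  # same idiom works on an NLTK 3 FreqDist; older NLTK used fd.inc(tok)

print(n_tokens(fd), n_types(fd), n_r(fd, 1))  # 14 10 7
print(freq(fd, "Alice"), max_item(fd))
print(main_characters(tokens))                  # [('Alice', 3), ('Bob', 2)]
print(sorted(word_length_dist(tokens).items()))
```

Running word_length_dist over texts in different languages gives the per-language word-length comparison; the heuristic in main_characters is deliberately crude, so on a real novel the top entries would need filtering of capitalized function words.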
[Figure: And where in the story?]

Language Modeling
N-gram modelling: a fundamental concept in NLP.
Main idea:
• For a given language, some words are more likely than others to follow each other; and
• You can predict (with some degree of accuracy) the probability that a given word will follow another word.
• This works for words, and also for parts of speech, prosodic features, dialogue acts, …

Next Word Prediction
From a NY Times story...
• Stocks ...
• Stocks plunged this …
• Stocks plunged this morning, despite a cut in interest rates
• Stocks plunged this morning, despite a cut in interest rates by the Federal Reserve, as Wall ...
• Stocks plunged this morning, despite a cut in interest rates by the Federal Reserve, as Wall Street began

Human Word Prediction
Clearly, at least some of us have the ability to predict future words in an utterance. How?
• Domain knowledge
• Syntactic knowledge
• Lexical knowledge

Simple Statistics Does a Lot
A useful part of the knowledge needed to allow word prediction can be captured using simple statistical techniques. In particular, we'll rely on the notion of the probability of a sequence (a phrase, a sentence).

N-Gram Models of Language
Use the previous N-1 words in a sequence to predict the next word.
How do we train these models?
• Very large corpora

Simple N-Grams
Assume a language has V word types in its lexicon. How likely is word x to follow word y?
• Simplest model of word probability: 1/V
• Alternative 1: estimate the likelihood of x occurring in new text from its general frequency of occurrence in a corpus (unigram probability): popcorn is more likely to occur than unicorn
• Alternative 2: condition the likelihood of x on the previous words (bigrams, trigrams, …): mythical unicorn is more likely than mythical popcorn

[Figure: Computing Next Words]

Auto-generate a Story
If it simply chooses the most probable next word given the current word, the generator loops. Can you see why?
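The unigram and bigram estimates and the looping story generator can be made concrete with maximum-likelihood counts: P(x) = C(x) / N for unigrams, and P(x | y) = C(y x) / C(y ·) for bigrams, where C(y ·) is the number of bigrams starting with y. The block below is a minimal sketch under those assumptions, using only the Python standard library. The toy corpus and the function names (train_bigrams, unigram_prob, bigram_prob, sequence_prob, generate_greedy) are hypothetical, and no smoothing is applied, so any unseen bigram gets probability 0.

```python
from collections import Counter

# Hypothetical toy training corpus, tokenized by whitespace.
CORPUS = "the cat sat on the mat and the cat sat on the rug".split()


def train_bigrams(tokens):
    """Map each word y to a Counter of the words x that follow it: C(y x)."""
    counts = {}
    for y, x in zip(tokens, tokens[1:]):
        counts.setdefault(y, Counter())[x] += 1
    return counts


def unigram_prob(tokens, x):
    """Unigram MLE: P(x) = C(x) / N."""
    return tokens.count(x) / len(tokens)


def bigram_prob(counts, y, x):
    """Bigram MLE: P(x | y) = C(y x) / C(y .); 0.0 if y never had a successor."""
    followers = counts.get(y, Counter())
    total = sum(followers.values())
    return followers[x] / total if total else 0.0


def sequence_prob(counts, words):
    """P(words[1:] | words[0]) as a product of bigram probabilities."""
    p = 1.0
    for y, x in zip(words, words[1:]):
        p *= bigram_prob(counts, y, x)
    return p


def generate_greedy(counts, start, length):
    """Always append the single most probable next word given the current word."""
    words = [start]
    while len(words) < length:
        followers = counts.get(words[-1])
        if not followers:  # dead end: this word was never followed by anything
            break
        words.append(followers.most_common(1)[0][0])
    return words


counts = train_bigrams(CORPUS)
print(unigram_prob(CORPUS, "the"))                   # 4/13, about 0.308
print(bigram_prob(counts, "the", "cat"))             # 0.5
print(sequence_prob(counts, ["the", "cat", "sat"]))  # 0.5
print(" ".join(generate_greedy(counts, "the", 10)))  # the cat sat on the cat sat on the cat
```

Because the greedy choice depends only on the current word and is deterministic, the first time a word recurs ("the" here) the generator re-enters the same cycle. Sampling the next word in proportion to its probability (for example with random.choices) is one common way to break such cycles.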
The story generator is a bigram model: it conditions only on the current word.
• Better to take a longer history into account: trigram, 4-gram, … (but will this guarantee no loops?)

Applications
Why do we want to predict a word, given some preceding words?
• Rank the likelihood of sequences containing various alternative hypotheses, e.g. for automatic speech recognition (ASR):
    Theatre owners say popcorn/unicorn sales have doubled...
    See for yourself: EBL has Dragon NaturallySpeaking ASR.
• Assess the likelihood/goodness of a sentence, e.g. for text generation or machine translation:
    The doctor recommended a cat scan.
    El doctor recomendó una exploración del gato. (i.e. "an examination of the cat": "cat scan" translated word for word)

Comparing Modal Verb Counts
“can” and “will” are more frequent in skills and hobbies (Bob the Builder: “Yes we can!”).
How to implement this?

[Figures: Comparing Modals]

Reminder
• FreqDist counts of tokens and their distribution can be useful:
    e.g. find main characters in Gutenberg texts
    e.g. compare word lengths in different languages
• Humans can predict the next word …
• N-gram models are based on counts in a large corpus
• Auto-generate a story ... (but it gets stuck in a local maximum)
• Grammatical trends: modal verb distribution predicts genre
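The modal-verb comparison asks how to implement the count. A minimal sketch, assuming each genre's text is already available as a plain string and tokenized by whitespace: build one frequency distribution per genre and read off the modal counts, then normalize per 1,000 tokens so genres of different sizes can be compared. The genre texts are hypothetical toy data, not corpus figures, and the helper names modal_table and per_thousand are illustrative. With NLTK and the Brown corpus installed, nltk.ConditionalFreqDist over (genre, word) pairs does the same job.

```python
from collections import Counter

MODALS = ["can", "could", "may", "might", "must", "will"]

# Hypothetical toy texts, one per genre; real use would load a genre-labelled corpus.
TEXTS = {
    "hobbies": "you can paint it and you will see you can fix it",
    "news": "the minister said he could not and would not resign",
}


def modal_table(texts, modals=MODALS):
    """For each genre, count each modal verb (case-insensitive)."""
    table = {}
    for genre, text in texts.items():
        counts = Counter(word.lower() for word in text.split())
        table[genre] = {m: counts[m] for m in modals}
    return table


def per_thousand(texts, table):
    """Normalize counts per 1,000 tokens so genres of different sizes compare fairly."""
    return {
        genre: {m: 1000 * c / len(texts[genre].split()) for m, c in row.items()}
        for genre, row in table.items()
    }


table = modal_table(TEXTS)
print("genre".ljust(8) + "".join(m.rjust(7) for m in MODALS))
for genre, row in table.items():
    print(genre.ljust(8) + "".join(str(row[m]).rjust(7) for m in MODALS))
```

Raw counts are enough for a quick look, but per-1,000-token rates are the fairer basis for claims such as "can is more frequent in hobbies", since genre sections of a corpus rarely have equal sizes.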