Blitz: A Principled Meta-Algorithm for Scaling Sparse Optimization

advertisement

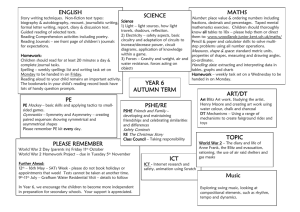

Tyler B. Johnson and Carlos Guestrin
University of Washington

Optimization
• Very important to machine learning
[Figure: models of data and the optimal model]
• Our focus is constrained convex optimization
• The number of constraints can be very large!

Classification Example
• Sparse regression
• Many constraints in the dual problem

Choices for Scaling Optimization
• Stochastic methods
• Parallelization
• Active sets (subject of this talk)

Active Set Motivation
[Figure: important fact]

Convex Optimization with Active Sets
[Figure: convex objective f over the feasible set]
1. Choose a set of constraints.
2. Set x to minimize the objective subject to the chosen constraints.
Then repeat. The algorithm converges when x is feasible!

Limitations of Active Sets
Until x is feasible do
  Propose active set of important constraints
  x ← Minimizer of objective s.t. only active set
• How many iterations to expect? x is infeasible until convergence
• Which constraints are important?
• How many constraints to choose?
• When to terminate the subproblem?

Blitz
Start from y, a feasible point, and x, the minimizer subject to no constraints. Then repeat:
1. Update y to be the extreme feasible point on the segment [y, x].
2. Select the top k constraints with boundaries closest to y.
3. Set x to minimize the objective subject to the selected constraints.
When x = y, Blitz converges!
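The three Blitz steps can be sketched on a toy problem. This is a minimal sketch under our own assumptions, not the authors' implementation: the objective is taken to be f(x) = 0.5 * ||x - c||^2 with linear constraints A x <= b, the subproblem is solved by projected gradient ascent on its dual (a solver we swapped in for illustration), k is fixed by hand rather than chosen by the theory, and the names `solve_subproblem` and `blitz_sketch` are hypothetical.

```python
import numpy as np

# Assumption (not from the slides): f(x) = 0.5 * ||x - c||^2 and linear constraints A x <= b.


def solve_subproblem(c, A_S, b_S, iters=5000):
    """Minimize 0.5 * ||x - c||^2 subject to A_S x <= b_S.

    Projected gradient ascent on the dual variables mu >= 0; the primal
    point is recovered as x = c - A_S^T mu.
    """
    mu = np.zeros(len(b_S))
    step = 1.0 / max(np.linalg.norm(A_S, 2) ** 2, 1e-12)  # 1 / Lipschitz constant of the dual gradient
    for _ in range(iters):
        x = c - A_S.T @ mu
        mu = np.maximum(0.0, mu + step * (A_S @ x - b_S))  # dual gradient is A_S x - b_S
    return c - A_S.T @ mu


def blitz_sketch(c, A, b, y0, k=4, tol=1e-6, max_iter=50):
    """Blitz-style loop; y0 must satisfy A y0 <= b."""
    y = y0.astype(float)
    x = c.astype(float)  # minimizer subject to no constraints
    row_norms = np.linalg.norm(A, axis=1)
    for _ in range(max_iter):
        if np.max(A @ x - b) <= tol:
            # x minimizes a relaxation and satisfies every constraint, so it is optimal
            return x
        # 1. Move y to the extreme feasible point on the segment [y, x]
        d = x - y
        Ad = A @ d
        moving = Ad > 0  # only these constraints can become tight along the segment
        alpha = min(1.0, np.min((b - A @ y)[moving] / Ad[moving]))
        y = y + max(alpha, 0.0) * d
        # 2. Select the k constraints whose boundaries are closest to y
        dist = (b - A @ y) / row_norms
        S = np.argsort(dist)[:k]
        # 3. Minimize the objective subject to the selected constraints only
        x = solve_subproblem(c, A[S], b[S])
    return x


# Usage on a toy feasible set: a regular 20-gon circumscribing the unit circle
theta = 2 * np.pi * np.arange(20) / 20
A = np.column_stack([np.cos(theta), np.sin(theta)])
b = np.ones(20)
x_opt = blitz_sketch(np.array([3.0, 2.0]), A, b, y0=np.zeros(2), k=4)
print(x_opt)  # approximately [0.8944, 0.4702]
```

On this toy instance the first y-update stops on the facet whose normal is at 36 degrees, and the four constraints nearest y already include that facet, so the second subproblem returns a point that satisfies all 20 constraints and the loop stops.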
Blitz Intuition
• The key to Blitz is its y-update
• If the y-update is large, Blitz is near convergence
• If the y-update is small, then a violated constraint greatly improves x at the next iteration, so x must improve significantly

Main Theorem
Theorem 2.1

Active Set Size for Linear Convergence
Corollary 2.2

Constraint Screening
Corollary 2.3

Tuning Algorithmic Parameters
Theory guides the choice of:
• Active set size
• Subproblem termination criteria
[Figure: parameters tuned using theory compared with the best fixed parameters]

Recap
Blitz is an active set algorithm that:
• Selects theoretically justified active sets to maximize guaranteed progress
• Applies theoretical analysis to guide the choice of algorithm parameters
• Discards constraints proven to be irrelevant during optimization

Empirical Evaluation

Experiment Overview
• Apply Blitz to L1-regularized loss minimization
• The dual is a constrained problem
• Optimizing subject to an active set corresponds to solving the primal problem over a subset of variables

Single Machine, Data in Memory
[Figure: relative suboptimality vs. time (s). No prioritization: ProxNewt, CD, L1_LR. Active sets: LIBLINEAR, GLMNET, Blitz.]
Experiment with the high-dimensional RCV1 dataset

Limited Memory Setting
• Data cannot always fit in memory
• Active set methods require only a subset of the data at each iteration to solve the subproblem
• Set-up:
  – 1 pass over the data to load the active set
  – Solve the subproblem with the active set in memory
  – Repeat
[Figure: relative suboptimality vs. time (s). No prioritization: AdaGrad_1.0, AdaGrad_10.0, AdaGrad_100.0, CD. Prioritized memory usage: Strong Rule, Blitz.]
Experiment with the 12 GB Webspam dataset and 1 GB of memory

Distributed Setting
• With more than one machine, communication is costly
• Blitz subproblems require communication only for active set features
• Set-up:
  – Solve with synchronous bulk gradient descent
  – Prioritize communication using active sets
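The experiment overview's correspondence between dual constraints and primal variables can be made concrete for one L1-regularized loss, the Lasso, 0.5 * ||y - X w||^2 + lam * ||w||_1, whose dual has one constraint |X_j^T theta| <= lam per feature j. The sketch below assumes squared loss and uses hypothetical names of our own: it solves the primal over a working set W of features with coordinate descent, then rescales the residual r into a dual-feasible point to measure the duality gap. Its rule for growing W (add the features with the largest |X_j^T r|, i.e. the most violated dual constraints) is a simple stand-in, not Blitz's selection rule.

```python
import numpy as np


def lasso_cd(X, y, lam, w, sweeps=500):
    """Cyclic coordinate descent for 0.5 * ||y - X w||^2 + lam * ||w||_1 (updates w in place)."""
    col_sq = (X ** 2).sum(axis=0)
    r = y - X @ w
    for _ in range(sweeps):
        for j in range(X.shape[1]):
            if col_sq[j] == 0.0:
                continue
            r += X[:, j] * w[j]  # residual without feature j
            rho = X[:, j] @ r
            w[j] = np.sign(rho) * max(abs(rho) - lam, 0.0) / col_sq[j]  # soft-threshold
            r -= X[:, j] * w[j]
    return w


def working_set_lasso(X, y, lam, grow=5, tol=1e-8, max_outer=30):
    """Hypothetical working-set loop: feature j <-> dual constraint |X_j^T theta| <= lam."""
    w = np.zeros(X.shape[1])
    W = np.zeros(0, dtype=int)
    gap = np.inf
    for _ in range(max_outer):
        r = y - X @ w
        corr = np.abs(X.T @ r)  # dual constraint values at theta = r
        theta = r * min(1.0, lam / max(corr.max(), 1e-12))  # rescale r to be dual feasible
        primal = 0.5 * r @ r + lam * np.abs(w).sum()
        dual = 0.5 * y @ y - 0.5 * (y - theta) @ (y - theta)
        gap = primal - dual
        if gap <= tol:
            break
        # Add the features whose dual constraints are most violated by r
        in_W = set(W.tolist())
        new = [j for j in np.argsort(-corr) if j not in in_W][:grow]
        W = np.concatenate([W, np.array(new, dtype=int)])
        # Solve the primal over the working set only (features outside W stay at zero)
        w[W] = lasso_cd(X[:, W], y, lam, w[W])
    return w, gap, W


# Usage on synthetic data with a sparse ground truth
rng = np.random.default_rng(0)
X = rng.standard_normal((50, 200))
X /= np.linalg.norm(X, axis=0)
y = X[:, :3] @ np.array([3.0, -2.0, 1.5]) + 0.1 * rng.standard_normal(50)
lam = 0.2 * np.max(np.abs(X.T @ y))
w, gap, W = working_set_lasso(X, y, lam)
print(len(W), gap)
```

Only the columns in W are touched by the subproblem solver, which is the property the limited-memory and distributed set-ups exploit.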
[Figure: relative suboptimality vs. time (min). No prioritization: gradient descent. Prioritized communication: KKT Filter, Blitz.]
Experiment with the Criteo CTR dataset and 16 machines

Takeaways
• Active sets are effective at exploiting structure!
• We have introduced Blitz, an active set algorithm that
  – Provides novel, useful theoretical guarantees
  – Is very fast in practice
• Future work
  – Extensions to a larger variety of problems
  – Modifications such as constraint sampling
• Thanks!

References
• Bach, F., Jenatton, R., Mairal, J., and Obozinski, G. Optimization with sparsity-inducing penalties. Foundations and Trends in Machine Learning, 4(1):1–106, 2012.
• Duchi, J., Hazan, E., and Singer, Y. Adaptive subgradient methods for online learning and stochastic optimization. Journal of Machine Learning Research, 12:2121–2159, 2011.
• Fan, R. E., Chen, P. H., and Lin, C. J. Working set selection using second order information for training support vector machines. Journal of Machine Learning Research, 6:1889–1918, 2005.
• Fercoq, O. and Richtárik, P. Accelerated, parallel and proximal coordinate descent. Technical Report arXiv:1312.5799, 2013.
• Friedman, J., Hastie, T., and Tibshirani, R. Regularization paths for generalized linear models via coordinate descent. Journal of Statistical Software, 33(1):1–22, 2010.
• Ghaoui, L. E., Viallon, V., and Rabbani, T. Safe feature elimination for the lasso and sparse supervised learning problems. Pacific Journal of Optimization, 8(4):667–698, 2012.
• Kim, H. and Park, H. Nonnegative matrix factorization based on alternating nonnegativity constrained least squares and active set method. SIAM Journal on Matrix Analysis and Applications, 30(2):713–730, 2008.
• Kim, S. J., Koh, K., Lustig, M., Boyd, S., and Gorinevsky, D. An interior-point method for large-scale L1-regularized least squares. IEEE Journal of Selected Topics in Signal Processing, 1(4):606–617, 2007.
• Koh, K., Kim, S. J., and Boyd, S. An interior-point method for large-scale L1-regularized logistic regression. Journal of Machine Learning Research, 8:1519–1555, 2007.
• Li, M., Smola, A., and Andersen, D. G. Communication efficient distributed machine learning with the parameter server. In Advances in Neural Information Processing Systems 27, 2014.
• Tibshirani, R., Bien, J., Friedman, J., Hastie, T., Simon, N., Taylor, J., and Tibshirani, R. J. Strong rules for discarding predictors in lasso-type problems. Journal of the Royal Statistical Society, Series B, 74(2):245–266, 2012.
• Tsochantaridis, I., Joachims, T., Hofmann, T., and Altun, Y. Large margin methods for structured and interdependent output variables. Journal of Machine Learning Research, 6:1453–1484, 2005.
• Xiao, L. Dual averaging methods for regularized stochastic learning and online optimization. Journal of Machine Learning Research, 11:2543–2596, 2010.
• Yuan, G. X., Ho, C. H., and Lin, C. J. An improved GLMNET for L1-regularized logistic regression. Journal of Machine Learning Research, 13:1999–2030, 2012.

Active Set Algorithm
1. Until x is feasible do
2.   Propose active set of important constraints
3.   x ← Minimizer of objective s.t. only active set

Computing y Update
• Computing the y-update is a 1D optimization problem
• In the worst case, it can be solved with the bisection method
• For the linear case, the solution is simpler
• Requires considering all constraints

Single Machine, Data in Memory
[Figure: support set recall and support set precision vs. time (s). No prioritization: ProxNewt, CD, L1_LR. Active sets: LIBLINEAR, GLMNET, Blitz.]
Experiment with the high-dimensional RCV1 dataset
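The y-update on the "Computing y Update" slide is a 1D problem over a step alpha in [0, 1]. Assuming each constraint is written as g(z) <= 0 with g convex and y feasible, the feasible part of the segment from y to x is an interval [0, alpha*], so bisection on alpha finds its endpoint; for linear constraints a single ratio test gives it exactly. Both functions below are hypothetical illustrations rather than the authors' code, and both evaluate every constraint, matching the slide's note.

```python
import numpy as np


def extreme_point_bisection(y, x, constraints, tol=1e-10, max_iter=200):
    """Largest alpha in [0, 1] with y + alpha * (x - y) satisfying g(z) <= 0 for every g.

    Assumes y is feasible and every g is convex, so the feasible part of
    the segment is an interval [0, alpha*] and bisection on alpha is valid.
    """
    d = x - y

    def feasible(alpha):
        z = y + alpha * d
        return all(g(z) <= 0.0 for g in constraints)  # checks every constraint

    if feasible(1.0):
        return x.copy(), 1.0
    lo, hi = 0.0, 1.0  # invariant: lo is feasible, hi is infeasible
    for _ in range(max_iter):
        if hi - lo <= tol:
            break
        mid = 0.5 * (lo + hi)
        if feasible(mid):
            lo = mid
        else:
            hi = mid
    return y + lo * d, lo


def extreme_point_linear(y, x, A, b):
    """Closed form for linear constraints A z <= b: a ratio test over all rows."""
    d = x - y
    Ad = A @ d
    moving = Ad > 0  # constraints that tighten when moving from y toward x
    if not np.any(moving):
        return x.copy(), 1.0
    alpha = min(1.0, float(np.min((b - A @ y)[moving] / Ad[moving])))
    return y + alpha * d, alpha


# Usage: from the origin toward (2, 0), the unit disk is left at alpha = 0.5
z, alpha = extreme_point_bisection(np.zeros(2), np.array([2.0, 0.0]), [lambda z: z @ z - 1.0])
print(z, alpha)
```

Bisection returns the last feasible alpha it visited, so the new y stays feasible even when alpha* is only known to within `tol`.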