PPT

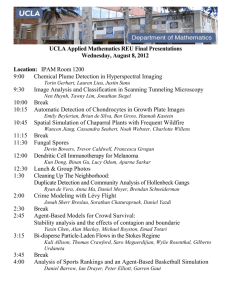

advertisement

Machine Learning II

Decision Tree Induction

CSE 473

Logistics

• PS 2 Due

• Review Session Tonight

• Exam on Wed

Closed Book

Questions like the non-programming parts of the PSets

• PS 3 out in a week

© Daniel S. Weld


Machine Learning Outline

• Machine learning:

√Function approximation

√Bias

• Supervised learning

Classifiers

A supervised learning technique in depth

Induction of Decision Trees

• Ensembles of classifiers

• Overfitting


Terminology

Defined by restriction bias


Past Insight

Any ML problem may be

cast as the problem of

FUNCTION APPROXIMATION


Types of Training Experience

• Credit assignment problem:

Direct training examples:

• E.g., individual checkerboard positions + the correct move for each

• Supervised learning

Indirect training examples:

• E.g. complete sequence of moves and final result

• Reinforcement learning

• Which examples:

Random, teacher chooses, learner chooses


Supervised Learning

(aka Classification)

FUNCTION APPROXIMATION

In the simplest case, the experience is a set of input/output pairs:

{<x1, f(x1)>, <x2, f(x2)>, …}


Issues: Learning Algorithms


Insight 2: Bias

Learning occurs when

PREJUDICE meets DATA!

• The nice word for prejudice is “bias”.

• What kind of hypotheses will you consider?

What is the allowable range of functions you use when approximating?

• What kind of hypotheses do you prefer?


Two Strategies for ML

• Restriction bias: use prior knowledge to

specify a restricted hypothesis space.

Version space algorithm over conjunctions.

• Preference bias: use a broad hypothesis

space, but impose an ordering on the

hypotheses.

Decision trees.


Example: “Good day for sports”

• Attributes of instances

Wind

Temperature

Humidity

Outlook

• Feature = attribute with one value

E.g. outlook = sunny

• Sample instance

wind=weak, temp=hot, humidity=high, outlook=sunny


Experience: “Good day for sports”

Day   Outlook  Temp  Humid  Wind  PlayTennis?
d1    s        h     h      w     n
d2    s        h     h      s     n
d3    o        h     h      w     y
d4    r        m     h      w     y
d5    r        c     n      w     y
d6    r        c     n      s     y
d7    o        c     n      s     y
d8    s        m     h      w     n
d9    s        c     n      w     y
d10   r        m     n      w     y
d11   s        m     n      s     y
d12   o        m     h      s     y
d13   o        h     n      w     y
d14   r        m     h      s     n

Restricted Hypothesis Space

Hypotheses are conjunctions of attribute constraints


Resulting Learning Problem


Consistency

• Say an “example is consistent with a hypothesis” when they agree, i.e., the hypothesis classifies the example the same way as the example's label

• Hypothesis (Sky, Temp, Humidity, Wind)

<?, cold, high, ?>

• Examples:

<sun, cold, high, strong>

<sun, hot, high, low>
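The consistency check can be sketched in a few lines. This is a minimal illustration, not the course's code. It assumes that a hypothesis is a tuple with one constraint per attribute, where "?" accepts any value. It also assumes that "agree" means the hypothesis's prediction equals the example's boolean label. The helper names `matches` and `is_consistent` are hypothetical.

```python
# Hypothetical helpers: a conjunctive hypothesis is a tuple with one
# constraint per attribute (Sky, Temp, Humidity, Wind); "?" accepts any value.
WILDCARD = "?"


def matches(hypothesis, instance):
    """True if the instance satisfies every constraint in the conjunction."""
    return all(h == WILDCARD or h == x for h, x in zip(hypothesis, instance))


def is_consistent(hypothesis, instance, label):
    """Assumed reading of "agree": the hypothesis predicts the example's label
    (label is True for a positive example, False for a negative one)."""
    return matches(hypothesis, instance) == label


h = ("?", "cold", "high", "?")
print(matches(h, ("sun", "cold", "high", "strong")))  # True
print(matches(h, ("sun", "hot", "high", "low")))      # False
```

The slide does not give labels for the two examples, so their consistency depends on those labels. For instance, `is_consistent(h, ("sun", "hot", "high", "low"), False)` returns True, because h rejects the instance and the example is negative.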


Naïve Algorithm


More-General-Than Order

{?, cold, high, ?, ?} >> {sun, cold, high, ?, low}    (read ">>" as "is more general than")
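For conjunctions of "?" and specific values like the ones on this slide, the more-general-than test is purely syntactic. h1 is at least as general as h2 when every constraint in h1 is either "?" or identical to the matching constraint in h2. The sketch below assumes that representation. The function names are hypothetical.

```python
WILDCARD = "?"


def more_general_or_equal(h1, h2):
    """h1 >=g h2: each constraint of h1 is '?' or equals h2's constraint."""
    return all(a == WILDCARD or a == b for a, b in zip(h1, h2))


def strictly_more_general(h1, h2):
    """h1 >> h2: h1 covers everything h2 covers, but not vice versa."""
    return more_general_or_equal(h1, h2) and not more_general_or_equal(h2, h1)


general = ("?", "cold", "high", "?", "?")
specific = ("sun", "cold", "high", "?", "low")
print(strictly_more_general(general, specific))  # True
print(strictly_more_general(specific, general))  # False
```

The relation is only a partial order. Two hypotheses such as ("sun", "?") and ("?", "high") are incomparable, since neither test succeeds in either direction.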


Ordering on Hypothesis Space


Search Space

• Nodes?

• Operators?



Decision Trees

• Convenient Representation

Developed with learning in mind

Deterministic

• Expressive

Equivalent to propositional DNF

Handles discrete and continuous attributes

• Simple learning algorithm

Handles noise well

Classified as follows:

• Constructive (build DT by adding nodes)

• Eager

• Batch (but incremental versions exist)


Classification

• E.g. Learn concept “Edible mushroom”

Target Function has two values: T or F

• Represent concepts as decision trees

• Use hill-climbing search through the space of decision trees

Start with simple concept

Refine it into a complex concept as needed



Decision Tree Representation

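Good day for tennis?

Leaves = classification

Arcs = choice of value for parent attribute

Outlook
  Sunny → Humidity
    High → No
    Normal → Yes
  Overcast → Yes
  Rain → Wind
    Strong → No
    Weak → Yes

A decision tree is equivalent to a formula in disjunctive normal form (DNF). Each root-to-Yes path is one conjunction:

$\text{G-Day} \equiv (\text{Sunny} \wedge \text{Normal}) \vee \text{Overcast} \vee (\text{Rain} \wedge \text{Weak})$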
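The sketch below encodes the tree above as nested Python tuples and dicts. The encoding and the names `classify` and `to_dnf` are illustrative assumptions. Classifying an instance walks one arc per level. Collecting every root-to-Yes path yields one conjunction per path, and joining those conjunctions with OR gives the DNF formula.

```python
# The "Good day for tennis?" tree: an internal node is (attribute, {value: subtree});
# a leaf is the string "Yes" or "No".
TREE = ("Outlook", {
    "Sunny": ("Humidity", {"High": "No", "Normal": "Yes"}),
    "Overcast": "Yes",
    "Rain": ("Wind", {"Strong": "No", "Weak": "Yes"}),
})


def classify(tree, instance):
    """Follow the arc matching the instance's value until a leaf is reached."""
    while not isinstance(tree, str):
        attribute, branches = tree
        tree = branches[instance[attribute]]
    return tree


def to_dnf(tree, path=()):
    """Return one conjunction (list of attribute=value tests) per Yes leaf."""
    if isinstance(tree, str):
        return [list(path)] if tree == "Yes" else []
    attribute, branches = tree
    terms = []
    for value, subtree in branches.items():
        terms += to_dnf(subtree, path + (f"{attribute}={value}",))
    return terms


print(classify(TREE, {"Outlook": "Rain", "Wind": "Weak", "Humidity": "High"}))  # Yes
print(" OR ".join("(" + " AND ".join(t) + ")" for t in to_dnf(TREE)))
# (Outlook=Sunny AND Humidity=Normal) OR (Outlook=Overcast) OR (Outlook=Rain AND Wind=Weak)
```

The DNF terms are listed in the same order as the branches, because Python dicts preserve insertion order.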


DT Learning as Search

• Nodes

Decision Trees

• Operators

Tree Refinement: Sprouting the tree

• Initial node

Smallest tree possible: a single leaf

• Heuristic?

Information Gain

• Goal?

Best tree possible (???)
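The search described above can be sketched as greedy top-down induction in the style of Quinlan's ID3:

- start from a single leaf;
- sprout the tree one split at a time;
- rank candidate splits by information gain.

This is a minimal sketch, not the course's reference implementation. The names (`id3`, `gain`, `classify`), the tuple encoding of nodes, and the stopping rule are assumptions. The sketch stops when a node is pure or no attributes remain, because the slides leave "when to stop" open. Because it commits to the best-looking split and never backtracks, this is hill climbing.

```python
import math
from collections import Counter

ATTRS = ["outlook", "temp", "humid", "wind"]
# d1..d14 transcribed from the "Good day for sports" table: 4 attributes, then label.
ROWS = [
    ("s", "h", "h", "w", "n"), ("s", "h", "h", "s", "n"), ("o", "h", "h", "w", "y"),
    ("r", "m", "h", "w", "y"), ("r", "c", "n", "w", "y"), ("r", "c", "n", "s", "y"),
    ("o", "c", "n", "s", "y"), ("s", "m", "h", "w", "n"), ("s", "c", "n", "w", "y"),
    ("r", "m", "n", "w", "y"), ("s", "m", "n", "s", "y"), ("o", "m", "h", "s", "y"),
    ("o", "h", "n", "w", "y"), ("r", "m", "h", "s", "n"),
]
EXAMPLES = [(dict(zip(ATTRS, r[:4])), r[4]) for r in ROWS]


def entropy(labels):
    n = len(labels)
    return -sum((k / n) * math.log2(k / n) for k in Counter(labels).values())


def gain(examples, attr):
    """Information gain of splitting `examples` on `attr`."""
    remainder = 0.0
    for value in {x[attr] for x, _ in examples}:
        subset = [y for x, y in examples if x[attr] == value]
        remainder += len(subset) / len(examples) * entropy(subset)
    return entropy([y for _, y in examples]) - remainder


def id3(examples, attrs):
    """Grow a tree top-down; a leaf is a label, a node is (attr, branches, default)."""
    labels = [y for _, y in examples]
    majority = Counter(labels).most_common(1)[0][0]
    if len(set(labels)) == 1 or not attrs:  # pure node or nothing left to test
        return majority
    best = max(attrs, key=lambda a: gain(examples, a))  # greedy: highest gain wins
    rest = [a for a in attrs if a != best]
    branches = {
        v: id3([(x, y) for x, y in examples if x[best] == v], rest)
        for v in sorted({x[best] for x, _ in examples})
    }
    return (best, branches, majority)  # majority label answers unseen values


def classify(tree, x):
    while isinstance(tree, tuple):
        attr, branches, default = tree
        if x[attr] not in branches:
            return default
        tree = branches[x[attr]]
    return tree


tree = id3(EXAMPLES, ATTRS)
print(tree)
print(sum(classify(tree, x) == y for x, y in EXAMPLES), "of", len(EXAMPLES), "correct")
```

With the table as transcribed here (10 positive, 4 negative), the sketch picks Humid at the root. Its output therefore need not match the tree drawn on the representation slide.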


What is the Simplest Tree?

Training data: the same 14 days (d1 through d14) as in the “Good day for sports” table.

Simplest tree: a single leaf that predicts Yes, the majority class

How good?

[10+, 4-]

Means:

correct on 10 examples

incorrect on 4 examples
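A single leaf that always predicts the majority class is the smallest possible tree. The sketch below uses labels transcribed from the PlayTennis? column and reproduces the [10+, 4-] count. The helper names are hypothetical.

```python
from collections import Counter

# PlayTennis? column for d1..d14, transcribed from the table.
LABELS = ["n", "n", "y", "y", "y", "y", "y", "n", "y", "y", "y", "y", "y", "n"]


def majority_leaf(labels):
    """The label a single-leaf tree would predict: the most common class."""
    return Counter(labels).most_common(1)[0][0]


def score_leaf(prediction, labels):
    """Count how many examples a constant prediction gets right and wrong."""
    correct = sum(1 for y in labels if y == prediction)
    return correct, len(labels) - correct


leaf = majority_leaf(LABELS)
correct, incorrect = score_leaf(leaf, LABELS)
print(f"leaf={leaf!r}: correct on {correct}, incorrect on {incorrect}")
# leaf='y': correct on 10, incorrect on 4
```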


Successors

Current tree: a single leaf, Yes

Candidate successors: split the leaf on Humid, Wind, Outlook, or Temp

Which attribute should we use to split?


To be decided:

• How to choose best attribute?

Information gain

Entropy (disorder)

• When to stop growing tree?
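Information gain scores each candidate split by how much it reduces entropy (disorder). Using the standard definitions:

- entropy: H(S) = -Σ_c p_c log2 p_c, where p_c is the proportion of class c in S;
- information gain: Gain(S, A) = H(S) - Σ_v (|S_v| / |S|) H(S_v), where S_v is the subset of S with A = v.

The sketch below computes the gain of each of the four successors on the training table. It is a minimal illustration with hypothetical names.

```python
import math
from collections import Counter

ATTRS = ("outlook", "temp", "humid", "wind")
# d1..d14 transcribed from the table: outlook, temp, humid, wind, PlayTennis?
ROWS = [
    ("s", "h", "h", "w", "n"), ("s", "h", "h", "s", "n"), ("o", "h", "h", "w", "y"),
    ("r", "m", "h", "w", "y"), ("r", "c", "n", "w", "y"), ("r", "c", "n", "s", "y"),
    ("o", "c", "n", "s", "y"), ("s", "m", "h", "w", "n"), ("s", "c", "n", "w", "y"),
    ("r", "m", "n", "w", "y"), ("s", "m", "n", "s", "y"), ("o", "m", "h", "s", "y"),
    ("o", "h", "n", "w", "y"), ("r", "m", "h", "s", "n"),
]


def entropy(labels):
    """H(S) = -sum over classes c of p_c * log2(p_c)."""
    n = len(labels)
    return -sum((k / n) * math.log2(k / n) for k in Counter(labels).values())


def information_gain(rows, i):
    """H(S) minus the size-weighted entropy of the subsets S_v for attribute i."""
    remainder = 0.0
    for value in {r[i] for r in rows}:
        subset = [r[-1] for r in rows if r[i] == value]
        remainder += len(subset) / len(rows) * entropy(subset)
    return entropy([r[-1] for r in rows]) - remainder


gains = {a: information_gain(ROWS, i) for i, a in enumerate(ATTRS)}
print(f"root entropy = {entropy([r[-1] for r in ROWS]):.3f}")
for a, g in sorted(gains.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{a:8s} gain = {g:.3f}")
```

On the table as transcribed, the root [10+, 4-] has entropy of about 0.863. The gains rank as follows:

- Humid: about 0.37 (highest);
- Outlook: about 0.26;
- Temp: about 0.18;
- Wind: under 0.01.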
