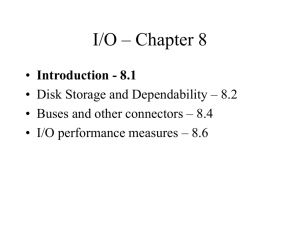

Storage


Storage: Cheap or Fast, Pick One

Storage
• Great, you can do a lot of computation. But this often generates a lot of data. Where are you going to put it?
• In the demo clusters we run, the front end just has a little more disk than the compute nodes. This is used for the NFS-mounted home directories.
• But single drives do not have very good performance and are vulnerable to single-point failure.

RAID
• The next step up is RAID (Redundant Array of Inexpensive, or Independent, Disks).
• This uses a pool of disks to save data. Rather than spend billions building special high-capacity disks, greater capacity is achieved by simply putting PC disks into RAIDs.
• Typically you need a RAID controller on the host.
• There are several types, or levels, of RAID.

RAID 0
• RAID 0 writes blocks across multiple disks (striping) without redundancy.
• Because the data is written to multiple disks, the controller can work on them in parallel for both reads and writes, improving performance.
• If any one disk fails, data is lost.
• Don't use it for mission-critical data; it is only for performance.
• Ideally you have one drive per controller.

RAID 1
• This is mirroring. The same data is written to two disks. If either disk fails, a complete copy of the data is available on the other disk.
• It uses 2X the storage space. It can get better read performance because the OS can pick the disk with the least seek or rotational latency.

RAID-5
• RAID-5 uses "parity", or redundant information. If a disk fails, enough parity information is available to recover the data.
• The parity information is spread across all the disks.
• High read rate, medium write rate.
• A disk failure requires a rebuild, because the parity information must be used to re-create the lost data.

RAID-10
• RAID-10 is striping plus mirroring, so you get good performance plus fully mirrored data, at the expense of 2X disk.

Storage
• RAID-5 is a reasonable choice most of the time.
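The striping and parity ideas above can be made concrete with a small Python model. This is a sketch under simplifying assumptions, not how any particular controller works. The "disks" are byte strings in memory, and RAID 0 stripes blocks round-robin. The RAID-5 parity block is the byte-wise XOR of the data blocks in a stripe. The parity rotation in the hypothetical helper `raid5_parity_disk` is only one of several layouts that real controllers use.

```python
from functools import reduce
from typing import List, Optional, Tuple


def raid0_location(block: int, n_disks: int) -> Tuple[int, int]:
    """RAID 0: map a logical block to (disk index, block offset on that disk).

    Consecutive blocks land on different disks, so they can be read or
    written in parallel.
    """
    return block % n_disks, block // n_disks


def xor_blocks(blocks: List[bytes]) -> bytes:
    """Byte-wise XOR of equal-length blocks (the RAID-5 parity function)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def raid5_stripe(data_blocks: List[bytes]) -> List[bytes]:
    """Return one RAID-5 stripe: the data blocks followed by their parity."""
    return data_blocks + [xor_blocks(data_blocks)]


def raid5_parity_disk(stripe_no: int, n_disks: int) -> int:
    """Disk holding the parity for a given stripe (assumed rotating layout).

    Rotating the parity spreads it across all disks instead of making one
    dedicated parity disk a bottleneck.
    """
    return (n_disks - 1) - (stripe_no % n_disks)


def raid5_rebuild(stripe: List[Optional[bytes]]) -> bytes:
    """Re-create the one missing block (None) by XOR-ing all the survivors."""
    survivors = [b for b in stripe if b is not None]
    if len(survivors) != len(stripe) - 1:
        raise ValueError("RAID-5 can only recover from a single failed disk")
    return xor_blocks(survivors)


if __name__ == "__main__":
    stripe = raid5_stripe([b"\x01\x02", b"\x10\x20", b"\xff\x00"])
    print(stripe[-1].hex())            # parity block: ee22
    stripe[1] = None                   # simulate losing the second disk
    print(raid5_rebuild(stripe).hex()) # recovered block: 1020
```

XOR works as parity because any one block of a stripe equals the XOR of all the others. The same operation that computes the parity on write also rebuilds a lost block. The rebuild reads every surviving disk, which is why writes and rebuilds cost more than reads.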
• There are many commodity vendors of RAID arrays.
• SCSI RAID arrays are expensive, the disks are expensive, and the disks have low capacity, but the RAID arrays have good performance.
• ATA RAID arrays have an excellent price (1/3 to 1/2 that of SCSI drives) and capacity, with somewhat lower performance.
  • Apple ATA RAID: 7 TB, $11.5K
  • Promise VTrak 15110: $4K plus 15 400 GB SATA disks at $300 each = 6 TB for $8,500

Storage in Clusters
• OK, so now you've got a RAID array. Now what?
• A pretty typical approach is to attach the RAID array to a host and then have that host serve NFS. This is sometimes called "Network Attached Storage", or NAS.
• Rather than being attached to the front end, this may be a node of its own in the cluster.

[Figure: NAS in a cluster, showing front end FE, compute nodes C1 and C2, and storage node S1 attached to a RAID array]

• You can easily set up a Windows or open source NAS box and put it on the network. In the Linux world this is just a Linux box running Samba for Windows directory shares.
• You can also buy pre-configured "appliances" for disk space. These are often boxes that run a stripped-down Linux variant and have a bunch of disks stuffed into them, along with a web-based interface.

NAS Appliances
• Sun StorEdge 5210
• Dell PowerVault 745N
• Snap Server 4200
• Linksys, Iomega NAS (SOHO, combined with wireless and print server)
• NetApp (enterprise class)
• Emerging class: a NAS front end/gateway to a SAN

Storage Area Networks
• SANs are high performance, and you pay for it.
• A SAN has storage devices on a special network that carries only I/O traffic. The storage devices are connected to a set of servers, and all the servers share all the storage devices on the SAN.
• In effect each server is attached to two networks: one for communication with other hosts, the other reserved for communicating with storage devices.

[Figure: Storage Area Network]

SANs
• SANs let you add storage to a pool that can be shared by all servers connected to the SAN.
• This can be a hard problem to solve because several servers may access the same storage concurrently.
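To see why concurrent access is hard, here is a deliberately broken, hypothetical model in Python. The class and function names are illustrative only. Two servers each read the shared free-block list from the SAN disk into their own cache, then allocate from that cache without coordinating. When both read before either writes, they hand out the same blocks.

```python
from typing import Dict, List, Tuple


class NaiveServer:
    """A server that caches the shared free-block list and allocates locally."""

    def __init__(self, name: str, disk: Dict):
        self.name = name
        self.disk = disk                  # dict standing in for the shared SAN disk
        self.cached_free: List[int] = []

    def read_free_map(self) -> None:
        # Snapshot of the on-disk free list; it goes stale as soon as
        # another server allocates.
        self.cached_free = list(self.disk["free"])

    def allocate(self, filename: str, n_blocks: int) -> List[int]:
        # Take blocks from the (possibly stale) snapshot and write it back.
        blocks = self.cached_free[:n_blocks]
        self.cached_free = self.cached_free[n_blocks:]
        self.disk["free"] = list(self.cached_free)
        self.disk["files"][filename] = blocks   # last writer wins
        return blocks


def simulate_race(total_blocks: int = 1000) -> Tuple[List[int], List[int], Dict]:
    disk = {"free": list(range(total_blocks)), "files": {}}
    s1, s2 = NaiveServer("S1", disk), NaiveServer("S2", disk)
    # The troublesome interleaving: both servers read before either writes.
    s1.read_free_map()
    s2.read_free_map()
    foo_s1 = s1.allocate("Foo", 500)
    foo_s2 = s2.allocate("Foo", 200)
    return foo_s1, foo_s2, disk


if __name__ == "__main__":
    a, b, disk = simulate_race()
    print(len(set(a) & set(b)))        # 200 blocks handed to both servers
    print(len(disk["files"]["Foo"]))   # 200: S2's "Foo" overwrote S1's entry
    print(300 in disk["free"])         # True: a block S1 is using looks free
```

Both servers' files now share blocks 0 to 199. Blocks 200 to 499 are still in use by S1 but are recorded as free, so the next allocation will corrupt S1's data.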
• The original SANs sometimes had a single server attached to a single disk device to prevent simultaneous access.
• More recent SANs use a distributed filesystem to avoid concurrency problems.

Single Machine Filesystems

[Figure: server with attached disk]

• This is a fairly simple problem to solve because there is only one entity making requests of the disk ("give me 200 blocks").
• The server can keep track of block allocations and file names and make that information permanent by writing it to disk.

DFS

[Figure: Server 1 and Server 2 connected through an FC switch to a shared SAN disk]

• S1 asks for 500 disk blocks to hold a file named "Foo". At the same time S2 asks for 200 blocks to hold a file named "Foo". Which wins?
• How can we ensure that none of the blocks given to S1 are also given to S2?
• Effectively we need to serialize the requests to the SAN disk, and we need something to keep track of file names and block allocations that can be queried by all the servers.

Metadata
• A solution is to use metadata, "data about data". In effect it keeps track of things like file names and block allocations, just as a single-machine filesystem does.
• The metadata has to be available to all the servers that want to participate in the SAN, so it is typically written on the SAN itself.

[Figure: Server 1 and Server 2 connected through an FC switch to a metadata disk and two SAN disks]

Metadata Comms
• As an added twist, most implementations send the metadata over a physical network separate from the Fibre Channel network, usually Gigabit Ethernet. So every device in the SAN is usually connected to two networks.

[Figure: Server 1 and Server 2 connected both to a Gig E switch and to an FC switch; the FC switch connects two SAN disks and the metadata]

SAN Disks
• As you can see, the SAN "disks" are getting pretty complex.
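The metadata approach above can be sketched as a single metadata service that every server must consult before touching the SAN disk. In this hypothetical Python model, a lock serializes requests, so block allocations never overlap and a file name can be created only once. `MetadataController` is an illustrative name, not part of any real SAN product. The metadata is kept in memory here; a real implementation would persist it on the SAN and, as described above, usually exchange it over a separate Ethernet network.

```python
import threading
from typing import Dict, List, Tuple


class MetadataController:
    """Hypothetical metadata service shared by all servers on the SAN."""

    def __init__(self, total_blocks: int):
        self._lock = threading.Lock()
        self._free: List[int] = list(range(total_blocks))
        self._files: Dict[str, Tuple[str, List[int]]] = {}

    def create(self, server: str, filename: str, n_blocks: int) -> List[int]:
        # Only one request is handled at a time, so the free list and the
        # name table are never updated from two stale copies.
        with self._lock:
            if filename in self._files:
                owner = self._files[filename][0]
                raise FileExistsError(f"{filename!r} already created by {owner}")
            if n_blocks > len(self._free):
                raise OSError("not enough free blocks on the SAN volume")
            blocks = self._free[:n_blocks]
            self._free = self._free[n_blocks:]
            self._files[filename] = (server, blocks)
            return blocks


def race_for_foo(mdc: MetadataController) -> Dict[str, object]:
    """S1 and S2 both try to create "Foo" at the same time."""
    results: Dict[str, object] = {}

    def request(server: str, n_blocks: int) -> None:
        try:
            results[server] = mdc.create(server, "Foo", n_blocks)
        except FileExistsError as exc:
            results[server] = exc

    threads = [threading.Thread(target=request, args=("S1", 500)),
               threading.Thread(target=request, args=("S2", 200))]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results
```

Exactly one of S1 and S2 gets "Foo", and the other receives a `FileExistsError`. Which one wins depends on thread scheduling, but the outcome is always consistent. This answers the "which wins?" question above by making the metadata service the single point that decides.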
• In reality the "disks" are usually RAID enclosures.
• The RAID enclosure has an FC port, an Ethernet port, and a RAID controller for its disks.
• The SAN is really built on top of RAID building blocks.

SAN
This arrangement has many advantages:
• Servers and storage devices can be spread farther apart
• All the storage devices go into a single, shared pool
• Very high performance; can do serverless backups
• Storage device availability is not tied to the uptime of a host
• Can tie applications to specific storage types (ATA RAID for high volume, SCSI RAID for high-traffic transactional databases)
On the other hand, SANs probably won't go commodity any time soon, since they have limited applicability to the desktop. This means they will remain expensive and complex.

iSCSI
• The most common SAN network is Fibre Channel (FC). The protocol used is often SCSI.
• You can send SCSI over other network protocols. An emerging option is iSCSI, which carries SCSI commands over TCP/IP, typically on Gigabit Ethernet. This is slower, but it exploits the existing IP infrastructure and enables WAN SANs.
• Why recreate another, separate network for data traffic using a different hardware standard that requires new training? Why not just use well-understood IP networks that your people are already trained on?
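As a concrete picture of "SCSI over TCP/IP", the sketch below builds a SCSI READ(10) command and wraps it in the 48-byte Basic Header Segment of an iSCSI SCSI Command PDU, as laid out in RFC 3720. It is an illustration only. It builds bytes rather than talking to a target, and it skips login and session negotiation. The helper names and the example field values (task tag, sequence numbers, 512-byte blocks) are assumptions. A real initiator would send this header over a TCP connection, conventionally to port 3260.

```python
import struct


def read10_cdb(lba: int, n_blocks: int) -> bytes:
    """SCSI READ(10) command descriptor block: 10 bytes, big-endian fields."""
    # opcode 0x28 | flags | 4-byte LBA | group number | 2-byte length | control
    return struct.pack(">BBIBHB", 0x28, 0, lba, 0, n_blocks, 0)


def iscsi_scsi_command_bhs(cdb: bytes, lun: int, task_tag: int,
                           expected_len: int, cmd_sn: int,
                           exp_stat_sn: int) -> bytes:
    """48-byte Basic Header Segment of an iSCSI SCSI Command PDU
    (a read, with no immediate data)."""
    if len(cdb) > 16 or not 0 <= lun < 256:
        raise ValueError("CDB must fit in 16 bytes; LUN must be < 256 here")
    opcode = 0x01                      # SCSI Command
    flags = 0x80 | 0x40 | 0x01         # F (final) | R (read) | task attr: simple
    lun_field = lun << 48              # LUN in byte 1 (peripheral addressing)
    return struct.pack(
        ">BBHB3sQIIII16s",
        opcode, flags, 0,              # bytes 2-3 reserved
        0,                             # TotalAHSLength
        b"\x00\x00\x00",               # DataSegmentLength: no data follows
        lun_field,
        task_tag,                      # Initiator Task Tag
        expected_len,                  # Expected Data Transfer Length (bytes)
        cmd_sn, exp_stat_sn,
        cdb.ljust(16, b"\x00"),        # CDB occupies bytes 32-47
    )


if __name__ == "__main__":
    cdb = read10_cdb(lba=2048, n_blocks=8)
    bhs = iscsi_scsi_command_bhs(cdb, lun=3, task_tag=1,
                                 expected_len=8 * 512, cmd_sn=1, exp_stat_sn=1)
    print(len(bhs))        # 48
    print(bhs[:2].hex())   # 01c1
```

The SCSI command is unchanged; iSCSI only adds its own header in front and hands the result to TCP. An FC SAN does the same with FC frames. The extra header processing and the TCP/IP stack are where iSCSI's added latency comes from.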
• The drawback is that iSCSI has somewhat higher latency, which is an issue for disk access.

iSCSI
• iSCSI simply sends SCSI commands encapsulated inside TCP/IP, just as FC SANs send SCSI commands encapsulated inside FC frames.
• This can enable wide-area SANs (if you have the bandwidth and are willing to live with the latency), since IP can be routed; a SAN distributed across an entire state or country is possible.
• Putting the SCSI commands inside TCP/IP adds a couple of layers to the software stack, which unavoidably increases latency. Gigabit Ethernet also has less bandwidth than FC.
• Implementations seem a bit immature for the enterprise; iSCSI might only make a splash with 10 Gbit Ethernet.
• Another option is ATA over Ethernet (AoE).

Fibre Channel Costs
• Uses copper or optical fibre at 2+ Gbit/s
• Dell/EMC AX100 with 3 TB of SATA disks, one 8-port Fibre Channel switch, and one FC card = $16K
• FC cards approx. $500 each; 8-port FC switch approx. $2,500
• Filers with hundreds of TB are available if you've got the checkbook
• SANs often use SCSI disk arrays to maximize performance
• Optical FC has a range of up to a few km, so a SAN can be spread across a campus

SAN and Clusters

[Figure: front end FE and compute nodes C1 to C4 on an interconnect network, also attached to a Fibre Channel switching fabric with an FC switch, FC storage, and an FC jukebox]

• Very high performance: the compute nodes participate in the SAN and can share in the benefits of the high-speed storage network.

SAN and Clusters
• If you can afford this, you can get very high performance. You might have four network interfaces on each node: Gigabit Ethernet, InfiniBand, Gigabit Ethernet for metadata, and FC.
• May work well in situations with heavy database access or very heavy image processing (biological research, etc.).

SAN Example
• Apple sells XSAN, their OEM'd storage area network software; we are setting this up here.

[Figure: XSAN setup with hosts Beatnik and Bongo, an FC switch, RAID enclosures labeled "Raid 1" and "Raid2", and an Ethernet switch]

XSAN
• Each RAID enclosure of 14 disks may be divided up into multiple Logical Unit Numbers (LUNs).
• One LUN corresponds to one set of RAID disks. A single RAID box may have more than one collection of RAID sets, and they may be of different types.
• One of the RAID boxes has three LUNs: one set of seven disks in a RAID-5 configuration, one set of five disks in a RAID-5 configuration, and one set of two disks in a RAID-1 configuration.

XSAN
• XSAN builds "volumes" (virtual disks) out of "storage pools".
• A storage pool is simply a collection of one or more LUNs, and a volume consists of one or more storage pools.

XSAN
• The LUNs are themselves used as RAID elements: XSAN treats each of them as a disk and does a RAID-0 across them.
• This means that LUNs in the same storage pool should be the same size.

FC Switch Config
• The FC switch requires some configuration; this is done via a web-based interface. It's not quite as easy as plug-and-go.
• "Initiators" are computers, and "targets" are RAID enclosures.

Non-Apple
• Non-Apple computers can participate in the SAN; there are Linux implementations of the XSAN software available from third parties.
• Each computer participating in the SAN must have the XSAN software. The edu price is about $500 per copy (more for Linux).

Summary
• Buy some disk
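The LUN, storage pool, and volume layering described in the XSAN slides can be sketched as a small capacity model. This is a hypothetical Python illustration, not XSAN's actual sizing logic. It assumes identical disks; the 400 GB size is borrowed from the Promise example purely for illustration. It counts one disk's worth of parity per RAID-5 LUN and mirrored pairs for RAID-1. It also assumes that, as in any RAID-0 stripe, each LUN in a pool contributes only as much space as the smallest LUN. The LUN layout is the 14-disk enclosure described above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Lun:
    name: str
    raid_level: int      # 5 or 1
    disks: int
    disk_gb: int         # assumed identical disks

    def usable_gb(self) -> int:
        if self.raid_level == 5:
            return (self.disks - 1) * self.disk_gb   # one disk's worth of parity
        if self.raid_level == 1:
            return (self.disks // 2) * self.disk_gb  # every block stored twice
        raise ValueError(f"unsupported RAID level {self.raid_level}")


def pool_usable_gb(pool: List[Lun]) -> int:
    """RAID-0 across the LUNs: the smallest LUN limits every member."""
    return len(pool) * min(lun.usable_gb() for lun in pool)


def volume_usable_gb(pools: List[List[Lun]]) -> int:
    """A volume is built from one or more storage pools (assumed additive)."""
    return sum(pool_usable_gb(pool) for pool in pools)


if __name__ == "__main__":
    # The 14-disk enclosure from the slides; 400 GB disks are an assumption.
    lun_a = Lun("7-disk RAID-5", 5, 7, 400)
    lun_b = Lun("5-disk RAID-5", 5, 5, 400)
    lun_c = Lun("2-disk RAID-1", 1, 2, 400)
    for lun in (lun_a, lun_b, lun_c):
        print(lun.name, lun.usable_gb())    # 2400, 1600, 400
    print(pool_usable_gb([lun_a, lun_b]))   # 3200, not 4000
```

Under these assumptions, putting the 2,400 GB and 1,600 GB LUNs in one pool strands 800 GB. That is the practical reason LUNs in the same storage pool should be the same size.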