UTSA Assessment Handbook


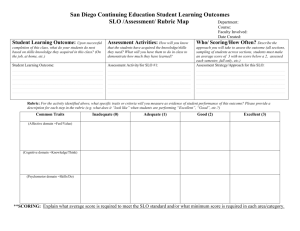

Student Learning Outcome Assessment Handbook
February 2014

Assessment is a systematic and ongoing method of gathering, analyzing, and using information for the purpose of developing and implementing strategies that will help improve student learning outcomes.

Contents

DEVELOP AN ASSESSMENT PLAN
  *New Assessment Plan Form
  Identify programmatic student learning outcomes (SLO)
    SLO Name
    Action verb
    Dimensions
  Identify appropriate assessment methods
    Artifact and instrument
    Criterion for success
ASSESS WHAT STUDENTS HAVE LEARNED
  *Results Report
  Collect data
    Sample Size
    Data collection frequency
  Report Results
UTILIZE RESULTS TO IMPROVE STUDENT LEARNING
  *Use of Results for Improvement Report
  Analyze and interpret results
  Develop an action plan
CLOSE THE LOOP

Student Learning Outcomes (SLO)

Observable student behaviors/actions which demonstrate that intended learning outcomes have occurred: what students should know, do, or feel once they have completed a program of study. An SLO should be specific and measurable and indicate its relevant dimensions.

Dimensions – key aspects of the SLO; e.g., an SLO for effective writing might have organization, content, synthesis of ideas, etc. as dimensions.
SLO Name – 2-3 word label

EXAMPLES:

SLO Name: Concepts/Theory
SLO: Students will demonstrate understanding of key concepts and theories in health promotion.

SLO Name: Secondary Research
SLO: Students will be able to integrate information from different types of secondary sources to support a thesis on a research topic.

SLO Name: Composition/Writing
SLO: Students will be able to compose multiple-page essays on topics related to health promotion that meet college-level academic standards for content, organization, style, grammar, mechanics, and format.
ACTION VERBS

Concrete verbs such as “define,” “apply,” or “analyze” are more helpful for assessment than verbs such as “be exposed to,” “understand,” “know,” or “be familiar with.”

Cognitive Learning Action Verbs:

Knowledge – to recall or remember facts without necessarily understanding them
  arrange, define, duplicate, label, list, memorize, name, order, recognize, relate, recall, reproduce, tell, describe, identify, show, collect, examine, tabulate, quote

Comprehension – to understand and interpret learned information
  classify, describe, discuss, explain, express, interpret, contrast, predict, associate, distinguish, estimate, differentiate, extend, translate, review, restate, locate, recognize, report

Application – to put ideas and concepts to work in solving problems
  apply, choose, demonstrate, dramatize, employ, illustrate, interpret, operate, practice, schedule, sketch, solve, use, calculate, complete, show, examine, modify, relate, change, experiment, discover

Analysis – to break information into its components to see interrelationships and ideas
  analyze, appraise, calculate, categorize, compare, contrast, criticize, differentiate, discriminate, distinguish, examine, experiment, question, test, separate, order, connect, classify, arrange, divide, infer

Synthesis – to use creativity to compose and design something original
  arrange, assemble, collect, compose, construct, create, design, develop, formulate, manage, organize, plan, prepare, propose, set up, rewrite, integrate, generalize

Evaluation – to judge the value of information based on established criteria
  appraise, argue, assess, attach, defend, judge, predict, rate, support, evaluate, recommend, convince, conclude, compare, summarize

Based on Bloom’s Taxonomy, from http://www.gavilan.edu/research/spd/Writing-Measurable-Learning-Outcomes.pdf

Assessment Methods

Technique used to measure student attainment of stated learning outcomes.
Should be a direct method that indicates the artifact measured and the measurement tool.

Artifact (evidence of student learning) – written assignment, test, presentation, portfolio, activity, etc.
Instrument (means of evaluating student learning) – rubric or embedded questions

Direct Methods – provide tangible and visible evidence of whether a student has achieved the intended knowledge, skill, or belief. Course grades are not sufficient because they do not identify specific strengths/weaknesses.

EXAMPLES:

Learning Outcome: Students will be able to compose multiple-page essays on topics related to health promotion that meet college-level academic standards for content, organization, style, grammar, mechanics, and format.
Assessment Method: A rubric that measures various dimensions of writing style and content will be used to evaluate the final research paper in a representative sample of students from upper division courses.

Learning Outcome: Students will demonstrate understanding of key concepts and theories in health promotion.
Assessment Method: Final exams in courses x, y, z will include embedded test questions on topics such as defining health promotion, the relationship between health and behavior, theory in health promotion, difference between qualitative and quantitative research methods, and application of theory in health promotion.

Criterion for Success

The target level of knowledge, skill, or attitude students are expected to display through the assessment method employed.

Baseline – #/% who have achieved particular mastery level
Benchmark – a standard of excellence used for comparison
Target – #/% expected to achieve mastery level

EXAMPLES:
For embedded test questions: 75% or more of the students will correctly answer each question related to the outcome.
For rubrics: At least 75% of students will demonstrate a proficiency of (3) or higher on a scale of 1 to 5 (5 = Excellent, …, 3 = Good, …, 1 = Poor) on each rubric dimension.

In other words, results data will be disaggregated (see examples below). See “What if all criteria were met?” under Reporting Results for an example of reporting when the established criterion for success has been met.

Collecting Data

Sample Size
The number or percentage of students assessed from the entire population of majors.
Sample – selected portion of the population of students in the program
While data is often collected in relevant classes, it is not the class that is being assessed but the program. That is, data collected in a single course is essentially a “convenience” sample of the population of students in a program.
EXAMPLE: 147 students out of 766 students in the program.

Data Collection Frequency
All student outcomes must be assessed each year. Data is collected in the Fall and/or Spring. If a class that is typically used for data collection will not be offered in an academic year, a different strategy for data collection should be used in order to maintain annual results for a particular SLO.

Reporting Results

Finding
The determination of whether aspects of this student learning outcome could be improved.
Acceptable – the finding meets the expected level of student performance
Needs Improvement – the finding indicates student performance could be improved

In the past, assessment reports indicated whether the criterion was Met/Not Met. This led to a perception that (a) it was important for students to meet the criterion and (b) SLOs and assessment methods should be developed so that students would meet the criterion (e.g., set the bar low, use vague/ambiguous measures, etc.). At the same time, there was a perception that if the criterion had been met, no action plan was needed. Since the goal of assessment is to identify opportunities for improving student learning outcomes, the options now focus on which SLO could be improved (not whether students “passed” or “failed”).

EXAMPLES:

Some disaggregated results were lower than the established criterion:
Overall, 75% or more of students demonstrated proficiency in identifying a problem statement (80%), overall content (75%), and citing references (92%), and less than 75% demonstrated proficiency in organizational structure (60%), synthesis of ideas (35%), and clarity of writing (72%).

All disaggregated results met the established criterion, but some were lower than others:
75% or more of students demonstrated proficiency in all areas – problem statement (85%), flow of the report (76%), content (81%), synthesis of ideas (75%), clarity of writing (78%), citations (92%). Thus, the lowest score was in the area of synthesis of ideas. You may decide that this is acceptable OR you may decide that you want to try to improve the lowest score (even though it met the criterion).

Embedded Objective Test Questions

NOTE: Data tables might be included as an attachment, but would not be reported in TracDat.

Criterion for Success: An aggregate average of 75% or more of the students will correctly answer questions related to the outcome.
Topic                                        Test item   Students answering correctly
Relationship between behavior and health     1           80%
Theory in health promotion                   2           60%
Difference between qual/quant methods        3           35%
Defining health promotion                    4           92%
Application of theory in health promotion    5           75%

Results: 75% or more of students gave the correct answer for questions related to the relationship between behavior and health (80%), defining health promotion (92%), and application of theory in health promotion (75%). Less than 75% gave the correct answer for questions related to theory in health promotion (60%) and the difference between qualitative and quantitative research methods (35%). In particular, over 90% of students gave the correct answer for the question about defining health promotion, but only 35% of students gave the correct answer for the question about the difference between qualitative and quantitative research methods.

Finding: Needs Improvement

Alternatively, if there are a number of embedded questions:

Topic                                   Test items           Average proportion answering correctly
Principles of health promotion          1, 2, 3, 4, 5        80%
Theories of behavior and health         6, 7, 8, 9, 10       60%
Research Methods in health promotion    11, 12, 13, 14, 15   35%
*adapted from Suskie, L. (2009). Assessing student learning.

Results: On average, over 75% (specifically, 80%) of students correctly answered test items related to principles of health promotion and less than 75% (specifically, 60%) correctly answered test items related to theories of behavior and health. Only 35% of students correctly answered test items related to research methods in health promotion.

Finding: Needs Improvement

Rubrics

Sample Rubric for Assessing a Literature Review: http://libguides.cmich.edu/content.php?pid=148433&sid=1292217

Rubric dimension (from sample above)   Students achieving proficiency of (3) or higher on scale of 1-5
1. Problem statement                   80%
2. Organization                        60%
3. Content                             75%
4. Synthesis of ideas                  35%
5. Clarity                             72%
6. Citations                           92%

NOTE: The above data table would be for your records and not reported in TracDat.

Results: Overall, 75% or more of students demonstrated proficiency in identifying a problem statement (80%), overall content (75%), and citing references (92%), and less than 75% demonstrated proficiency in organizational structure (60%), synthesis of ideas (35%), and clarity of writing (72%). Thus, students were most proficient in citing references and least proficient in synthesizing ideas.

Finding: Needs Improvement

Alternatively, if there are a number of rubric dimensions (probably more than in this example):

Criterion for Success: An aggregate average of 75% or more of the students will demonstrate a proficiency of (3) or higher on a scale of 1 to 5 (5 = Excellent, …, 3 = Good, …, 1 = Poor) on rubric dimensions related to content and style.

Topic                       Rubric dimensions   Average proportion demonstrating proficiency
Content (i.e., invention)   1, 3, 5             80%
Style (i.e., arrangement)   2, 4, 6             60%
*adapted from Suskie, L. (2009). Assessing student learning.

Results: On average, over 75% (specifically, 80%) of students gave a clear problem statement, covered appropriate content, and gave a succinct, precise and insightful conclusion. However, fewer than 75% (specifically, 60%) achieved proficiency in stylistic elements including organization, clarity, and appropriate citation format.

Finding: Needs Improvement

What if all criteria were met?

Rubric dimension (from sample above)   Students achieving proficiency of (3) or higher on scale of 1-5
1. Problem statement                   85%
2. Flow of the report                  76%
3. Content                             81%
4. Synthesis of ideas                  75%
5. Clarity of writing                  78%
6. Citations                           92%

Results: 75% or more of students demonstrated proficiency in all areas – problem statement (85%), flow of the report (76%), content (81%), synthesis of ideas (75%), clarity of writing (78%), citations (92%). Thus, the lowest score was in the area of synthesis of ideas.

Finding: Needs Improvement

Alternatively, if there are a number of rubric dimensions (probably more than in this example):

Criterion for Success: An aggregate average of 75% or more of the students will demonstrate a proficiency of (3) or higher on a scale of 1 to 5 (5 = Excellent, 3 = Good, 1 = Poor) on rubric dimensions related to content and style.

Topic                       Rubric dimensions   Average proportion demonstrating proficiency
Content (i.e., invention)   1, 3, 5             87%
Style (i.e., arrangement)   2, 4, 6             75%
*adapted from Suskie, L. (2009). Assessing student learning.

Results: On average, over 85% of students gave a clear problem statement, covered appropriate content, and gave a succinct, precise and insightful conclusion. Though 75% or more of students demonstrated proficiency in all areas, the lowest score was in stylistic elements including organization, clarity, and appropriate citation format.

Finding: Needs Improvement

USE OF RESULTS FOR IMPROVEMENT

Action Plan
Modifications or revisions that will be made to the academic program, curriculum, teaching, etc. to improve student learning. Should be based on the assessment results.

CLOSE THE LOOP

Indicate how the action plan has been implemented and discuss implications for student learning.
1. Summarize trends in assessment results for the past two years. Discuss any notable changes.
2. Discuss improvement strategies that have been implemented as a result of assessment results. What barriers/opportunities were encountered when implementing the strategies?
3. List improvement strategies that will be implemented as a result of assessment results (SP14, SP16, SP18 reports).
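
Because an action plan should be based on the assessment results, it can help to screen disaggregated results against the criterion before drafting one. The Python below is a minimal sketch of that screening, using the percentages from the first sample rubric table under Reporting Results and the 75% criterion. The function name `screen_dimensions` and the dictionary layout are illustrative assumptions; they are not part of TracDat or of any handbook form.

```python
# Illustrative sketch only: names and data layout are assumptions, not a TracDat API.
CRITERION = 75  # percent of students expected to score 3 or higher on the 1-5 rubric scale

# Percent of students achieving proficiency, taken from the handbook's sample rubric table.
rubric_results = {
    "Problem statement": 80,
    "Organization": 60,
    "Content": 75,
    "Synthesis of ideas": 35,
    "Clarity": 72,
    "Citations": 92,
}


def screen_dimensions(results, criterion=CRITERION):
    """Split dimensions into those meeting the criterion and those below it,
    and identify the lowest-scoring dimension overall."""
    met = {name: pct for name, pct in results.items() if pct >= criterion}
    below = {name: pct for name, pct in results.items() if pct < criterion}
    lowest = min(results, key=results.get)
    return met, below, lowest


met, below, lowest = screen_dimensions(rubric_results)
print("Met criterion:", ", ".join(f"{n} ({p}%)" for n, p in met.items()))
print("Below criterion:", ", ".join(f"{n} ({p}%)" for n, p in below.items()))
print("Lowest-scoring dimension:", lowest)
```

Even when no dimension falls below the criterion, the lowest-scoring dimension is still reported, since the handbook notes that a program may choose to improve the lowest score even though it met the criterion.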
Assessment Forms

For submitting new plans, results, and use of results reports to be entered into TracDat:
1. New Assessment Plan form
2. Results Report form
3. Use of Results for Improvement form

Annual Assessment report – generated from TracDat for the VPIE assessment portfolio, annual faculty assessment forums, and external audiences including SACS and THECB.

New Assessment Plan Form (document template available on VPIE website)
Instruction Links: Student Learning Outcome Name, Student Learning Outcome, Assessment Method, Criterion for Success, Data Collection Frequency

Results Report (Word .doc available on VPIE website)
Sample Size, Results, Finding

Use of Results for Improvement Report (Word .doc available on VPIE website)

Annual Assessment report: generated from TracDat to be archived in the VPIE Assessment Portfolio