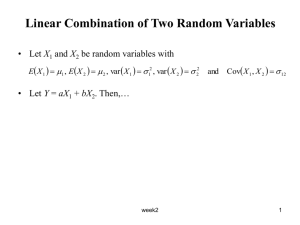

Week2

The One-Factor Model
• A statistical model is used to describe data. It is an equation that shows the dependence of the response variable upon the levels of the treatment factors.
• Let $Y_{ij}$ be a random variable that represents the response obtained on the j-th observation of the i-th treatment.
• Let $\mu$ denote the overall expected response.
• The expected response for an experimental unit in the i-th treatment group is $\mu_i = \mu + \tau_i$.
• $\tau_i$ is the deviation of the i-th treatment mean from the overall mean; it is referred to as the effect of treatment i.
STA305 week2 1

• The model is $Y_{ij} = \mu + \tau_i + \varepsilon_{ij}$, where $\varepsilon_{ij}$ is the deviation of the individual's response from the treatment group mean.
• $\varepsilon_{ij}$ is known as the random or experimental error.
STA305 week2 2

Fixed Effects versus Random Effects
• In some cases the treatments are specifically chosen by the experimenter from all possible treatments.
• The conclusions drawn from such an experiment apply only to these treatments and cannot be generalized to other treatments not included in the experiment.
• This is called a fixed effects model.
• In other cases, the treatments included in the experiment can be regarded as a random selection from the set of all possible treatments.
• In this situation, conclusions based on the experiment can be generalized to other treatments.
• When the treatments are a random sample, the treatment effects $\tau_i$ are random variables.
• This model is called a random effects model or a components of variance model.
• The random effects model will be studied after the fixed effects model.
STA305 week2 3

More about the Fixed Effects Model
• As specified in slide (2), the model is $Y_{ij} = \mu + \tau_i + \varepsilon_{ij}$, where the $\varepsilon_{ij}$ are i.i.d.
with distribution $N(0, \sigma^2)$.
• It follows that the response of experimental unit j in treatment group i, $Y_{ij}$, is normally distributed with
$$E(Y_{ij}) = \mu + \tau_i, \qquad \operatorname{Var}(Y_{ij}) = \operatorname{Var}(\varepsilon_{ij}) = \sigma^2.$$
• In other words, $Y_{ij} \sim N(\mu + \tau_i, \sigma^2)$.
STA305 week2 4

Treatment Effects
• Recall that treatment effects have been defined as deviations from the overall mean, and so the model can be parameterized so that
$$\sum_{i=1}^{a} r_i \tau_i = 0.$$
• In the special case where $r_1 = r_2 = \cdots = r_a = r$, this condition reduces to $\sum_{i=1}^{a} \tau_i = 0$.
• The hypothesis that there is no treatment effect can be expressed mathematically as:
$H_0: \mu_1 = \mu_2 = \cdots = \mu_a$
$H_a$: not all $\mu_i$ are equal
• This can be expressed equivalently in terms of the $\tau_i$:
$H_0: \tau_1 = \tau_2 = \cdots = \tau_a = 0$
$H_a$: not all $\tau_i$ are equal to 0
STA305 week2 5

'Dot' Notation
• "Dot" notation will be used to denote treatment and overall totals, as well as treatment and overall means.
• The sum of all observations in the i-th treatment group is denoted
$$Y_{i\cdot} = \sum_{j=1}^{r_i} Y_{ij} = Y_{i1} + Y_{i2} + \cdots + Y_{i r_i}.$$
• Similarly, the sum of all responses in all treatment groups is denoted
$$Y_{\cdot\cdot} = \sum_{i=1}^{a} \sum_{j=1}^{r_i} Y_{ij}.$$
• The treatment and overall means are
$$\bar{Y}_{i\cdot} = \frac{Y_{i\cdot}}{r_i} = \frac{1}{r_i} \sum_{j=1}^{r_i} Y_{ij}, \qquad \bar{Y}_{\cdot\cdot} = \frac{Y_{\cdot\cdot}}{n} = \frac{1}{n} \sum_{i=1}^{a} \sum_{j=1}^{r_i} Y_{ij},$$
where $n = \sum_{i=1}^{a} r_i$ is the total number of observations.
STA305 week2 6

Rationale for Analysis of Variance
• Consider all of the data from the a treatment groups as a whole.
• The variability in the data may come from two sources:
1) treatment means differ from the overall mean; this is called between group variability.
2) within a given treatment group, individual observations differ from the group mean; this is called within group variability.
STA305 week2 7

Total Sum of Squares
• Total variation in the data set as a whole is measured by the total sum of squares. It is given by
$$SS_T = \sum_{i=1}^{a} \sum_{j=1}^{r_i} \left(Y_{ij} - \bar{Y}_{\cdot\cdot}\right)^2.$$
• Each deviation from the overall sample mean can be expressed as the sum of 2 parts:
1) deviation of the observation from the group mean.
2) deviation of the group mean from the overall mean.
• In other words…
• The SST can then be written as…
STA305 week2 8

Expected Sums of Squares
• Finding the expected value of the sums of squares for error and treatment will lead us to a test of the hypothesis of no treatment effect, i.e., $H_0: \tau_1 = \tau_2 = \cdots = \tau_a = 0$.
• We start by finding the expected value of SSE….
• We continue with the expected value of SSTreat.
STA305 week2 9

Mean Squares
• As we have seen in the calculation above, $MS_E = SS_E/(n - a)$ is an unbiased estimator of $\sigma^2$.
• The MSE is called the mean square for error.
• The degrees of freedom associated with SSE are n − a, and it follows that $E(MS_E) = \sigma^2$.
• The mean square for treatment is defined to be $MS_{Treat} = SS_{Treat}/(a - 1)$.
• The expected value of MSTreat is
$$E(MS_{Treat}) = \sigma^2 + \frac{1}{a-1} \sum_{i=1}^{a} r_i \tau_i^2.$$
STA305 week2 10

Hypothesis Testing
• Recall that our goal is to test whether there is a treatment effect.
• The hypothesis of interest is
$H_0: \tau_1 = \tau_2 = \cdots = \tau_a = 0$
$H_a$: not all $\tau_i$ are equal to 0
• Notice that if $H_0$ is true, then
$$E(MS_{Treat}) = \sigma^2 + \frac{1}{a-1} \sum_{i=1}^{a} r_i \tau_i^2 = \sigma^2 + \frac{1}{a-1} \sum_{i=1}^{a} r_i \cdot 0 = \sigma^2 = E(MS_E).$$
• On the other hand, if $H_0$ is false, then at least one $\tau_i \ne 0$, in which case $\sum_{i=1}^{a} r_i \tau_i^2 > 0$ and so $E(MS_{Treat}) > E(MS_E)$.
• On average, then, the ratio $MS_{Treat}/MS_E$ should be close to 1 if $H_0$ is true, and larger otherwise.
• We use this to develop a formal test.
STA305 week2 11

Cochran's Theorem
• Let $Z_1, Z_2, \ldots, Z_n$ be i.i.d. $N(0, 1)$.
• Suppose that $\sum_{i=1}^{n} Z_i^2 = Q_1 + Q_2 + \cdots + Q_s$, where $Q_j$ has $v_j$ d.f.
• A necessary and sufficient condition for the $Q_j$ to be independent of one another, and for $Q_j \sim \chi^2(v_j)$, is that $\sum_{j=1}^{s} v_j = n$.
• Cochran's theorem implies that, when $H_0$ is true, $SS_E/\sigma^2$ and $SS_{Treat}/\sigma^2$ have independent $\chi^2$ distributions with n − a and a − 1 d.f., respectively.
• Recall: if $X_1 \sim \chi^2(v_1)$ and $X_2 \sim \chi^2(v_2)$ are independent random variables, then
$$\frac{X_1/v_1}{X_2/v_2} \sim F(v_1, v_2).$$
STA305 week2 12

Hypothesis Test for Treatment Effects
• Cochran's theorem and the result just stated provide the tools to construct a formal hypothesis test of no treatment effects.
• The hypotheses again are:
$H_0: \tau_1 = \tau_2 = \cdots = \tau_a = 0$
$H_a$: not all $\tau_i$ are equal to 0
• The test statistic is $F_{obs} = MS_{Treat}/MS_E$.
• Note that if $H_0$ is true, then $F_{obs} \sim F(a - 1, n - a)$.
• So the P-value is $P(F(a - 1, n - a) > F_{obs})$.
• We reject $H_0$ in favor of $H_a$ if the P-value is less than $\alpha$.
• Alternatively, reject $H_0$ in favor of $H_a$ if $F_{obs} > F_{\alpha}(a - 1, n - a)$, where $F_{\alpha}(a - 1, n - a)$ is the upper $100 \times \alpha$ percentage point of the $F(a - 1, n - a)$ distribution.
STA305 week2 13

Analysis of Variance Table
• The results of the calculations and the hypothesis testing are best summarized in an analysis of variance table.
• The ANOVA table, assembled from the quantities defined in the preceding slides, is given below.

| Source | d.f. | Sum of Squares | Mean Square | F |
|---|---|---|---|---|
| Treatment | a − 1 | SSTreat | MSTreat = SSTreat/(a − 1) | MSTreat/MSE |
| Error | n − a | SSE | MSE = SSE/(n − a) | |
| Total | n − 1 | SST | | |

STA305 week2 14

Estimable Functions of Parameters
• A function of the model parameters is estimable if and only if it can be written as the expected value of a linear combination of the response variables.
• In other words, every estimable function is of the form
$$E\left[\sum_{i=1}^{a} \sum_{j=1}^{r_i} c_{ij} Y_{ij}\right],$$
where the $c_{ij}$ are constants.
• It can be shown from the previous sections that $\mu$, $\mu_i$, and $\sigma^2$ are estimable.
STA305 week2 15

Example - Effectiveness of Three Methods for Teaching a Programming Language
• A study was conducted to determine whether there is any difference in the effectiveness of 3 methods of teaching a particular programming language.
• The factor levels (treatments) are the three teaching methods:
1) on-line tutorial
2) personal attention of instructor plus hands-on experience
3) personal attention of instructor, but no hands-on experience
• Replication and Randomization: 5 volunteers were randomly allocated to each of the 3 teaching methods, for a total of 15 study participants.
• Response Variable: After the programming instruction, a test was administered to determine how well the students had learned the programming language.
• Research Question: Do the data provide any evidence that the instruction methods differ with respect to test score?
• The data and the solutions are….
STA305 week2 16

Conducting an ANOVA in SAS
• There are several procedures in SAS that can be used to do an analysis of variance.
• PROC GLM (for general linear model) will be used in this course.
• To do the analysis for the example on slide 16, start by creating a SAS dataset:

    data teach ;
    /* one observation per line: teaching method (1-3), then test score */
    input method score ;
    cards ;
    1 73
    1 77
    .....
    3 71
    ;
    run ;

STA305 week2 17

• Use this dataset to conduct an ANOVA using the following SAS code:

    proc glm data = teach ;
    class method ;                /* treat method as a categorical factor */
    model score = method / ss3 ;  /* one-way ANOVA, Type III sums of squares */
    run ;
    quit ;

• The output produced by this procedure is given in the next slide.
STA305 week2 18

STA305 week2 19

Estimating Model Parameters
• The ANOVA indicates whether there is a treatment effect; however, it does not provide any information about individual treatments or how treatments compare with each other.
• To better understand the outcome of the experiment, it is useful to estimate the mean response for each treatment group.
• It is also useful to obtain an estimate of how much variability there is within each treatment group.
• This involves estimating model parameters.
STA305 week2 20

Variability
• Recall that on slides (9 and 10) we showed that the MSE is an unbiased estimator of $\sigma^2$.
• Further, Cochran's Theorem was used to show that $SS_E/\sigma^2 \sim \chi^2(n - a)$.
• We can use this result to calculate a $100 \times (1 - \alpha)\%$ confidence interval for $\sigma^2$.
• The CI is given by
$$\left( \frac{SS_E}{\chi^2_{1-\alpha/2}(n-a)}, \; \frac{SS_E}{\chi^2_{\alpha/2}(n-a)} \right),$$
where $\chi^2_{1-\alpha/2}(n - a)$ and $\chi^2_{\alpha/2}(n - a)$ are the upper and lower percentage points (that is, the $1 - \alpha/2$ and $\alpha/2$ quantiles) of the $\chi^2$ distribution with n − a d.f., respectively.
STA305 week2 21

Overall Mean
• As discussed in the beginning, the overall expected value is $\mu$.
• Show that $\bar{Y}_{\cdot\cdot}$ is an unbiased estimator of $\mu$…
• The variance of $\bar{Y}_{\cdot\cdot}$ is $\sigma^2/n$.
• So the $100 \times (1 - \alpha)\%$ confidence interval for $\mu$ is:
$$\bar{Y}_{\cdot\cdot} \pm t_{\alpha/2}(n-a) \sqrt{\frac{MS_E}{n}}$$
• Further, a $100 \times (1 - \alpha)\%$ confidence interval for $\mu_i$ is:
$$\bar{Y}_{i\cdot} \pm t_{\alpha/2}(n-a) \sqrt{\frac{MS_E}{r_i}}$$
• It follows that $\bar{Y}_{i\cdot} - \bar{Y}_{\cdot\cdot}$ is an unbiased estimator of the effect of treatment i, $\tau_i$.
STA305 week2 22

Differences between Treatment Groups
• Differences between specific treatment groups will be important from the researcher's point of view.
• The expected difference in response between treatment groups i and j is $\mu_i - \mu_j = \tau_i - \tau_j$.
• Since treatment groups are independent of each other, it follows that
$$\bar{Y}_{i\cdot} - \bar{Y}_{j\cdot} \sim N\left(\tau_i - \tau_j, \; \sigma^2\left(\frac{1}{r_i} + \frac{1}{r_j}\right)\right).$$
• Therefore, a $100 \times (1 - \alpha)\%$ confidence interval for $\tau_i - \tau_j$ is:
$$\bar{Y}_{i\cdot} - \bar{Y}_{j\cdot} \pm t_{\alpha/2}(n-a) \sqrt{MS_E\left(\frac{1}{r_i} + \frac{1}{r_j}\right)}$$
STA305 week2 23

Example - Methods for Teaching Programming Language Cont'd
• Back to the example of three teaching methods and their effect on programming test score.
• Based on the ANOVA developed earlier, we found a significant difference between the three methods.
• Which method had the highest average?
• What is a 95% CI for the mean difference in test scores for the 2 instructor-based methods?
STA305 week2 24

Comparisons Among Treatment Means
• As mentioned above, ANOVA will indicate whether there is a significant effect of treatments overall, but it does not indicate which treatments are significantly different from each other.
• There are a number of methods available for making pairwise comparisons of treatment means.
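
The confidence interval for $\tau_i - \tau_j$ stated above rests on a short variance calculation that the slides leave implicit. The following is a sketch under the fixed effects model assumptions (independent $\varepsilon_{ij} \sim N(0, \sigma^2)$); the index k and the pivot T are notation introduced here for illustration, not taken from the slides.

$$
\begin{aligned}
\operatorname{Var}(\bar{Y}_{i\cdot}) &= \frac{1}{r_i^2} \sum_{k=1}^{r_i} \operatorname{Var}(Y_{ik}) = \frac{\sigma^2}{r_i}, \\
\operatorname{Var}(\bar{Y}_{i\cdot} - \bar{Y}_{j\cdot}) &= \frac{\sigma^2}{r_i} + \frac{\sigma^2}{r_j} = \sigma^2\left(\frac{1}{r_i} + \frac{1}{r_j}\right), \\
T &= \frac{(\bar{Y}_{i\cdot} - \bar{Y}_{j\cdot}) - (\tau_i - \tau_j)}{\sqrt{MS_E\left(\frac{1}{r_i} + \frac{1}{r_j}\right)}} \sim t(n-a).
\end{aligned}
$$

The second line uses the independence of the two group means. The third replaces the unknown $\sigma^2$ by $MS_E$, which is independent of the group means and satisfies $(n-a)\,MS_E/\sigma^2 \sim \chi^2(n-a)$. Solving $|T| \le t_{\alpha/2}(n-a)$ for $\tau_i - \tau_j$ gives the interval. In the teaching example, r = 5, a = 3 and n = 15, so the interval would use $t_{0.025}(12)$ and the standard error $\sqrt{2\,MS_E/5}$; the value of $MS_E$ comes from the ANOVA output, which is not reproduced here.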
STA305 week2 25

Least Significant Difference (LSD)
• This method tests the hypothesis that all treatment pairs have the same mean against the alternative that at least one pair differs; that is, the hypotheses are:
$H_0: \mu_i - \mu_j = 0$ for all i, j
$H_a: \mu_i - \mu_j \ne 0$ for at least one pair i, j
• In testing the difference between any two specific means, reject the null hypothesis if:
$$\left|\bar{Y}_{i\cdot} - \bar{Y}_{j\cdot}\right| > t_{\alpha/2}(n-a) \sqrt{MS_E\left(\frac{1}{r_i} + \frac{1}{r_j}\right)}$$
• In the case where the design is balanced and $r_i = r$ for all i, the condition above becomes:
$$\left|\bar{Y}_{i\cdot} - \bar{Y}_{j\cdot}\right| > t_{\alpha/2}(n-a) \sqrt{\frac{2\,MS_E}{r}}$$
STA305 week2 26

• In other words, the smallest difference between the means that would be considered statistically significant is:
$$LSD = t_{\alpha/2}(n-a) \sqrt{\frac{2\,MS_E}{r}}$$
• This quantity, LSD, is called the least significant difference.
• The LSD method requires that the difference between each pair of means be compared to the LSD.
• In cases where the absolute difference is greater than the LSD, we conclude that the treatment means differ.
STA305 week2 27

Important Notes
• As in any situation where a large number of significance tests are conducted, the possibility of finding a large difference due to chance alone increases.
• Therefore, when the number of treatment groups is large, the probability of making this type of error is relatively large.
• In other words, the probability of committing at least one Type I error will be increased above $\alpha$.
• Further, although the ANOVA F-test might find a significant treatment effect, the LSD method might conclude that there are no 2 treatment means that are significantly different from each other.
• This is because the ANOVA F-test considers the overall trend of the effect of treatment on outcome, and is not restricted to pairwise comparisons.
STA305 week2 28

Other Methods for Pairwise Comparisons
• Other methods for conducting pairwise comparisons are available.
• The methods that are implemented in PROC GLM in SAS include:
– Bonferroni
– Duncan's Multiple Range Test
– Dunnett's procedure
– Scheffe's method
– Tukey's test
– several others
• Chapter 4 of Dean & Voss discusses some of these methods.
STA305 week2 29

Pairwise Comparisons in SAS
• Pairwise comparisons can be requested by including a means statement.
• The code below requests means with LSD comparison:

    proc glm data = teach ;
    class method ;
    model score = method / ss3 ;
    means method / lsd cldiff ;   /* LSD comparisons with CIs for differences */
    run ;

• The part of the output containing the pairwise comparisons is shown in the next slide.
STA305 week2 30

STA305 week2 31

STA305 week2 32

Contrasts
• The ANOVA test indicates only whether there is an overall trend for the treatment means to differ, and does not indicate specifically which treatments are the same, which are different, etc.
• In the last few slides we looked at pairwise comparisons between treatment means.
• However, comparisons that are of interest to the researcher may include more than just two groups. They can be linear combinations of means.
STA305 week2 33

Example - Does Food Decrease Effectiveness of Pain Killers?
• Researchers at a pain clinic want to know whether the effectiveness of two leading pain killers is the same when taken on an empty stomach as when taken with food.
• A study with four treatment groups was designed:
1. aspirin with no food
2. aspirin with food
3. Tylenol with no food
4. Tylenol with food
• In addition to determining whether there is a difference between the four treatment groups, researchers want to determine whether there is a difference between taking medication with food and taking it without.
• This second hypothesis can be expressed statistically as:
$H_0: \mu_1 + \mu_3 = \mu_2 + \mu_4$
$H_a: \mu_1 + \mu_3 \ne \mu_2 + \mu_4$
STA305 week2 34

• The point estimate of the difference between fed and not fed conditions is based on sample means:
$$\bar{Y}_{1\cdot} + \bar{Y}_{3\cdot} - \bar{Y}_{2\cdot} - \bar{Y}_{4\cdot}$$
STA305 week2 35

Hypothesis Tests Using Contrasts
• As in the example on the previous slide, the comparison of treatment means that is of interest might be a linear combination of means. That is, the hypothesis of interest would be of the form
$H_0: c_1\mu_1 + c_2\mu_2 + \cdots + c_a\mu_a = 0$
$H_a: c_1\mu_1 + c_2\mu_2 + \cdots + c_a\mu_a \ne 0$
• The $c_i$ are constants subject to the constraints: (i) not all $c_i$ are zero, and (ii) $\sum_{i=1}^{a} c_i = 0$.
• A test of this hypothesis can be constructed using the sample means for each treatment group.
• The linear combination $c_1\mu_1 + c_2\mu_2 + \cdots + c_a\mu_a$ is called a contrast.
STA305 week2 36

• If the assumptions of the model are satisfied, then:
$$\sum_{i=1}^{a} c_i \bar{Y}_{i\cdot} \sim N\left(\sum_{i=1}^{a} c_i \mu_i, \; \sigma^2 \sum_{i=1}^{a} \frac{c_i^2}{r_i}\right)$$
• If $\sigma^2$ were known, a test of $H_0$ could be done using:
$$\frac{\sum_{i=1}^{a} c_i \bar{Y}_{i\cdot}}{\sqrt{\sigma^2 \sum_{i=1}^{a} c_i^2 / r_i}}$$
• Since $\sigma^2$ is unknown, we use its unbiased estimate, the MSE, and conduct a t-test with n − a d.f. The test statistic is
$$t_{obs} = \frac{\sum_{i=1}^{a} c_i \bar{Y}_{i\cdot}}{\sqrt{MS_E \sum_{i=1}^{a} c_i^2 / r_i}}$$
• Recall that if X is a random variable with a t(v) distribution, then $X^2$ has an F(1, v) distribution.
STA305 week2 37

• So an equivalent test statistic is:
$$F_{obs} = \frac{\left(\sum_{i=1}^{a} c_i \bar{Y}_{i\cdot}\right)^2}{MS_E \sum_{i=1}^{a} c_i^2 / r_i}$$
• At level $\alpha$, reject $H_0$ in favour of $H_a$ if $F_{obs} > F_{\alpha}(1, n - a)$, or equivalently if $|t_{obs}| > t_{\alpha/2}(n - a)$.
• The sum of squares for contrast is:
$$SS_{contrast} = \frac{\left(\sum_{i=1}^{a} c_i \bar{Y}_{i\cdot}\right)^2}{\sum_{i=1}^{a} c_i^2 / r_i}$$
• Each contrast has 1 d.f., so the mean square for contrast is $MS_{contrast} = SS_{contrast}/1$.
STA305 week2 38

Summary
• The hypotheses:
$H_0: c_1\mu_1 + c_2\mu_2 + \cdots + c_a\mu_a = 0$
$H_a: c_1\mu_1 + c_2\mu_2 + \cdots + c_a\mu_a \ne 0$
• Test statistic: $F_{obs} = MS_{contrast}/MS_E$
• Decision rule: reject $H_0$ if $F_{obs} > F_{\alpha}(1, n - a)$
STA305 week2 39

Orthogonal Contrasts
• Very often more than one contrast will be of interest.
Further, it is possible that one research question will require more than one contrast, e.g., $H_0: \mu_1 = \mu_3$ and $\mu_2 = \mu_4$.
• Ideally, we want tests about different contrasts to be independent of each other.
• Suppose that the two contrasts of interest are $c_1\mu_1 + c_2\mu_2 + \cdots + c_a\mu_a$ and $d_1\mu_1 + d_2\mu_2 + \cdots + d_a\mu_a$.
• These two contrasts are orthogonal to each other iff they satisfy $\sum_{i=1}^{a} c_i d_i = 0$ (for a balanced design; with unequal $r_i$ the condition is $\sum_{i=1}^{a} c_i d_i / r_i = 0$).
• If there are a treatments, then SSTreat can be decomposed into a set of a − 1 orthogonal contrasts, each with 1 d.f., as follows:
$$SS_{Treat} = SS_{contrast_1} + SS_{contrast_2} + \cdots + SS_{contrast_{a-1}}$$
• Unless a = 2, there will be more than one set of orthogonal contrasts.
STA305 week2 40

Example - Food / Pain Killers Continued
• Refer back to the example on slide 34. The study was designed with 4 treatment groups.
• The treatment sum of squares can be decomposed into 3 orthogonal contrasts.
• Since the researcher is interested in the difference between fed and unfed, it makes sense to use the following contrasts:
STA305 week2 41

• Exercise: verify that each is in fact a contrast.
• Exercise: verify that the contrasts are orthogonal.
• Note that there is more than one way to decompose the treatment sum of squares into a set of orthogonal contrasts.
• For example, instead of comparing aspirin and Tylenol, we might be interested in comparing food with no food.
• In this case, compare (i) aspirin with food and Tylenol with food, (ii) aspirin without food and Tylenol without food, and (iii) the 2 food groups to the 2 no-food groups.
STA305 week2 42

ANOVA Table for Orthogonal Contrasts
• Contrasts to be used in an experiment must be chosen at the beginning of the study.
• The hypotheses to be tested should not be selected after viewing the data.
• Once the treatment SS has been decomposed using preplanned orthogonal contrasts, the ANOVA table can be expanded to show the decomposition, as shown in the next slide.
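
As a worked check of the alternative decomposition described in the food and pain killer example, the three comparisons (i) to (iii) can be written as coefficient vectors on $(\mu_1, \mu_2, \mu_3, \mu_4)$, with groups numbered as in that example (1 aspirin no food, 2 aspirin with food, 3 Tylenol no food, 4 Tylenol with food). The labels c, d and e are introduced here for illustration only, and equal group sizes are assumed so that orthogonality reduces to a zero sum of products.

$$
\begin{aligned}
\text{(i)}\quad & c = (0,\ 1,\ 0,\ -1), & \textstyle\sum_i c_i &= 0, \\
\text{(ii)}\quad & d = (1,\ 0,\ -1,\ 0), & \textstyle\sum_i d_i &= 0, \\
\text{(iii)}\quad & e = (1,\ -1,\ 1,\ -1), & \textstyle\sum_i e_i &= 0, \\[4pt]
& \textstyle\sum_i c_i d_i = 0 + 0 + 0 + 0 = 0, \\
& \textstyle\sum_i c_i e_i = 0 - 1 + 0 + 1 = 0, \\
& \textstyle\sum_i d_i e_i = 1 + 0 - 1 + 0 = 0.
\end{aligned}
$$

Each vector sums to zero, so each is a contrast, and every pair has zero sum of products, so the three are mutually orthogonal. Contrast (iii) corresponds to the hypothesis $\mu_1 + \mu_3 = \mu_2 + \mu_4$ from the original statement of the example.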
STA305 week2 43

STA305 week2 44

Example - Pressure on a Torsion Spring
STA305 week2 45

• The figure above shows a diagram of a torsion spring.
• Pressure is applied to the arms to close the spring.
• A study has been designed to examine pressure on a torsion spring.
• Five different angles between the arms of the spring will be studied to determine their impact on the pressure: 67°, 71°, 75°, 79°, and 83°.
• Researchers are interested in whether there is an overall difference between the different angle settings.
• In addition, they would like to study a set of orthogonal contrasts which compares the 2 smallest angles to each other and the 2 largest angles to each other.
• The data collected are shown in the following slide.
STA305 week2 46

Torsion Spring Data
STA305 week2 47

Solution
STA305 week2 48

Contrasts in SAS
• To do the analysis for the last example, start by creating a SAS dataset:

    data torsion ;
    /* one observation per line: angle (degrees), then pressure */
    input angle pressure ;
    cards ;
    67 83
    67 85
    71 87
    71 84
    ...........
    79 90
    83 90
    83 92
    ;
    run ;

STA305 week2 49

• The following additional code specifies the contrasts of interest:

    proc glm data = torsion ;
    class angle ;
    model pressure = angle / ss3 ;
    /* coefficients follow the sorted levels of angle: 67 71 75 79 83 */
    contrast '67-71' angle 1 -1 0 0 0 ;
    contrast '79-83' angle 0 0 0 1 -1 ;
    contrast 'sm vs lg' angle 1 1 0 -1 -1 ;
    contrast 'mid vs oth' angle 1 1 -4 1 1 ;
    run ;
    quit ;

STA305 week2 50

• The ANOVA part of the output is not shown here.
• The part of the output generated by the contrast statements looks like this:

| Contrast | DF | Contrast SS | Mean Square | F Value | Pr > F |
|---|---|---|---|---|---|
| 67-71 | 1 | 3.37500000 | 3.37500000 | 2.92 | 0.1031 |
| 79-83 | 1 | 1.33333333 | 1.33333333 | 1.15 | 0.2958 |
| sm vs lg | 1 | 93.35294118 | 93.35294118 | 80.70 | <0.0001 |
| mid vs oth | 1 | 0.20796354 | 0.20796354 | 0.18 | 0.6761 |

STA305 week2 51
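
The coefficient vectors passed to the contrast statements above can be checked by hand. The sketch below labels them $c^{(1)}, \ldots, c^{(4)}$ (labels introduced here, not in the slides) in the order of the angle levels 67, 71, 75, 79, 83, and uses only the numbers printed in the contrast output.

$$
\begin{aligned}
c^{(1)} &= (1, -1, 0, 0, 0), \quad c^{(2)} = (0, 0, 0, 1, -1), \quad c^{(3)} = (1, 1, 0, -1, -1), \quad c^{(4)} = (1, 1, -4, 1, 1), \\
\textstyle\sum_i c^{(4)}_i &= 1 + 1 - 4 + 1 + 1 = 0 \quad \text{(the other three sums are zero by inspection)}, \\
c^{(1)} \cdot c^{(3)} &= 1 - 1 = 0, \qquad c^{(2)} \cdot c^{(3)} = -1 + 1 = 0, \qquad c^{(3)} \cdot c^{(4)} = 1 + 1 + 0 - 1 - 1 = 0, \\
c^{(1)} \cdot c^{(2)} &= 0, \qquad c^{(1)} \cdot c^{(4)} = 1 - 1 = 0, \qquad c^{(2)} \cdot c^{(4)} = 1 - 1 = 0, \\
MS_E &= \frac{MS_{contrast}}{F_{obs}} \approx \frac{93.35294118}{80.70} \approx 1.157.
\end{aligned}
$$

All four vectors sum to zero and all six pairwise sums of products vanish. With equal group sizes this makes the set mutually orthogonal; if the group sizes differ, the zero sum of products alone does not guarantee orthogonality, and the data are not reproduced here to confirm which case applies. The implied $MS_E$ of about 1.157 is backed out from the printed F value, so it is accurate only up to rounding; it is consistent with the other rows, for example $3.375 / 1.157 \approx 2.92$.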