Operant conditioning

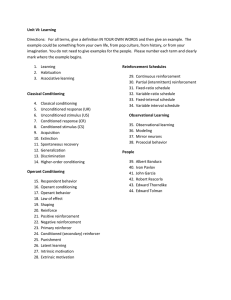

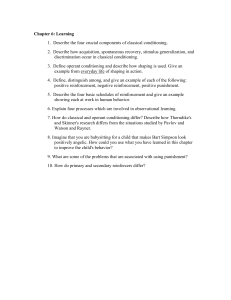

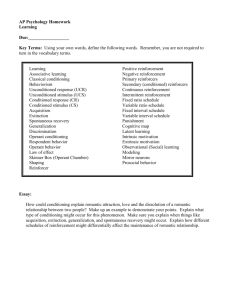

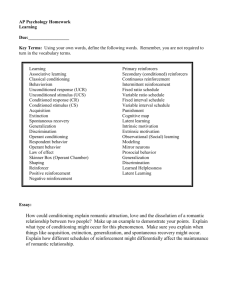

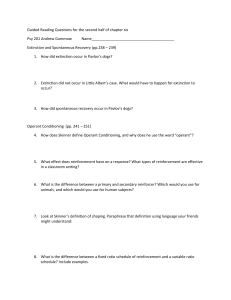

In classical conditioning, the presence of one stimulus (e.g., meat powder) is conditional on the presence of another stimulus (e.g., a bell). What else can an animal learn, besides the relationship between two stimuli?

Operant conditioning
It is also possible for the animal to generate a response and for that response to have consequences:
• Act cute, you get petted.
• Poop on the rug, you get scolded.
Note that the thing to be learned is not a UR (unconditioned response). The animal emits a response (pooping, acting cute), and it is rewarded or punished.

Edward Thorndike
Thorndike’s Law of Effect: “If a response in the presence of a stimulus is followed by a satisfying event, the association between the stimulus and the response is strengthened. If the response is followed by an annoying event, the association is weakened.”

Today we’ll cover:
• Basics of operant conditioning
• What makes operant conditioning effective
• The problem of definition in operant conditioning (not just that “animals seek rewards”)

Thorndike’s method was limited because each trial took so long.

A stripped-down environment
Free operant curve, from a cumulative recorder:
• Steep slope = many responses
• Shallow slope = few responses
What would the curve look like if 20 bar presses were required for each food delivery?
To really teach the animal, you would shape its behavior...
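To make the cumulative recorder concrete, here is a minimal sketch that builds a cumulative record from a list of response times and marks where food would be delivered if every 20th bar press were reinforced. The response times are made up for illustration, and the function names `cumulative_record` and `slope` are hypothetical. The sketch shows only the bookkeeping; it does not predict how a real animal would respond.

```python
def cumulative_record(response_times, ratio=20):
    """Return (time, cumulative_responses, reinforced) for each response.

    `reinforced` is True on every `ratio`-th response (assumed rule:
    one food delivery per `ratio` bar presses).
    """
    record = []
    for count, t in enumerate(sorted(response_times), start=1):
        record.append((t, count, count % ratio == 0))
    return record


def slope(record, start, end):
    """Responses per second in the window [start, end): the slope of the curve."""
    n = sum(1 for t, _, _ in record if start <= t < end)
    return n / (end - start)


# Hypothetical session: rapid pressing for 40 s, then slow pressing.
fast = [float(t) for t in range(40)]        # 1 press per second
slow = [40.0 + 5 * i for i in range(20)]    # 1 press every 5 seconds
record = cumulative_record(fast + slow, ratio=20)

reinforcer_times = [t for t, _, reinforced in record if reinforced]
steep = slope(record, 0, 40)      # many responses: steep segment
shallow = slope(record, 40, 140)  # few responses: shallow segment
print(reinforcer_times, steep, shallow)
```

Plotting the second element of each tuple against the first gives the curve a cumulative recorder would draw, with a tick at each reinforced response.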
Fixed ratio
• Consistent ratio of number of responses to number of reinforcers
• Steady response
• Easy to extinguish
• Example: factory piecework

Variable ratio
• Set ratio of number of responses to number of reinforcers, but the ratio can vary locally
• Rapid response
• Hard to extinguish
• Example: slot machine

Fixed interval
• Reinforces the first response after a specific amount of time since the last reinforcement
• Little response until just before reinforcement, then rapid response
• Fairly easy to extinguish
• Example: studying for exams

Variable interval
• Reinforces the first response after some amount of time since the last reinforcement; the amount of time can vary locally
• Steady response
• Hard to extinguish
• Example: checking email

Contingencies don’t just add good stuff...
Action (add to or take away from the environment) by result (increase or decrease the probability of behavior):
• Add to environment, increase probability of behavior: positive reinforcement (e.g., food)
• Add to environment, decrease probability of behavior: punishment (e.g., spanking)
• Take away from environment, increase probability of behavior: negative reinforcement (e.g., escape)
• Take away from environment, decrease probability of behavior: negative punishment (extinction) (e.g., being grounded)

Complex contingencies
Would this work? Bar press reinforced, but ONLY when the red light is on. YES! This is called differential reinforcement.

How does differential reinforcement apply here?
• Reinforcer = food
• Response = hovering
• Differential signal = looking up

What’s happening, and what should the birds do?
• What’s happening = the differential signal has changed
• What should the birds do = stop responding
Moments later, the birds are leaving.

Operant conditioning: what makes it effective?
• Schedule of reinforcement
• Temporal contingency
• Belongingness
• Quality, quantity of reinforcer
• What else the animal might do

T-maze: temporal contingency
• Condition 1: immediate reward (0.5 sec)
• Condition 2: delayed reward (5 sec)

Effectiveness: temporal contingency
The delay between the animal’s act that you are reinforcing and the reinforcer.
[Figure y-axis: learning strength (arbitrary units)]
[Figure x-axis: delay (seconds), from 0 to 150; data from Grice (1948) and Wolfe (1934)]
WHY does learning drop off with delay?

Belongingness
• Thorndike tried to condition his cat to yawn or scratch to escape the box. He proposed belongingness.

Instinctive drift
• A concept related to belongingness: instinctive drift (Breland & Breland).
• Motivational state can also have an influence; a hungry animal does more food-seeking behaviors...
[Figure labels: digging; digging, scratching, rearing]

Quality/quantity of reinforcer
Works as you would expect.

What else might the animal do?
It’s not as simple as “the animal maximizes good things, minimizes bad things.” Even humans don’t do this if the situation gets moderately complex.

Example: the animal can respond on a variable ratio (VR) schedule or on a variable interval (VI) schedule. What’s the optimal strategy?
Optimal is to hit the VR almost exclusively and occasionally hit the VI. Instead, animals respond to equalize ratios of work/reward.

The problem of definition
What is a reinforcer? Thorndike called a reinforcer something “that brings about a satisfying state of affairs.” How do we know when the animal is satisfied? Presumably, when the animal will work to achieve this state of satisfaction. But that’s circular:
• What’s a reinforcer? Something pleasurable.
• What’s pleasurable? Something that increases behavior, that the animal will work to get.
• What will the animal work for (e.g., peck)? That brings us back to the first question.

Another definition: physiological homeostasis
The animal seeks to lessen thirst, hunger, etc. The definition of reinforcement is based on biological drives. Learning = a “stamping in” of the work that needs to be done to reduce hunger. E.g., “I must not only consume and chew to get nourishment. I also must press the bar, then consume, then chew.”
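The variable ratio versus variable interval choice above can be explored with a small calculation. This is a sketch under loudly stated assumptions, not a model from the lecture: time is counted in responses, each VR response pays off with probability `1 / vr_ratio`, the VI reinforcer becomes available with probability `vi_arm_prob` per response and then waits until collected, and the animal visits the VI key on every k-th response. All names and numbers are hypothetical.

```python
def reward_rate(k, vr_ratio, vi_arm_prob):
    """Expected reinforcers per response when every k-th response goes to
    the VI key and the other k - 1 responses go to the VR key."""
    # Each VR response is reinforced with probability 1 / vr_ratio.
    vr_rewards = (k - 1) / vr_ratio
    # The VI reinforcer is waiting if it armed at any of the last k steps.
    vi_reward = 1.0 - (1.0 - vi_arm_prob) ** k
    return (vr_rewards + vi_reward) / k


VR_RATIO = 10        # assumed: VR averages one reinforcer per 10 responses
VI_ARM_PROB = 0.05   # assumed: VI arms with probability 0.05 per response

rates = {k: reward_rate(k, VR_RATIO, VI_ARM_PROB) for k in range(1, 101)}
best_k = max(rates, key=rates.get)

all_vi = rates[1]          # k = 1: every response goes to the VI key
all_vr = 1.0 / VR_RATIO    # never visiting the VI key
print(best_k, rates[best_k], all_vr, all_vi)
```

Under these assumptions the best mix sends most responses to the VR key with an occasional check of the VI key, and it beats responding on either key alone. The sketch computes only the reward-maximizing mix; it does not model the ratio-equalizing behavior that the lecture says is actually observed.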
Problems
• Too many drives were proposed.
• Animals (and people) do things that seem more likely to raise drives, not lower them.

Reinforcement as behavioral regulation
Premack principle: Given two responses arranged in an operant conditioning procedure, the more probable response will reinforce the less probable response.
Which do you want to do: play pinball or eat candy?
• Must eat candy to play pinball (kids who would rather play pinball): these kids treat candy eating as work. They do it to get to play pinball.
• Must eat candy to play pinball (kids who would rather eat candy): these kids eat candy but don’t care that they have earned pinball time.

Behavioral homeostasis and bliss point: a clever, not-quite-right idea
[Figure: minutes drinking (0 to 35) plotted against minutes running (0 to 60), with a line labeled “Restricted drinking”]
Normally, the animal likes to be at the gray spot (15 minutes of each). Now it can’t be at the gray spot. What will it do?
IN THEORY you should be able to predict what the animal will do: it will select the spot on the blue line that is as close as possible to its “bliss point.” IN REALITY this prediction sometimes works, sometimes doesn’t.

Reinforcement: final word
In the end, we still don’t have a good definition of the concept. The Premack principle is as close as we get. Nevertheless, the concept of reinforcement seems useful.

Applications
• Animal training
• Biofeedback
• Education
• Token economies

Biofeedback
Operant conditioning of the autonomic nervous system. For years it was not explored because no one thought it could possibly work.

Education
Apply operant conditioning principles to education:
1. Make sure the student doesn’t make mistakes; guide behavior.
2. Review frequently.
Little enthusiasm. Teachers didn’t like it for their own reasons. Students were bored.

Token economies
Used in some mental health institutions and some classrooms.
Mrs. Ahlersmeyer’s 3rd grade class, Lafayette Elementary, Lafayette, IN:
• Students earn a “salary” (marbles).
• Outstanding work or behavior earns bonuses.
• Students are allowed 5 sick days per quarter; after that, they are docked pay.
• Students are charged rent for their use of a desk, and for any school property lost or damaged.
• Students are docked pay for inappropriate behavior.
Use is controversial because it seems “dehumanizing” (mental patients) or because it seems that you’re “paying” students for behavior that they should want to do.
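The bliss point prediction from the behavioral regulation slides can be written as a short geometric calculation: find the point on the schedule line that lies closest to the preferred mix of activities. The sketch below assumes straight-line (Euclidean) distance and a hypothetical schedule that allows 1 minute of drinking per 3 minutes of running; the slides do not give the actual slope of the restriction line, so that number is an illustration only. The bliss point of 15 minutes of each activity is taken from the slides.

```python
def closest_point_on_schedule(bliss, run_per_drink):
    """Project the bliss point onto the schedule line run = run_per_drink * drink.

    `bliss` is (minutes_running, minutes_drinking). The line passes through
    the origin because no drinking is earned without running.
    """
    bliss_run, bliss_drink = bliss
    # Direction of the schedule line in (running, drinking) coordinates.
    d_run, d_drink = run_per_drink, 1.0
    # Scalar projection of the bliss point onto that direction.
    t = (bliss_run * d_run + bliss_drink * d_drink) / (d_run ** 2 + d_drink ** 2)
    return (t * d_run, t * d_drink)


BLISS_POINT = (15.0, 15.0)  # from the slides: 15 minutes of each activity
RUN_PER_DRINK = 3.0         # assumed schedule, not given in the slides

predicted_run, predicted_drink = closest_point_on_schedule(BLISS_POINT, RUN_PER_DRINK)
print(predicted_run, predicted_drink)
```

With this assumed schedule the predicted compromise is more running and less drinking than the animal would choose freely. As the slides note, predictions of this kind sometimes match what animals do and sometimes do not.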