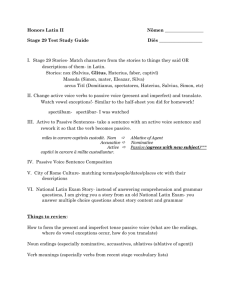


Improving Automatic Meeting Understanding by Leveraging Meeting Participant Behavior
Satanjeev Banerjee, Thesis Proposal. April 21, 2008

Using Human Knowledge
- Knowledge of human experts is used to build systems that function under uncertainty
  - Often captured through in-lab data labeling
- Another source of knowledge: users of the system
  - Can provide subjective knowledge
  - System can adapt to the users and their information needs
  - Reduce data needed in the lab
- Technical goal: improve system performance by automatically extracting knowledge from users

Domain: Meetings
- Problem (Romano and Nunamaker, 2001):
  - Large parts of meetings contain unimportant information
  - Some small parts contain important information
  - How to retrieve the important information?
- Impact goal: help humans get information from meetings
- What information do people need from meetings?

Understanding Information Needs
- Survey of 12 CMU faculty members (Banerjee, Rose & Rudnicky, 2005)
- How often do you need information from past meetings?
  - On average, 1 missed meeting and 1.5 attended meetings a month
- What information do you need?
  - Missed meeting: "What was discussed about topic X?"
  - Attended meeting: detail question, e.g. "What was the accuracy?"
- How do you get the information?
  - From notes if available: high satisfaction
  - If meeting missed: ask face-to-face
- Resulting tasks:
  - Task 1: Detect agenda item being discussed
  - Task 2: Identify utterances to include in notes

Existing Approaches to Accessing Meeting Information
- Meeting recording and browsing: (Cutler, et al, 02), (Ionescu, et al, 02), (Ehlen, et al, 07), (Waibel, et al, 98)
- Automatic meeting understanding:
  - Meeting transcription (Stolcke, et al, 2004), (Huggins-Daines, et al, 2007)
  - Meeting topic segmentation (Galley, et al, 2003), (Purver, et al, 2006)
  - Activity recognition through vision (Rybski & Veloso, 2004)
  - Action item detection (Ehlen, et al, 07)
- Annotations on the slide: classic supervised learning; unsupervised learning; meeting participants used after the meeting
- Our goal: extract high quality supervision
  - ...from meeting participants (best judges of noteworthy info)
  - ...during the meeting (when participants are most available)

Challenges for Supervision Extraction During the Meeting
- Giving feedback costs the user time and effort
- Creates a distraction from the user's main task: participating in the meeting
- Our high-level approach:
  - Develop supervision extraction mechanisms that help meeting participants do their task
  - Interpret participants' responses as labeled data

Thesis Statement
Develop approaches to extract high quality supervision from system users, by designing extraction mechanisms that help them do their own task, and interpreting their actions as labeled data.

Roadmap for the Rest of this Talk
- Review of past strategies for supervision extraction
- Approach:
  - Passive supervision extraction for agenda item labeling
  - Active supervision extraction to identify noteworthy utterances
- Success criteria, contribution and timeline

Past Strategies for Extracting Supervision from Humans
- Two types of strategies: passive and active
- Passive: system does not choose which data points the user will label
  - E.g.: improving ASR from user corrections (Burke, et al, 06)
- Active: system chooses which data points the user will label
  - E.g.: have user label traffic images as risky or not (Saunier, et al, 04)

Past strategies | Passive approach | Active approach | Summary

Research Issue 1: How to Ask Users for Labels?
- Categorical labels
  - Associate desktop documents with task label (Shen, et al, 07)
  - Label image of safe roads for robot navigation (Fails & Olsen, 03)
- Item scores/rank
  - Rank report items for inclusion in summary (Garera, et al, 07)
  - Pick best schedule from system-provided choices (Weber, et al, 07)
- Feedback on features
  - Tag movies with new text features (Garden, et al, 05)
  - Identify terms that signify document similarity (Godbole, et al, 04)

Research Issue 2: How to Interpret User Actions as Feedback?
- Depends on similarity between user and system behavior
- Interpretation is simple when behaviors are similar
  - E.g.: email classification (Cohen 96)
- Interpretation may be difficult when user behavior and target system behavior are starkly different
  - E.g.: user corrections of ASR output (Burke, et al, 06)

Research Issue 3: How to Select Data Points for Label Query (Active Strategy)?
- Typical active learning approach:
  - Goal: minimize number of labels sought to reach target error
  - Approach: choose data points most likely to improve learner
  - E.g.: pick data points closest to decision boundary (Monteleoni, et al, 07)
- Typical assumption: human's task is labeling
- System user's task is usually not the same as labeling data

Our Overall Approach to Extracting Data from System Users
- Goal: extract high quality subjective labeled data from system users
- Passive approach: design the interface to ease interpretation of user actions as feedback
  - Task: label meeting segments with agenda item
- Active approach: develop label query mechanisms that:
  - Query for labels while helping the user do his task
  - Extract labeled data from user actions
  - Task: identify noteworthy utterances in meetings

Passive Supervision: General Approach
- Goal: design the interface to enable interpretation of user actions as feedback
- Recipe:
  1. Identify kind of labeled data needed
  2. Target a user task
  3. Find relationship between user task and data needed
  4. Build interface for user task that captures the relationship

Supervision for Agenda Item Detection
- Goal: automatically detect agenda item being discussed
- Labeled data: meeting segments labeled with agenda item
- User task: note taking during meetings
- Relationship:
  1. Most notes refer to discussions in the preceding segment
  2. A note and its related segment belong to the same agenda item
- Note taking interface:
  1. Time stamp speech and notes
  2. Enable participants to label notes with agenda item

[Screenshot of the note taking interface. Visible labels: "Insert Agenda"; agenda items "Speech recognition research status", "Topic detection research status", "FSGs"; shared note taking area; personal notes (not shared).]

Getting Segmentation from Notes

| Note's time stamp | Note's agenda item box |
|---|---|
| 100 | Speech recognition research status |
| 200 | Speech recognition research status |
| 400 | Topic detection research status |
| 600 | Topic detection research status |
| 800 | Speech recognition research status |
| 950 | Speech recognition research status |

Resulting segmentation: Speech recognition research status, boundary at 300, Topic detection research status, boundary at 700, Speech recognition research status.

Evaluate the Segmentation
- How accurate is the extracted segmentation?
  - Compare to human annotator
  - Also compare to standard topic segmentation algorithms
- Evaluation metric: Pk
  - For every pair of time points k seconds apart, ask:
    - Are the two points in the same segment or not, in the reference?
    - Are the two points in the same segment or not, in the hypothesis?

$$P_k = \frac{\text{number of time-point pairs where hypothesis and reference disagree}}{\text{total number of time-point pairs in the meeting}}$$

SmartNotes Deployment in Real Meetings
- Has been used in 75 real meetings
- 16 unique participants overall
- 4 sequences of meetings
  - Sequence = 3 or more longitudinal meetings

| Sequence | Num meetings so far |
|---|---|
| 1 (ongoing) | 30 |
| 2 | 27 |
| 3 (ongoing) | 8 |
| 4 (ongoing) | 4 |
| Remaining | 6 |

Data for Evaluation
- Data: 10 consecutive related meetings

| Statistic | Value |
|---|---|
| Avg meeting length | 31 minutes |
| Avg # agenda items per meeting | 4.1 |
| Avg # participants per meeting | 3.75 (2 to 5) |
| Avg # notes per agenda item | 5.9 |
| Avg # notes per meeting | 25 |

- Reference segmentation: meetings segmented into agenda items by two different annotators.
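The Pk metric defined above (the fraction of time-point pairs k seconds apart on which hypothesis and reference disagree about "same segment or not") can be sketched in a few lines. This is a minimal illustration, not the evaluation code behind the reported numbers. The function names, the representation of a segmentation as a list of interior boundary times in seconds, and the 1-second sampling step are assumptions. The slides do not state the value of k that was used; a common convention is half the mean reference segment length.

```python
from bisect import bisect_right


def segment_index(boundaries, t):
    """Index of the segment containing time t.

    `boundaries` is a sorted list of interior boundary times; a point that
    falls exactly on a boundary is assigned to the segment that starts there.
    """
    return bisect_right(boundaries, t)


def pk(reference, hypothesis, duration, k, step=1):
    """Fraction of pairs (t, t + k) on which the two segmentations disagree."""
    ref = sorted(reference)
    hyp = sorted(hypothesis)
    disagreements = 0
    pairs = 0
    for t in range(0, duration - k + 1, step):
        same_in_ref = segment_index(ref, t) == segment_index(ref, t + k)
        same_in_hyp = segment_index(hyp, t) == segment_index(hyp, t + k)
        if same_in_ref != same_in_hyp:
            disagreements += 1
        pairs += 1
    return disagreements / pairs if pairs else 0.0


# Identical segmentations never disagree, so Pk is 0.0.
perfect = pk([300, 700], [300, 700], duration=1000, k=100)

# A hypothesis that misses every boundary is penalised only near the
# reference boundaries, which is why Pk depends on the choice of k.
missed = pk([300, 700], [], duration=1000, k=100)
```

Lower Pk is better: 0 means the hypothesis agrees with the reference on every sampled pair.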
Inter-annotator agreement on the reference segmentation: Pk = 0.062

Results
- Baseline: TextTiling (Hearst 97)
- State of the art: (Purver, et al, 2006)

| Segmentation | Pk |
|---|---|
| Unsupervised baseline | 0.39 |
| Segmentation from SmartNotes data | 0.21 |
| Purver, et al, 2006 | 0.26 |
| Inter-annotator agreement | 0.06 |

The chart carries a "Not significant" annotation, which the extracted text places next to the 0.21 and 0.26 values.

Does Agenda Item Labeling Help Retrieve Information Faster?
- Two 10-minute meetings, manually labeled with agenda items
- 5 questions prepared for each meeting
  - Questions prepared without access to agenda items
- 16 subjects, not participants of the test meetings
- Within-subjects user study
- Experimental manipulation: access to segmentation versus no segmentation

[Bar chart: minutes to complete the task, with agenda item labels versus without agenda item labels. Values shown: 10 and 7.5.]

Shown So Far
- Method of extracting meeting segments labeled with agenda item from note taking
- Resulting data produces high quality segmentation
- Likely to help participants retrieve information faster
- Next: learn to label meetings that don't have notes

Proposed Task: Learn to Label Related Meetings that Don't Have Notes
Plan: implement language-model-based detection similar to (Spitters & Kraaij, 2001).
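As a rough sketch of what language-model-based agenda item labeling could look like: train one language model per agenda item on labeled segments, then assign a new segment to the item whose model gives the lowest perplexity. The add-one-smoothed unigram models, the function names, and the toy training tokens below are assumptions made for illustration; they are not taken from (Spitters & Kraaij, 2001) or from the proposal.

```python
import math
from collections import Counter


def train_unigram_lm(segments):
    """Word counts over all training segments (token lists) of one agenda item."""
    counts = Counter()
    for tokens in segments:
        counts.update(tokens)
    return counts


def perplexity(counts, tokens, vocab_size):
    """Perplexity of `tokens` under an add-one-smoothed unigram model."""
    if not tokens:
        raise ValueError("cannot score an empty segment")
    total = sum(counts.values())
    log_prob = sum(
        math.log((counts[tok] + 1) / (total + vocab_size)) for tok in tokens
    )
    return math.exp(-log_prob / len(tokens))


def label_segment(models, tokens):
    """Return the agenda item whose model has the lowest perplexity."""
    vocab = set(tokens)
    for counts in models.values():
        vocab.update(counts)
    return min(
        models,
        key=lambda item: perplexity(models[item], tokens, len(vocab)),
    )


# Hypothetical training segments, as if extracted from labeled notes.
models = {
    "Speech recognition research status": train_unigram_lm(
        [["word", "error", "rate", "improved"], ["acoustic", "model", "training"]]
    ),
    "Topic detection research status": train_unigram_lm(
        [["topic", "segmentation", "results"], ["agenda", "item", "labels"]]
    ),
}
predicted = label_segment(models, ["the", "acoustic", "model", "error"])
```

A real system would use higher-order models trained on speech transcripts; the unigram version only shows the lowest-perplexity decision rule.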
Steps of the plan:
- Train agenda-item-specific language models on automatically extracted labeled meeting segments
- Perform segmentation similar to (Purver, et al, 06)
- Label new meeting segments with the agenda item whose LM has the lowest perplexity

Proposed Evaluation
- Evaluate agenda item labeling of meetings with no notes
  - 3 real meeting sequences with 10 meetings each
  - For each meeting i in each sequence:
    - Train agenda item labeler on automatically extracted labeled data from previous meetings in the same sequence
    - Compute labeling accuracy against manual labels
  - Show improvement in accuracy from meeting to meeting
  - Baseline: unsupervised segmentation + text matching between speech and agenda item label text
- Evaluate effect on retrieving information
  - Ask users to answer questions from each meeting:
    - With agenda item labeling output by improved labeler, versus
    - With agenda item labeling output by baseline labeler

Active Supervision
- System goal: select data points, and query user for labels
  - In active learning, human's task is to provide the labels
  - But system user's task may be very different from labeling data
- General approach:
  1. Design query mechanisms such that:
     - Each label query also helps the user do his own task
     - The user's response to the query can be interpreted as a label
  2. Choose data points to query by balancing:
     - Estimated benefit of query to user
     - Estimated benefit of label to learner

Task: Noteworthy Utterance Detection
- Goal: identify noteworthy utterances, that is, utterances that participants would include in notes
- Labeled data needed: utterances labeled as either "noteworthy" or "not noteworthy"

Extracting Labeled Data
- Notes assistance: suggest utterances for inclusion in notes during the meeting
- Noteworthy utterance detector (proposed)
- Label query mechanism (completed)
  - Helps participants take notes
  - Interpret participants' acceptances / rejections as "noteworthy" / "not noteworthy" labels
- Method of choosing utterances for suggestion (proposed)
  - Benefit to user's note taking
  - Benefit to learner (detector) from user's acceptance/rejection

Proposed: Noteworthy Utterance Detector
- Binary classification of utterances as noteworthy or not
- Support Vector Machine classifier
- Features:
  - Lexical: keywords, tf-idf, named entities, numbers
  - Prosodic: speaking rate, f0 max/min
  - Agenda item being discussed
  - Structural: speaker identity, utterances since last accepted suggestion
- Similar to meeting summarization work of (Zhu & Penn, 2006)
Mechanism 1: Direct Suggestion

[Screenshot of the direct suggestion mechanism. Suggested text shown: "Fix the problem with emailing".]

Mechanism 2: "Sushi Boat"

[Screenshot of the sushi boat mechanism. Suggested lines shown: "pilot testing has been successful", "most participants took twenty minutes", "ron took much longer to finish tasks", "there was no crash".]

Differences between the Mechanisms
- Direct suggestion
  - User can provide accept/reject label
  - Higher cost for the user if suggestion is not noteworthy
- Sushi boat suggestion
  - User only provides accept labels
  - Lower cost for the user

Will Participants Accept Suggestions?
- Wizard of Oz study
  - Wizard listened to audio and suggested text
- 6 meetings: 2 direct mechanism, 4 sushi boat mechanism

| Mechanism | Num offered | Num offered per min | Num accepted | Num accepted per min | % accepted |
|---|---|---|---|---|---|
| Direct suggestion | 50 | 0.6 | 17 | 0.2 | 34.0 |
| Sushi boat | 273 | 1.8 | 85 | 0.6 | 31.0 |

Percentage of Notes from Sushi Boat

| Meeting | Num lines of notes | Num lines from Sushi boat | % lines from Sushi boat |
|---|---|---|---|
| 1 | 7 | 6 | 86% |
| 2 | 24 | 20 | 83% |
| 3 | 32 | 29 | 91% |
| 4 | 32 | 30 | 94% |
| Total/Avg | 95 | 85 | 89% |

Method of Choosing Utterances for Suggestion
- One idea: pick utterances that have either high benefit for the detector or high benefit for the user
  - Most beneficial for detector: least confident utterances
  - Most beneficial for user: noteworthy utterances with high confidence
  - Does not take into account user's past acceptance pattern
- Our approach:
  - Estimate and track user's likelihood of acceptance
  - Pick utterances that either have high detector benefit or are very likely to be accepted

Estimating Likelihood of Acceptance
- Features:
  - Estimated user benefit of suggested utterance, where T(utt) = time to type utterance and R(utt) = time to read utterance:

$$\mathrm{Benefit}(utt) = \begin{cases} T(utt) - R(utt), & \text{if } utt \text{ is noteworthy according to the detector} \\ -R(utt), & \text{if } utt \text{ is not noteworthy according to the detector} \end{cases}$$

  - # suggestions, acceptances, rejections in this and previous meetings
  - Amount of speech in preceding window of time
  - Time since last suggestion
- Combine features using logistic regression
- Learn per participant from past acceptances/rejections

Overall Algorithm for Choosing Utterances for Direct Suggestion
- Given: an utterance and a participant
- Decision to make: suggest utterance to participant?
  1. Estimate benefit of utterance label to detector
  2. Estimate likelihood of acceptance
  3. Combine the two estimates and compare against a threshold:
     - Above threshold: suggest utterance to participant
     - Otherwise: don't suggest

Learning Threshold and Combination Weights
- Train on WoZ data
- Split meetings into development and test set
- For each parameter setting:
  - Select utterances for suggestion to user in development set
  - Compute acceptance rate by comparing against those accepted by the user in the meeting
  - Of those shown, use acceptances and rejections to retrain utterance detector
  - Evaluate utterance detector on test set
- Pick parameter setting with acceptable tradeoff between utterance detector error rate and acceptance rate

Proposed Evaluation
- Evaluate improvement in noteworthy utterance detection
  - 3 real meeting sequences with 15 meetings each
  - Initial noteworthy detector trained on prior data
  - Retrain over first 10 meetings by suggesting notes
  - Test over next 5
  - Evaluate: after each test meeting, ask participants to grade automatically identified noteworthy utterances
  - Baseline: grade utterances identified by prior-trained detector
- Evaluate effect on retrieving information
  - Ask users to answer questions from test meetings:
    - With utterances identified by detector trained on 10 meetings, versus
    - With utterances identified by prior-trained detector

Thesis Success Criteria
- Show agenda item labeling improves with labeled data automatically extracted from notes
  - Show participants can retrieve information faster
- Show noteworthy utterance detection improves with actively extracted labeled data
  - Show participants retrieve information faster

Expected Technical Contribution
- Framework to actively acquire data labels from end users
- Learning to identify noteworthy utterances by suggesting notes to meeting participants
- Improving topic labeling of meetings by acquiring labeled data from note taking

Summary: Tasks Completed/Proposed

| Area | Task | Status |
|---|---|---|
| Agenda item detection through passive supervision | Design interface to acquire labeled data | Completed |
| Agenda item detection through passive supervision | Evaluate interface and labeled data obtained | Completed |
| Agenda item detection through passive supervision | Implement agenda item detection algorithm | Proposed |
| Agenda item detection through passive supervision | Evaluate agenda item detection algorithm | Proposed |
| Important utterance detection through active learning | Implement notes suggestion interface | Completed |
| Important utterance detection through active learning | Implement SVM classifier | Proposed |
| Important utterance detection through active learning | Evaluate the summarization | Proposed |

Proposal Timeline (time frame: scheduled task)
- Apr – Jun 08: Iteratively do the following:
  - Continue running Wizard of Oz studies in real meetings to fine-tune label query mechanisms
  - Analyze WoZ data to identify features for the automatic summarizer
  - In parallel, implement baseline meeting summarizer
- Jul – Aug 08: Deploy online summarization and notes suggestion system in real meetings, and iterate on its development based on feedback
- Sep – Oct 08: Upon stabilization, perform summarization user study on test meeting groups
- Nov 08: Implement agenda detection algorithm
- Dec 08: Perform agenda detection based user study
- Jan – Mar 09: Write dissertation
- Apr 09: Defend thesis

Thank you!

Acceptances Per Participant

| Participant | Num sushi boat lines accepted | % of acceptances | % of notes in 5 prior meetings |
|---|---|---|---|
| 1 | 87 | 82.9% | 90% |
| 2 | 4 | 3.8% | 10% |
| 3 | 6 | 5.7% | 0% |
| 4 | 8 | 7.6% | Did not attend |
| Totals | 105 | | |
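The direct-suggestion decision described earlier (estimate the benefit of the label to the detector, estimate the likelihood of acceptance, combine, compare against a threshold) can be sketched as follows. The slides specify only the piecewise user-benefit definition and the use of logistic regression for acceptance likelihood. Everything else here is a hypothetical choice for illustration: scoring detector benefit by how close the detector's confidence is to 0.5, combining the two estimates as a weighted sum, and all function names, weights, and the threshold value.

```python
import math


def user_benefit(type_time, read_time, is_noteworthy):
    """Benefit(utt): T - R if the detector calls utt noteworthy, else -R."""
    return type_time - read_time if is_noteworthy else -read_time


def detector_benefit(p_noteworthy):
    """Assumed uncertainty score: 1.0 at p = 0.5, falling to 0.0 at p = 0 or 1."""
    return 1.0 - 2.0 * abs(p_noteworthy - 0.5)


def acceptance_likelihood(features, weights, bias):
    """Logistic regression over a participant's feature vector."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # numerically stable branch for negative z
    return e / (1.0 + e)


def should_suggest(p_noteworthy, features, weights, bias,
                   w_detector=0.5, w_accept=0.5, threshold=0.6):
    """Suggest the utterance if the combined score exceeds the threshold."""
    score = (w_detector * detector_benefit(p_noteworthy)
             + w_accept * acceptance_likelihood(features, weights, bias))
    return score > threshold


# Hypothetical feature vector: estimated user benefit in seconds, followed by
# seconds since the last suggestion. The weights would be learned per
# participant from past acceptances and rejections.
features = [user_benefit(12.0, 3.0, is_noteworthy=True), 40.0]
decision = should_suggest(0.55, features, weights=[0.2, 0.01], bias=-1.0)
```

In the proposal, the combination weights and threshold are tuned on Wizard of Oz data to trade off detector error rate against acceptance rate, so the default values above carry no meaning beyond making the sketch runnable.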