Assessment Method

advertisement

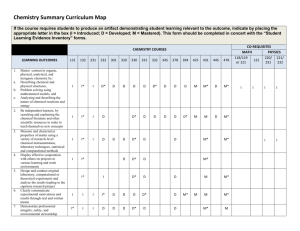

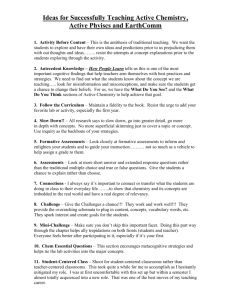

Ubiquitous Core Skills Assessment
David Eubanks, JCSU
Kaye Crook, Coker College
Slides at highered.blogspot.com

Hi… I’m the Assessment Director

The Theory

What Could Go Wrong?

(Example 1) Assessment Method: The History capstone experience culminates in a senior thesis that requires the student to demonstrate historical literacy, historiographical awareness, good communication skills, and the ability to conduct research. The thesis is composed in close association with a History faculty member who continuously assesses the student's abilities in the core curriculum areas. The thesis advisor's evaluation is then shared with other faculty members in the department.

(Example 1) Assessment Results and Evidence: Each student in the program who took HIS 391 and HIS 491 (the two-course capstone sequence) over the last academic year was assessed using the History Capstone Evaluation Form. Data from the past year indicate that majors have improved in terms of their writing, oral expression, critical thinking, and research skills.

(Example 1) Actions and Improvements: Continue to monitor History majors' critical thinking and writing skills using the History Capstone Evaluation Form [2814].

(Example 2) Outcomes Statement: Assessment results will show that by the end of their senior year, chemistry majors will be knowledgeable in each of the four major areas of chemistry, and will have successfully participated in independent or directed research projects and/or internships. Prior to graduation, they will have presented an acceptable senior seminar in chemistry.

Assessment Method: Simple measures of proficiency are the chemistry faculty's grading of homework assignments, exams, and laboratory reports. A more general method of evaluation is to use nationally standardized tests as final exams for each of the program's course offerings. These are prepared and promulgated by the American Chemical Society (ACS).
[No mention of senior seminar]
Assessment Results and Evidence: (none)
Actions and Improvements: (none)

Fixing What Went Wrong
• Resurrected an IE Committee for assessment
• Provided faculty training
• Provided guidelines for reports
• Adhered to an established deadline
• Reviewed the revised reports and provided feedback

Think Like a Reviewer!
1. Would you have a clear understanding of this program’s outcomes and assessment methods?
2. Is there documentation of assessment results?
3. Is it clear that results/data have been analyzed by program faculty?
4. Have actions been taken to improve the quality of the program based on these results?
5. Are there statements that seem to come out of nowhere?

Assessment Report Audit Form

IE Assessment Reports Audit
SACS Standard CS 3.3.1: The institution identifies expected outcomes, assesses the extent to which it achieves these outcomes, and provides evidence of improvement based on analysis of the results in educational programs, to include student learning outcomes.

Program Area (rated in four report columns, each headed "program & year of report"):
1. Objectives¹ & Outcomes² Identified; Focus on Student Learning
2. Outcomes Align with Objective(s)
3. Assessment Methods³ Identified
4. Assessment Methods Align with Outcomes
5. Results of Assessments Cited (Data)⁴
6. Evidence of Comparative, Ongoing Data⁴
7. Analysis and Use of Results (Data)⁴
8. Evidence of Action Taken⁵
9. SQUID Links to Rubrics & Cited Documents⁶
10. Reports Updated through 08-09

¹ An objective states what is to be achieved for the specific goal it falls under. It will be broad and theoretical.
² Outcomes specify what is expected to be observed once the assessment has been completed. Student Learning Outcomes identify the knowledge, skills, and dispositions that exemplify graduates of the program.
³ Assessment Methods describe what will be done, observed, and measured to determine whether the outcomes and objectives have been met.
There should be some variety; both qualitative and quantitative methods are possible, even with small sample sizes.
⁴ Assessment Results and Evidence should include findings from the listed assessment methods, with documentation and evidence. Look for data (numbers, percentages, ratings) and for evidence that results have been analyzed, shared, and discussed, with conclusions reached.
⁵ There should be clear evidence that actions have been taken to modify and improve the program based on analysis of the results cited. Past tense should be used as much as possible; for example, “faculty met and decided to…”. It is OK occasionally to state “no improvements needed at this time”.
⁶ Documents needed by the evaluator should be linked in SQUID using [xxxx] notation. Check all SQUID links to find evidence such as data organized in tables, charts, and graphs; rubrics and scoring guides used in the assessments; minutes of meetings; etc.

Provide comments for each Program Area below and on the back of this page.

Improved Chemistry Outcomes
Assessment results will show that chemistry graduates have
1. a clear understanding of, and demonstrated proficiency in, each of the five major areas of chemistry: inorganic, organic, analytical, physical, and biochemistry.
2. the ability and technical expertise to successfully engage in independent or directed research projects and/or internships relevant to the chemical field.

Improved Chemistry Assessments
1. Assessment methods for knowledge proficiency
   a. Knowledge proficiency of chemistry students will be assessed using standardized tests prepared and distributed by the American Chemical Society (ACS). These tests are used nationwide to provide an external and uniform source of assessment, and they are an excellent means of comparing the knowledge proficiency of Coker students to the national average.
   b. Student performance on admission tests such as the Graduate Record Examination (GRE), Pharmacy College Admission Test (PCAT), and Medical College Admission Test (MCAT) will also be used to assess knowledge proficiency.
   c. Additionally, the assessment criteria specified in an internal Chemistry Content Knowledge Scoring Guide rubric [4315] will be applied to students in chemistry courses, and the scores will be reported in the assessment results.
2. The technical know-how and practical skills of students will be assessed based on their performance in laboratory sessions, using rubric [4315]. Students are required to participate in a research project under the direction of a faculty member; their performance (measured in terms of poster presentation, publication, etc.) will be used to assess practical and research skills. Technical know-how will also be assessed in the student’s senior capstone seminar using rubric [4316].

General Education Assessment

What goes wrong: Data Salad

Assessment Design

Choosing an Achievement Scale
Pick a scale that corresponds to the educational progress we expect to see. Example:
0 = Remedial work
1 = Fresh/Soph-level work
2 = Jr/Sr-level work
3 = What we expect of our graduates

For Complex Outcomes:
• Use authentic data that already exists
• Create subjective ratings in context
• Sacrifices reliability for validity
• Don’t have to bribe students to do something extra
• Assess what you observe, not just what you teach!
• Recovers some reliability

Faculty Assessment of Core Skills
• Each syllabus has a simple rubric for at least one skill (not necessarily one that is taught in the course)
• Ratings are entered on a web form each term by course instructors

Analysis and Reporting
• Comparison of Writing vs. Speaking
• Compare Demographics
• Longitudinal

[Chart: Writing Effectiveness in Day program. Average score (0.00 to 2.50) by academic year, 2003-4 to 2006-7, for demographic groups AF F, AF M, WF, WM]

Matthew Effect: Reading and Writing
[Chart: Writing by Class and Library Use. Average writing score (0 to 1.8) by start term, Fall 02 to Fall 05, for students with > Library and < Library use]

What can go wrong: Averages
• Averages compress all the juice out of data
• Without juice, we only have directional info: How high should it be? How do we get there?
• An average is like a shotgun. Use carefully.

A Solution: Min / Max
Student: Tatiana Tolstoy (Student ID xxxxxxx), Senior, Major Code: Art, Observations: 7

Skill                     Min        Max      Avg
Analytical Thinking       Remedial   Jr/Sr    1.00
Creative Thinking         Remedial   Fr/So    0.80
Writing Effectiveness     Fr/So      Jr/Sr    1.17
Speaking Effectiveness    Remedial   Jr/Sr    1.00

Assessment Results
Three of our five senior students attained higher scores in their senior year than in their first year. Unfortunately, two of our students showed improvement during their sophomore and junior years, but the improvement was not sustained into their senior year.

Action tied to results
Change our Capstone course to include the writing of a thesis in the style of a review article. We are hopeful that this assignment will improve at least the effective writing and analytical thinking components of the core skills. A rubric has been developed to help assess these core skills in that writing assignment.

Solution: Use Proportions
[Chart: Day program, Analytical Thinking minimum rating. Percentage of students (0% to 100%) whose minimum is Remedial, Fr-Soph, or Jr-Sr, by academic year, 2003-4 to 2006-7]

Some Effects
• Institutional dashboard
• Core skills rubric in every syllabus
• Provided evidence for QEP
• Creates a language and expectation
Example of program changes:
• We now videotape student presentations
• Writing assignments look for other core skills
• Intentional focus on analytic vs. creative thinking

Last Requests?
highered.blogspot.com

“Through the eyes of an IE evaluator” from Marila D. Palmer, SACS Summer Institute, July 2009
What does an Institutional Effectiveness evaluator look for when reading an IE program report?
• Assessments align with learning outcomes and goals; for example, indicate on a survey which questions align with which outcomes
• An assortment of well-matched assessments: written and oral exams, standardized tests, presentations, capstone projects, portfolios, surveys, interviews, employer satisfaction studies, job/grad placement, case studies
• Goals/outcomes probably don’t change much from year to year; strategies for accomplishing these goals may, and probably should, change
• Outcomes should focus on student learning rather than curricular or program improvement
• Documentation: numbers, percentages, comparative and longitudinal data
• Ongoing documentation (year to year to year)
• Results that have been analyzed, shared, discussed, and acted upon
• Evidence of improvement based on analysis of results
• Easy-to-find and/or highlighted sections that point to proof/evidence of improvement; tell the evaluator exactly where in the document the evidence of proof is
• A little narrative goes a long way; get rid of extraneous information; think of it as a summary
• The report needs to be organized; keep it short and simple (KISS)

How we reviewed the reports
Reports were reviewed with the same IE Assessment Reports Audit form (SACS Standard CS 3.3.1) reproduced earlier.

Assessment Reporting Review Handout
I. My Plans “How-To’s”:
Goal: Student Learning Outcomes: The program identifies expected outcomes, assesses the extent to which it achieves these outcomes, and provides evidence of improvement in student learning outcomes. (SACS 3.3.1, formerly 3.5.1)
Objective: A statement of what you are trying to achieve
• Focus on student learning
• What is to be achieved?
• Somewhat broad and theoretical
Outcomes Statement: What would you like to see as a result of the assessments you use?
• Is observable
• Is measurable (either quantitatively or qualitatively)
Assessment Method: Describe what will be done, observed, and measured to determine whether outcomes and objectives have been met.
• Need more than one method
• Can give both quantitative and qualitative results
• Alignment with outcomes should be clear
• Any forms used for assessment should have a SQUID link, with an indication of which section(s) align to which outcomes
• May change based on results from the previous year

Assessment Reporting Review Handout (continued)
I. My Plans “How-To’s” (continued):
Assessment Results and Evidence: Summarize the results of the assessments, describe the evidence you looked at, and provide an analysis of the results.
• Include findings from the listed assessment methods; include results from majors in all on- and off-site programs
• SQUID links to data/results organized in tables and charts
• Documentation/evidence includes numerical data, information from discussions and interviews, minutes of meetings, etc.
• When citing numerical data or ratings, explain in the narrative what they mean (1 = excellent, etc.)
• Report AND analyze your findings
• Include results from actions you reported you would do this year
• Compare results from last year to this year, or from one cohort to another
• Should not be the same from year to year
Actions and Improvements: What actions were taken to address issues discovered in your findings?
• Report what you did, not what you will do (or word it so that any future actions were discussed and agreed upon)
• If your evidence shows positive results, how can you build on it? If not, what do you try next?
• Actions taken to modify and improve the quality of your program should tie to the analysis of the evidence reported in the previous section
• Should NOT be the same from year to year; do not report “everything is fine and we will continue to monitor…”

Assessment Reporting Review Sheet (continued)
II. Assessment Report “Shoulds”:
1. Should provide actionable information
2. Should have the purpose of improving the quality of the educational program you are offering
3. Should be done for internal use rather than for external use
4. Results should be valuable to you for feedback and improvement rather than solely for reporting purposes
5. Ask “does this assessment method give me any feedback that we can actually use to improve our program?”
6. Should focus on student learning outcomes, not program outcomes or curricular improvement
7. Should not have all actions/improvements in future tense; show that you actually took action rather than that you intend to do so
8. Should connect last year’s “we will do” actions to what was actually done this year, and report what the results were
9. Should be written so that documentation/evidence is easy to find; highlight/identify exactly which part of a document, table, survey, rubric, portfolio, etc. provides proof of results and improvement
10. Should address results from majors in all programs (day, evening, off-campus, distance learning, etc.)

Read your reports & think like a reviewer.
• Would you have a clear understanding of this program’s goals, outcomes, and assessment methods?
• Are there obvious examples and documentation of results of the assessment methods listed?
• Is it clear that results/data have been discussed and analyzed among faculty in the program?
• Have actions been taken (note past tense) to improve the quality of the program based on these results?
• Are there statements that seem to come out of nowhere (no linkage to objective, assessments, or data)?

Guidelines for reports
1. Have learning goals/outcomes been established?
2. Have methods of assessment been identified?
3. Are the assessments aligned with the goals and outcomes? Is it easy to see how?
4. Are results reported using data and other evidence? Is this evidence easy to find and identify? Have results been analyzed? Is there evidence of ongoing assessment from year to year?
5. Is there evidence that results have been used to take actions and make modifications and improvements?

Questions?
Kaye Crook
Associate Professor of Mathematics
Coker College
Hartsville, SC
kcrook@coker.edu

Thank you!
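The Core Skills slides recommend summarizing ratings by each student's minimum and maximum, and by the proportion of students at each minimum level, rather than by averages alone. A minimal sketch of those summaries on the 0-3 achievement scale from the slides might look like the following. It is an illustration under stated assumptions, not the presenters' tool: the function names, the "Graduate" label for level 3, and all student ratings are hypothetical.

```python
"""Minimal sketch: min/max and proportion summaries of core-skill ratings.

Assumptions (hypothetical, for illustration only): ratings are integers on the
0-3 achievement scale from the slides, and each student's ratings for one
skill are collected in a list.
"""

# Achievement scale from the slides; "Graduate" is an assumed short label for
# "What we expect of our graduates".
SCALE = {0: "Remedial", 1: "Fr/So", 2: "Jr/Sr", 3: "Graduate"}


def summarize(ratings):
    """Return (min label, max label, average) for one student's ratings of one skill."""
    average = round(sum(ratings) / len(ratings), 2)
    return SCALE[min(ratings)], SCALE[max(ratings)], average


def min_rating_proportions(students):
    """Return the share of students whose minimum rating falls at each scale level."""
    counts = {level: 0 for level in SCALE}
    for student_ratings in students.values():
        counts[min(student_ratings)] += 1
    total = len(students)
    return {SCALE[level]: counts[level] / total for level in SCALE}


# Hypothetical ratings for one skill (e.g., Analytical Thinking) in one year.
ratings = {
    "Student A": [0, 2, 2],
    "Student B": [1, 1, 2],
    "Student C": [2, 2, 3],
    "Student D": [1, 2, 2],
}

if __name__ == "__main__":
    for name, student_ratings in ratings.items():
        print(name, summarize(student_ratings))
    print(min_rating_proportions(ratings))
```

In this made-up data, Students A and B share the same average (1.33), yet A's minimum is Remedial and B's is Fr/So, which is exactly the information an average hides. Running `min_rating_proportions` once per academic year would give a series like the one on the "Use Proportions" slide.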