Assessment of Student Learning

advertisement

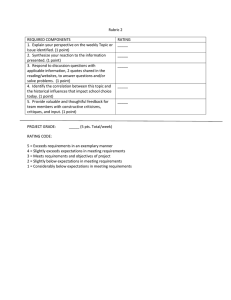

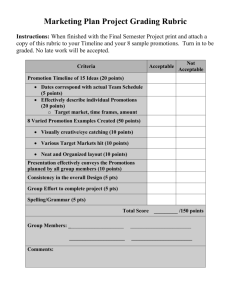

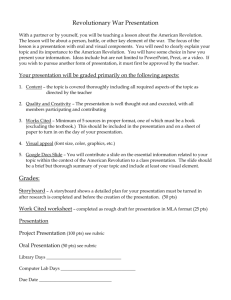

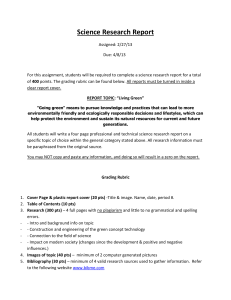

STUDENT LEARNING OUTCOMES ASSESSMENT

Cycle of Assessment
- Institutional & Program/Department Goals
- Course Goals/Intended Outcomes
- Means of Assessment and Criteria for Success
- Summary of Data Collected
- Use of Results to Implement Change

Where to Start?
- Lesson plans
- Syllabi
- Review course descriptions: do they reflect an accurate focus?
- Collaborative effort: are goals for a course shared by all sections?
- Teaching Goals Inventory

When Planning a Lesson
- What do you want students to “get” from the lesson?
- How can this be articulated so that you will be able to know whether the students “got it”?
- Articulate it as a goal or outcome so that you can assess whether students met the goal of the lesson.

Scaffolding
- What are the specific goals of using scaffolding?
- What are your expectations?
- How will you know whether scaffolding worked?

Principles of Good Goals
- Singular
- Measurable
- Observable
- Reasonable
- Use action words

Bloom’s Taxonomy of the Cognitive Domain (level: action verbs)
- Evaluation: compare, judge, defend, appraise
- Synthesis: compile, compose, create, predict
- Analysis: infer, deduce, diagram, outline
- Application: change, demonstrate, operate, solve
- Comprehension: discriminate, estimate, explain, generalize
- Knowledge: define, list, identify, recite

Observable: Use Action Words
Students WILL: “interpret,” “analyze,” “demonstrate,” “compose,” “identify”

What is one goal for tomorrow’s class?
Students will ______________
Is this goal:
- Observable?
- Singular?
- Measurable?

Means of Assessment & Criteria for Success
How do you know:
- Students met the goal?
- And to what extent?
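To make “to what extent?” concrete, a criterion for success can be written as a score threshold plus a target share of the class, then checked against the scores actually collected. The Python sketch below is a minimal illustration under stated assumptions, not a prescribed method: the function names, the 1-5 rating scale, and the sample ratings are all hypothetical.

```python
def share_meeting_threshold(ratings, threshold):
    """Return the fraction (0.0 to 1.0) of ratings at or above threshold."""
    if not ratings:
        raise ValueError("no ratings collected")
    met = sum(1 for r in ratings if r >= threshold)
    return met / len(ratings)


def criterion_met(ratings, threshold, target_share):
    """True if at least target_share of the class reached the threshold."""
    return share_meeting_threshold(ratings, threshold) >= target_share


# Hypothetical ratings for one rubric component, on an assumed 1-5 scale.
organization_ratings = [5, 4, 3, 3, 2, 4, 5, 3, 1, 4]

share = share_meeting_threshold(organization_ratings, threshold=3)
print(f"{share:.0%} rated 3 or better")  # → 80% rated 3 or better
print("Criterion met:", criterion_met(organization_ratings, 3, 0.75))  # → Criterion met: True
```

With these sample ratings, 8 of 10 students (80%) rated 3 or better, so a target of “at least 75% of the class” would be met. Passing a different component’s ratings, threshold, or target share checks a different criterion in the same way.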
Types of Assessment (specifically for classroom assessment)
- Minute papers
- The Muddiest Point
- One-sentence summary
- Directed paraphrasing

Examples of Direct & Indirect Measures of Student Learning at the Course Level
Direct:
- Homework assignments
- Exams and quizzes
- Standardized tests
- Reports
- Class discussion
- Rubric scores for writing or presentations
- Grades directly related to learning goals
Indirect:
- Course evaluations
- Number of hours students spend on homework
- Assessment of Student Learning survey responses
- Grades that are not based on criteria directly related to learning goals

Test Blueprints
- A list of the key learning outcomes to be assessed on a test
- Includes the number of points or test questions aligned to each learning goal

Rubrics
- Criterion-based rating scale
- Establish guidelines for evaluating assignments
- Can be simple or comprehensive
- Should be pre-tested

Simple Checklist Rubric
- Are the important components of an assignment reflected in students’ work?

Simple Rating Scales
- Categorize work: 5 = Excellent, 4 = Good, 3 = Satisfactory, 2 = Fair, 1 = Poor

Detailed Rating Scale
- Provides common performance standards
- Makes scores more consistent
- Can be given to students along with the assignment
- Sets clear expectations: students will know what is expected of them

Example of a Detailed Rating Scale: Research Paper
Each criterion is rated Excellent, Proficient, Fair, or Poor:
- Research Methods (35 pts)
- Organization (30 pts)
- Original Thinking (25 pts)
- Citations (10 pts)

Implementing the Assessment
- Will the means of assessment measure what you want to find out?
- Inform students. Explain why: the goal is to make improvements, not to grade.
- Read/review responses as soon as possible.
- Time: for “quick” assessments, allow 1-2 minutes per student assessment.

Criteria for Success
- Overall: primary, total rating
- Component: secondary, more detailed rating
Identify scores that would prompt further review by faculty.

Establishing Criteria for Success
- A reasonable expectation of student work
- Expressed in specific terms:
  - (overall) “At least 75% of the class will receive a rating of 3.0 or better on the organization component of the research paper rubric”
  - (overall) “The average number of points earned in the first section of the exam will be at least 15”
  - (component) “No more than 5% will earn 5 points or less”

Summary of Data Collected
- What were your results?
- Did you meet, exceed, or fall below your target?
- What do the results tell you? Hypothesize.

Results Should:
- Show to what extent goals were accomplished
- Be linked to the means of assessment chosen
- Be detailed enough to show that the assessment took place
- Justify the “use of results”

Results Should Not:
- Be statistically unlikely
- Match the criteria for success exactly
- Go unused

Informing Students of Results
- What did you learn from students’ responses?
- Mode of informing students: a handout or discussion (e.g., 40% thought “X” was the muddiest point)
- Discuss any changes:
  - that you’ll make
  - that students should make

Using Results
- Is the use of results substantive enough?
- Do you need to modify your pedagogy, lesson plan, goals, targets, or assessment tools?
- Is it detailed enough?
- Has it been communicated to the appropriate parties (your students, department Chair, Dean)?

Using Assessment Results
Table columns: Results | Reason/Hypothesis | Action Taken*
*Table adapted from “Student Learning Assessment: Options and Resources” by the Middle States Commission on Higher Education.

If Results Are Positive
- Celebrate your successes!
- Let colleagues know!
If Results Are Not So Positive
- What needs to be modified?
- Work with colleagues.
- Don’t get discouraged!

Some Tips
- Start off in one class.
- Try it out on yourself first.
- Don’t make it a chore or a burden; keep it simple.
- Take note: the first few times, it will take a little longer.
- Close the loop!

Next Steps
- Continue the assessment cycle.
- Share with your department.
- Create an assessment process.
- Visit the Office of Institutional Research & Assessment with any questions!

Questions?
Kimberly Gargiulo, Coordinator of Assessment
Office of Institutional Research and Assessment
S 752, 220-8331
kgargiulo@bmcc.cuny.edu