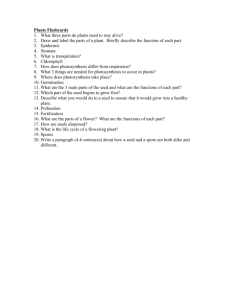

Notes 12

Stat 112 Notes 12

• Today:

– Transformations for fitting Curvilinear Relationships (Chapter 5)

Log Transformation of Both X and Y variables

• It is sometimes useful to transform both the X and Y variables.

• A particularly common transformation is to transform X to log(X) and Y to log(Y):

$$E(\log Y \mid X) = \beta_0 + \beta_1 \log X$$

$$E(Y \mid X) \approx \exp(\beta_0 + \beta_1 \log X)$$
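As a minimal sketch of how such a model can be fit outside JMP, the code below regresses log(Y) on log(X) by ordinary least squares and back-transforms the fitted line. The function names and the small data set are hypothetical and serve only as an illustration; they are not the course data.

```python
import math

def fit_log_log(x, y):
    """Least-squares fit of log(y) = b0 + b1 * log(x); returns (b0, b1)."""
    log_x = [math.log(v) for v in x]
    log_y = [math.log(v) for v in y]
    n = len(log_x)
    mean_lx = sum(log_x) / n
    mean_ly = sum(log_y) / n
    # Standard simple linear regression formulas applied to the logged data
    sxx = sum((lx - mean_lx) ** 2 for lx in log_x)
    sxy = sum((lx - mean_lx) * (ly - mean_ly) for lx, ly in zip(log_x, log_y))
    b1 = sxy / sxx
    b0 = mean_ly - b1 * mean_lx
    return b0, b1

def predict_original_scale(b0, b1, x_value):
    """Back-transform the fitted line to the original Y scale."""
    return math.exp(b0 + b1 * math.log(x_value))

# Hypothetical noise-free data generated from y = 10 * x**(-0.5)
x_demo = [1.0, 2.0, 4.0, 8.0, 16.0]
y_demo = [10.0 * v ** -0.5 for v in x_demo]
b0_demo, b1_demo = fit_log_log(x_demo, y_demo)
print(b0_demo, b1_demo, predict_original_scale(b0_demo, b1_demo, 4.0))
```

Because the demonstration data follow an exact power law, the fit should recover the slope and intercept used to generate them.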

Heart Disease-Wine Consumption Data (heartwine.JMP)

[Figure: Bivariate Fit of Heart Disease Mortality By Wine Consumption, showing the Linear Fit and the Transformed Fit Log to Log. Vertical axis: Heart Disease Mortality; horizontal axis: Wine Consumption.]

[Figure: Residual Plot for Simple Linear Regression Model. Vertical axis: Residual; horizontal axis: Wine Consumption.]

[Figure: Residual Plot for Log-Log Transformed Model. Vertical axis: Residual; horizontal axis: Wine Consumption.]

Evaluating Transformed Y Variable Models

The residuals for a log-log transformation model on the original Y-scale are

$$\hat{e}_i = Y_i - \hat{E}(Y \mid X_i) = Y_i - \exp(b_0 + b_1 \log X_i)$$

The root mean square error and $R^2$ on the original Y-scale are shown in JMP under Fit Measured on Original Scale.

To evaluate models with transformed Y variables and compare their $R^2$'s and root mean square errors to those of models with untransformed Y variables, use the root mean square error and $R^2$ on the original Y-scale for the transformed Y variables.
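The original-scale quantities can be sketched in a few lines. The code assumes the root mean square error divides the sum of squared errors by n - 2 and that $R^2$ is one minus the ratio of the sum of squared errors to the total sum of squares; this is an assumption about how the JMP figures are formed, though it agrees with the reported numbers. The function name and the four data points are hypothetical.

```python
import math

def original_scale_fit(x, y, b0, b1):
    """Return (SSE, RMSE, R^2) on the original Y scale for a log-log fit."""
    # Back-transformed predictions: exp(b0 + b1 * log x_i)
    preds = [math.exp(b0 + b1 * math.log(xi)) for xi in x]
    residuals = [yi - pi for yi, pi in zip(y, preds)]
    sse = sum(e * e for e in residuals)
    n = len(y)
    rmse = math.sqrt(sse / (n - 2))  # assumes two estimated coefficients
    mean_y = sum(y) / n
    sst = sum((yi - mean_y) ** 2 for yi in y)
    r2 = 1.0 - sse / sst
    return sse, rmse, r2

# Hypothetical data; with b0 = 0 and b1 = 1 the prediction equals x itself
sse_demo, rmse_demo, r2_demo = original_scale_fit(
    [1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 6.0], b0=0.0, b1=1.0
)
print(sse_demo, rmse_demo, r2_demo)
```

Computed this way, the numbers are in the units of Y and can be set side by side with the Summary of Fit of an untransformed model.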

Linear Fit

Heart Disease Mortality = 7.6865549 - 0.0760809 Wine Consumption

Summary of Fit

RSquare 0.555872

RSquare Adj 0.528114

Root Mean Square Error 1.618923

Transformed Fit Log to Log

Log(Heart Disease Mortality) = 2.5555519 - 0.3555959 Log(Wine Consumption)

Fit Measured on Original Scale

Sum of Squared Error 41.557487

Root Mean Square Error 1.6116274

RSquare 0.5598656

The log-log transformation provides slightly better predictions than the simple linear regression model (root mean square error on the original scale of 1.612 versus 1.619).

Interpreting Coefficients in Log-Log Models

$$E(\log Y \mid X) = \beta_0 + \beta_1 \log X$$

$$E(Y \mid X) \approx \exp(\beta_0 + \beta_1 \log X)$$

Assuming that

$$E(\log Y \mid \log X) = \beta_0 + \beta_1 \log X$$

satisfies the simple linear regression model assumptions, then

$$\mathrm{Median}(Y \mid X) = \exp(\beta_0)\exp(\beta_1 \log X)$$

Thus,

$$\frac{\mathrm{Median}(Y \mid 2X)}{\mathrm{Median}(Y \mid X)} = \frac{\exp(\beta_0)\exp(\beta_1 \log 2X)}{\exp(\beta_0)\exp(\beta_1 \log X)} = 2^{\beta_1}$$

Thus, a doubling of X is associated with a multiplicative change of $2^{\beta_1}$ in the median of Y.

Transformed Fit Log to Log

Log(Heart Disease Mortality) = 2.5555519 - 0.3555959 Log(Wine Consumption)

Doubling wine consumption is associated with multiplying median heart disease mortality by $2^{-0.356} \approx 0.781$.
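The doubling factor can be checked directly. This short sketch uses the rounded slope -0.356 from the fitted equation; the helper name is hypothetical, and the same function works for any multiple of X because the ratio of medians depends only on that multiple.

```python
def multiplicative_change(b1, x_multiplier):
    """Factor by which the median of Y changes when X is multiplied by x_multiplier."""
    return x_multiplier ** b1

slope = -0.356  # rounded slope from the Transformed Fit Log to Log output
doubling_factor = multiplicative_change(slope, 2.0)
print(round(doubling_factor, 3))
```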

Another interpretation of coefficients in log-log models

For a 1% increase in X,

$$\frac{\mathrm{Median}(Y \mid 1.01X)}{\mathrm{Median}(Y \mid X)} = \frac{\exp(\beta_0)\exp(\beta_1 \log 1.01X)}{\exp(\beta_0)\exp(\beta_1 \log X)} = 1.01^{\beta_1}$$

Because $1.01^{\beta_1} \approx 1 + .01\beta_1$, a 1% increase in X is associated with approximately a $\beta_1$ percent increase in the median (or mean) of Y.

Transformed Fit Log to Log

Log(Heart Disease Mortality) = 2.5555519 - 0.3555959 Log(Wine Consumption)

Increasing wine consumption by 1% is associated with a 0.36% decrease in mean heart disease mortality.

Similarly, a 10% increase in X is associated with approximately a $10\beta_1$ percent increase in the mean of Y.

Increasing wine consumption by 10% is associated with approximately a 3.6% decrease in mean heart disease mortality.

For large percentage changes (e.g., 50%, 100%), this interpretation is not accurate.
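To see where the percentage shortcut stops working, the sketch below compares the exact percentage change in the median with the shortcut for several increases in X, using the slope from the heart disease fit. The function names are hypothetical.

```python
def exact_percent_change(b1, pct_increase_x):
    """Exact percent change in the median of Y for a given percent increase in X."""
    return 100.0 * ((1.0 + pct_increase_x / 100.0) ** b1 - 1.0)

def approx_percent_change(b1, pct_increase_x):
    """Shortcut: a p% increase in X gives roughly a (p * b1)% change in Y."""
    return b1 * pct_increase_x

slope = -0.3555959  # slope from the Transformed Fit Log to Log output
for pct in (1, 10, 50, 100):
    exact = exact_percent_change(slope, pct)
    approx = approx_percent_change(slope, pct)
    print(pct, round(exact, 2), round(approx, 2))
```

The two columns should agree closely at 1%, drift apart by 10%, and differ substantially at 50% and 100%, where the exact form $(1 + p/100)^{\beta_1}$ should be used instead.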

Another Example of Transformations: Y = Count of tree seeds, X = Seed weight (mg)

[Figure: Bivariate Fit of Seed Count By Seed weight (mg). Vertical axis: Seed Count; horizontal axis: Seed weight (mg).]

[Figure: Bivariate Fit of Seed Count By Seed weight (mg), showing the Linear Fit, the Transformed Fit Log to Log, and the Transformed Fit to Log. Vertical axis: Seed Count; horizontal axis: Seed weight (mg).]

Linear Fit

Seed Count = 6751.7179 - 2.1076776 Seed weight (mg)

Summary of Fit

RSquare 0.220603

RSquare Adj 0.174756

Root Mean Square Error 6199.931

Mean of Response 4398.474

Observations (or Sum Wgts) 19

Transformed Fit to Log

Seed Count = 12174.621 - 1672.3962 Log(Seed weight (mg))

Summary of Fit

RSquare 0.566422

RSquare Adj 0.540918

Root Mean Square Error 4624.247

Mean of Response 4398.474

Observations (or Sum Wgts) 19

Transformed Fit Log to Log

Log(Seed Count) = 9.758665 - 0.5670124 Log(Seed weight (mg))

Fit Measured on Original Scale

Sum of Squared Error 161960739

Root Mean Square Error 3086.6004

RSquare 0.8068273

Sum of Residuals 3142.2066

By looking at the root mean square error on the original Y-scale (6199.9 for the linear fit, 4624.2 for the fit to log X, and 3086.6 for the log-log fit), we see that both of the transformations improve upon the untransformed model and that the transformation to log Y and log X is by far the best.
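The comparison can be reproduced from the reported output. The sketch below recomputes the log-log root mean square error from its Sum of Squared Error, assuming n - 2 residual degrees of freedom with the 19 observations listed in the output, and then picks the model with the smallest original-scale error. The function and variable names are hypothetical.

```python
import math

def rmse_from_sse(sse, n_obs, n_coef=2):
    """Root mean square error, assuming residual degrees of freedom n_obs - n_coef."""
    return math.sqrt(sse / (n_obs - n_coef))

# Sum of Squared Error and observation count copied from the JMP output
log_log_rmse = rmse_from_sse(161960739, n_obs=19)

# Root mean square errors on the original Y scale for the three fits
rmse_by_model = {
    "Linear Fit": 6199.931,
    "Transformed Fit to Log": 4624.247,
    "Transformed Fit Log to Log": log_log_rmse,
}
best_model = min(rmse_by_model, key=rmse_by_model.get)
print(round(log_log_rmse, 4), best_model)
```

The recomputed value agrees with the reported 3086.6004, which supports reading the original-scale figure as directly comparable to the Summary of Fit values of the other two models.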

Comparison of Transformations to Polynomials for Tree Data

[Figure: Bivariate Fit of Seed Count By Seed weight (mg), showing the Transformed Fit Log to Log and the Polynomial Fit Degree=6. Vertical axis: Seed Count; horizontal axis: Seed weight (mg).]

Transformed Fit Log to Log

Log(Seed Count) = 9.758665 - 0.5670124*Log(Seed weight (mg))

Fit Measured on Original Scale

Root Mean Square Error 3086.6004

Polynomial Fit Degree=6

Seed Count = 1539.0377 + 2.453857*Seed weight (mg)
 - 0.0139213*(Seed weight (mg)-1116.51)^2
 + 1.2747e-6*(Seed weight (mg)-1116.51)^3
 + 1.0463e-8*(Seed weight (mg)-1116.51)^4
 - 5.675e-12*(Seed weight (mg)-1116.51)^5
 + 8.269e-16*(Seed weight (mg)-1116.51)^6

Summary of Fit

Root Mean Square Error 6138.581

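For the tree data, the log-log transformation is much better than polynomial regression: its root mean square error on the original scale is 3086.6, compared with 6138.6 for the degree-6 polynomial.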

Prediction using the log y/log x transformation

• What is the predicted seed count of a tree whose seeds weigh 50 mg?

• Math trick: exp{log(y)} = y (remember that by log we always mean the natural log, ln), e.g., $e^{\log 10} = 10$.

$$\hat{E}(Y \mid X = 50) = \exp\{\hat{E}(\log Y \mid X = 50)\} = \exp\{\hat{E}(\log Y \mid \log X = \log 50)\} = \exp\{\hat{E}(\log Y \mid \log X = 3.912)\}$$

$$= \exp\{9.7587 - 0.5670 \times 3.912\} = \exp\{7.5406\} = 1882.96$$
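The same prediction can be sketched in code using the unrounded coefficients from the fitted log-log equation. The function name is hypothetical, and the result differs slightly from the hand calculation because that calculation rounds the coefficients and log 50.

```python
import math

def predict_seed_count(seed_weight_mg, b0=9.758665, b1=-0.5670124):
    """Back-transformed prediction exp(b0 + b1 * log(x)) from the log-log fit."""
    return math.exp(b0 + b1 * math.log(seed_weight_mg))

prediction_50mg = predict_seed_count(50.0)
print(round(prediction_50mg, 1))
```

Note that math.log is the natural log, matching the convention used throughout these notes.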