Survey Development Tips

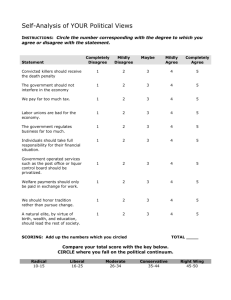

Here are a few key things to remember when developing surveys (adapted from Dillman, D. A., Smyth, J. D., and Christian, L. M. (2008). Internet, Mail, and Mixed-Mode Surveys: The Tailored Design Method, 3rd ed. New York: John Wiley and Sons):

1) Ask exactly as many questions as you need to learn what you want to know. Surveys that are too long lead respondents to quit midway through or give hurried answers. Also, ask only as many questions as you intend to analyze later.

2) Keep the question format the same for similar questions. People get attuned to patterns, and if you change the pattern, they might not answer the questions correctly.

3) Unless there is an excellent reason to put demographic questions at the beginning of your survey (for instance, because later questions branch on them), put them at the end. Some people will not answer demographic questions, and if those questions come first, they might not answer any of your questions.

4) When asking for demographic information, ask only questions that are pertinent to your evaluation needs. For instance, if you survey faculty members who attended a workshop you hosted, you might want to know what department they are in (e.g., education, chemistry) and how long they have been teaching, but it is not relevant to know their religion.

5) Ask questions that will help you identify patterns in the responses. For instance, if you are surveying students, you might ask when they entered the university to learn whether students in different class years give different responses.

6) Be sure that closed-ended response options are mutually exclusive. For instance, age categories might be: <18, 18-34, 35-54, 55+.

7) When developing closed-ended questions with a scaled response, make sure the scale is balanced around an actual or theoretical middle, such as: “strongly disagree, mildly disagree, neither agree nor disagree, mildly agree, strongly agree.” “Not applicable” or “don’t know” should not sit within the scale as a midpoint; place it at the end and do not treat it as part of the scale.

8) Word each question so that it is clear what the respondent is expected to do and what type of answer is expected. A question like “What is the best thing about going to school here?” might yield answers about weekend parties when what you really want to know is “What is the most important factor in your choice of a major?”

9) Be cautious when using “check all that apply” questions, particularly if the list is long. With a check-all-that-apply format, respondents are more likely to check options at the top of the list than at the bottom, or to decide after a while that they have checked “enough” of the response options. Instead, present each response choice as its own item with a yes or no option next to it.

10) Ask questions that don’t require too much thought or calculation on the part of the respondent. A question like “How many visits did you make to the library this past semester?” demands more recall and counting (hopefully) than respondents will spend to give you an accurate answer. If you want to know how many times people do something, use a frame of reference (e.g., last week, last month) that is appropriate for what you want to know and short enough that respondents can work out the right response quickly.

11) Avoid “double-barreled” questions, which ask two questions that may have different answers but allow only one answer choice. For instance, “Please rate the quality of the food and service in the cafeteria on a scale of 1 to 5 where 1 is poor and 5 is excellent” is a double-barreled question: the food may be excellent and the service poor, or vice versa, but the respondent is not given the opportunity to rate these features separately.

12) Avoid leading questions such as “Since we all know that the department has lost its best instructors to other institutions in the past few years, how would you rate the quality of teaching in the department?”

Material adapted from: Brawner, C., Litzler, E., and Slattery, M. “Evaluation as a Tool for Improvement: Building Evaluation Capacity,” in the forthcoming L. Barker and J. M. Cohoon (eds.), Reforming Computer Science at the Undergraduate Level: NCWIT Extension Services. Funded by the National Center for Women and Information Technology and NSF HRD-1203148/1203198/1203174/1203179.
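For surveys that are built or analyzed in software, the advice in tips 6 and 7 can be checked mechanically. The following is a minimal Python sketch and not part of the original tips: the function names `find_bracket_problems` and `mean_scale_score`, the whole-year encoding of the age brackets, and the 1-to-5 coding of the agreement scale are all illustrative assumptions.

```python
# Age brackets from tip 6 as (label, low, high) with inclusive whole-year
# bounds. A high of None means open-ended ("55+"). Assumed encoding.
AGE_BRACKETS = [
    ("<18", 0, 17),
    ("18-34", 18, 34),
    ("35-54", 35, 54),
    ("55+", 55, None),
]


def find_bracket_problems(brackets):
    """Return overlap/gap messages for neighboring brackets.

    An empty list means the brackets are mutually exclusive and leave
    no holes between them.
    """
    problems = []
    ordered = sorted(brackets, key=lambda b: b[1])
    for (label_a, _, high_a), (label_b, low_b, _) in zip(ordered, ordered[1:]):
        if high_a is None or low_b <= high_a:
            problems.append(f"overlap: {label_a} and {label_b}")
        elif low_b > high_a + 1:
            problems.append(f"gap between {label_a} and {label_b}")
    return problems


# Balanced five-point scale from tip 7, coded around the neutral midpoint (3).
# The numeric codes are an assumption made for this sketch.
AGREEMENT_SCALE = {
    "strongly disagree": 1,
    "mildly disagree": 2,
    "neither agree nor disagree": 3,
    "mildly agree": 4,
    "strongly agree": 5,
}
# Offered at the end of the options and kept off the scale entirely.
OFF_SCALE = {"not applicable", "don't know"}


def mean_scale_score(responses):
    """Average the on-scale answers, skipping off-scale ones.

    Returns None when no on-scale answer was given.
    """
    scores = []
    for response in responses:
        key = response.strip().lower()
        if key in OFF_SCALE:
            continue
        scores.append(AGREEMENT_SCALE[key])  # KeyError flags an unknown label
    return sum(scores) / len(scores) if scores else None


if __name__ == "__main__":
    print(find_bracket_problems(AGE_BRACKETS))  # []
    print(find_bracket_problems([("18-35", 18, 35), ("35-54", 35, 54)]))
    print(mean_scale_score(["strongly agree", "mildly agree", "Not applicable"]))  # 4.5
```

Treating “not applicable” as missing instead of coding it as 3 keeps it from being averaged in as if it were a neutral opinion, which is the distinction tip 7 draws.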