Chapter 6, CS5504


Multiprocessors

Speed of execution is a paramount concern, always.

If feasible, the more simultaneous execution that can be done on multiple computers, the better.

Easier to do in the server and embedded processor markets, where the applications and algorithms exhibit natural parallelism

Less so in the desktop market

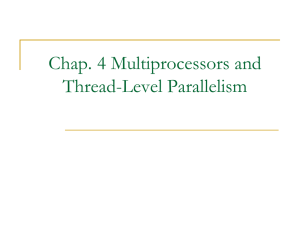

Multiprocessors and Thread-Level Parallelism

Chapter 6 delves deeply into the issues surrounding multiprocessors.

Thread-level parallelism is a necessary adjunct to the study of multiprocessors

Outline

Intro to problems in parallel processing

Taxonomy

MIMDs

Communication

Shared-Memory Multiprocessors

Multicache coherence

Implementation

Performance

Outline - continued

Distributed-Memory Multiprocessors

Synchronization

Coherence protocols

Performance

Atomic operations, spin locks, barriers

Thread-Level Parallelism

Low-Level Issues in Parallel Processing

Consider the following generic code:

y = x + 3

z = 2*x + y

w = w*w

Lock file M

Read file M

Naively splitting up the code between two processors leads to big problems.

Low-Level Issues in Parallel Processing - continued

Processor A

Processor B

y = x + 3

z = 2*x + y

w = w*w

Lock file M

Read file M

Problems: statements must be executed so as not to violate the original sequential semantics of the algorithm (z needs the new value of y), a processor may have to wait on a file, etc.
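To make the ordering problem concrete, here is a minimal Python sketch (our own illustration, not from the text) that describes each assignment as a hypothetical (variable written, variables read) pair and reports which pairs of statements must stay in order. The file lock/read pair is left out for brevity.

```python
# Each statement: (variable written, set of variables read)
STATEMENTS = [
    ("y", {"x"}),        # y = x + 3
    ("z", {"x", "y"}),   # z = 2*x + y
    ("w", {"w"}),        # w = w*w
]

def dependent(earlier, later):
    """True if `later` must wait for `earlier` (RAW, WAR or WAW hazard)."""
    w1, r1 = earlier
    w2, r2 = later
    return w1 in r2 or w2 in r1 or w1 == w2

def dependence_pairs(stmts):
    """All index pairs (i, j), i < j, whose relative order must be preserved."""
    pairs = []
    for i in range(len(stmts)):
        for j in range(i + 1, len(stmts)):
            if dependent(stmts[i], stmts[j]):
                pairs.append((i, j))
    return pairs

print(dependence_pairs(STATEMENTS))  # [(0, 1)]
```

Only the pair (0, 1) conflicts: y = x + 3 and z = 2*x + y must run in order (or on the same processor), while w = w*w could safely be handed to the other processor.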

Low-Level Issues in Parallel Processing - continued

This was a grossly bad example, of course, but the same underlying issues appear in good multiprocessing applications

Two key issues are:

Shared memory (shared variables)

Interprocessor communication (e.g., current shared-variable updates, file locks)

Computation/Communication

“A key characteristic in determining the performance of parallel programs is the ratio of computation to communication.” (bottom of page 546)

“Communication is the costly part of parallel computing” … and also the slow part

A table on page 547 shows this ratio for some DSP calculations, which normally have a good ratio

Computation/Communication – best and worst cases

Problem: add 6 to each component of vector x[n], using three processors A, B, and C.

Best case: give A the first n/3 components, B the next n/3, and C the last n/3. One message goes out at the beginning, and the results are passed back in one message at the end.

Computation/Communication ratio = n/2 (n additions, 2 messages)

Computation/Communication – best and worst cases

Worst case: have processor A add 1 to x[k] and pass it to B, which adds 2 and passes it to C, which adds 3. That is two messages per effective computation, so the Computation/Communication ratio = n/(2n) = 1/2

Of course this is terrible coding, but it makes the point.

Real examples are found on page 547
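The two cases can be counted directly. This minimal Python sketch (function names are hypothetical) performs the same "add 6" work both ways and counts messages exactly as the slides do: two messages in total for the block partition, two per component for the A → B → C pipeline.

```python
def best_case(x):
    """Block partition: A, B and C each get one third of x."""
    n = len(x)
    chunks = [x[i * n // 3:(i + 1) * n // 3] for i in range(3)]  # A, B, C
    result = [v + 6 for chunk in chunks for v in chunk]
    messages = 2            # one message out, one back, counted as on the slide
    return result, n / messages

def worst_case(x):
    """Pipeline: A adds 1, passes to B (adds 2), which passes to C (adds 3)."""
    result, messages = [], 0
    for v in x:
        v += 1              # processor A
        messages += 1       # A -> B
        v += 2              # processor B
        messages += 1       # B -> C
        v += 3              # processor C
        result.append(v)
    return result, len(x) / messages    # n effective additions of 6

x = list(range(12))
print(best_case(x)[1], worst_case(x)[1])  # 6.0 0.5
```

Both functions produce the same vector; only the ratio differs, and it grows with n in the best case while staying fixed at 1/2 in the worst.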

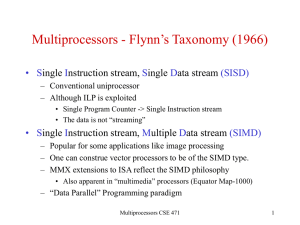

Taxonomy

SISD – single instruction stream, single data stream (uniprocessors)

SIMD – single instruction stream, multiple data streams (vector processors)

MISD – multiple instruction streams, single data stream (no commercial processors of this type have been built to date)

MIMD – multiple instruction streams, multiple data streams

MIMDs

Have emerged as the architecture of choice for general-purpose multiprocessors.

Often built with off-the-shelf microprocessors

Flexible designs are possible

Two Classes of MIMD

Two basic structures will be studied:

Centralized shared-memory multiprocessors

Distributed-memory multiprocessors

Why focus on memory?

Communication or data sharing can be done at several levels in our basic structure

Sharing disks is no problem, and sharing a cache between processors is probably not feasible

Hence our main distinction is whether or not to share memory

Centralized Shared-Memory Multiprocessors

Main memory is shared

This has many advantages

Much faster message passing!

This also forces many issues to be dealt with

Block write contention

Coherent memory

Distributed-Memory Multiprocessors

Each processor has its own memory

An interconnection network carries the messages passed between processors

Communication

Algorithms or applications that can be partitioned completely into independent streams of computation are very rare.

Usually, in order to divide an application among n processors, a great deal of information must be communicated between processors

Examples: which data a processor is working on, how far it has gotten in processing that data, computed values that are needed by another processor, etc.

Message passing, shared memory, and RPCs (remote procedure calls) are all methods of communication for multiprocessors
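A minimal Python sketch contrasting two of these mechanisms, with threads standing in for processors (an assumption; real multiprocessors use hardware messages or a hardware shared address space). The function names are hypothetical; `:=` needs Python 3.8+.

```python
import queue
import threading

def sum_squares_message_passing(values):
    """A worker thread sends each partial result to the main thread as a message."""
    mailbox = queue.Queue()

    def worker():
        for v in values:
            mailbox.put(v * v)       # explicit send
        mailbox.put(None)            # end-of-stream marker

    t = threading.Thread(target=worker)
    t.start()
    total = 0
    while (msg := mailbox.get()) is not None:   # explicit receive
        total += msg
    t.join()
    return total

def sum_squares_shared_memory(values):
    """Two worker threads update one shared variable, protected by a lock."""
    shared = {"total": 0}
    lock = threading.Lock()

    def worker(chunk):
        for v in chunk:
            with lock:               # plain load/store, but it must be synchronized
                shared["total"] += v * v

    half = len(values) // 2
    threads = [threading.Thread(target=worker, args=(part,))
               for part in (values[:half], values[half:])]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return shared["total"]
```

In the message-passing version the communication is explicit (`put`/`get`); in the shared-memory version it is implicit in ordinary reads and writes, which is why synchronization becomes the programmer's problem.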

The Two Biggest Challenges in Using Multiprocessors

Pages 537 and 540

Insufficient parallelism (in the algorithms or code)

Long-latency remote communication

“Much of this chapter focuses on techniques for reducing the impact of long remote communication latency.” (page 540, 2nd paragraph)
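Both challenges can be quantified with two standard formulas: Amdahl's Law for limited parallelism, and an effective-CPI calculation for remote latency. The numbers in this minimal Python sketch are made up for illustration (99.75% parallel code on 100 processors; 0.2% of instructions paying a 400-cycle remote access on top of a base CPI of 0.5).

```python
def amdahl_speedup(parallel_fraction, processors):
    """Amdahl's Law: only the parallel fraction f is sped up by the processors."""
    f = parallel_fraction
    return 1.0 / ((1.0 - f) + f / processors)

def cpi_with_remote(base_cpi, remote_fraction, remote_cycles):
    """Effective CPI when a fraction of instructions stall on a remote access."""
    return base_cpi + remote_fraction * remote_cycles

# Illustrative values only (not taken from the text):
print(round(amdahl_speedup(0.9975, 100), 1))          # 80.2
print(round(cpi_with_remote(0.5, 0.002, 400), 2))     # 1.3
```

Even 0.25% serial code cuts the ideal speedup of 100 to about 80, and a 0.2% remote-access rate raises the CPI from 0.5 to 1.3.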

Advantages of Different Communication Mechanisms

Since this is a key distinction, in terms of both system performance and cost, you should be aware of the comparative advantages.

Know the issues on pages 535-536

SMPs - Shared-Memory Multiprocessors

Often called SMPs (symmetric multiprocessors) rather than centralized shared-memory multiprocessors

We now look at the coherent memory problem

Multiprocessor Cache Coherence – the key problem

| Time | Event | Cache for A | Cache for B | Memory contents for X |
|---|---|---|---|---|
| 0 | | | | 1 |
| 1 | CPU A reads X | 1 | | 1 |
| 2 | CPU B reads X | 1 | 1 | 1 |
| 3 | CPU A stores 0 in X | 0 | 1 | 0 |

The problem is that CPU B is still using the stale value X = 1, whereas CPU A (and memory) now hold X = 0.

Obviously we can’t allow this … but how do we stop it?
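The table can be replayed with a toy model. In this minimal Python sketch (class and function names are hypothetical), memory is updated on every store, as in the table, which behaves like a write-through cache; the optional invalidation step is a simplified stand-in for the snooping protocols that follow.

```python
class Cache:
    """Toy single-level cache in front of a shared memory (dict: address -> value)."""
    def __init__(self, memory):
        self.memory = memory
        self.lines = {}                      # address -> cached copy

    def read(self, addr):
        if addr not in self.lines:           # miss: fetch from memory
            self.lines[addr] = self.memory[addr]
        return self.lines[addr]

    def write(self, addr, value, snoopers=()):
        self.lines[addr] = value
        self.memory[addr] = value            # write-through, matching the table
        for other in snoopers:               # write-invalidate: drop other copies
            other.lines.pop(addr, None)

def replay(invalidate):
    """Replay times 1-3 of the table, then let CPU B read X again."""
    memory = {"X": 1}
    a, b = Cache(memory), Cache(memory)
    a.read("X")                                           # time 1
    b.read("X")                                           # time 2
    a.write("X", 0, snoopers=(b,) if invalidate else ())  # time 3
    return b.read("X")

print(replay(invalidate=False))   # 1 (stale value)
print(replay(invalidate=True))    # 0 (B misses and refetches)
```

Without invalidation, B keeps hitting on its old copy; with it, B's next access misses and picks up the new value from memory.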

Basic Schemes for Enforcing Coherence – Section 6.3

Look over the definitions of coherence and consistency (page 550)

Coherence protocols on page 552: directory-based and snooping

We concentrate on snooping with invalidation, implemented with a write-back cache

Understand the basics in Figures 6.8 and 6.9

Study the finite-state transition diagram on page 557

A Cache Coherence Protocol
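The finite-state behavior of one block in one cache can be sketched as two transition functions, one for the local processor's requests and one for requests snooped from the bus. This is a simplified three-state (invalid / shared / exclusive) write-invalidate, write-back model under our own assumptions (a single block, no replacements, hypothetical request names); compare it against the diagram on page 557 rather than treating it as a transcription.

```python
from enum import Enum

class State(Enum):
    INVALID = "invalid"
    SHARED = "shared"          # clean, read-only; other caches may hold copies
    EXCLUSIVE = "exclusive"    # dirty, read/write; the only valid copy

def on_cpu(state, op):
    """Local processor access to this block -> (new state, bus request or None)."""
    if op == "read":
        if state is State.INVALID:
            return State.SHARED, "read miss"      # fetch the block
        return state, None                        # read hit in S or E
    if op == "write":
        if state is State.EXCLUSIVE:
            return state, None                    # write hit, already the owner
        if state is State.SHARED:
            return State.EXCLUSIVE, "invalidate"  # upgrade: kill other copies
        return State.EXCLUSIVE, "write miss"      # fetch and kill other copies
    raise ValueError(f"unknown op {op!r}")

def on_bus(state, request):
    """Request snooped from another cache -> (new state, must write back?)."""
    if request == "read miss" and state is State.EXCLUSIVE:
        return State.SHARED, True                 # supply dirty data, keep a clean copy
    if request in ("write miss", "invalidate") and state is not State.INVALID:
        return State.INVALID, state is State.EXCLUSIVE
    return state, False
```

Replaying the coherence table: A's write moves A from shared to exclusive and puts an invalidate on the bus, which moves B's copy to invalid, so B's next read misses instead of returning the stale 1. Because the cache is write-back, the dirty data reaches memory only when another cache's miss forces a write-back.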

Performance of Symmetric Shared-Memory Multiprocessors

Comments:

Not an easy topic; as with single processors, definitions can vary

Results of studies are given in Section 6.4

Review the specialized definitions on page 561 first

Coherence misses

True sharing misses

False sharing misses
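To see how coherence misses split into true and false sharing, here is a minimal Python sketch under simplified assumptions of our own: each processor has a private cache that never evicts, a write invalidates all other copies of the block, and a coherence miss counts as true sharing only if another processor wrote the very word being accessed since the copy was invalidated. The function name and trace format are hypothetical.

```python
def classify_misses(trace, words_per_block):
    """trace: list of (proc, op, word), op in {"r", "w"}.
    Returns one label per access: None (hit), "cold", "true sharing" or "false sharing"."""
    valid = set()     # (proc, block) pairs currently cached
    seen = set()      # (proc, block) pairs that were ever loaded
    written = {}      # (proc, block) -> words written by others since invalidation
    labels = []
    for proc, op, word in trace:
        key = (proc, word // words_per_block)
        if key in valid:
            labels.append(None)                      # hit
        else:
            if key not in seen:
                labels.append("cold")
            elif word in written[key]:
                labels.append("true sharing")        # the word we need really changed
            else:
                labels.append("false sharing")       # only a neighboring word changed
            valid.add(key)
            seen.add(key)
            written[key] = set()
        if op == "w":
            for other in seen:
                if other[1] == key[1] and other[0] != proc:
                    valid.discard(other)             # write-invalidate
                    written[other].add(word)
    return labels

# Words 0 and 1 share one block; P1 only ever needs word 1 at first.
trace = [(0, "r", 0), (1, "r", 1), (0, "w", 0), (1, "r", 1), (0, "w", 0), (1, "r", 0)]
print(classify_misses(trace, words_per_block=2))
# ['cold', 'cold', None, 'false sharing', None, 'true sharing']
```

P1's fourth access misses even though nobody touched word 1, purely because it shares a block with word 0: that is false sharing, and it disappears with one word per block.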

Example: CPU execution on a four-processor system

Study Figure 6.13 (page 563) and the accompanying explanation

What is considered in CPU time measurements

Note that these benchmarks include substantial I/O time, which is ignored in the CPU time measurements.

Of course, cache access time is included in the CPU time measurements, since a process is not switched out on a cache access the way it may be on a memory miss or I/O request

L2 hits, L3 hits, and pipeline stalls add time to the execution; these are shown graphically

Commercial Workload Performance

OLTP Performance and L3 Caches

Online transaction processing (OLTP) workloads (part of the commercial benchmark) demand a lot from memory systems. This graph focuses on the impact of L3 cache size

Memory Access Cycles vs. Processor Count

Note the increase in memory access cycles as the processor count increases

This is mainly due to true and false sharing misses, which increase as the processor count increases

Distributed Shared-Memory Architectures

Coherence is again an issue

Study pages 576-577, where some of the disadvantages of allowing hardware to exclude cache coherence are discussed

Directory-Based Cache Coherence Protocols

Just as with a snooping protocol, there are two primary operations that a directory protocol must handle: read misses and writes to shared, clean blocks.

Basics: a directory is added to each node
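A minimal Python sketch of one directory entry handling those two operations. The three block states (uncached, shared, exclusive), the sharer set, and the message names are a common textbook-style model assumed here, not a transcription of the text's diagrams; network delays and replacements are ignored.

```python
from dataclasses import dataclass, field

@dataclass
class DirectoryEntry:
    """Directory state for one memory block at its home node."""
    state: str = "uncached"                      # "uncached", "shared" or "exclusive"
    sharers: set = field(default_factory=set)    # ids of nodes holding a copy

    def read_miss(self, node):
        msgs = []
        if self.state == "exclusive":
            owner = next(iter(self.sharers))
            msgs.append(("fetch", owner))         # owner writes back, keeps a shared copy
        self.state = "shared"
        self.sharers.add(node)
        msgs.append(("data value reply", node))
        return msgs

    def write_miss(self, node):
        """Also covers a write to a shared, clean block."""
        msgs = []
        if self.state == "exclusive":
            owner = next(iter(self.sharers))
            if owner != node:
                msgs.append(("fetch/invalidate", owner))
        elif self.state == "shared":
            msgs += [("invalidate", s) for s in sorted(self.sharers) if s != node]
        self.state = "exclusive"
        self.sharers = {node}
        msgs.append(("data value reply", node))
        return msgs
```

Unlike a snooping bus, the directory sends invalidations only to the nodes recorded in the sharer set, so no broadcast is needed.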

Directory Protocols

We won’t spend as much time in class on these, but look over the state transition diagrams and browse the performance section.

Synchronization

Key ability needed to synchronize in a multiprocessor setup:

The ability to atomically read and modify a memory location

That means no other process (on this or any other processor) can modify the memory location after our process reads it and before our process modifies it.
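The failure this rules out can be replayed deterministically and then fixed with an atomic primitive. A minimal Python sketch: Python exposes no hardware atomic instructions, so `AtomicWord` (a hypothetical class) uses a `threading.Lock` purely to model the indivisible read-modify-write that a hardware fetch-and-increment provides.

```python
import threading

def interleaved_increments():
    """Two processes each do a non-atomic 'read, then write value + 1'."""
    memory = {"count": 0}
    a_val = memory["count"]        # process A reads 0
    b_val = memory["count"]        # process B reads 0 before A has written
    memory["count"] = a_val + 1    # A writes 1
    memory["count"] = b_val + 1    # B writes 1: A's update is lost
    return memory["count"]

class AtomicWord:
    """Memory word with an atomic read-modify-write primitive.
    The lock only models the hardware's atomicity guarantee."""
    def __init__(self, value=0):
        self._value = value
        self._guard = threading.Lock()

    def fetch_and_increment(self):
        with self._guard:          # read and write happen as one indivisible step
            old = self._value
            self._value = old + 1
            return old

    def load(self):
        return self._value

def atomic_increments(n_threads=4, per_thread=1000):
    word = AtomicWord()

    def worker():
        for _ in range(per_thread):
            word.fetch_and_increment()

    workers = [threading.Thread(target=worker) for _ in range(n_threads)]
    for t in workers:
        t.start()
    for t in workers:
        t.join()
    return word.load()

print(interleaved_increments())   # 1, not 2
print(atomic_increments())        # 4000
```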

Synchronization

“These hardware primitives are the basic building blocks that are used to build a wide variety of user-level synchronization operations, including locks and barriers.” (page 591)

Examples of these atomic operations are given on pages 591-593 in both code and text form

Read over and understand both the spin lock and barrier concepts. Problems on the next exam may well include one of these.
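A minimal Python model of both concepts, built on a hypothetical `AtomicInt` whose internal lock stands in for an atomic exchange / fetch-and-add instruction. The spin lock spins on an ordinary read before attempting the exchange (test-and-test-and-set); the barrier is a sense-reversing barrier, so it can be reused from one phase to the next. This illustrates the ideas; it is not the book's code.

```python
import threading
import time

class AtomicInt:
    """A memory word with atomic exchange and fetch-and-add.
    The internal lock only models the hardware's atomicity guarantee."""
    def __init__(self, value=0):
        self._value = value
        self._guard = threading.Lock()

    def load(self):
        return self._value                 # ordinary read

    def store(self, value):
        self._value = value                # ordinary write

    def exchange(self, new):
        with self._guard:
            old, self._value = self._value, new
            return old

    def fetch_add(self, delta):
        with self._guard:
            old = self._value
            self._value = old + delta
            return old

class SpinLock:
    """Test-and-test-and-set spin lock: 0 = free, 1 = held."""
    def __init__(self):
        self._word = AtomicInt(0)

    def acquire(self):
        while True:
            while self._word.load() == 1:  # spin on a plain read while held
                time.sleep(0)              # Python-specific: let other threads run
            if self._word.exchange(1) == 0:
                return                     # we swapped 0 -> 1, so the lock is ours

    def release(self):
        self._word.store(0)

class SenseReversingBarrier:
    """Barrier for n threads that can be reused phase after phase."""
    def __init__(self, n):
        self._n = n
        self._count = AtomicInt(0)
        self._release_sense = 0            # shared flag flipped by the last arrival
        self._local = threading.local()    # each thread's private sense

    def wait(self):
        my_sense = 1 - getattr(self._local, "sense", 0)
        self._local.sense = my_sense
        if self._count.fetch_add(1) == self._n - 1:   # last thread to arrive
            self._count.store(0)           # reset before releasing the others
            self._release_sense = my_sense
        else:
            while self._release_sense != my_sense:
                time.sleep(0)              # spin until the last arrival flips the flag
```

Spinning on `load()` rather than on `exchange()` matters on real hardware: an exchange is a write, so every attempt would invalidate the lock's block in all other caches, whereas a plain read spins on a locally cached copy.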

Synchronization Examples

Check out the examples on pages 596 and 603-604. They bring out key points in the operation of multiprocessor synchronization that you need to know.

Threads

Threads are “lightweight processes”

Thread switches are much faster than process (full context) switches

For this study (page 608), a thread is:

Thread = {copy of registers, separate PC, separate page table}

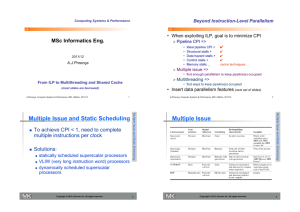

Threads and SMT

SMT (simultaneous multithreading) exploits TLP (thread-level parallelism) at the same time as it exploits ILP (instruction-level parallelism)

And why is SMT good?

It turns out that most modern multiple-issue processors have more functional-unit parallelism available than a single thread can effectively use (see Section 3.6 for more; basically, these processors allow multiple instructions to issue in a single clock cycle, and superscalar and VLIW are the two basic flavors, but more on this later in the course).
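A back-of-the-envelope Python sketch of that argument: count how many issue slots of a hypothetical 4-wide processor get filled when one thread runs alone versus when a second thread can use the slots the first leaves empty. The per-cycle ready counts are made-up numbers, and the fixed-priority fill policy is our simplification; real SMT fetch and issue policies are more involved.

```python
def issue_slots_used(ready_per_cycle, width):
    """Count issue slots filled over a run of cycles.

    ready_per_cycle[c][t] is the number of independent instructions thread t
    could issue in cycle c; slots are filled thread by thread until `width`
    slots are used (a simple fixed-priority policy, assumed here).
    """
    used = 0
    for ready in ready_per_cycle:
        free = width
        for r in ready:
            take = min(free, r)
            used += take
            free -= take
    return used

WIDTH = 4
one_thread = [[1], [0], [2], [1]]                 # hypothetical ILP per cycle
two_threads = [[1, 2], [0, 3], [2, 1], [1, 1]]    # same thread 0 plus a thread 1
total_slots = WIDTH * len(one_thread)
print(issue_slots_used(one_thread, WIDTH), "of", total_slots)    # 4 of 16
print(issue_slots_used(two_threads, WIDTH), "of", total_slots)   # 11 of 16
```

With these numbers the lone thread leaves 12 of 16 slots idle; the second thread fills most of them without needing any extra ILP from the first.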