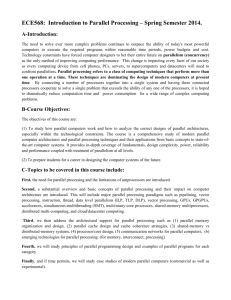

Chap. 4 Multiprocessors and Thread-Level Parallelism

advertisement

Chap. 4 Multiprocessors and Thread-Level Parallelism Uniprocessor performance Performance (vs. VAX-11/780) 10000 From Hennessy and Patterson, Computer Architecture: A Quantitative Approach, 4th edition, October, 2006 ??%/year 1000 52%/year 100 10 25%/year 1 1978 1980 1982 1984 1986 1988 1990 1992 1994 1996 1998 2000 2002 2004 2006 • VAX : 25%/year 1978 to 1986 • RISC + x86: 52%/year 1986 to 2002 • RISC + x86: ??%/year 2002 to present 2 From ILP to TLP & DLP (Almost) All microprocessor companies moving to multiprocessor systems Single processors gain performance by exploiting instruction level parallelism (ILP) Multiprocessors exploit either: Thread level parallelism (TLP), or Data level parallelism (DLP) What’s the problem? 3 From ILP to TLP & DLP (cont.) We’ve got tons of infrastructure for singleprocessor systems Algorithms, languages, compilers, operating systems, architectures, etc. These don’t exactly scale well Multiprocessor design: not as simple as creating a chip with 1000 CPUs Task scheduling/division Communication Memory issues Even programming moving from 1 to 2 CPUs is extremely difficult 4 Why Multiprocessors? Slowdown in uniprocessor performance arising from diminishing returns in exploiting ILP, combined with growing concern on power Growth in data-intensive applications Growing interest in servers, server perf. Increasing desktop perf. less important Data bases, file servers, … Outside of graphics Improved understanding in how to use multiprocessors effectively Especially server where significant natural TLP Multiprocessing Flynn’s Taxonomy of Parallel Machines SISD: Single I Stream, Single D Stream How many Instruction streams? How many Data streams? A uniprocessor SIMD: Single I, Multiple D Streams Each “processor” works on its own data But all execute the same instrs in lockstep E.g. a vector processor or MMX =>Data Level Parallelism Flynn’s Taxonomy MISD: Multiple I, Single D Stream Not used much MIMD: Multiple I, Multiple D Streams Each processor executes its own instructions and operates on its own data This is your typical off-the-shelf multiprocessor (made using a bunch of “normal” processors) Includes multi-core processors, Clusters, SMP servers Thread Level Parallelism MIMD popular because Flexible: can run both N programs, or work on 1 multithreaded program together Cost-effective: same processor in desktop & MIMD Back to Basics 1. “A parallel computer is a collection of processing elements that cooperate and communicate to solve large problems fast.” Parallel Architecture = Computer Architecture + Communication Architecture 2 classes of multiprocessors WRT memory: Centralized Memory Multiprocessor • • 2. < few dozen processor chips (and < 100 cores) in 2006 Small enough to share single, centralized memory Physically Distributed-Memory multiprocessor • • Larger number chips and cores than 100. BW demands Memory distributed among processors Centralized Shared Memory Multiprocessors Distributed Memory Multiprocessors Centralized-Memory Machines Also “Symmetric Multiprocessors” (SMP) “Uniform Memory Access” (UMA) All memory locations have similar latencies Data sharing through memory reads/writes P1 can write data to a physical address A, P2 can then read physical address A to get that data Problem: Memory Contention All processor share the one memory Memory bandwidth becomes bottleneck Used only for smaller machines Most often 2,4, or 8 processors Shared Memory Pros and Cons Pros Communication happens automatically More natural way of programming Easier to write correct programs and gradually optimize them No need to manually distribute data (but can help if you do) Cons Needs more hardware support Easy to write correct, but inefficient programs (remote accesses look the same as local ones) Distributed-Memory Machines Two kinds Distributed Shared-Memory (DSM) Message-Passing All processors can address all memory locations Data sharing like in SMP Also called NUMA (non-uniform memory access) Latencies of different memory locations can differ (local access faster than remote access) A processor can directly address only local memory To communicate with other processors, must explicitly send/receive messages Also called multicomputers or clusters Most accesses local, so less memory contention (can scale to well over 1000 processors) Message-Passing Machines A cluster of computers Each with its own processor and memory An interconnect to pass messages between them Producer-Consumer Scenario: Two types of send primitives P1 produces data D, uses a SEND to send it to P2 The network routes the message to P2 P2 then calls a RECEIVE to get the message Synchronous: P1 stops until P2 confirms receipt of message Asynchronous: P1 sends its message and continues Standard libraries for message passing: Most common is MPI – Message Passing Interface Message Passing Pros and Cons Pros Simpler and cheaper hardware Explicit communication makes programmers aware of costly (communication) operations Cons Explicit communication is painful to program Requires manual optimization If you want a variable to be local and accessible via LD/ST, you must declare it as such If other processes need to read or write this variable, you must explicitly code the needed sends and receives to do this Challenges of Parallel Processing First challenge is % of program inherently sequential (limited parallelism available in programs) Suppose 80X speedup from 100 processors. What fraction of original program can be sequential? a. b. c. d. 10% 5% 1% <1% 7/11/2016 16 Amdahl’s Law Answers Speedup overall 1 1 Fraction enhanced 80 Fraction parallel Speedup parallel 1 1 Fraction parallel 80 (1 Fraction parallel Fraction parallel 100 Fraction parallel ) 1 100 79 80 Fraction parallel 0.8 Fraction parallel Fraction parallel 79 / 79.2 99.75% 17 Challenges of Parallel Processing Second challenge is long latency to remote memory (High cost of communications) delay ranges from 50 clock cycles to 1000 clock cycles. Suppose 32 CPU MP, 2GHz, 200 ns remote memory, all local accesses hit memory hierarchy and base CPI is 0.5. (Remote access = 200/0.5 = 400 clock cycles.) What is performance impact if 0.2% instructions involve remote access? a. b. c. 1.5X 2.0X 2.5X 7/11/2016 CPI Equation CPI = Base CPI + Remote request rate x Remote request cost CPI = 0.5 + 0.2% x 400 = 0.5 + 0.8 = 1.3 No communication is 1.3/0.5 or 2.6 faster than 0.2% instructions involve local access 19 Challenges of Parallel Processing Application parallelism primarily via new algorithms that have better parallel performance Long remote latency impact both by architect and by the programmer For example, reduce frequency of remote accesses either by 1. 2. Caching shared data (HW) Restructuring the data layout to make more accesses local (SW) 7/11/2016 Cache Coherence Problem Shared memory easy with no caches P1 writes, P2 can read Only one copy of data exists (in memory) Caches store their own copies of the data Those copies can easily get inconsistent Classic example: adding to a sum P1 loads allSum, adds its mySum, stores new allSum P1’s cache now has dirty data, but memory not updated P2 loads allSum from memory, adds its mySum, stores allSum P2’s cache also has dirty data Eventually P1 and P2’s cached data will go to memory Regardless of write-back order, the final value ends up wrong Small-Scale—Shared Memory Caches serve to: Increase bandwidth versus bus/memory Reduce latency of access Valuable for both private data and shared data What about cache consistency? Time 0 1 2 3 Event $A $B X (memory) 1 1 CPU A reads X CPU B reads X CPU A stores 0 into X 1 1 1 1 0 1 0 •Read and write a single memory location (X) by two processors (A and B) •Assume Write-through cache 22 Cache coherence problem Time 0 1 2 3 Event $A $B X (memory) 1 1 CPU A reads X CPU B reads X CPU A stores 0 into X 1 1 1 1 0 1 0 23 Example Cache Coherence Problem P2 P1 u=? $ P3 3 u=? 4 $ 5 $ u :5 u= 7 u :5 I/O devices 1 u:5 2 Memory Processors see different values for u after event 3 With write back caches, value written back to memory depends on which cache flushes or writes back value Processes accessing main memory may see very stale value Unacceptable for programming, and it’s frequent! 7/11/201624 Cache Coherence Definition A memory system is coherent if 1. A read by a processor P to a location X that follows a write by P to X, with no writes of X by another processor occurring between the write and read by P, always returns the value written by P. 2. If P1 writes to X and P2 reads X after a sufficient time, and there are no other writes to X in between, P2’s read returns the value written by P1’s write. 3. Preserves program order any write to an address must eventually be seen by all processors Writes to the same location are serialized: two writes to location X are seen in the same order by all processors. preserves causality Maintaining Cache Coherence Hardware schemes Shared Caches Snooping Trivially enforces coherence Not scalable (L1 cache quickly becomes a bottleneck) Every cache with a copy of data also has a copy of sharing status of block, but no centralized state is kept Needs a broadcast network (like a bus) to enforce coherence Directory Sharing status of a block of physical memory is kept in just one location, the directory Can enforce coherence even with a point-to-point network Snoopy Cache-Coherence Protocols State Address Data Pn P1 Bus snoop $ $ Mem I/O devices Cache-memory transaction Cache Controller “snoops” all transactions on the shared medium (bus or switch) relevant transaction if for a block it contains take action to ensure coherence invalidate, update, or supply value depends on state of the block and the protocol Either get exclusive access before write via write invalidate or update all copies on write 27 Example: Write-thru Invalidate P2 P1 u=? $ P3 3 u=? 4 $ 5 $ u :5 u= 7 u :5 I/O devices 1 u:5 2 u=7 Memory Must invalidate before step 3 Write update uses more broadcast medium BW all recent MPUs use write invalidate 7/11/2016 Proce ssor Bus Activity CPU A Cache reads miss for X X CPU B Cache reads miss for X X CPU A Indalidati stores on for X 1 into X CPU B Cache reads miss for X X $A $B 0 0 0 0 0 1 1 X (memory) 0 0 1 1 29