tutorial 4 (Near-Duplicates Detection)


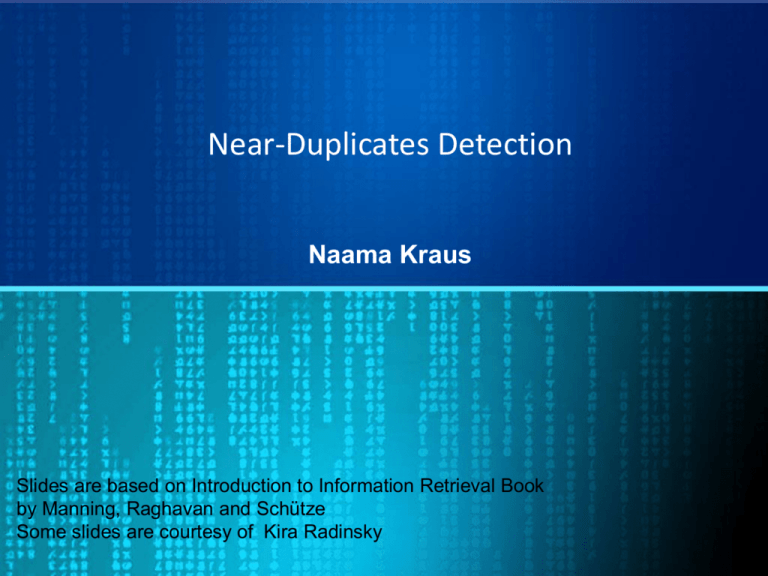

Near-Duplicates Detection

Naama Kraus

Slides are based on the book Introduction to Information Retrieval by Manning, Raghavan and Schütze

Some slides are courtesy of Kira Radinsky

Why duplicate detection?

• About 30-40% of the pages on the Web are (near) duplicates of other pages.

– E.g., mirror sites

• Search engines try to avoid indexing duplicate pages

– Save storage

– Save processing time

– Avoid returning duplicate pages in search results

• Improved user’s search experience

• The goal: detect duplicate pages

Exact-duplicates detection

• A naïve approach: detect exact duplicates

– Map each page to a fingerprint, e.g. a 64-bit hash of its content

– If two web pages have equal fingerprints, check whether their content is actually equal
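A minimal sketch of the fingerprint-then-verify idea, assuming pages are available as strings. The helper names `fingerprint64` and `exact_duplicate_groups` are hypothetical, and truncating SHA-256 to 8 bytes is only one illustrative way to obtain a 64-bit fingerprint.

```python
import hashlib
from collections import defaultdict

def fingerprint64(text):
    """64-bit fingerprint: first 8 bytes of SHA-256 (an illustrative choice)."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")

def exact_duplicate_groups(pages):
    """pages: {page_id: content}. Returns groups of ids with identical content."""
    buckets = defaultdict(list)
    for page_id, text in pages.items():
        buckets[fingerprint64(text)].append(page_id)

    groups = []
    for ids in buckets.values():
        # Equal fingerprints only suggest equality; confirm by comparing content.
        by_content = defaultdict(list)
        for page_id in ids:
            by_content[pages[page_id]].append(page_id)
        groups.extend(g for g in by_content.values() if len(g) > 1)
    return groups
```

Only pages that land in the same fingerprint bucket are compared, so the expensive content check runs on a small number of candidates.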

Near-duplicates

• What about near-duplicates?

– Pages that are almost identical.

– Common on the Web. E.g., only date differs.

– Eliminating near duplicates is desired!

• The challenge

– How to efficiently detect near duplicates?

– Exhaustively comparing all pairs of web pages wouldn’t scale.

Shingling

• The k-shingles of a document d are defined as the set of all consecutive sequences of k terms in d

– k is a positive integer

• E.g., the 4-shingles of “My name is Inigo Montoya. You killed my father. Prepare to die”:

{

my name is inigo,

name is inigo montoya,

is inigo montoya you,

inigo montoya you killed,

montoya you killed my,

you killed my father,

killed my father prepare,

my father prepare to,

father prepare to die

}
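The shingle set above can be reproduced with a few lines of Python. This is a sketch under one assumption not stated on the slide: terms are obtained by lowercasing the text and dropping punctuation, which matches how the example shingles are written. The function name `shingles` is hypothetical.

```python
import re

def shingles(text, k=4):
    """Return the set of k-shingles (k consecutive terms) of text."""
    # Assumed tokenization: lowercase word characters, punctuation dropped.
    terms = re.findall(r"\w+", text.lower())
    return {" ".join(terms[i:i + k]) for i in range(len(terms) - k + 1)}

quote = "My name is Inigo Montoya. You killed my father. Prepare to die"
for s in sorted(shingles(quote)):
    print(s)
```

The quote has 12 terms, so it yields 12 - 4 + 1 = 9 shingles, all distinct.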

Computing Similarity

• Intuition: two documents are near-duplicates if their shingle sets are ‘nearly the same’.

• Measure similarity using Jaccard coefficient

– Degree of overlap between two sets

• Denote by S(d) the set of shingles of document d

• J(S(d1),S(d2)) = |S(d1) ∩ S(d2)| / |S(d1) ∪ S(d2)|

• If J exceeds a preset threshold (e.g. 0.9), declare d1, d2 near duplicates.

• Issue: computation is costly and done pairwise

– How can we compute Jaccard efficiently?
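For reference, the direct (non-optimized) computation looks as follows. This is a small sketch; the names `jaccard` and `near_duplicates` are hypothetical, and treating two empty sets as identical is a convention assumed here, not something the slides specify.

```python
def jaccard(a, b):
    """Jaccard coefficient |a ∩ b| / |a ∪ b| of two collections viewed as sets."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0  # assumed convention: two empty sets count as identical
    return len(a & b) / len(a | b)

def near_duplicates(s1, s2, threshold=0.9):
    """Declare near-duplicates when the coefficient exceeds the threshold."""
    return jaccard(s1, s2) > threshold

print(jaccard({1, 2, 3, 4}, {2, 3, 4, 5}))  # 3 shared of 5 total -> 0.6
```

Running this for every pair of documents is the quadratic cost that the following slides aim to avoid.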

Hashing shingles

• Map each shingle into a hash value integer

– Over a large space, say 64 bits

• H(di) denotes the set of hash values derived from S(di)

• Need to detect pairs whose sets H() have a large overlap

– How to do this efficiently? See the next slides.
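One possible way to map shingles to 64-bit integers is shown below. The slides do not name a hash function; BLAKE2b with an 8-byte digest is an assumption made for illustration, and `hash64` and `hashed_shingles` are hypothetical names.

```python
import hashlib

def hash64(shingle):
    """Map a shingle (string) to an integer in [0, 2^64)."""
    digest = hashlib.blake2b(shingle.encode("utf-8"), digest_size=8).digest()
    return int.from_bytes(digest, "big")

def hashed_shingles(shingle_set):
    """H(d): the set of hash values derived from the shingle set S(d)."""
    return {hash64(s) for s in shingle_set}
```

Working with fixed-size integers instead of strings keeps the later permutation and min steps cheap.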

Permuting

• Let p be a random permutation over the hash value space

• Let P(di) denote the set of permuted hash values in H(di)

• Let xi be the smallest integer in P(di)
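A truly random permutation of a 64-bit space cannot be stored explicitly, so the sketch below substitutes an affine map x -> (a*x + b) mod 2^64 with odd a. That map is a bijection on the hash space, but it is only a stand-in for a uniformly random permutation; this substitution and the names `random_permutation` and `min_hash` are assumptions, not part of the slides.

```python
import random

MASK64 = (1 << 64) - 1

def random_permutation(rng):
    """Return a bijection p on [0, 2^64): x -> (a*x + b) mod 2^64, a odd."""
    a = rng.getrandbits(64) | 1  # an odd multiplier is invertible mod 2^64
    b = rng.getrandbits(64)
    return lambda x: (a * x + b) & MASK64

def min_hash(hashes, p):
    """x_i: the smallest value in P(d_i), the permuted image of H(d_i)."""
    return min(p(h) for h in hashes)
```

Usage: build `p = random_permutation(random.Random(0))` once and apply the same `p` to every document, so that the resulting minima are comparable.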

Illustration

[Figure: the 64-bit hash values H(shingles) of Document 1 shown on the number line from 0 to 2^64; the values are permuted on the number line with p; the min value is picked.]

Key Theorem

• Theorem: J(S(di),S(dj)) = P(xi = xj)

– xi, xj are computed with the same permutation

• Intuition: if the shingle sets of two documents are ‘nearly the same’ and we randomly permute, then there is a high probability that the minimal values are equal.

Proof (1)

• View sets S1,S2 as columns of a matrix A

– one row for each element in the universe.

– aij = 1 indicates presence of item i in set j

• Example

| Row | S1 | S2 |
|-----|----|----|
| 1   | 0  | 1  |
| 2   | 1  | 0  |
| 3   | 1  | 1  |
| 4   | 0  | 0  |
| 5   | 1  | 1  |
| 6   | 0  | 1  |

Jaccard(S1,S2) = 2/5 = 0.4

Proof (2)

• Let p be a random permutation of the rows of A

• Denote by P(Sj) the column that results from applying p to the j-th column

• Let xi be the index of the first row in which the column P(Si) has a 1

| Row | P(S1) | P(S2) |
|-----|-------|-------|
| 1   | 0     | 1     |
| 2   | 1     | 0     |
| 3   | 1     | 1     |
| 4   | 0     | 0     |
| 5   | 1     | 1     |
| 6   | 0     | 1     |

Proof (3)

• For columns Si, Sj, four types of rows

| Row type | Si | Sj |
|----------|----|----|
| A        | 1  | 1  |
| B        | 1  | 0  |
| C        | 0  | 1  |
| D        | 0  | 0  |

• Let A = # of rows of type A (and similarly B, C for types B, C)

• Clearly, J(S1,S2) = A/(A+B+C)

Proof (4)

• Previous slide: J(S1,S2) = A/(A+B+C)

• Claim: P(xi=xj) = A/(A+B+C)

• Why ?

– Look down columns Si, Sj until the first non-type-D row

• I.e., look for xi or xj (the smaller one, or both if they are equal)

– xi = xj ⟺ this row is a type A row

– As we picked a random permutation, each of the A+B+C non-type-D rows is equally likely to come first, so the probability of a type A row is A/(A+B+C)

• P(xi=xj) = J(S1,S2)
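The claim can be checked exhaustively on a small case. The sketch below enumerates all 6! = 720 row permutations of the two example columns from Proof (1) (read as S1 = 0,1,1,0,1,0 and S2 = 1,0,1,0,1,1) and compares the exact collision probability with the Jaccard coefficient. The function names are hypothetical.

```python
from fractions import Fraction
from itertools import permutations

S1 = [0, 1, 1, 0, 1, 0]
S2 = [1, 0, 1, 0, 1, 1]

def first_one(column, order):
    """Position, in the permuted row order, of the first row holding a 1."""
    for pos, row in enumerate(order):
        if column[row]:
            return pos
    return None  # column has no 1 at all

def collision_probability(c1, c2):
    """Exact P(x1 = x2) over all permutations of the rows."""
    orders = list(permutations(range(len(c1))))
    hits = sum(first_one(c1, o) == first_one(c2, o) for o in orders)
    return Fraction(hits, len(orders))

def jaccard(c1, c2):
    """A / (A + B + C) computed from the 0/1 columns."""
    both = sum(1 for a, b in zip(c1, c2) if a and b)
    either = sum(1 for a, b in zip(c1, c2) if a or b)
    return Fraction(both, either)

print(collision_probability(S1, S2), jaccard(S1, S2))  # 2/5 2/5
```

Exhaustive enumeration is feasible only for tiny universes; it serves here purely as a sanity check of the argument.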

Sketches

• Thus, our Jaccard coefficient test is probabilistic

– Need to estimate P(xi=xj)

• Method:

– Pick k (~200) random row permutations

– Sketch(di) = list of xi values, one for each permutation

• List is of length k

• Jaccard estimation:

– Fraction of permutations where sketch values agree

– | Sketch(di) ∩ Sketch(dj) | / k, where the intersection counts the positions (permutations) at which the two sketches hold the same value
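The method can be sketched as follows, assuming documents are already given as sets of 64-bit hash values. As a stand-in for random permutations, each of the k "permutations" is an affine map (a*x + b) mod 2^64 with odd a; this family is a bijection but only approximates a uniformly random permutation, so the estimate may carry some bias. All names below are hypothetical.

```python
import random

MASK64 = (1 << 64) - 1

def make_permutations(k=200, seed=0):
    """k parameter pairs (a, b) describing maps x -> (a*x + b) mod 2^64."""
    rng = random.Random(seed)
    return [(rng.getrandbits(64) | 1, rng.getrandbits(64)) for _ in range(k)]

def sketch(hashes, perms):
    """Sketch(d): the min permuted value under each of the k permutations."""
    return [min((a * h + b) & MASK64 for h in hashes) for a, b in perms]

def estimate_jaccard(sk1, sk2):
    """Fraction of positions at which the two sketches agree."""
    agree = sum(1 for x, y in zip(sk1, sk2) if x == y)
    return agree / len(sk1)
```

Both sketches must be built with the same list of permutations; comparing two documents then costs k integer comparisons regardless of document length.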

Example

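| Row | S1 | S2 | S3 |
|-----|----|----|----|
| R1  | 1  | 0  | 1  |
| R2  | 0  | 1  | 1  |
| R3  | 1  | 0  | 0  |
| R4  | 1  | 0  | 1  |
| R5  | 0  | 1  | 0  |

Sketches

| Permutation      | S1 | S2 | S3 |
|------------------|----|----|----|
| Perm 1 = (12345) | 1  | 2  | 1  |
| Perm 2 = (54321) | 4  | 5  | 4  |
| Perm 3 = (34512) | 3  | 5  | 4  |

Similarities

| Pair       | 1-2 | 1-3 | 2-3 |
|------------|-----|-----|-----|
| Similarity | 0/3 | 2/3 | 0/3 |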
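The example can be reproduced directly. In this sketch each permutation lists the row labels in the order they are visited, and the sketch value is the label of the first visited row that holds a 1, which is the reading that matches the numbers in the example. The variable and function names are hypothetical.

```python
from itertools import combinations

columns = {
    "S1": [1, 0, 1, 1, 0],
    "S2": [0, 1, 0, 0, 1],
    "S3": [1, 1, 0, 1, 0],
}
perms = [(1, 2, 3, 4, 5), (5, 4, 3, 2, 1), (3, 4, 5, 1, 2)]

def sketch(column, perms):
    """Per permutation: label of the first row, in visiting order, with a 1."""
    return [next(r for r in order if column[r - 1]) for order in perms]

sketches = {name: sketch(col, perms) for name, col in columns.items()}
similarity = {
    (a, b): sum(x == y for x, y in zip(sketches[a], sketches[b])) / len(perms)
    for a, b in combinations(columns, 2)
}

print(sketches)    # {'S1': [1, 4, 3], 'S2': [2, 5, 5], 'S3': [1, 4, 4]}
print(similarity)  # S1-S2: 0.0, S1-S3: 2/3, S2-S3: 0.0
```

With only three permutations the estimate is coarse: the true Jaccard coefficient of S1 and S3 is 2/4 = 0.5, while the sketch estimate is 2/3.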

Algorithm for Clustering Near-Duplicate Documents

1. Compute the sketch of each document

2. From each sketch, produce a list of <shingle, docID> pairs

3. Group all pairs by shingle value

4. For any shingle that is shared by more than one document, output a triplet <smaller-docID, larger-docID, 1> for each pair of docIDs sharing that shingle

5. Sort and aggregate the list of triplets, producing final triplets of the form <smaller-docID, larger-docID, # common shingles>

6. Join any pair of documents whose number of common shingles exceeds a chosen threshold using a “Union-Find” algorithm

7. Each resulting connected component of the UF algorithm is a cluster of near-duplicate documents

Implementation nicely fits the “map-reduce” programming paradigm
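The steps above can be sketched in a single process as follows. This is an in-memory illustration, not a map-reduce implementation; it assumes sketches are given as lists of values per docID and treats each sketch value as the "shingle" of steps 2 to 5. The function name `cluster_near_duplicates` is hypothetical.

```python
from collections import defaultdict
from itertools import combinations

def cluster_near_duplicates(sketches, threshold):
    """sketches: {doc_id: list of sketch values}. Returns clusters as sets."""
    # Steps 2-3: <value, docID> pairs grouped by value.
    docs_by_value = defaultdict(set)
    for doc_id, values in sketches.items():
        for v in set(values):
            docs_by_value[v].add(doc_id)

    # Steps 4-5: one count per shared value for each <smaller, larger> pair.
    common = defaultdict(int)
    for docs in docs_by_value.values():
        for a, b in combinations(sorted(docs), 2):
            common[(a, b)] += 1

    # Step 6: union-find with path halving.
    parent = {d: d for d in sketches}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for (a, b), n in common.items():
        if n > threshold:
            parent[find(a)] = find(b)

    # Step 7: connected components with more than one member.
    clusters = defaultdict(set)
    for d in sketches:
        clusters[find(d)].add(d)
    return [c for c in clusters.values() if len(c) > 1]
```

Grouping by value means that only documents sharing at least one sketch value are ever paired, which avoids comparing all pairs of documents.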

Implementation Trick

• Permuting the universe even once is prohibitive

• Row hashing

– Pick P hash functions hk

– Ordering the rows under hk gives a random permutation of the rows

• One-pass implementation

– For each column Ci and hash function hk, keep a slot for the min-hash value

– Initialize all slot(Ci,hk) to infinity

– Scan rows in arbitrary order looking for 1’s

• Suppose row Rj has a 1 in column Ci

• For each hk,

– if hk(j) < slot(Ci,hk), then slot(Ci,hk) ← hk(j)

Example

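| Row | C1 | C2 |
|-----|----|----|
| R1  | 1  | 0  |
| R2  | 0  | 1  |
| R3  | 1  | 1  |
| R4  | 1  | 0  |
| R5  | 0  | 1  |

h(x) = x mod 5

g(x) = (2x+1) mod 5

| Row scanned | h        | g        | C1 slots (h, g) | C2 slots (h, g) |
|-------------|----------|----------|-----------------|-----------------|
| R1          | h(1) = 1 | g(1) = 3 | 1, 3            | -, -            |
| R2          | h(2) = 2 | g(2) = 0 | 1, 3            | 2, 0            |
| R3          | h(3) = 3 | g(3) = 2 | 1, 2            | 2, 0            |
| R4          | h(4) = 4 | g(4) = 4 | 1, 2            | 2, 0            |
| R5          | h(5) = 0 | g(5) = 1 | 1, 2            | 0, 0            |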
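The one-pass procedure and this example can be sketched as below. The matrix is passed row by row, rows are numbered from 1 as in the slides, and `math.inf` plays the role of the initial "infinity" slot value. The function name `one_pass_minhash` is hypothetical.

```python
import math

def one_pass_minhash(rows, hash_funcs):
    """rows[j-1][c] is 1 if row Rj has a 1 in column c.
    Returns slots[c][k] = min of hash_funcs[k](j) over rows j with a 1 in column c."""
    n_cols = len(rows[0])
    slots = [[math.inf] * len(hash_funcs) for _ in range(n_cols)]
    for j, row in enumerate(rows, start=1):
        hashed = [h(j) for h in hash_funcs]  # hash each row number once
        for c, bit in enumerate(row):
            if bit:
                for k, value in enumerate(hashed):
                    if value < slots[c][k]:
                        slots[c][k] = value
    return slots

# Columns C1 and C2 from the example, listed row by row (R1..R5).
rows = [[1, 0], [0, 1], [1, 1], [1, 0], [0, 1]]
h = lambda x: x % 5
g = lambda x: (2 * x + 1) % 5

print(one_pass_minhash(rows, [h, g]))  # [[1, 2], [0, 0]]
```

The final slots match the last row of the trace: (1, 2) for C1 and (0, 0) for C2. No permutation of the rows is ever materialized.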