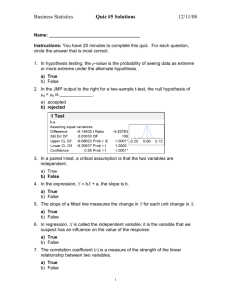

Introduction to Multiple Regression Analysis

You will recall that the general linear model used in least squares regression is:

$$Y_i = a + bX_i + e_i$$

where $b$ is the regression coefficient describing the average change in Y per one-unit increase (or decrease) in X, $a$ is the Y-intercept (the point where the line of best fit crosses the y-axis), and $e_i$ is the error term or residual (the difference between the actual value of Y for a given value of X and the value of Y predicted by the regression model for that same value of X). In other words, the regression coefficient describes the influence of X on Y.

But how can we tell if this influence is a causal influence? To answer this question, we need to satisfy the three criteria for labeling X the cause of Y: (1) that there is covariation between X and Y; (2) that X precedes Y in time; and (3) that nothing but X could be the cause of Y.

(1) We will know that X and Y covary if the regression coefficient is statistically significant. This means that $\beta$ (the "true" relationship in the universe, i.e., in general) is probably not 0.0. (Remember, b = 0.0 means that X and Y are statistically independent. Therefore, X could not be the cause of Y.)

(2) We will know that X precedes Y in time if we have chosen our variables carefully. (There is no statistical test for time order; it must be dealt with through measurement, research design, etc.)

(3) How can we tell if something other than X could be the cause of Y? As before, by introducing control variables. We saw how this was done with discrete variables used to create zero-order and first-order partial contingency tables. In the case of regression analysis, statistical control is achieved by adding control variables to our (linear) regression model. This transforms simple regression into multiple regression analysis.

The multiple regression model looks like this:

$$Y_i = a + b_1X_{1i} + b_2X_{2i} + b_3X_{3i} + e_i$$

We still have a residual and a Y-intercept.
However, by introducing two additional variables on the right-hand side of our regression model (they are sometimes called "right-side" variables), we have changed the relationship from a (sort of zero-order) bivariate X and Y association to a multi-way relationship with two control variables, $X_2$ and $X_3$. We therefore have three regression coefficients, $b_1$, $b_2$, and $b_3$.

The central point is that, when we solve for the values of these constants, the value of $b_1$ (the coefficient for our presumed cause, $X_1$) has been automatically adjusted for whatever influence the control variables, $X_2$ and $X_3$, have on Y. This adjustment occurs mathematically in the solution of simultaneous equations involving four unknowns: $a$, $b_1$, $b_2$, and $b_3$. In other words, instead of describing the gross influence of $X_1$ on Y as in the simple regression case, in the multiple regression case this coefficient describes the net influence of $X_1$ on Y, that is, net of the effects of $X_2$ and $X_3$. This is statistical control at its best and is the way we answer the question of nonspuriousness.

How do we interpret the results? There are two possibilities: (1) either the regression coefficient for our presumed causal variable is zero, i.e., $b_1 = 0.0$; or (2) the regression coefficient is not zero, i.e., $b_1 \neq 0.0$. How can we tell which result has occurred? By deciding whether or not $b_1$ is statistically significant. We perform the t-test for the significance of the regression coefficient in the usual way and decide whether or not we can reject the null hypothesis, $\beta_1 = 0.0$. If the t-value falls within the region of rejection, we reject this null hypothesis in favor of its alternate, that there is most likely an association between $X_1$ and Y even when $X_2$ and $X_3$ are held constant. If the t-value falls outside the region of rejection, we cannot reject this null hypothesis, and we conclude that one of the control variables must have "washed out" any association that might have existed between $X_1$ and Y.
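
The difference between a gross and a net coefficient can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the six data points are made up (they are not from this handout), the helper names `solve` and `ols` are hypothetical, and the toy model has one control variable, so there are three unknowns rather than four. Y is constructed to depend only on $X_2$, while $X_1$ merely rises and falls together with $X_2$.

```python
# Toy illustration with made-up numbers (not data from this handout).
# Y depends only on X2; X1 is merely correlated with X2.

def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(n):
        # Move the row with the largest pivot into place.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution.
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def ols(y, *xs):
    """Return [a, b1, b2, ...] from the normal equations (X'X) b = X'y."""
    rows = [[1.0] + [x[i] for x in xs] for i in range(len(y))]
    k = len(rows[0])
    xtx = [[sum(r[p] * r[q] for r in rows) for q in range(k)] for p in range(k)]
    xty = [sum(r[p] * yi for r, yi in zip(rows, y)) for p in range(k)]
    return solve(xtx, xty)

x1 = [2, 1, 4, 3, 6, 5]          # presumed cause (hypothetical values)
x2 = [1, 2, 3, 4, 5, 6]          # control variable (hypothetical values)
y = [2 + 3 * v for v in x2]      # Y is built from X2 alone

gross = ols(y, x1)               # simple regression of Y on X1
net = ols(y, x1, x2)             # multiple regression of Y on X1 and X2

print(f"gross b1 = {gross[1]:.4f}")   # about 2.4857
print(f"net b1   = {net[1]:.4f}")     # essentially 0, floating-point rounding aside
print(f"net b2   = {net[2]:.4f}")     # about 3
```

In this toy case the coefficient for $X_1$ looks substantial on its own but vanishes once $X_2$ enters the model, which is the pattern the text describes as a control variable "washing out" the association.
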
(We don't actually perform a simple regression of Y on $X_1$ first, then rerun the model with the control variables added; we do only the one multiple regression analysis.)

Here is an example. Let's say that Y is average annual salary, $X_1$ is number of years of formal education, and the control variables are age ($X_2$) and respondents' parents' income ($X_3$). Our causal hypothesis might be that education is the (sole) cause of salary. The criterion of nonspuriousness requires us to take on and defeat all challengers, i.e., all other competing (i.e., possibly spurious) causes. We do this by controlling for the only two other possible causes of respondents' annual salary (pretend), age and parents' income.

If the coefficient for education, $b_1$, is not statistically significant (and the coefficient for age, $b_2$, is also not statistically significant), but the coefficient for parents' income ($b_3$ in this example) IS statistically significant, then we conclude that the relationship between education, $X_1$, and salary, Y, is spurious. The reason is that now the only variable associated with Y is the prior variable, parents' income, $X_3$. This means that the real cause of salary is not education but rather parents' income, which may also "cause" the amount of formal education respondents receive.

Suppose, on the other hand, that the coefficient for education, $b_1$, and the coefficient for parents' income, $b_3$, are both not statistically significant, but the coefficient for age, $b_2$, is statistically significant. Then we would again conclude that the relationship between education, $X_1$, and salary, Y, is spurious. The reason is that the only variable associated with Y is the prior variable, age, $X_2$. This means that the real cause of salary is neither education nor parents' income. Instead, salary is solely a function of respondents' age.
Nonspuriousness would be demonstrated by a statistically significant coefficient for education and statistically nonsignificant coefficients for age and parents' income. Of course, it is easy to imagine that all three coefficients could be statistically significant. This would mean that all three variables (education, age, and parents' income) have a causal relationship with salary. Thus, multiple regression analysis easily allows us to talk about partial and multiple causation rather than the monocausal view that we have seemed to take thus far.

In the case of multiple regression, the multiple regression coefficient has a slightly, but importantly, different meaning than the simple regression coefficient. Each multiple regression coefficient represents the average change in the dependent variable for a one-unit increase or decrease in an independent variable with ALL THE OTHER INDEPENDENT VARIABLES IN THE MODEL HELD CONSTANT. This is what was meant earlier by the term net influence on Y.

The Standardized Model and Standardized Regression Coefficients

Like the simple regression coefficient, multiple regression coefficients reflect the measurement scales of the two variables involved. That is, the coefficient for education is in the metric of salary dollars per year of education, the coefficient for age is in the metric of salary dollars per year of age, and the coefficient for parents' income is in the metric of salary dollars per dollar of parents' income. For this reason, multiple regression coefficients cannot be compared directly. In other words, you cannot say that, because one multiple regression coefficient is twice the magnitude of a second, the first variable has twice as much influence on the dependent variable as the second. In order to make such comparisons, and such statements, the multiple regression coefficients must be STANDARDIZED. This means that they must be transformed into z-scores.
The algorithm for doing this is simple: multiply the multiple regression coefficient by the ratio of the standard deviation of the independent variable to the standard deviation of the dependent variable. For a three-variable model, this would be:

$$\beta_1^* = b_1\frac{s_{X_1}}{s_Y}, \qquad \beta_2^* = b_2\frac{s_{X_2}}{s_Y}, \qquad \text{and} \qquad \beta_3^* = b_3\frac{s_{X_3}}{s_Y}$$

The result is the standardized regression coefficient, $\beta_i^*$, usually called the Beta coefficient, the Beta weight, or simply Beta. Beta coefficients are PURE NUMBERS and can be compared. That is, if one Beta is twice the magnitude of a second, you CAN say that the first variable has TWICE the net influence on the dependent variable as the second variable.

The multiple regression model can be rewritten in its standardized form as:

$$\hat{Z}_Y = \beta_1^* Z_1 + \beta_2^* Z_2 + \beta_3^* Z_3$$

Notice that there is no intercept in this model because, when all the Beta coefficients are 0.0 (that is, when none of the independent variables has any influence on the dependent variable), $\hat{Z}_Y$ equals the mean, and the mean of a standardized variable is 0.0 (hence, Z = 0.0). There is also no error term (residual) since this is the model for the predicted standardized values of Y, that is, for $\hat{Z}_Y$.

The standardized coefficients are a little harder to interpret than the unstandardized multiple regression coefficients. They are the average change in the standardized value of Y for each standard deviation increase or decrease in X with all the other variables in the model held constant.

When we looked at the F-test for the simple regression model, we said that it did not provide any new information. In the case of the multiple regression model, however, this test takes on more importance. The F-test is performed in the same way as before: it is the MODEL mean square divided by the ERROR mean square.
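
The two calculations just described, the Beta weight and the F-ratio, can be sketched in Python. This is a minimal sketch, not the procedure SAS itself uses: the function name `beta_weight` is hypothetical, and the standard deviation `55.0` for per capita income is an assumed value chosen only for illustration. The sums of squares, degrees of freedom, and the INCOMEPC coefficient are taken from the first SAS listing in this handout.

```python
import math

def beta_weight(b, s_x, s_y):
    """Standardized coefficient: b times (s_X / s_Y)."""
    return b * s_x / s_y

# --- F-ratio from the Analysis of Variance table in the SAS listing ---
ss_model, df_model = 7871.03150, 3
ss_error, df_error = 13912.52405, 59

ms_model = ss_model / df_model    # MODEL mean square
ms_error = ss_error / df_error    # ERROR mean square
f_ratio = ms_model / ms_error
print(round(f_ratio, 3))          # 11.126, matching the listing's F Value

# --- Beta weights do not depend on the units of X ---
# s_Y follows from the C Total sum of squares with N - 1 = 62 degrees of freedom.
s_y = math.sqrt(21783.55556 / 62)

b_tens = 0.020073                 # INCOMEPC coefficient, income in $10s
s_x_tens = 55.0                   # ASSUMED standard deviation (illustrative only)

# Re-express income in single dollars: b shrinks by 10, s_X grows by 10.
b_dollars = b_tens / 10
s_x_dollars = s_x_tens * 10

beta_tens = beta_weight(b_tens, s_x_tens, s_y)
beta_dollars = beta_weight(b_dollars, s_x_dollars, s_y)
print(math.isclose(beta_tens, beta_dollars))   # True
```

The second half shows why Beta weights are called pure numbers: changing the measurement unit of X changes the unstandardized coefficient but leaves the standardized one untouched.
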
The F-test evaluates the null hypothesis that NONE of the multiple regression coefficients is significantly different from 0.0:

$$H_0: \beta_1 = \beta_2 = \beta_3 = 0.0$$

If this null hypothesis is rejected, it tells us that AT LEAST ONE of the multiple regression coefficients in our model is significantly different from zero. In other words, it tells us that we are headed in the right direction in constructing a causal model to explain our dependent variable.

To evaluate the explanatory power of our multiple regression model, we examine the multiple R-square (i.e., the Coefficient of Determination). With several independent variables, we should make an adjustment in its value. That is, if we were to throw one hundred independent variables into our model, we would expect, other things being equal, that the multiple R-square would be greater than if our model contained only one or two variables. Therefore, we should adjust the value of the R-square for the number of variables in the model. The adjustment is simple:

$$\bar{R}^2 = 1 - (1 - R^2)\,\frac{N - 1}{df_{Error}}$$

Here, the "bar" means adjusted rather than mean. $R^2$ is the unadjusted Coefficient of Determination, and N, as always, is sample size. This is an adjustment for degrees of freedom:

$$\bar{R}^2 = 1 - (1 - R^2)\,\frac{df_{Total}}{df_{Error}}$$

In the example, $R^2$ is 0.3613, N is 63, and error degrees of freedom are 59. Thus

$$\bar{R}^2 = 1 - (1 - 0.3613)\,\frac{63 - 1}{59} = 0.3288$$

This tells us that our model statistically explains 32.9 percent of the variance in the dependent variable, crime rate. (The SAS listing reports 0.3289 because it works from the unrounded value of $R^2$.)

Sample SAS Programs for Multiple Regression with Diagnostics

    /* Librefs for the data set and for the format catalog */
    libname old 'a:\';
    libname library 'a:\';
    options nodate nonumber ps=66;

    /* OLS regression; the STB option requests standardized estimates */
    proc reg data=old.cities;
      model crimrate = policexp incomepc stress74 / stb;
      title1 'OLS REGRESSION RESULTS';
    run;

OLS REGRESSION RESULTS

    Model: MODEL1
    Dependent Variable: CRIMRATE   NUMBER OF SERIOUS CRIMES PER 1,000

                         Analysis of Variance

                          Sum of          Mean
    Source     DF        Squares        Square    F Value    Prob>F
    Model       3     7871.03150    2623.67717     11.126    0.0001
    Error      59    13912.52405     235.80549
    C Total    62    21783.55556

        Root MSE    15.35596    R-square    0.3613
        Dep Mean    44.44444    Adj R-sq    0.3289
        C.V.        34.55091

                         Parameter Estimates

                    Parameter       Standard     T for H0:
    Variable  DF     Estimate          Error   Parameter=0   Prob > |T|
    INTERCEP   1    14.482581    12.95814942         1.118       0.2683
    POLICEXP   1     0.772946     0.15818555         4.886       0.0001
    INCOMEPC   1     0.020073     0.03573539         0.562       0.5764
    STRESS74   1     0.005875     0.00800288         0.734       0.4658

                  Standardized
    Variable  DF      Estimate   Variable Label
    INTERCEP   1    0.00000000   Intercept
    POLICEXP   1    0.55792749   POLICE EXPENDITURES PER CAPITA
    INCOMEPC   1    0.05911456   INCOME PER CAPITA, IN $10S
    STRESS74   1    0.08431770   LONGTERM DEBT PER CAPITA, 1974

Multiple Regression Analysis

Attached is output from a SAS program performing multiple regression analysis. The model estimated has as its dependent (Y) variable the number of serious crimes per 1,000 population (CRIMRATE). Independent variables ($X_i$) are size of city (POPULAT) and per capita income (INCOMEPC). Data are from a random sample of 63 cities. Please answer the following questions. Assume that $\alpha = 0.05$.

1. What is the value of the standardized multiple regression coefficient ($\beta_1^*$) for city size (POPULAT)? ________
2. What is the value of the t-ratio for the unstandardized multiple regression coefficient for this variable? ________
3. Is this coefficient statistically significant? ________
4. What is the value of the standardized multiple regression coefficient ($\beta_2^*$) for per capita income (INCOMEPC)? ________
5. What is the value of the t-ratio for the unstandardized multiple regression coefficient for this variable? ________
6. Is this coefficient statistically significant? ________
7. What is the value of the F-ratio? ________
8. Is the model statistically significant? ________
9. What is the value of the Coefficient of Determination ($R^2$) for this model? ________
10. What is the value of the adjusted $R^2$?
________

PPD 404 Multiple Regression Example

    Model: MODEL1
    Dependent Variable: CRIMRATE   NUMBER OF SERIOUS CRIMES PER 1,000

                         Analysis of Variance

                          Sum of          Mean
    Source     DF        Squares        Square    F Value    Prob>F
    Model       2     1517.63121     758.81560      2.247    0.1146
    Error      60    20265.92435     337.76541
    C Total    62    21783.55556

        Root MSE    18.37840    R-square    0.0697
        Dep Mean    44.44444    Adj R-sq    0.0387
        C.V.        41.35139

                         Parameter Estimates

                    Parameter       Standard     T for H0:
    Variable  DF     Estimate          Error   Parameter=0   Prob > |T|
    INTERCEP   1    34.590340    14.48439959         2.388       0.0201
    POPULAT    1     0.004270     0.00209535         2.038       0.0460
    INCOMEPC   1     0.021752     0.04230548         0.514       0.6090

                  Standardized
    Variable  DF      Estimate   Variable Label
    INTERCEP   1    0.00000000   Intercept
    POPULAT    1    0.25391144   NUMBER OF RESIDENTS, IN 1,000S
    INCOMEPC   1    0.06406161   INCOME PER CAPITA, IN $10S

Multiple Regression Analysis Answers

Attached is output from a SAS program performing multiple regression analysis. The model estimated has as its dependent (Y) variable the number of serious crimes per 1,000 population (CRIMRATE). Independent variables ($X_i$) are size of city (POPULAT) and per capita income (INCOMEPC). Data are from a random sample of 63 cities. Please answer the following questions. Assume that $\alpha = 0.05$.

1. What is the value of the standardized multiple regression coefficient ($\beta_1^*$) for city size (POPULAT)? 0.25
2. What is the value of the t-ratio for the unstandardized multiple regression coefficient for this variable? 2.038
3. Is this coefficient statistically significant? Yes
4. What is the value of the standardized multiple regression coefficient ($\beta_2^*$) for per capita income (INCOMEPC)? 0.06
5. What is the value of the t-ratio for the unstandardized multiple regression coefficient for this variable? 0.51
6. Is this coefficient statistically significant? No
7. What is the value of the F-ratio? 2.25
8. Is the model statistically significant? No
9. What is the value of the Coefficient of Determination ($R^2$) for this model? 0.07
10. What is the value of the adjusted $R^2$? 0.04
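
The yes/no answers and the adjusted R-square in the answer key can be checked with a short Python sketch. It assumes only the p-values, R-square values, sample size, and error degrees of freedom printed in the two SAS listings; the function name `adjusted_r2` and the dictionary layout are hypothetical conveniences, not part of the SAS output.

```python
ALPHA = 0.05  # significance level stated in the exercise

# Prob > |T| and Prob > F values copied from the second SAS listing.
p_values = {
    "POPULAT": 0.0460,
    "INCOMEPC": 0.6090,
    "MODEL (F-test)": 0.1146,
}

# A result is statistically significant when its p-value is below alpha.
decisions = {name: p < ALPHA for name, p in p_values.items()}
for name, significant in decisions.items():
    print(name, "Yes" if significant else "No")

def adjusted_r2(r2, n, df_error):
    """Adjusted R-square: 1 - (1 - R^2) * (N - 1) / df_Error."""
    return 1 - (1 - r2) * (n - 1) / df_error

# Exercise model: two independent variables, 60 error degrees of freedom.
print(round(adjusted_r2(0.0697, 63, 60), 4))   # 0.0387
# Earlier three-variable model: 59 error degrees of freedom.
print(round(adjusted_r2(0.3613, 63, 59), 4))   # 0.3288
```

Note that the coefficient for POPULAT is significant at the 0.05 level even though the model as a whole is not, which is why questions 3 and 8 have different answers.
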