Real-time Concepts for Embedded Systems

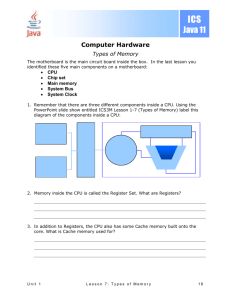

2. Hardware for real-time systems

Outline
• Basic processor architecture
• Memory technologies
• Architectural advancements
• Peripheral interfacing
• Microprocessor vs. microcontroller
• Distributed real-time architectures

BASIC PROCESSOR ARCHITECTURE

Von Neumann Architecture
[Figure: CPU and memory connected by the system bus. Von Neumann computer architecture without explicit I/O.]
• The system bus is a set of three buses:
  – Address: unidirectional and controlled by the CPU
  – Data: bidirectional, transfers data and instructions
  – Control: a heterogeneous collection of control, status, clock, and power lines

Central Processing Unit
[Figure: CPU block diagram. Control unit (PCR, IR) and datapath (ALU, registers); instruction access and data access over the address bus and data bus.]

Instruction processing
[Figure: sequential instruction cycle with five phases (fetch, decode, load, execute, store) laid out over clock cycles.]

Instruction set
• An instruction set describes a processor's functionality and its architecture.
• Most instructions make reference to either memory locations, pointers to a memory location, or a register.
• Traditionally, the distinction between computer organization and computer architecture is that the latter involves only those hardware details that are visible to the programmer, while the former involves implementation details.

Instruction Forms
• 0-address form
  – Stack machine
  – RPN calculators
  – E.g., NOP
• 1-address form
  – Uses an implicit register (accumulator)
  – E.g., INC R1
• 2-address form
  – Has the form: op-code operand1, operand2
  – E.g., ADD R1, R2
• 3-address form
  – Has the form: op-code operand1, operand2, resultant
  – E.g., SUB R1, R2, R3

Core Instructions
• There are generally six kinds of instructions:
  – horizontal-bit operation
    • e.g., AND, IOR, XOR, NOT
  – vertical-bit operation
    • e.g., rotate left, rotate right, shift right, and shift left
  – control
    • e.g., TRAP, CLI, EPI, DPI, HALT
  – data movement
    • e.g., LOAD, STORE, MOVE
  – mathematical/special processing
  – other (processor specific)
    • e.g., LOCK, ILLEGAL

Addressing Modes
• Three basic modes
  – immediate data
  – direct memory location
  – indirect memory location: INC &memory
• Others are combinations of these
  – register indirect
    • One of the working registers stores a pointer to a data structure located in memory.
  – double indirect

Two principal techniques for implementing the control unit
• Microprogramming
  – Defines each instruction by a microprogram: a sequence of primitive hardware commands that activate the appropriate datapath functions.
• Hard-wired logic
  – Consists of combinational and sequential circuits.
  – Offers noticeably faster execution, but takes more space and makes instructions difficult to create or modify.

I/O and interrupt considerations
[Figure: CPU, memory, and input/output connected by an enhanced system bus.]
• Enhanced von Neumann architecture with separate memory and I/O elements
• I/O-specific registers form memory segments for the status, mode, and data registers of configurable I/O ports.

Memory-Mapped I/O
• Provides a convenient data transfer mechanism.
• Does not require the use of special CPU I/O instructions.
• Certain designated locations of memory appear as virtual I/O ports.
  – LOAD R1, &speed ; motor speed into register R1
  – STORE R1, &motoraddr ; store to address of motor control
  where speed is a bit-mapped variable and motoraddr is a memory-mapped location.
• Input from an appropriate memory-mapped location involves executing a LOAD instruction on a pseudomemory location connected to an input device. Output uses a STORE instruction.

Programmed I/O
• Offers a separate address space for I/O registers.
  – An additional control signal, "memory/IO", distinguishes between memory and I/O accesses.
  – It saves the limited memory address space.
• Special data movement instructions IN and OUT are used to transfer data to and from the CPU.
  – IN R1, &port ; read the content of port and store it to R1
  – OUT &port, R1 ; write the content of R1 to port
  – Both require the efforts of the CPU and cost time that could impact real-time performance.

Hardware interrupts
• Maskable and nonmaskable interrupts
  – Maskable: used for events that occur during regular operating conditions
  – Nonmaskable: reserved for extremely critical events
  – They are hardware mechanisms for providing prompt service to external events.
  – They reduce real-time punctuality and make response times nondeterministic.
• Processors provide two atomic instructions
  – EPI: enable priority interrupt
  – DPI: disable priority interrupt

MEMORY TECHNOLOGIES

Different classes of memory
• Volatile RAM and nonvolatile ROM are two distinct groups of memory
  – RAM: random-access memory
  – ROM: read-only memory
• Today, the borderline between the two groups is no longer clear (EEPROM and flash).
[Figure: generic memory with address bus (A0–Am), data bus (D0–Dn), and Read, Write, and Chip Select inputs; capacity is 2^(m+1) × (n+1) bits.]

Writable ROM: EEPROM and flash
• Common features
  – Can be rewritten in a similar way as RAM, but much more slowly (writing is up to 100 times slower than reading)
  – Wears out; can typically be rewritten up to 1,000,000 times
• Common practices in RTS
  – EEPROM can be written sparsely and is normally used as nonvolatile program and parameter storage.
  – Flash can only be erased in large blocks and is used for storing both application programs and large data records.
  – Use flash as low-cost mass memory, and load application programs from flash to RAM for execution.
  – Run programs from faster RAM instead of ROM.
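
Because writable ROM wears out and writes are slow, a common way to "write sparsely" is to skip any write that would not change the stored value. The following C fragment is only a minimal sketch of that idea: the device is simulated by a RAM array, and the names `EEPROM_SIZE`, `eeprom_raw_write`, `eeprom_update`, and `eeprom_writes` are hypothetical, not taken from the slides or from any particular vendor's driver.

```c
#include <assert.h>
#include <stdint.h>

#define EEPROM_SIZE 16u   /* hypothetical device size in bytes */

/* Simulated device: a RAM array stands in for the real EEPROM cells. */
static uint8_t  eeprom_cells[EEPROM_SIZE];
static uint32_t eeprom_write_count;   /* number of physical cell writes */

/* Hypothetical low-level write: on real hardware this is the slow,
 * wear-inducing operation. */
static void eeprom_raw_write(uint32_t addr, uint8_t value)
{
    assert(addr < EEPROM_SIZE);
    eeprom_cells[addr] = value;
    eeprom_write_count++;
}

/* Write a byte only if it differs from what is already stored.
 * Returns 1 if a physical write happened, 0 if it was skipped,
 * and -1 if the address is out of range. */
int eeprom_update(uint32_t addr, uint8_t value)
{
    if (addr >= EEPROM_SIZE) {
        return -1;
    }
    if (eeprom_cells[addr] == value) {
        return 0;   /* value unchanged: no wear, no write latency */
    }
    eeprom_raw_write(addr, value);
    return 1;
}

/* Number of physical writes performed so far. */
uint32_t eeprom_writes(void)
{
    return eeprom_write_count;
}
```

In this sketch, storing the same parameter value twice in a row costs only one physical write, which saves both endurance and the long write time. The extra read before each write is cheap by comparison, since reads are much faster than writes on these devices.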
Static RAM (SRAM) and dynamic RAM (DRAM)
• Comparison of SRAM and DRAM
  – SRAM: more space intensive and more expensive, but faster to access
  – DRAM: compact and cheaper, but slower to access; needs refresh circuitry to avoid loss of data
• Common practices
  – Use DRAM when a large memory is needed
  – Use SRAM when the memory need is no more than moderate

Summary of memory types and usages

| Memory type | Typical access time | Density | Typical applications |
|---|---|---|---|
| DRAM | 50-100 ns | 64 Mb | Main memory |
| SRAM | 10 ns | 1 Mb | μmemory, cache, fast RAM |
| UVROM | 50 ns | 32 Mb | Code and data storage |
| Fusible link PROM | 50 ns | 32 Mb | Code and data storage |
| EEPROM | 50-200 ns | 1 Mb | Persistent storage of variable data |
| Flash | 20-30 ns (read), 1 μs (write) | 64 Mb | Code and data storage |
| Ferroelectric RAM | 40 ns | 64 Mb | Various |
| Ferrite core | 10 ms | 2 Kb or less | None, possibly ultra-hardened nonvolatile memory |

Selection of the appropriate technology is a systems design issue.

Memory Access
Why is this important?
[Figure: timing diagram of a memory-read bus cycle, showing the address bus (A0–Am), data bus (D0–Dn), Chip Select, Read, and Write signals over clock cycles, with the memory-read access time marked.]

How to determine bus cycle length
• Worst-case access times of memory and I/O ports
• Latencies of address decoding circuitry and possible buffers on the system bus
  – Synchronous bus protocol: wait states can be added to the default bus cycle to adapt it dynamically to different access times
  – Asynchronous bus protocol: no wait states; data transfer is based on a handshaking-type protocol (CPU, system bus, memory devices)
• Overall power consumption
  – Grows with increasing CPU clock rate

Memory layout issues
[Figure: example memory map.]
• FFFFF000–FFFFF7FF: I/O registers (memory-mapped I/O)
• 00080000–0008FFFF: Data (SRAM)
• 00040000–00047FFF: Configuration (EEPROM)
• 00000000–0001FFFF: Program (ROM)

Hierarchical memory organization
• Primary and secondary memory storage form a hierarchy involving access time, storage density, cost, and other factors.
• The fastest possible memory is desired in real-time systems, but economics dictates that the fastest affordable technology is used as required.
• In order from fastest to slowest, and taking cost into account, memory should be assigned as follows:
  – internal CPU memory
  – registers
  – cache
  – main memory
  – memory on board external devices

Locality of Reference
• Refers to the relative "distance" in memory between consecutive code or data accesses.
• If the data or code fetched tends to reside relatively close together in memory, the locality of reference is high.
• When programs execute instructions that are relatively widely scattered, the locality of reference is low.
• Well-written programs in procedural languages tend to execute sequentially within code modules and within the body of loops, and hence have a high locality of reference.
• Object-oriented code tends to execute in a much more nonlinear fashion, but portions of such code can be linearized (e.g., array access).

Cache
• A small block of fast memory where frequently used instructions and data are kept. The cache is much smaller than the main memory.
[Figure: CPU, L1 cache, L2 cache, and main memory.]
• Usage:
  – Upon memory access, check the address tags to see if the data is in the cache.
  – If present, retrieve the data from the cache.
  – If the data or instruction is not already in the cache, cache contents are written back and a new block is read from main memory into the cache.
  – The needed information is then delivered from the cache to the CPU and the address tags are adjusted.

Cache (continued)
• Performance benefits are a function of the cache hit ratio.
• If needed data or instructions are not found in the cache, the cache contents must be written back (if any were altered) and overwritten by a memory block containing the needed information.
• This overhead can become significant when the hit ratio is low.
  – Therefore a low hit ratio can degrade performance.
• If the locality of reference is low, a low number of cache hits would be expected, degrading performance.
• Using a cache is also nondeterministic: it is impossible to know a priori what the cache contents, and hence the overall access time, will be.

Wait States
• When a microprocessor must interface with a slower peripheral or memory device, wait states may need to be added to the bus cycles.
• Wait states extend the microprocessor read or write cycle by a certain number of processor clock cycles to allow the device or memory to "catch up."
• For example, EEPROM, RAM, and ROM may have different memory access times. Since RAM is typically faster than ROM, wait states would need to be inserted when accessing RAM if the timing is to be made uniform across all regions of memory.
• Wait states degrade overall system performance, but preserve determinism.

ARCHITECTURAL ADVANCEMENTS

Pipelining is a form of speculative execution
• Pipelining imparts an implicit execution parallelism to the different stages of processing an instruction.
  – It increases the instruction throughput.
  – With pipelining, several instructions can be processed in different phases simultaneously.
• Suppose a 4-stage pipeline:
  – fetch: get the instruction from memory
  – decode: determine what the instruction is
  – execute: perform the decoded instruction
  – store: store the results to memory

Pipelining
[Figure: sequential instruction execution versus pipelined instruction execution. Sequential execution of the 4 stages of 3 instructions takes 12 clock cycles; with pipelining, 3 instructions finish in 6 clock cycles.]
Nine complete instructions can be completed in the pipelined approach in the same time it takes to complete three instructions in the sequential (scalar) approach.

Pros of pipelining
• Under ideal conditions
  – Instruction phases are all of equal length
  – Every instruction needs the same amount of time
  – The pipeline is continuously full
• In general, the best possible instruction completion time of an N-stage pipeline is 1/N of the completion time of the nonpipelined case.
  – Utilizes ALU and CPU resources more effectively

Cons of pipelining
• Requires buffer registers between stages
  – Additional delay compared with the nonpipelined case
• Degrades performance in certain situations
  – For branch instructions in the pipeline, the prefetched instructions further along the pipeline may no longer be valid and must be flushed.
  – External interrupts (unpredictable situations)
  – Data dependencies between consecutive instructions

Superpipelines
• Superpipelined architectures are achieved by decomposing the instruction cycle further.
  – 6-stage pipeline: fetch, two decodes (indirect addressing modes), execute, write-back, commit.
  – In practice, more than 10 stages in CPUs with GHz-level clock rates
• Cons
  – Performance degrades with cache misses and pipeline flushing/refilling (when locality of instruction reference is violated).
  – An extensive pipeline is a source of significant nondeterminism in RTS.

ASICs (Application-Specific Integrated Circuits)
• A special-purpose IC designed for one application only. In essence, ASICs are systems-on-chip including
  – a microprocessor, memory, I/O devices, and other specialized circuitry.
• ASICs are used in many embedded applications
  – Image processing, avionics systems, medical systems.
• Real-time design issues are the same for them as they are for most other systems.

PAL (Programmable array logic) / PLA (Programmable logic array)
• One-time programmable logic devices for special-purpose functionality in embedded systems.
  – PAL is a programmable AND array followed by a fixed-number-input OR element. Each OR element has a certain number of dedicated product terms.
  – PLA is the same as PAL, but the AND array is followed by a programmable-width OR array.
• Comparison
  – PLA is much more flexible and yields more efficient logic, but is more expensive.
  – PAL is faster (uses fewer fuses) and is less expensive.

FPGAs (Field-programmable gate arrays)
• An FPGA allows construction of a system-on-a-chip with an integrated processor, memory, and I/O.
  – Differs from the ASIC in that it is reprogrammable, even while embedded in the system.
• A reconfigurable architecture allows for the programmed interconnection and functionality of a conventional processor.
  – Algorithms and functionality can be moved from the software side into the hardware side.
• Widely used in embedded, mission-critical systems where fault tolerance and adaptive functionality are essential.

Multi-core processors
[Figure: quad-core processor architecture with individual on-chip caches and a common on-chip cache. Labels: Cores 1 to 4, each with I&D caches; internal cache bus; common cache; system bus; I/O.]

Pros and cons
• Parallel processing
  – True task concurrency for multitasking real-time applications
• Requires a complete collection of software tools to support the parallel development process.
  – Developers must learn to design algorithms for parallelism; otherwise, the potential of the multi-core architecture remains largely unused.
  – Load balancing between cores is hard and needs expertise and the right tools for performance analysis.
• It is time consuming to port existing single-CPU software efficiently to a multi-core environment.
  – This reduces the interest of companies in switching to multi-core in mature real-time applications.
• Instruction processing is nondeterministic in a multi-core architecture.
• Fast and punctual inter-core communication is a key issue when developing high-performance systems.
  – The communication channel is a well-known bottleneck.
• A theoretical foundation for estimating the speedup as the number of parallel cores increases is given in Chapter 7.
  – The limit of parallelism in terms of speedup appears to be a software property, not a hardware one.
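
The claim that the speedup limit is a software property can be illustrated with Amdahl's law, one widely used speedup estimate. Choosing it here is an assumption: the slides only point to Chapter 7 and do not name a formula. If a fraction s of a program is strictly serial, the speedup on n cores is 1 / (s + (1 − s)/n), which can never exceed 1/s however many cores are added. The function name `amdahl_speedup` below is hypothetical, and the code is a sketch rather than a performance model of any real multi-core system.

```c
#include <assert.h>

/* Amdahl's law (assumed model, not named in the slides):
 * speedup on `cores` cores when `serial_fraction` of the work
 * (between 0.0 and 1.0) cannot be parallelized.
 * Returns 0.0 for the degenerate case of zero cores. */
double amdahl_speedup(double serial_fraction, unsigned cores)
{
    assert(serial_fraction >= 0.0 && serial_fraction <= 1.0);
    if (cores == 0u) {
        return 0.0;
    }
    return 1.0 / (serial_fraction
                  + (1.0 - serial_fraction) / (double)cores);
}
```

With a serial fraction of 0.1, for example, four cores give a speedup of about 3.08, and no number of cores can push the speedup past 10. The bound depends only on how much of the software is serial, not on the hardware.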