Evolution of Reinforcement Learning in Unpredictable Environments

advertisement

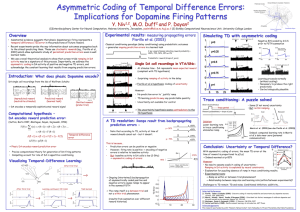

Dopamine, Uncertainty and TD Learning Yael Niv Michael Duff Peter Dayan Gatsby Computational Neuroscience Unit, UCL CNS 2004 What is the function of Dopamine? Prefrontal Cortex Dorsal Striatum (Caudate, Putamen) Parkinson’s Disease -> Movement control? Intracranial selfstimulation; Drug addiction -> Reward pathway? -> Learning? Nucleus Accumbens (Ventral Striatum) Amygdala Ventral Tegmental Area Substantia Nigra Also involved in: - Working memory - Novel situations - ADHD - Schizophrenia … What does phasic Dopamine encode? Unpredicted reward (neutral/no stimulus) Predicted reward (learned task) Omitted reward (probe trial) (Schultz et al.) The TD Hypothesis of Dopamine V (t ) r ( ) r (t 1) r (t 2) r (t 3) ... t r (t 1) V (t 1) (t 1) r (t 1) V (t 1) V (t ) Temporal V (t ) (t 1) Phasic DA encodes a reward prediction error • Precise theory for generation of DA firing patterns • Compelling account for the role of DA in classical conditioning (Sutton+Barto 1987, Schultz,Dayan,Montague 1997) V value r reward difference error But: Fiorillo, Tobler & Schultz 2003 • Introduce inherent uncertainty into the classical conditioning paradigm Stimulus = 2 sec visual stimulus Reward (probabilistic) = drops of juice • Five visual stimuli indicating different reward probabilities: P= 100%, 75%, 50%, 25%, 0% Fiorillo, Tobler & Schultz 2003 At stimulus time - DA represents mean expected reward Delay activity - A ramp in activity up to reward Hypothesis: DA ramp encodes uncertainty in reward “Uncertainty Ramping” and TD error? • The uncertainty is predictable from the stimulus • TD predicts away predictable quantities If it represents uncertainty, the ramping activity should disappear with learning according to TD. Uncertainty ramping is not easily compatible with the TD hypothesis Are the ramps really coding uncertainty? A closer look at FTS’s results At time of reward: • Prediction errors result from probabilistic reward delivery p = 50% p = 75% • Crucially: Positive and negative errors cancel out A TD Resolution: • TD prediction error δ(t) can be positive or negative • Neuronal firing rate is only positive (negative values can be encoded relative to base firing rate) But: DA base firing rate is low -> asymmetric encoding of δ(t) DA 55% 270% δ(t) Simulating TD with asymmetric errors Negative δ(t) scaled by d=1/6 prior to PSTH summation Learning proceeds normally (without scaling) − Necessary to produce the right predictions − Can be biologically plausible DA - Uncertainty or Temporal Difference? With asymmetric coding of errors, the mean TD error at the time of reward p(1-p) => Maximal at p=50% Experiment Model However: • No need to assume explicit coding of uncertainty Ramping is explained by neural constraints. • Explanation for puzzling absence of ramp in trace conditioning results. • Experimental test: Ramp as within or between trial phenomenon? Challenges: TD and noise; Conditioned inhibition, additivity Trace conditioning: A puzzle and its resolution CS = short visual stimulus Trace period US (probabilistic) = drops of juice • Same (if not more) uncertainty, but no DA ramping (Fiorillo et al.; Morris, Arkadir, Nevet, Vaadia & Bergman) • Resolution: lower learning rate in trace conditioning eliminates ramp Other sources of uncertainty: Representational Noise (1) • Rate coding is inherently stochastic • Add noise to tapped delay line representation σ = 0.0577 σ = 0.0866 Mirenowicz and Schultz (1996) σ = 0.1155 prediction error weights => TD learning is robust to this type of noise Other sources of uncertainty: Representational Noise (2) • Neural timing of events is necessarily inaccurate • Add temporal noise to tapped delay line representation ε = 0.05 ε = 0.10 => Devastating effects of even small amounts of temporal noise on TD predictions