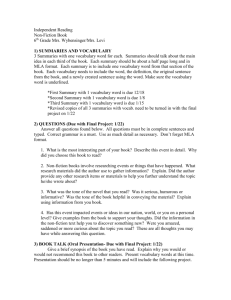

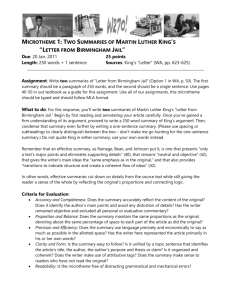

Do Summaries Help? A Task-Based Evaluation of Multi-Document Summarization
Kathleen McKeown, Rebecca J. Passonneau, David K. Elson, Ani Nenkova, Julia Hirschberg
Columbia University
SIGIR 2005

INTRODUCTION
Newsblaster is a system that provides an interface for browsing the news, featuring multi-document summaries of clusters of articles on the same event.
Key components of Newsblaster:
• Article clustering
• Event cluster summarization
• User interface
http://newsblaster.cs.columbia.edu/

METHODS
In the experiment, subjects were asked to write a report using a news aggregator as a tool.
Each subject was asked to perform four 30-minute fact-gathering scenarios using a Web interface.
Each scenario involved answering three related questions about an issue in the news.

The four tasks were:
• The Geneva Accord in the Middle East
• Hurricane Ivan’s effects
• Conflict in the Iraqi city of Najaf
• Attacks by Chechen separatists in Russia

Subjects were given a Web page that we constructed as their sole resource.
The page contained four document clusters, two of which were centrally related to the topic at hand and two of which were peripherally related.
Each cluster contained, on average, ten articles.

Four summary condition levels:
• Level 1: No summaries
• Level 2: One-sentence summary for each article, one-sentence summary for each entire cluster
• Level 3: Newsblaster multi-document summary for each cluster
• Level 4: Human multi-document summary for each cluster

Experiment
45 subjects for three studies:
• Study A: 21 subjects wrote reports for two scenarios each in two summary conditions: Level 3 and Level 4.
• Study B: 11 subjects wrote reports for all four scenarios, using Summary Level 2.
• Study C: 13 subjects wrote reports for all four scenarios, using Summary Level 1.

Scoring
Use the Pyramid method for evaluation.
For example, to score a report written with the human summary, we constructed the pyramid from the reports created under all other conditions, plus the reports written by other people with human summaries.
If there are n reports, then there will be n levels in the pyramid. The top level will contain those facts that appear in all n reports.

Let Tj refer to the jth tier in a pyramid of facts. If the pyramid has n tiers, then Tn is the topmost tier and T1 the bottommost.
The score for a report with X facts is:
[equation image]
where j is equal to the index of the lowest tier an optimally informative report will draw from.

It has been observed that report length has a significant effect on evaluation results.
We restricted the length of reports to be no longer than one standard deviation above the mean, and we truncated all question answers to a length of eight content units, which was the third quartile of the lengths of all answers.

RESULTS
We measured results in three ways:
1. By scoring the reports and comparing scores across summary conditions
2. By comparing user satisfaction per summary condition
3. By comparing where subjects preferred to draw report content from, measured by counting the citations they inserted following each extracted fact

Content Score Comparison
The differences between the scores in Table 1 are not significant (p = 0.3760, ANOVA).
This may be because the event clusters for Geneva contained more editorials with less “hard” news, while the clusters for Hurricane Ivan contained more breaking news reports.
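The Pyramid scoring described in the Scoring slides can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: facts are represented as plain strings, the function names `build_pyramid`, `max_score`, and `pyramid_score` are hypothetical, and the final score is assumed to be the ratio of the weight a report actually collects to the best weight attainable with the same number of facts, as in the published Pyramid method. That maximum is the sum of the X largest fact weights, which is what drawing from the top tiers down to tier j amounts to. The eight-unit cap mirrors the truncation of answers described above.

```python
from collections import Counter


def build_pyramid(other_reports):
    """Weight each fact by the number of reports that contain it.

    A fact with weight k sits in tier Tk; the report being scored
    is assumed to be excluded from other_reports.
    """
    weights = Counter()
    for facts in other_reports:
        weights.update(set(facts))  # count each fact once per report
    return dict(weights)


def max_score(weights, x):
    """Best total weight attainable with x facts: take the heaviest first."""
    return sum(sorted(weights.values(), reverse=True)[:x])


def pyramid_score(answer_facts, weights, max_units=8):
    """Ratio of observed weight to the optimum for the same number of facts."""
    # Drop duplicate facts (keeping order), then truncate to max_units.
    facts = list(dict.fromkeys(answer_facts))[:max_units]
    observed = sum(weights.get(fact, 0) for fact in facts)
    best = max_score(weights, len(facts))
    return observed / best if best else 0.0


# Made-up data: three other reports, facts labelled "a" to "d".
others = [["a", "b", "c"], ["a", "b"], ["a", "d"]]
weights = build_pyramid(others)  # a: 3, b: 2, c: 1, d: 1
print(pyramid_score(["a", "c"], weights))  # observed 3 + 1, optimum 3 + 2
```

A fact that no other report mentions gets weight 0 here, so it still counts toward the report's length but adds nothing to its score, and repeating a fact earns no extra credit.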

Content Score Comparison
After removing the Geneva Accord scenario scores, Newsblaster summaries are significantly better than documents only.
The differences between Newsblaster and minimal or human summaries are not significant.

User Satisfaction

Citation patterns

DISCUSSION
When we developed Newsblaster, we speculated that summaries could help users find necessary information in the following ways:
1. They may find the information they need in the summaries themselves, thus requiring them to read less of the full articles.
2. The summaries may link them directly to the relevant articles and to the positions within the articles where the relevant information occurs.

There were two problems:
• First, the interface for Summary Level 2 identified individual articles with a title and a one-sentence summary, but Level 3 only had titles for each article.
• Second, the interface for Summary Level 2 shows the list of individual articles on the same Web page as the cluster, but Level 3 shows the summary and cluster title on the same page and requires the subject to click on the cluster title to see the list of individual articles.

Another problem that we noted was that reports written by subjects were of widely varying length.
Reports varied from 102 words to 1525 words.
We adjusted for this by truncating reports.
Lengthy reports tended to have more duplication of facts, which clearly makes for less effective reports.
The impact of truncating reports requires follow-up study.

CONCLUSIONS
Our answer to the question, Do Summaries Help?, is clearly yes.
Our results show that subjects produce better quality reports using a news interface with Newsblaster summaries than with no summaries.
Users are also more satisfied with multi-document summaries than with minimal one-sentence summaries such as those used by commercial online news systems.
Interface design, report length, and scenario design also have an effect on task completion.