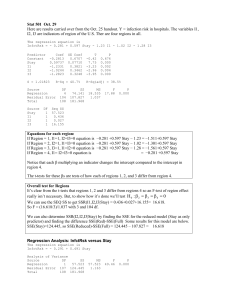

LIR 832 - Lecture 5 - Michigan State University

Regression: An Introduction
LIR 832

Regression Introduced

Topics of the day:
A. What does OLS do? Why use OLS? How does it work?
B. Residuals: What we don’t know.
C. Moving to the Multivariate Model
D. Quality of Regression Equations: R²

Regression Example #1

Just what is regression and what can it do? To address this, consider the study of truck driver turnover in the first lecture…

Regression Example #2

Suppose that we are interested in understanding the determinants of teacher pay. What we have is a data set on average per-pupil expenditures and average teacher pay by state…

Descriptive Statistics: pay, expenditures

    Variable    N   Mean  Median  TrMean  StDev  SE Mean
    pay        51  24356   23382   23999   4179      585
    expendit   51   3697    3554    3596   1055      148

    Variable  Minimum  Maximum     Q1     Q3
    pay         18095    41480  21419  26610
    expendit     2297     8349   2967   4123

Covariances: pay, expenditures

                    pay  expendit
    pay        17467605
    expendit    3679754   1112520

Correlations: pay, expenditures

    Pearson correlation of pay and expenditures = 0.835
    P-Value = 0.000

[Figure: scatter plot of Avg. Pay ($0 to $45,000) against Expenditures ($0 to $9,000).]

    The regression equation is
    pay = 12129 + 3.31 expenditures

    Predictor    Coef  SE Coef      T      P
    Constant    12129     1197  10.13  0.000
    expendit   3.3076   0.3117  10.61  0.000

    S = 2325   R-Sq = 69.7%   R-Sq(adj) = 69.1%

pay = 12129 + 3.31 expenditures is the equation of a line and we can add it to our plot of the data.

[Figure: the same scatter plot with the fitted line Pay = 12129 + 3.31*Expenditures.]

Regression: What Can We Learn?

What can we learn from the regression?

Q1: What is the relationship between per pupil expenditures and teacher pay?
A: For every additional dollar of expenditure, pay increases by $3.31.
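As a rough check on the output above, the slope and intercept can be recovered from the summary statistics alone. The sketch below assumes the standard least-squares identities b1 = cov(x, y)/var(x) and b0 = ybar - b1*xbar, and uses only the rounded figures printed in the tables; the variable names are illustrative, not part of the original slides.

```python
import math

# Rounded figures copied from the Minitab tables above.
mean_pay, mean_exp = 24356.0, 3697.0
var_pay, var_exp = 17467605.0, 1112520.0
cov_pay_exp = 3679754.0

# Assumed identities: slope = cov(x, y) / var(x); the fitted line
# passes through the point of means, which pins down the intercept.
b1 = cov_pay_exp / var_exp
b0 = mean_pay - b1 * mean_exp

# Pearson correlation from the same covariance table.
r = cov_pay_exp / math.sqrt(var_pay * var_exp)

print(f"slope b1     = {b1:.4f}")
print(f"intercept b0 = {b0:.0f}")
print(f"correlation  = {r:.3f}")
```

The results should land close to the reported 3.3076, 12129 and 0.835; any small gap in the intercept comes from using rounded means.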
Q2: Given our sample, is it reasonable to suppose that increased per-pupil expenditures are associated with higher pay?

    H0: expenditures make no difference: β1 ≤ 0
    HA: expenditures increase pay: β1 > 0

\[ P\left( \frac{b_1 - \beta_1}{SE(b_1)} > \frac{3.3076 - 0}{0.3117} \right) = P(z > 10.61) \]

A: Reject our null; it is reasonable to believe there is a positive relationship.

Q3: What proportion of the variance in teacher pay can we explain with our regression line?
A: R-Sq = 69.7%

Q4: We can also make predictions from the regression model. What would teacher pay be if we spent $4,000 per pupil?
A: pay = 12129 + 3.31 expenditures
   pay = 12129 + 3.31*4000 = $25,369
What if we had per pupil expenditures of $6,400 (Michigan’s amount)?
   pay = 12129 + 3.31*6400 = $33,313

Q5: For the states where we have data, we can also observe the difference between our prediction and the actual amount.
A: Take the case of Alaska:
   expenditures: $8,349
   actual pay: $41,480
   predicted pay = 12129 + 3.3076*8,349 = $39,744
   difference between actual and predicted pay: 41,480 - 39,744 = $1,736

Note that we have under-predicted actual pay. Why might this occur? The difference is called the residual; it is a measure of the imperfection of our model.

What is the residual for the state of Maine? Per pupil expenditure is $3,346; actual teacher pay is $19,583.

[Figure: scatter plot of Avg. Pay against Expenditures with the fitted line; Residual (e) = Actual - Predicted.]

Regression Nomenclature

\[ Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i \]

    Y_i: dependent variable
    X_i: explanatory variable
    β0: intercept
    β1: slope coefficient
    ε_i: residual or error
    i indexes the observation

For the teacher pay example: Pay_i = 12,129 + 3.31*Expenditure_i + e_i, where 12,129 is the intercept and 3.31 is the slope coefficient.

Components of a Regression Model

Dependent variable: we are trying to explain the movement of the dependent variable around its mean.
Explanatory variable(s): we use these variables to explain the movement of the dependent variable.
Error term: the difference between what we can account for with our explanatory variables and the actual value taken on by the dependent variable.
Parameter: the measure of the relationship between an explanatory variable and a dependent variable.

Regression Models are Linear

Q: What do we mean by “linear”?
A: The equation takes the form:

\[ Y = a + bX \]

where
    Y: the variable being predicted
    X: the predictor variable
    a: intercept of the line
    b: slope of the line

Regression Example #3

Using numbers, let's make up an equation for a compensation bonus system in which everyone starts with a bonus of $500 annually and then receives an additional $100 for every point earned.

    Bonus Income = $500 + $100 * Job Points

Now create a table relating job points to bonus income.

The basic model takes the form: Y = β0 + β1*X + ε
or, for the bonus pay example, Bonus = $500 + $100*(Job Points) + ε

This is the equation of a line where:
$500 is the minimum bonus when the individual has no bonus points. This is the intercept of the line.
$100 is the increase in the total bonus for every additional job point.
This is the slope of the line.

Or: β0 is the intercept on the vertical axis (the value of Y when X = 0), and β1 is the change in Y for every 1 unit change in X:

\[ \beta_1 = \frac{Y_2 - Y_1}{X_2 - X_1} = \frac{\Delta Y}{\Delta X} = \frac{\text{rise}}{\text{run}} \]

For points on the line, let X1 = 10 and X2 = 20. Using our line:
    Y1 = $500 + $100*10 = $1,500
    Y2 = $500 + $100*20 = $2,500

1. The change in bonus pay for a 1 point increase in job points:

\[ \beta_1 = \frac{\$2{,}500 - \$1{,}500}{20 - 10} = \frac{\$1{,}000}{10} = \$100 \]

2. What do we mean by “linear”? Y = β0 + β1*X + ε is the equation of a line.

An equation which is linear in coefficients but not in variables:
    Y = β0 + β1*X + β2*X² + ε

Think about a new bonus equation:

    Total Bonus = $500 + $0 * Bonus Points + $10 * (Bonus Points)²

Base bonus is still $500. You now get $0 per bonus point and $10 per bonus point squared.

Linearity of Regression Models

\( Y = \beta_0 + \beta_1 X^{\beta_2} + \varepsilon \) is not linear in its coefficients.
Regression has to be linear in coefficients, not variables.
We can mimic curves and much else if we are clever.

The Error Term

The error term is the difference between what has occurred and what we predict as an outcome. Our models are imperfect because of:
    omitted “minor” influences
    measurement error in Y and the X’s
    issues of functional form (linear model for a non-linear relationship)
    pure randomness of behavior

Our full equation is
    Y = β0 + β1*X + ε
However, we often write the deterministic part of our model as:
    E(Y|X) = β0 + β1*X
We use “conditional on X” in the same sense as in conditional probabilities. Essentially, this is our best guess about Y given the value of X.

This is also written as

\[ \hat{Y} = \beta_0 + \beta_1 X \]

Note that \( \hat{Y} \) is called “Y-hat,” the estimate of Y. So we can write the full model as:

\[ Y = \hat{Y} + \varepsilon \]

What does this mean in practice? The same X value may produce somewhat different Y values. Our predictions are imperfect!
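The two bonus schemes above can be tabulated with a few lines of code. This is a minimal sketch; the function names are hypothetical, and the point grid of 0 to 20 is an arbitrary choice for illustration.

```python
def linear_bonus(points):
    """Bonus = $500 + $100 * points (linear in the variable)."""
    return 500 + 100 * points


def quadratic_bonus(points):
    """Bonus = $500 + $0 * points + $10 * points**2.

    Still linear in the coefficients (500, 0, 10), but not in points.
    """
    return 500 + 0 * points + 10 * points ** 2


def slope(f, x1, x2):
    """Rise over run between two points on the curve f."""
    return (f(x2) - f(x1)) / (x2 - x1)


# Table relating job points to bonus income under both schemes.
print(f"{'points':>6} {'linear':>8} {'quadratic':>10}")
for p in range(0, 21, 5):
    print(f"{p:>6} {linear_bonus(p):>8} {quadratic_bonus(p):>10}")

# The linear scheme has the same slope everywhere; the quadratic does not.
print(slope(linear_bonus, 0, 10), slope(linear_bonus, 10, 20))
print(slope(quadratic_bonus, 0, 10), slope(quadratic_bonus, 10, 20))
```

Comparing the two slope lines shows why the quadratic scheme traces a curve even though a regression could still estimate its three coefficients.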
Populations, Samples, and Regression Analysis

Population Regression: Y = β0 + β1 X1 + ε
The population regression is the equation for the entire group of interest. It is similar in concept to μ, the population mean.
The population regression is indicated with Greek letters.
The population regression is typically not observed.

Sample Regressions:
As with means, we take samples and use these samples to learn about (make inferences about) populations (and population regressions).
The sample regression is written as

\[ y_i = b_0 + b_1 x_{1i} + e_i \]

or as

\[ y_i = \hat{\beta}_0 + \hat{\beta}_1 X_{1i} + e_i \]

As with all sample results, there are many samples which might be drawn from a population. These samples will typically provide somewhat different estimates of the coefficients. This is, once more, sampling variation.

Populations and Samples: Regression Example

Illustrative Exercise:
1. Estimate a simple regression model for all of the data on managers and professionals, then take random 10% subsamples of the data and compare the estimates!
2. Subsamples are generated by assigning a number between 0 and 1 to every observation using a uniform distribution. We then choose the observations with numbers between 0 and 0.1, between 0.1 and 0.2, between 0.2 and 0.3, etc.
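The subsampling procedure in the exercise can be sketched on simulated data. Everything about the data here is an assumption made for illustration: the population is synthetic (5,000 draws with an intercept of -485, a slope of 87.5 and noise with standard deviation 530), not the managers and professionals file, and the helper name `ols` is hypothetical.

```python
import random


def ols(xs, ys):
    """Simple-regression intercept and slope by least squares."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sxy / sxx
    return y_bar - b1 * x_bar, b1


rng = random.Random(832)  # fixed seed so the run is repeatable

# Synthetic "population": years of education and weekly earnings.
N = 5000
educ = [rng.randint(8, 20) for _ in range(N)]
earn = [-485 + 87.5 * e + rng.gauss(0, 530) for e in educ]
pop_b0, pop_b1 = ols(educ, earn)

# Assign every observation a uniform number between 0 and 1, then take
# the observations falling in [0, 0.1), [0.1, 0.2), ... as 10% subsamples.
draws = [rng.random() for _ in range(N)]
sample_fits = []
for k in range(5):
    lo, hi = k / 10, (k + 1) / 10
    idx = [i for i, u in enumerate(draws) if lo <= u < hi]
    sample_fits.append(ols([educ[i] for i in idx], [earn[i] for i in idx]))

print(f"population: b0 = {pop_b0:8.2f}, b1 = {pop_b1:6.2f}")
for k, (b0, b1) in enumerate(sample_fits, start=1):
    print(f"sample {k}:   b0 = {b0:8.2f}, b1 = {b1:6.2f}")
```

Each subsample should give a slope near, but not equal to, the full-data slope, which is the sampling variation the exercise is meant to show.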
POPULATION ESTIMATES:

Results for: lir832-managers-andprofessionals-2000.mtw

    The regression equation is
    weekearn = - 485 + 87.5 years ed

    47576 cases used 7582 cases contain missing values

    Predictor      Coef  SE Coef       T      P
    Constant    -484.57    18.18  -26.65  0.000
    years ed     87.492    1.143   76.54  0.000

    S = 530.5   R-Sq = 11.0%   R-Sq(adj) = 11.0%

    Analysis of Variance
    Source             DF           SS          MS        F      P
    Regression          1   1648936872  1648936872  5858.92  0.000
    Residual Error  47574  13389254994      281441
    Total           47575  15038191866

Side Note: Reading Output

"The regression equation is ...": the equation with the dependent variable.
"47576 cases used 7582 cases contain missing values": the number of observations and the number with missing data. Why is the latter important?
Predictor table: detailed information on the estimated coefficients, their standard errors, the t statistic against a null of zero, and the p-value against a null of zero.
S, R-Sq and R-Sq(adj): S, followed by two goodness of fit measures.
Analysis of Variance: the number of degrees of freedom, the explained sum of squares (ESS, the Regression row), the residual sum of squares (SSR, the Residual Error row), the total sum of squares (TSS, the Total row) and some test statistics.

SAMPLE RESULTS (each regression is weekearn on Education; P = 0.000 for every coefficient, and R-Sq(adj) equals R-Sq in every sample)

    Sample  Cases used  Cases missing  Constant  SE Coef      T  Education  SE Coef      T      S   R-Sq
    1             4719            726   -333.24    58.12  -5.73     79.208    3.665  21.61  539.5   9.0%
    2             4792            741   -488.51    56.85  -8.59     88.162    3.585  24.59  531.7  11.2%
    3             4652            773   -460.15    56.45  -8.15     85.933    3.565  24.10  525.2  11.1%
    4             4708            787   -502.18    57.51  -8.73     88.437    3.632  24.35  535.6  11.2%
    5             4737            787   -485.19    56.60  -8.57     87.875    3.572  24.60  523.4  11.3%

Populations and Samples: A Recap of the Example

    Estimate     β0 (Intercept)  β1 (Coefficient on Education)
    POPULATION          -484.57                          87.49
    Sample 1            -333.24                          79.21
    Sample 2            -488.51                          88.16
    Sample 3            -460.15                          85.93
    Sample 4            -502.18                          88.44
    Sample 5            -485.19                          87.88

The sample estimates are not exactly equal to the population estimates. Different samples produce different estimates of the slope and intercept.

Ordinary Least Squares (OLS): How We Determine the Estimates

The residual is a measure of what we do not know:

\[ e_i = y_i - b_0 - b_1 x_{1i} \]

We want e_i to be as small as possible. How do we choose (b0, b1)?

Criteria for the sample regression: choose among lines so that

\[ \sum_{i=1}^{n} e_i = 0 \]

The average value of the residual is zero. Statistically, this holds for any line that passes through the point of means (X-bar, Y-bar).
Problem: there are infinitely many lines which meet this criterion.

[Figure: Example of a Possible Regression Line. Scatter plot of Avg. Pay against Expenditures, with mean expenditures = $3,696 and mean pay = $24,356 marked.]

[Figure: Problem: Many Lines Meet That Criterion. The same scatter plot, with mean expenditures = $3,696 and mean pay = $24,356 marked.]

OLS: Choosing the Coefficients

Among these lines, find the (b0, b1) pair which minimizes the sum of squared residuals:

\[ \min \sum_{i=1}^{n} e_i^2 = e_1^2 + e_2^2 + \cdots + e_n^2 \]

We want to make the difference between the prediction and the actual value, (Y - E(Y|X)), as small as possible.
Squaring puts greater weight on avoiding large individual differences between actual and predicted values.
So we will choose the middle course, middle-sized errors, rather than a combination of large and small errors.

What are the characteristics of a sample regression?
D. It can be shown that, if these two conditions hold, our regression line is the Best Linear Unbiased Estimator (or B-L-U-E). This is called the Gauss-Markov Theorem.

Descriptive Statistics: pay, expenditures

    Variable    N   Mean  Median  TrMean  StDev  SE Mean
    pay        51  24356   23382   23999   4179      585
    expendit   51   3697    3554    3596   1055      148

    Variable  Minimum  Maximum     Q1     Q3
    pay         18095    41480  21419  26610
    expendit     2297     8349   2967   4123

    The regression equation is
    pay = 12129 + 3.31 expenditures

    Predictor    Coef  SE Coef      T      P
    Constant    12129     1197  10.13  0.000
    expendit   3.3076   0.3117  10.61  0.000

    S = 2325   R-Sq = 69.7%   R-Sq(adj) = 69.1%

    Analysis of Variance
    Source          DF         SS         MS       F      P
    Regression       1  608555015  608555015  112.60  0.000
    Residual Error  49  264825250    5404597
    Total           50  873380265

Mean of the residuals equal to zero?
Descriptive Statistics: Residual

    Variable   N  Mean  Median  TrMean  StDev  SE Mean
    Residual  51    -0    -218    -107   2301      322

    Variable  Minimum  Maximum     Q1    Q3
    Residual    -3848     5529  -2002  1689

Passes Through the Point of Means?

pay = 12129 + 3.3076 expenditures

    Variable    N   Mean
    pay        51  24356
    expendit   51   3697

    12129 + 3.3076*3697 = 12129 + 12,228.20 = $24,357.20

compared with a mean pay of $24,356. Not too bad with rounding!

[Figure: OLS: Demonstrating Residuals. Scatter plot of Avg. Pay against Expenditures with mean expenditures = $3,696 and mean pay = $24,356 marked, and two residuals labeled e1 and e2.]

How Does OLS Know Which Line is BLUE?

If we are trying to minimize the sum of squared residuals, we can manipulate the model to find the following:

\[ y_i = b_0 + b_1 x_{1i} + e_i \]
\[ e_i = y_i - b_0 - b_1 x_{1i} \]

Therefore:

\[ \sum_{i=1}^{n} e_i^2 = \sum_{i=1}^{n} (y_i - b_0 - b_1 x_{1i})^2 \]

Thus, since BLUE requires us to minimize the sum of squared residuals, OLS chooses b0 and b1 to minimize the right side (since we know y and x).

How Does OLS Calculate the Coefficients?

The formulas used for the coefficients are as follows (writing x_i for x_{1i}):

\[ b_1 = \frac{\Delta y}{\Delta x} = \frac{\mathrm{cov}(x, y)}{\mathrm{var}(x)} = \frac{\sigma_{xy}}{\sigma_x^2} = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2} \]

\[ b_0 = \bar{y} - b_1 \bar{x} \]

Illustrative Example: Attendance and Output

We want to build a model of output based on attendance.
We hypothesize the following:

    output = β0 + β1*attendance + e

    Attendance   8   3   2   6   4
    Output      40  28  20  39  28

Example Results

    The regression equation is
    output = 15.7 + 3.32 attend

    Predictor    Coef  SE Coef     T      P
    Constant   15.733    3.247  4.85  0.017
    attend     3.3190   0.6392  5.19  0.014

    S = 3.079   R-Sq = 90.0%   R-Sq(adj) = 86.6%

    Analysis of Variance
    Source          DF      SS      MS      F      P
    Regression       1  255.56  255.56  26.96  0.014
    Residual Error   3   28.44    9.48
    Total            4  284.00

    Obs  attend  output    Fit  SE Fit  Residual  St Resid
      1    8.00   40.00  42.28    2.57     -2.28     -1.35
      2    3.00   28.00  25.69    1.72      2.31      0.90
      3    2.00   20.00  22.37    2.16     -2.37     -1.08
      4    6.00   39.00  35.65    1.64      3.35      1.29
      5    4.00   28.00  29.01    1.43     -1.01     -0.37

Computing the Coefficients

    Obs   Attendance (X)  Output (Y)  (X-Xbar)  (X-Xbar)^2  (Y-Ybar)  (X-Xbar)*(Y-Ybar)
    1                  8          40       3.4       11.56         9               30.6
    2                  3          28      -1.6        2.56        -3                4.8
    3                  2          20      -2.6        6.76       -11               28.6
    4                  6          39       1.4        1.96         8               11.2
    5                  4          28      -0.6        0.36        -3                1.8
    mean             4.6          31
    sum                                               23.2                           77

    cov(x,y)/var(x) = 77/23.2 = 3.31896

The two sums can be used directly because the common divisor in cov(x,y) and var(x) cancels.
So, b1 = 3.31896. Thus, b0 = ybar - b1*xbar = 31 - 3.31896*4.6 = 15.733

Example: Residual Analysis

    Variable  N  Mean  Median  TrMean  StDev  SE Mean
    C15       5  0.00   -1.01    0.00   2.66     1.19

    Variable  Minimum  Maximum     Q1    Q3
    C15         -2.37     3.35  -2.33  2.83

Exercise

We are interested in the relationship between the number of weeks an employee has been in some firm-sponsored training course and output. We have data on three employees. Compute the coefficients for the following model:

    output = β0 + β1*training + e

                Weeks Training  Output
    employee 1              10     590
    employee 2              20     400
    employee 3              30     430

Exercise: Worksheet

Using the data, calculate b1 and b0:

               Training (X)  Output (Y)  X-Xbar  (X-Xbar)^2  Y-Ybar  (X-Xbar)*(Y-Ybar)
    Employee1
    Employee2
    Employee3
    mean       X-BAR         Y-BAR
    sum                                          VAR(X)              COV(X,Y)

    b1 = COV(X,Y)/VAR(X) =
    b0 = Y-BAR - b1*X-BAR =

OLS: The Intercept (b0)

Why you shouldn’t spend too much time worrying about the value of the intercept:

    b0 = 24356 - 3.3076*3697 ≈ 12129 (up to rounding)

Note that b0 is the value for pay if expenditures were equal to 0, something we may never observe.
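The worksheet arithmetic for the attendance and output example can be sketched as a small function. This is an illustration of the formulas b1 = sum((x - xbar)(y - ybar)) / sum((x - xbar)^2) and b0 = ybar - b1*xbar; the function name `ols_fit` is hypothetical.

```python
def ols_fit(xs, ys):
    """Return (b0, b1) for the simple regression of ys on xs."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    # Sum of cross-products and sum of squared deviations of x.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1


attendance = [8, 3, 2, 6, 4]
output = [40, 28, 20, 39, 28]

b0, b1 = ols_fit(attendance, output)
fitted = [b0 + b1 * x for x in attendance]
residuals = [y - f for y, f in zip(output, fitted)]

print(f"b0 = {b0:.3f}, b1 = {b1:.5f}")
print("residuals:", [round(e, 2) for e in residuals])
print("sum of residuals:", round(sum(residuals), 10))
```

The same function can be pointed at the three-employee training data to check a completed worksheet.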
Multiple Regression

Few outcomes are determined by a single factor:
1. We know that gender plays an important role in determining pay. Is gender the only factor?
2. What is likely to matter in determining attendance at a work site?
    our program
    holidays
    weather
    illness
    demographics of the labor force

A complete model of an outcome depends not only on including our explanatory variable of interest, but also on including other variables which we believe influence the outcome.
Getting the “correct” estimates of our coefficients depends on specifying the balance of the equation correctly. This raises the bar in our work.

Multiple Regression: Example

An example with weekly earnings:
1. Regress weekly earnings of managers on education
2. Add age and gender to the model
3. Add weekly hours to the model

Example: Weekly Earnings

    The regression equation is
    weekearn = - 485 + 87.5 years ed

    47576 cases used 7582 cases contain missing values

    Predictor      Coef  SE Coef       T      P
    Constant    -484.57    18.18  -26.65  0.000
    years ed     87.492    1.143   76.54  0.000

    S = 530.5   R-Sq = 11.0%   R-Sq(adj) = 11.0%

    Analysis of Variance
    Source             DF           SS          MS        F      P
    Regression          1   1648936872  1648936872  5858.92  0.000
    Residual Error  47574  13389254994      281441
    Total           47575  15038191866

    The regression equation is
    weekearn = - 402 + 76.4 years ed + 6.29 age - 319 Female

    47576 cases used 7582 cases contain missing values

    Predictor       Coef  SE Coef       T      P
    Constant     -401.76    18.87  -21.29  0.000
    age           6.2874   0.2021   31.11  0.000
    Female      -318.522    4.625  -68.87  0.000
    years ed      76.432    1.089   70.16  0.000

    S = 500.4   R-Sq = 20.8%   R-Sq(adj) = 20.8%

    Analysis of Variance
    Source             DF           SS          MS        F      P
    Regression          3   3126586576  1042195525  4162.27  0.000
    Residual Error  47572  11911605290      250391
    Total           47575  15038191866

    The regression equation is
    weekearn = - 1055 + 65.7 years ed + 6.87 age - 229 Female + 18.2 uhour-cd

    44839 cases used 10319 cases contain missing values

    Predictor       Coef  SE Coef       T      P
    Constant    -1054.63    19.48  -54.15  0.000
    age           6.8736   0.1932   35.57  0.000
    Female      -229.466    4.490  -51.10  0.000
    uhour-cd     18.2205   0.2183   83.47  0.000
    years ed      65.701    1.041   63.12  0.000

    S = 459.1   R-Sq = 31.8%   R-Sq(adj) = 31.8%

    Analysis of Variance
    Source             DF           SS          MS        F      P
    Regression          4   4415565740  1103891435  5237.13  0.000
    Residual Error  44834   9450180490      210782
    Total           44838  13865746230

In the last model, how does age affect weekly earnings?
How does gender affect weekly earnings?
How do average weekly hours of work affect weekly earnings?
How does the estimated effect of education change as we add these “control variables”?

Interpreting the Coefficients

In the last model, the coefficient on education indicates that for every additional year of education a manager earns an additional $65.70 per week, holding their age, gender, and hours of work constant:

    E(Weekly Income | education, age, gender, hours of work)

Alternatively, it is the difference in weekly earnings between two individuals who, except for a one year difference in years of education, are the same age and gender and work the same weekly hours (otherwise equivalent managers).

The coefficient on gender indicates that women managers earn $229.47 less per week than male managers who are otherwise similar in education, age and weekly hours of work.

Note the similarity to the comparative static exercises in labor economics in which we attempt to tease out the effect of one factor holding all other factors constant.
What is the effect of raising the demand for labor, holding supply of labor constant?
What is the effect on the wage of an improvement in working conditions, holding other compensation-related factors constant (theory of compensating differentials)?

The Effect of Adding Variables

The addition of factors to a model doesn’t always make a difference.
Example: model of teacher pay as a function of expenditures per pupil. Does region make a difference?
Regression Analysis: pay versus expenditure

    The regression equation is
    pay = 12129 + 3.31 expenditures

    Predictor    Coef  SE Coef      T      P
    Constant    12129     1197  10.13  0.000
    expendit   3.3076   0.3117  10.61  0.000

    S = 2325   R-Sq = 69.7%   R-Sq(adj) = 69.1%

Regression Analysis: pay versus expenditures, NE, S

    The regression equation is
    pay = 13269 + 3.29 expenditures - 1674 NE - 1144 S

    Predictor     Coef  SE Coef      T      P
    Constant     13269     1395   9.51  0.000
    expendit    3.2888   0.3176  10.35  0.000
    NE         -1673.5    801.2  -2.09  0.042
    S          -1144.2    861.1  -1.33  0.190

    S = 2270   R-Sq = 72.3%   R-Sq(adj) = 70.5%

Region matters, but its influence on the expenditure/pay relationship is de minimis.

Evaluating the Results

We will consider a number of criteria in analyzing a regression equation.

Before touching the data:
    Is the equation supported by sound theory?
    Are all the obviously important variables included in the model?
    Should we be using OLS to estimate this model (what is OLS)?
    Has the correct form been used to estimate the model?

The data itself:
    Is the data set a reasonable size and accurate?

The results:
    How well does the estimated regression fit the data?
    Do the estimated coefficients correspond to the expectations developed by the researcher before the data was collected?
    Does the regression appear to be free of major econometric problems?

Evaluating the Results: R-Squared (Goodness of Fit)

R² (also seen as r²), the Coefficient of Determination:
We would like a simple measure which tells us how well our equation fits our data. This is R² (the Coefficient of Determination).
For example:
    in our teacher pay model, R² = 69.7%
    for attendance/output, R² = 90.0% (R-Sq(adj) = 86.6%)
    for our weekly earnings model, R² varies from 10.6% to 31.9%

What is R²? The percentage of the total movement of the dependent variable around its mean (variance * n) explained by the explanatory variable.
R-Squared (Goodness of Fit)

Concept of R²:
Our dependent variable, Y, moves around its mean.
We are trying to explain that movement with our X’s.
If we are doing well, then most of the movement of Y should be explained (predicted) by the X’s.
That suggests that explained movement should be large and unexplained movement should be small.

\[ TSS = \sum_{i=1}^{n} (Y_i - \bar{Y})^2 = n \cdot \mathrm{var}(Y), \qquad \mathrm{var}(Y) = \frac{1}{n} \sum_{i=1}^{n} (Y_i - \bar{Y})^2 \]

\[ TSS = \sum_{i=1}^{n} (Y_i - \bar{Y})^2 = RSS + ESS \]

where RSS is the residual sum of squares and ESS is the explained sum of squares.

\[ R^2 = \frac{ESS}{TSS} = \frac{\text{explained sum of squares}}{\text{total sum of squares}} \]

Note: 0 ≤ R² ≤ 1
Suppose that we have a regression which explains nothing. Then ESS = 0 and the measure is equal to zero.
Now suppose we have a model which fits the data exactly. Every movement in Y is correctly predicted. Then ESS = TSS and our measure is equal to 1.

In other words, as we approach R² = 1, our ability to explain movement in the dependent variable increases.
Most of our results will fall into the middle range between 0 and 1.
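The definition can be checked against the Analysis of Variance tables reported elsewhere in this lecture. The sketch below takes the explained and residual sums of squares straight from three of those tables and computes R² both as ESS/TSS and as 1 - RSS/TSS, relying on TSS = ESS + RSS; the function and dictionary names are illustrative.

```python
def r_squared(ess, rss):
    """Return R-squared computed two equivalent ways from ESS and RSS."""
    tss = ess + rss                  # total = explained + residual
    return ess / tss, 1 - rss / tss


# (ESS, RSS) pairs copied from the Analysis of Variance tables.
anova = {
    "teacher pay on expenditures": (608555015, 264825250),
    "output on attendance": (255.56, 28.44),
    "weekly earnings on years of education": (1648936872, 13389254994),
}

results = {}
for name, (ess, rss) in anova.items():
    results[name] = r_squared(ess, rss)
    print(f"{name}: R-sq = {results[name][0]:.1%}")
```

The printed values should agree with the R-Sq figures shown alongside each table.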
R-Squared (Goodness of Fit)

\[ R^2 = \frac{ESS}{TSS} \]

or, more commonly,

\[ R^2 = 1 - \frac{RSS}{TSS} \]

Returning to Weekly Earnings of Managers Examples

    The regression equation is
    weekearn = - 485 + 87.5 years ed
    [equation with dependent variable]

    47576 cases used 7582 cases contain missing values
    [number of observations and number with missing data - why is the latter important?]

    Predictor      Coef  SE Coef       T      P
    Constant    -484.57    18.18  -26.65  0.000
    years ed     87.492    1.143   76.54  0.000
    [detailed information on estimated coefficients, standard error, t against a null of zero, and a p against a null of 0]

    S = 530.5   R-Sq = 11.0%   R-Sq(adj) = 11.0%
    [two goodness of fit measures]

Regression Analysis: weekearn versus Education

    The regression equation is
    weekearn = - 442 + 85.2 Education

    47576 cases used 7582 cases contain missing values

    Predictor      Coef  SE Coef       T      P
    Constant    -442.42    17.99  -24.59  0.000
    Educatio     85.228    1.136   75.01  0.000

    S = 531.7   R-Sq = 10.6%   R-Sq(adj) = 10.6%

    Analysis of Variance
    Source             DF           SS          MS        F      P
    Regression          1   1590256151  1590256151  5625.76  0.000
    Residual Error  47574  13447935715      282674
    Total           47575  15038191866

Regression Analysis: weekearn versus Education, age, female

    The regression equation is
    weekearn = - 382 + 75.0 Education + 6.53 age - 320 female

    47576 cases used 7582 cases contain missing values

    Predictor       Coef  SE Coef       T      P
    Constant     -382.38    18.78  -20.36  0.000
    Educatio      74.967    1.079   69.45  0.000
    age           6.5320   0.2020   32.34  0.000
    female      -319.952    4.628  -69.14  0.000

    S = 500.9   R-Sq = 20.6%   R-Sq(adj) = 20.6%

    Analysis of Variance
    Source             DF           SS          MS        F      P
    Regression          3   3103974768  1034658256  4124.34  0.000
    Residual Error  47572  11934217098      250866
    Total           47575  15038191866

Regression Analysis: weekearn versus Education, age, female, hours

    The regression equation is
    weekearn = - 1053 + 65.1 Education + 7.07 age - 230 female + 18.3 hours

    44839 cases used 10319 cases contain missing values

    Predictor       Coef  SE Coef       T      P
    Constant    -1053.01    19.43  -54.20  0.000
    Educatio      65.089    1.029   63.27  0.000
    age           7.0741   0.1929   36.68  0.000
    female      -229.786    4.489  -51.19  0.000
    hours        18.3369   0.2180   84.11  0.000

    S = 459.0   R-Sq = 31.9%   R-Sq(adj) = 31.9%

So the fit of the final model, with a control for hours of work, is considerably better than the fit for a model which added gender and age, and much better than the fit of a model with just education as an explanatory variable.

Adjusted R-Squared (“R-bar Squared”)

First limitation of R²:
1. As we add variables, the magnitude of ESS never falls and typically increases. If we just use R² as a criterion for adding variables to a model, we will keep adding ad infinitum. R² never falls and usually increases as one adds variables.
2. Instead use the measure R-bar squared. This measure is calculated as:

\[ \bar{R}^2 = 1 - \frac{RSS / (n - k - 1)}{TSS / (n - 1)} = 1 - \frac{RSS}{TSS} \cdot \frac{n - 1}{n - k - 1} = 1 - (1 - R^2) \cdot \frac{n - 1}{n - k - 1} \]

where
    n is the number of observations
    k is the number of explanatory variables
    (n - k - 1) is the degrees of freedom

As k, the number of regressors, becomes large, R-bar-squared becomes smaller, all else constant. It imposes a penalty on adding variables which really have very little to do with the dependent variable.
If you add irrelevant variables, R² may remain the same or increase, but R-bar-squared may well fall.
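The adjustment formula above is easy to check against output reported in this lecture. The sketch below applies R-bar-squared = 1 - (1 - R²)(n - 1)/(n - k - 1) to the two teacher pay regressions (n = 51, with k = 1 and k = 3) and to the attendance example (n = 5, k = 1); the function name is illustrative, and the R² inputs are the rounded values from the printed output.

```python
def adjusted_r_squared(r2, n, k):
    """Adjusted R-squared for n observations and k explanatory variables."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


# (label, R-squared, n, k) taken from the regression output.
cases = [
    ("pay on expenditures", 0.6968, 51, 1),
    ("pay on expenditures, NE, S", 0.723, 51, 3),
    ("output on attendance", 0.900, 5, 1),
]

for label, r2, n, k in cases:
    print(f"{label}: R-sq = {r2:.1%}, R-sq(adj) = {adjusted_r_squared(r2, n, k):.1%}")
```

With only five observations the penalty is visible even for a single regressor, while with 51 observations one regressor costs well under a percentage point.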
Adjusted R-Squared (“R-bar Squared”)

Regression Analysis: pay versus expenditure

    The regression equation is
    pay = 12129 + 3.31 expenditures

    Predictor    Coef  SE Coef      T      P
    Constant    12129     1197  10.13  0.000
    expendit   3.3076   0.3117  10.61  0.000

    S = 2325   R-Sq = 69.7%   R-Sq(adj) = 69.1%

Regression Analysis: pay versus expenditures, NE, S

    The regression equation is
    pay = 13269 + 3.29 expenditures - 1674 NE - 1144 S

    Predictor     Coef  SE Coef      T      P
    Constant     13269     1395   9.51  0.000
    expendit    3.2888   0.3176  10.35  0.000
    NE         -1673.5    801.2  -2.09  0.042
    S          -1144.2    861.1  -1.33  0.190

    S = 2270   R-Sq = 72.3%   R-Sq(adj) = 70.5%

Note that the increase in R-bar-squared is more modest than the increase in R². This is because the explanatory power of region is modest, and the effect of that power in reducing the RSS is being counter-balanced by the increase in the number of parameters.

We need to be careful in the use of R² or R-bar-squared to compare regressions.
It can be good for comparing specifications, such as with the variables in our specification for managers. It confirms our view that weekly pay is influenced by education but also by age, gender and hours (note that both R² and R-bar-squared increase).
It is not good for comparing different equations with different data sets.

Example: Teachers’ Pay

Our model using state average earnings and expenditures (pay versus expenditures, NE, S, shown above) has an R-sq of 72.3%.

Now consider a micro-data model: use our CPS data set for 2000 and merge the expenditure data into data on individual teachers.

Using STATE DATA:

    . reg teacherpay expenditures

          Source |       SS       df       MS              Number of obs =      51
    -------------+------------------------------           F(  1,    49) =  112.60
           Model |   608555015     1   608555015           Prob > F      =  0.0000
        Residual |   264825250    49  5404596.94           R-squared     =  0.6968
    -------------+------------------------------           Adj R-squared =  0.6906
           Total |   873380265    50  17467605.3           Root MSE      =  2324.8

    ------------------------------------------------------------------------------
      teacherpay |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
    expenditures |   3.307585   .3117043    10.61   0.000     2.681192    3.933978
           _cons |   12129.37   1197.351    10.13   0.000     9723.205    14535.54
    ------------------------------------------------------------------------------

Now shift to Year 2000 micro-data and append state expenditures on education:

    . summ weekearn age female uhour1 expenditure if pocc1 >= 151 & pocc1 <= 159

        Variable |     Obs        Mean   Std. Dev.       Min        Max
    -------------+-----------------------------------------------------
        weekearn |    7579    702.5348   429.1002        .02    2884.61
             age |    7903    41.63254    11.9243         15         90
          female |    7903     .732127   .4428791          0          1
          uhour1 |    7903    35.51411   15.84321         -4         99
    expenditures |    7903    3786.745   990.0271       2297       8349

Now estimate a regression equation similar to the state data equation. Note the number of observations:

    . reg weekearn expenditure NE Midwest South if pocc1 >= 151 & pocc1 <= 159

          Source |       SS       df       MS              Number of obs =    7579
    -------------+------------------------------           F(  4,  7574) =   29.17
           Model |  21170657.3     4  5292664.32           Prob > F      =  0.0000
        Residual |  1.3741e+09  7574  181429.033           R-squared     =  0.0152
    -------------+------------------------------           Adj R-squared =  0.0147
           Total |  1.3953e+09  7578  184126.967           Root MSE      =  425.94

    ------------------------------------------------------------------------------
        weekearn |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
    expenditures |   .0390489   .0060053     6.50   0.000     .0272769    .0508209
              NE |   58.93258   15.97818     3.69   0.000     27.61091    90.25425
         Midwest |   32.89631   14.26238     2.31   0.021     4.938092    60.85454
           South |   2.824219    13.6974     0.21   0.837    -24.02649    29.67493
           _cons |   533.6598   24.94855    21.39   0.000     484.7538    582.5659
    ------------------------------------------------------------------------------

For every $1 in expenditures we get 3.9¢ in teacher pay per week or, on a 52 week basis, $2.03!

Build a more suitable model and R-sq increases.

    . reg weekearn expenditure female black NE Midwest South age coned if pocc1 >= 151 & pocc1 <= 159

          Source |       SS       df       MS              Number of obs =     416
    -------------+------------------------------           F(  8,   407) =   16.17
           Model |  19477648.0     8  2434706.00           Prob > F      =  0.0000
        Residual |  61297869.1   407  150609.015           R-squared     =  0.2411
    -------------+------------------------------           Adj R-squared =  0.2262
           Total |  80775517.1   415   194639.80           Root MSE      =  388.08

    ------------------------------------------------------------------------------
        weekearn |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
    -------------+----------------------------------------------------------------
    expenditures |   .0636406   .0726116     0.88   0.381       -.0791    .2063811
          female |  -88.36832   43.00688    -2.05   0.041    -172.9117   -3.824972
           black |   72.28883   48.35753     1.49   0.136    -22.77287    167.3505
              NE |  -84.77944   116.1567    -0.73   0.466    -313.1213    143.5625
         Midwest |   -38.8376   54.29961    -0.72   0.475    -145.5803     67.9051
           South |  -.8350449   48.33866    -0.02   0.986    -95.85964    94.18955
             age |   8.797438   1.653155     5.32   0.000     5.547649    12.04723
           coned |   81.40359   11.48141     7.09   0.000     58.83332    103.9739
           _cons |  -1089.701   300.7268    -3.62   0.000    -1680.873   -498.5296
    ------------------------------------------------------------------------------

Why the difference in R-sq?
Different levels of aggregation of data lead to different total variance.
Micro-data has much more variance than state average data (why might this be?).
Time series data often has an R-sq of .98 or .99.
As a result, we cannot use R-sq to compare the results across different data sets or types of regressions. It can be useful to compare specifications within a particular model.

Correlation & R-Squared

R² and ρ: what is the relationship?
ρ is the population value of the correlation; in the sample, the symbol for correlation is r.
If r is the correlation between X and Y, then R², the goodness of fit measure of a regression equation, is r².
Note that this ONLY holds for bivariate relationships.
An example for the relationship between education expenditures and teacher pay:

Correlation & R-Squared: Example

Results for: Teacher Expenditure.MTW

    Correlations: pay, expenditures
    Pearson correlation of pay and expenditures = 0.835
    P-Value = 0.000

    Regression Analysis: pay versus expenditures
    The regression equation is
    pay = 12129 + 3.31 expenditures

    Predictor    Coef  SE Coef      T      P
    Constant    12129     1197  10.13  0.000
    expendit   3.3076   0.3117  10.61  0.000

    S = 2325   R-Sq = 69.7%   R-Sq(adj) = 69.1%

    r² = 0.835² = 0.697225 = R²
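The bivariate identity r² = R² can also be verified numerically on raw data. The sketch below uses the five attendance and output observations from earlier in this lecture, since the state-level pay data are not listed; it computes the Pearson correlation and, separately, R² = 1 - RSS/TSS from a least-squares fit. The function names are illustrative.

```python
import math


def pearson_r(xs, ys):
    """Sample Pearson correlation between xs and ys."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def regression_r_squared(xs, ys):
    """R-squared = 1 - RSS/TSS from the least-squares line of ys on xs."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    b1 = (sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
          / sum((x - x_bar) ** 2 for x in xs))
    b0 = y_bar - b1 * x_bar
    rss = sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))
    tss = sum((y - y_bar) ** 2 for y in ys)
    return 1 - rss / tss


attendance = [8, 3, 2, 6, 4]
output = [40, 28, 20, 39, 28]

r = pearson_r(attendance, output)
r2 = regression_r_squared(attendance, output)
print(f"r = {r:.4f}, r squared = {r * r:.4f}, R-sq = {r2:.4f}")
```

The two squared quantities should agree to within floating-point error, and both should match the 90.0% reported for the attendance regression.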