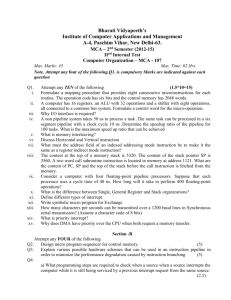

Tips and Tricks for Minimizing ARM Cortex-M CPU Power Consumption

By Matt Saunders, Senior Marketing Manager, Microcontroller and Wireless Products, Silicon Labs

There are myriad CPU choices available to embedded developers, and each choice has its own idiosyncrasies, architectural benefits and array of power-saving techniques. To provide a useful context for the wide range of embedded CPU options, it’s helpful to focus on one widely used architecture: the ARM Cortex-M series. This architectural choice provides several CPU variants to explore and many different MCU devices from silicon vendors. The diversity of options within the Cortex-M series is important because minimizing CPU power consumption depends not just on the CPU architecture itself but also on the supporting elements around it, such as interrupt controllers, memory interfaces, memory accelerators, low-power modes and intelligent peripherals.

Everything You Need to Know about Thumb-2

Let’s begin our look at power-saving techniques in a place that might not be an obvious starting point: the instruction set. Cortex-M CPUs all use the Thumb-2 instruction set, which blends the 32-bit ARM instruction set with the 16-bit Thumb instruction set and provides a flexible solution for both raw performance and overall code size. A typical Thumb-2 application on Cortex-M is roughly 25 percent smaller than, and about 90 percent as efficient as (when optimized for time), the same code written entirely in ARM instructions.

Thumb-2 includes a number of powerful instructions that reduce the cycle count for basic operations. Fewer cycles for the same job means significantly less energy spent by the CPU. Consider, for example, a 16-bit multiply (see Figure 1). Performing this operation on an 8-bit 8051-based MCU would take 48 clock cycles and 48 bytes of code. On a 16-bit core such as the C166, the same operation would take roughly 8 clock cycles and 8 bytes.
In contrast, on a Cortex-M3 core using Thumb-2, this is done in a single cycle and uses only two bytes of flash. The Cortex-M3 saves power by using fewer clock cycles to do the same job and by using much less flash memory, and therefore far fewer flash accesses, to achieve the end result (in addition to potentially fitting the application into a smaller flash and reducing overall system power). We could spend a lot more time examining the Thumb-2 instruction set, but there are other important areas to cover as well.

Figure 1. Cycle Count Comparison

Power-Saving Interrupt Controller Techniques

The interrupt controller (Nested Vectored Interrupt Controller, or NVIC) in the Cortex-M architecture also plays a role in reducing CPU power consumption. The Cortex-M3 NVIC takes just 12 cycles to get from the interrupt request to executing the interrupt code, compared with up to 42 cycles on the earlier ARM7TDMI, which is a clear gain in efficiency and a reduction in wasted CPU time.

In addition to the fast entry to the interrupt routine, the NVIC also manages moving from one interrupt routine to another much more efficiently. On ARM7TDMI implementations, it was necessary to spend cycles moving from the interrupt routine back to the main application, and then back into the next interrupt routine, burning up to 42 cycles between interrupt service routines on just “push-and-pop” functions. The Cortex-M NVIC enables a much more efficient method for accomplishing this task called “tail-chaining.” This method uses a simple 6-cycle process for getting the necessary information to run the next service routine. With tail-chaining, there is no need to do a full push-and-pop cycle, resulting in an overall savings of 65 percent in the clock cycles spent managing interrupts (see Figure 2).

Figure 2.
Interrupt Response on ARM7 and Cortex-M3

Power-Saving Memory Considerations

Memory interfaces and memory accelerators can have a significant impact on how much power the CPU consumes. Branches and jumps in the code flush the pipeline that feeds instructions to the CPU, and the CPU then stalls for a number of cycles while the pipeline is refilled. The Cortex-M3 and Cortex-M4 CPUs are equipped with a 3-stage pipeline. Flushing this pipeline will cause the CPU to stall for three cycles (even longer if there are wait-states on the flash) while it is refilled. These stalls waste power because effectively nothing useful is happening. To help mitigate the delay, the Cortex-M3 and M4 cores include a function called Speculative Fetch, which upon fetching a branch in the pipeline will also fetch the likely branch target. If that branch is taken, then the Speculative Fetch will have reduced the stall by one cycle. While this feature is useful, it is clearly not enough, and many vendors of Cortex-M devices add their own IP to enhance this capability.

Consider, for example, different approaches to instruction caches used in popular ARM Cortex-M class MCUs. An MCU with a simple instruction cache, such as an EFM32 device from Silicon Labs, may store 128 x 32-bit words (512 bytes) of the most recently executed instructions, with logic to determine whether the requested address is in the cache. The EFM32 reference manuals suggest a typical application will get a hit rate of greater than 70 percent on this cache, meaning far fewer flash accesses, faster execution of the code and an overall reduction in power consumption. In contrast, an ARM-based MCU using a branch cache with 64 x 128-bit buffers can store the first few instructions of each branch target (a minimum of 4 and a maximum of 8 for each branch, depending on the mix of 16-bit and 32-bit instructions).
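
To make the cache figures above concrete, here is a minimal C sketch of the underlying arithmetic. The function names are hypothetical, and counting one flash access per cache miss is a simplifying assumption made for illustration, not something the EFM32 reference manuals state.

```c
#include <assert.h>
#include <stdint.h>

/* Total cache storage in bytes for `entries` lines of `bits_per_entry` bits. */
uint32_t cache_bytes(uint32_t entries, uint32_t bits_per_entry)
{
    return (entries * bits_per_entry) / 8u;
}

/* Number of instructions of a given width that fit in one cache line. */
uint32_t instructions_per_line(uint32_t line_bits, uint32_t instr_bits)
{
    assert(instr_bits != 0u);
    return line_bits / instr_bits;
}

/* Fetches that still reach flash, out of `fetches`, for a hit rate given
 * in percent. Assumption (illustrative only): one flash access per miss. */
uint32_t flash_accesses(uint32_t fetches, uint32_t hit_rate_percent)
{
    assert(hit_rate_percent <= 100u);
    return fetches * (100u - hit_rate_percent) / 100u;
}
```

With the numbers from the text, `cache_bytes(128, 32)` gives the 512 bytes of the simple instruction cache, while `instructions_per_line(128, 32)` and `instructions_per_line(128, 16)` give the 4 and 8 instructions that one 128-bit branch-cache buffer can hold. Under the one-access-per-miss assumption, `flash_accesses(1000, 70)` leaves 300 of every 1,000 fetches going to flash.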
A branch cache implementation can fill the pipeline in a single cycle for any branch or jump that hits the cache, eliminating any stalls or wasted cycles for the CPU. Both of these cache techniques offer considerable improvements in performance and reductions in CPU power consumption compared to the same CPU without these cache features. (Both cache types also minimize accesses to flash, enabling an overall reduction in MCU power consumption as well.)

Closer Look at the M0+ Core

For power-sensitive applications where every nanowatt counts, the Cortex-M0+ core is a good option. The M0+ is based on a von Neumann architecture (whereas the Cortex-M3 and Cortex-M4 cores are Harvard architecture), meaning it has a smaller gate count for lower overall consumption figures and only a minimal hit on performance (0.93 DMIPS/MHz vs. 1.25 DMIPS/MHz for its bigger ARM siblings). It also uses a smaller subset of the Thumb-2 instruction set (see Figure 3). Nearly all of the instructions have 16-bit opcodes (52 x 16-bit opcodes and 7 x 32-bit opcodes; data operations are all 32-bit), enabling some interesting options for reducing CPU power consumption.

Figure 3. Cortex-M0+ Instruction Set

The first of these power-saving options is to reduce the number of flash accesses. Having a predominantly 16-bit instruction set means you can access the flash on alternate cycles (see Figure 4) and fetch two instructions for the pipeline with each access. This assumes that you have two instructions aligned in a 32-bit word in memory; where the instructions are not aligned, the Cortex-M0+ will disable half of the bus to conserve every last bit of energy.

Figure 4. Alternate Clock Flash Access on Cortex-M0+

Additionally, the Cortex-M0+ core can save power as a byproduct of the reduction to a 2-stage pipeline. In pipelined processors, subsequent instructions are fetched while the CPU executes the current instructions.
If the program branches and does not use the subsequently fetched instructions, the energy used to fetch them (the branch shadow) is effectively wasted. In a 2-stage pipeline this branch shadow is reduced, so energy can be saved (although it is a small amount), and there is also one cycle less to refill the pipeline in the event it is flushed (see Figure 5).

Figure 5. Pipeline and Branch Shadow

Leveraging GPIO Ports to Save Power

Another area where the Cortex-M0+ core offers some power savings is through its high-speed GPIO ports. On the Cortex-M3 and Cortex-M4 cores, toggling a bit or GPIO port means performing a “read-modify-write” on a 32-bit register. While the Cortex-M0+ can use this method too, it has a dedicated 32-bit-wide I/O port that gives single-cycle access to the GPIO, making it possible to toggle the bit or pin efficiently. Note: this is an optional feature on the Cortex-M0+, so not all vendors will implement it.

Putting the CPU to Sleep

One of the most effective ways to minimize CPU power consumption is to switch off the CPU itself. There are a number of different sleep modes in the Cortex-M architecture, each offering a tradeoff between power consumption in the chosen sleep mode and the startup time before code executes again (see Figure 6). It is also possible to have the CPU enter a sleep mode automatically upon completing an interrupt service routine (the sleep-on-exit feature), without the need for a line or two of code to do the job. This approach saves CPU cycles on a task that is likely to be very common in an ultra-low-power application.

Figure 6. Cortex-M Native Low-Power Modes

In deep sleep mode, it’s also possible to use the Wakeup Interrupt Controller (WIC) to offload the NVIC. When using the WIC, the NVIC does not need a clock in low-power modes in order for external interrupts to wake up the CPU.

Offloading the CPU with Autonomous Peripherals

Autonomous on-chip peripherals also can be a real benefit for power reduction.
Most MCU vendors have their own architectures for enabling autonomous interaction between peripherals, such as the Peripheral Reflex System (PRS) used in Silicon Labs’ EFM32 MCU devices. Autonomous peripheral implementations enable fairly complex chains of peripheral actions (triggers rather than data transfers) to take place while the CPU remains asleep. For example, using the PRS capability of an EFM32 MCU, the application could be configured such that in a low-power mode with the CPU asleep, the onboard comparator detects a voltage that crosses its threshold, triggering a timer to start counting down. When that timer hits zero, it then triggers the DAC to start its output, all while the CPU remains asleep.

With such complex interactions occurring autonomously, it is easy to get a lot done without CPU intervention. Furthermore, peripherals that have some built-in intelligence, such as sensor interfaces or pulse counters, can be configured to wake the CPU with an interrupt on a pre-configured condition, such as having counted 10 pulses. In this example, when the CPU wakes to that specific interrupt, it knows exactly what needs to be done and doesn’t need to check counters or registers to see what information has come in, thereby saving some cycles and getting on with other important tasks.

Parting Thoughts

We’ve covered some of the easier-to-access methods of reducing CPU power consumption across the wide choice of Cortex-M devices. There are, of course, other considerations that affect power consumption, such as the process node used to manufacture the device or the memory technology used to store the application code. Process and memory technologies can have significant impacts on both run-time power consumption and leakage in low-power modes, and thus should be factored into an embedded developer’s overall power-saving strategy.
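
As a closing illustration, the pulse-counter wake-up described under “Offloading the CPU with Autonomous Peripherals” can be modeled with a small host-side simulation in C. This is a minimal sketch rather than vendor code: the `pulse_counter_t` type and both function names are hypothetical, and the model assumes the peripheral clears its count each time it wakes the CPU.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of a pulse-counter peripheral (not a vendor API). */
typedef struct {
    uint32_t count;      /* pulses seen since the last wake-up */
    uint32_t threshold;  /* pulses required before waking the CPU */
} pulse_counter_t;

/* Called once per input pulse. Returns true only when the threshold is
 * reached, i.e. when the peripheral would raise its wake-up interrupt. */
bool pulse_counter_on_pulse(pulse_counter_t *pc)
{
    assert(pc != NULL);
    pc->count++;
    if (pc->count >= pc->threshold) {
        pc->count = 0u;  /* assumed behavior: counter restarts after a wake-up */
        return true;
    }
    return false;
}

/* Counts how many times the CPU is woken for a burst of `pulses` pulses. */
uint32_t wakeups_for_pulses(uint32_t pulses, uint32_t threshold)
{
    pulse_counter_t pc = { 0u, threshold };
    uint32_t wakeups = 0u;
    for (uint32_t i = 0u; i < pulses; i++) {
        if (pulse_counter_on_pulse(&pc)) {
            wakeups++;
        }
    }
    return wakeups;
}
```

In this model, `wakeups_for_pulses(100, 10)` wakes the CPU 10 times, whereas a threshold of 1 (an interrupt on every pulse) would wake it 100 times. That difference is the saving the article attributes to letting the peripheral count on the CPU’s behalf.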