CPSC 533

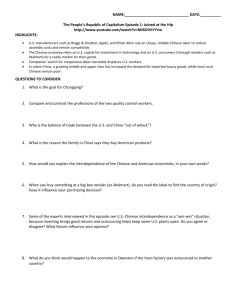

CPSC 533 Philosophical Foundations of Artificial Intelligence

Presented by: Arthur Fischer

Philosophical Questions in AI

How can mind arise from nonmind? (This is the mind-body problem.)
How can there be “free will” in the mind, if the brain is subject to the laws of nature?
What does it mean to “know” or “understand” something?
Can we mechanise the discovery of knowledge?

More Questions

Is there such a thing as a priori knowledge?
What is the structure of knowledge?
Can mind exist in something other than a brain?
What do we communicate when we communicate with language?

Alan M. Turing, Computing Machinery and Intelligence

The Imitation Game

If a computer can “fool” a human interrogator into believing that it is human, then it may be said that the machine is intelligent, and thinks. The scope of questions that may be asked is virtually unlimited.

An Interrogation

Q: Please write me a sonnet on the subject of the Forth Bridge.
A: Count me out of this one. I never could write poetry.

Q: Add 34957 to 70764.
A: <Pause about 30 seconds> 105621.

Q: Do you play chess?
A: Yes.

Q: I have K at my K1, and no other pieces. You have only K at K6 and R at R1. It is your move. What do you play?
A: <Pause about 15 seconds> R-R8 mate.

The Theological Objection

“Thinking is a function of man’s immortal soul. God has given an immortal soul to every man and woman, but not to any other animal or to machines. Hence no animal or machine can think.”

The ‘Heads in the Sand’ Objection

“The consequences of machines thinking would be too dreadful. Let us hope and believe that they cannot do so.”

The Mathematical Objection

“[T]here are limitations to the powers of discrete-state machines”; therefore, the objection runs, there are questions that humans can answer but machines cannot.
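The limitation this objection appeals to is chiefly Gödel's theorem, which Turing's paper calls the best known of these results. As a rough sketch only, it can be stated schematically as follows. The notation ($F$, $\mathrm{Prov}_F$, $G_F$) is introduced here for illustration and does not appear in the slides.

```latex
% Assumptions (not in the slides): F is a consistent, effectively axiomatised
% formal system containing elementary arithmetic; Prov_F is its provability
% predicate; \ulcorner G \urcorner is the numeral coding the sentence G.
% Requires amssymb for \ulcorner, \urcorner and \nvdash.

% The Goedel sentence G_F asserts, under interpretation, its own unprovability:
\[
  F \vdash \; G_F \leftrightarrow \neg\,\mathrm{Prov}_F\!\left(\ulcorner G_F \urcorner\right)
\]

% First incompleteness theorem: if F is consistent, G_F is not provable in F.
\[
  F \text{ consistent} \;\Longrightarrow\; F \nvdash G_F
\]
```

The objection then claims that a human can see that $G_F$ is true, while the machine formalised by $F$ cannot prove it.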

The Argument from Consciousness

“Not until a machine can write a sonnet or compose a concerto because of thoughts and emotions felt, and not by the chance fall of symbols, could we agree that machine equals brain - that is, not only write it but know that it had written it. No mechanism could feel (and not merely artificially signal, an easy contrivance) pleasure at its successes, grief when its valves fuse, be warmed by flattery, be made miserable by its mistakes, be charmed by sex, be angry or depressed when it cannot get what it wants.”

Arguments from Various Disabilities

“I grant you that you can make machines do all the things that you have mentioned but you will never be able to make one to do X.”

Lady Lovelace’s Objection

“The Analytical Engine has no pretensions to originate anything. It can do whatever we know how to order it to perform.”
“[A] machine can ‘never do anything really new’.”

Argument from Continuity in the Nervous System

“The nervous system is certainly not a discrete-state machine. A small error in the information about the size of a nervous impulse impinging on a neuron, may make a large difference to the size of the outgoing impulse. It may be argued that, this being so, one cannot expect to be able to mimic the behaviour of the nervous system with a discrete-state machine.”

The Argument from Informality of Behaviour

“It is not possible to produce a set of rules purporting to describe what a man should do in every conceivable set of circumstances. … To attempt to provide rules of conduct to cover every eventuality… appears to be impossible.”

The Argument from Extra-Sensory Perception

Using ESP, an interrogator could conceivably get around the Imitation Game by asking questions that could be answered correctly with any frequency only through telepathy or clairvoyance.

J. R. Lucas, Minds, Machines and Gödel

Incompleteness in a Nutshell

In any consistent formal system which is strong enough to produce simple arithmetic, there are formulae which cannot be proved-in-the-system. Even if you add these formulae as axioms to the formal system, there exist other formulae that cannot be proved-in-the-system. This is a necessity for any such formal system.

How do we do it?

Create a formal statement which says, under interpretation, “I cannot be proven in this formal system”. This statement is called a Gödel-statement. If the statement were provable in the system, it would be false, and thus the system would be inconsistent. If the statement cannot be proven in the system, it is true, and therefore there are true statements that cannot be proven in the system, meaning that the system is incomplete.

The Argument

Since a machine is a concrete representation of a formal system, a human mind can find the system’s Gödel-statement, and the machine would be unable to correctly determine that the statement is true. The human interrogator can (trivially) determine that the statement is true; therefore there is something that the human mind can do that the machine cannot. Ergo, the human mind is not a machine, and in no possible way can a machine be equivalent to a human mind.

Objections

This assumes that the human mind is not a formal system itself. The argument “begs the question.”
“Lucas cannot consistently assert this sentence.” could be seen as Lucas’ Gödel-statement. [C. H. Whiteley]
Lucas imagines that machines must necessarily work at the “machine level” of gears, switches, transistors, etc., while implicitly assuming that the human mind works at a higher level.

John Searle, Minds, Brains, and Programs

Into the Chinese Room

If a computer’s answers are indistinguishable from a human’s, then that computer is said to have “understood” the questions as well as a human. This is a Strong AI position.
Therefore, if Searle, following rules for manipulating Chinese symbols he cannot read, produces answers indistinguishable from a native speaker’s, then on this position Searle must understand Chinese (writing) as well as any native speaker of Chinese.

A Possible Instruction

Conclusions

The Turing Test is not a suitable test for intelligence. Could something think, understand, and so on solely in virtue of being a computer with the right sort of program? Could instantiating a program by itself be a sufficient condition of understanding? NO.

The Systems Reply

“While it is true that the individual person… does not understand [Chinese], the fact is that he is merely part of a whole system, and the system does understand the story.”

The Brain Simulator Reply

“Suppose we design a program that … simulates the actual sequence of neuron firings at the synapses of the brain of a native Chinese speaker…. … Now surely in such a case we would have to say that the machine understood the stories; and if we deny that, wouldn’t we also have to deny that native Chinese speakers understood the stories?”

The Other Minds Reply

“How do you know that other people understand Chinese or anything else? Only by their behavior.”
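The rule-following set-up that these replies argue over can be sketched in code. This is a minimal illustration, not Searle's own formulation: the rule book is modelled as a plain lookup table, and `RULE_BOOK`, `DEFAULT_REPLY`, `room_operator`, and the sample symbol strings are hypothetical names and data invented for the example. The operator matches incoming symbols by shape alone and never consults their meaning.

```python
# Hypothetical rule book: maps an incoming string of Chinese symbols to the
# string the operator should hand back. Entries are illustrative only.
RULE_BOOK = {
    "你好吗？": "我很好，谢谢。",  # "How are you?" -> "I am fine, thank you."
    "你会下棋吗？": "会。",        # "Do you play chess?" -> "Yes."
}

# Reply handed back when no rule matches ("Please say that again.").
DEFAULT_REPLY = "请再说一遍。"


def room_operator(symbols: str) -> str:
    """Return the reply the rule book dictates for the given symbols.

    The lookup compares symbol strings only; nothing here translates or
    interprets the text. The English glosses above are for the reader,
    not for the operator.
    """
    return RULE_BOOK.get(symbols, DEFAULT_REPLY)


if __name__ == "__main__":
    for question in ["你好吗？", "你会下棋吗？", "今天星期几？"]:
        print(question, "->", room_operator(question))
```

Whether the table, the operator, or the two together could be said to understand is exactly what Searle and the Systems Reply dispute; the sketch takes no side.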