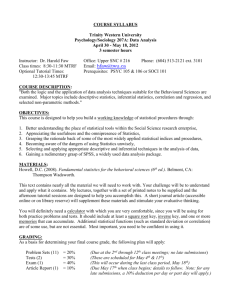

Continuous variables - VAM Resource Center

Hypothesis testing

5th - 9th December 2011, Rome

Hypothesis testing

Hypothesis testing involves:

1. defining research questions, and

2. assessing whether changes in an independent variable are associated with changes in the dependent variable by conducting a statistical test

Dependent and independent variables

Dependent variables are the outcome variables

Independent variables are the predictive/explanatory variables

Examples…

Research question: Is educational level of the mother related to birthweight?

Which is the dependent variable and which is the independent variable?

Research question: Is access to roads related to educational level of mothers?

Now?

Test statistics

To test hypotheses, we rely on test statistics…

Test statistics are simply the result of a particular statistical test

The most common include:

T-tests calculate T-statistics

ANOVAs calculate F-statistics

Correlations calculate the Pearson correlation coefficient

Significant test statistic

Is the relationship observed by chance, or because there actually is a relationship between the variables?

The probability of obtaining the observed test statistic by chance alone is referred to as a p-value and is expressed as a decimal (e.g. p = 0.05)

If the probability of obtaining the value of our test statistic by chance is less than 5% (p < 0.05), then we generally accept the experimental hypothesis as true: there is an effect in the population

Ex: if p = 0.1, what does this mean? Do we accept the experimental hypothesis?

This probability is also referred to as the significance level (Sig.)
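The decision rule above can be sketched in a few lines of Python. This is only an illustration: the function name `is_significant` and the default threshold `alpha=0.05` are assumptions chosen to mirror the 5% convention on the slide.

```python
def is_significant(p_value, alpha=0.05):
    """Return True when the p-value is below the chosen threshold (5% by default)."""
    return p_value < alpha


# Apply the rule to a few example p-values (illustrative values only).
for p in (0.01, 0.05, 0.1):
    verdict = "significant" if is_significant(p) else "not significant"
    print(f"p = {p}: {verdict} at the 5% level")
```

With this rule, p = 0.1 is above the 5% threshold, so the experimental hypothesis would not be accepted.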

Statistical significance

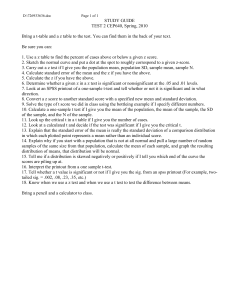

Hypothesis testing Part 1: Continuous variables

Topics to be covered in this presentation

T- test

One way analysis of variance (ANOVA)

Correlation

Hypothesis testing…

WFP tests a variety of hypotheses…

Some of the most common include:

1. Looking at differences between groups of people (comparisons of means)

Ex. Are different livelihood groups more likely to have different levels of food consumption?

2. Looking at the relationship between two variables…

Ex. Is asset wealth associated with food consumption?

How to assess differences in two means statistically

T-tests

T-test

A test using the t-statistic that establishes whether two means differ significantly.

Independent means t-test:

It is used in situations in which there are two experimental conditions and different participants have been used in each condition.

Dependent or paired means t-test:

This test is used when there are two experimental conditions and the same participants took part in both conditions of the experiment.
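As a rough sketch of the paired case, the t-statistic can be computed from the differences between the two measurements of each participant. The data and the function name `paired_t` below are hypothetical and only serve to show the arithmetic.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(before, after):
    """Paired-means t-statistic: mean difference divided by its standard error."""
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    standard_error = stdev(diffs) / sqrt(n)  # stdev uses the N - 1 denominator
    return mean(diffs) / standard_error, n - 1


# Hypothetical scores for the same four participants in two conditions.
before = [10, 12, 9, 11]
after = [12, 14, 10, 13]

t_stat, df = paired_t(before, after)
print(f"t = {t_stat:.3f}, df = {df}")
```

The independent-means test cannot use this shortcut, because with different people in each group there are no per-participant differences to compute.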

T-test: assumptions

Independent t-tests work well if:

the variables being compared are continuous

the groups to compare are composed of different people

within each group, the variable’s values are normally distributed

there is the same level of homogeneity (variance) in the two groups.

Normal distribution

Normal distributions are perfectly symmetrical around the mean

(for the standard normal distribution, the mean is equal to zero)

Values close to the mean (zero for the standard normal) have higher frequency.

Values very far from the mean are less likely to occur (lower frequency)
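These properties can be checked numerically. The sketch below uses `NormalDist` from Python's standard `statistics` module for the standard normal distribution (mean 0, standard deviation 1); the particular points compared are arbitrary choices.

```python
from statistics import NormalDist

# Standard normal distribution: mean 0, standard deviation 1.
standard_normal = NormalDist(mu=0.0, sigma=1.0)

# Symmetry: the density is the same at equal distances either side of the mean.
left = standard_normal.pdf(-1.2)
right = standard_normal.pdf(1.2)

# Values close to the mean are more frequent than values far from it.
near = standard_normal.pdf(0.5)
far = standard_normal.pdf(3.0)

# Share of cases expected within two standard deviations of the mean.
share_within_2sd = standard_normal.cdf(2) - standard_normal.cdf(-2)

print(f"density at -1.2 and +1.2: {left:.4f}, {right:.4f}")
print(f"density near the mean: {near:.4f}, far from the mean: {far:.4f}")
print(f"share within 2 standard deviations: {share_within_2sd:.3f}")
```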

Variance

Variance measures how similar cases are on a specific variable (level of homogeneity)

V = (sum of all the squared distances from the mean) / N

Variance is low → cases are very similar to the mean of the distribution (and to each other). The group of cases is therefore homogeneous (on this variable)

Variance is high → cases tend to be very far from the mean (and different from each other). The group of cases is therefore heterogeneous (on this variable)
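A minimal sketch of the formula above, using two made-up groups with the same mean but very different spread. The group values and the function name `variance` are hypothetical.

```python
from statistics import mean, pvariance


def variance(values):
    """Sum of squared distances from the mean, divided by N (as on the slide)."""
    m = mean(values)
    return sum((x - m) ** 2 for x in values) / len(values)


# Two hypothetical groups, both with a mean of 20.
homogeneous = [20, 21, 19, 20, 20]
heterogeneous = [2, 40, 11, 35, 12]

print("homogeneous group variance:", variance(homogeneous))
print("heterogeneous group variance:", variance(heterogeneous))

# The standard library's pvariance uses the same N denominator.
print("check against pvariance:", pvariance(heterogeneous))
```

Note that Python's `statistics.variance` divides by N - 1 instead of N, so it gives slightly larger values than the formula shown here.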

Homogeneity of Variance

The t-test works well if the two groups have the same homogeneity (variance) on the variable. If one group is very homogeneous and the other is not, the standard t-test becomes unreliable.

The independent t-test

The independent t-test compares two means when those means have come from different groups of people.

To conduct an independent t-test in SPSS

1. Click on “Analyze” drop down menu

2. Click on “Compare Means”

3. Click on “Independent-Samples T Test…”

4. Move the independent and dependent variable into proper boxes

5. Click “OK”

T-test: SPSS procedure

Drag the variables into the proper boxes

Define values for the independent variable

One note of caution about independent t-tests

It is important to ensure that the assumption of homogeneity of variance is met:

To do so:

Look at the column labelled Levene’s Test for Equality of Variance.

If the Sig. value is less than .05 then the assumption of homogeneity of variance has been broken and you should look at the row in the table labelled “Equal variances not assumed”.

If the Sig. value of Levene’s test is bigger than .05 then you should look at the row in the table labelled “Equal variances assumed”.
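The rule for choosing which row to read can be written as a small helper. This is only a sketch of the decision described above; the function name `row_to_read` is hypothetical and is not part of SPSS.

```python
def row_to_read(levene_sig, alpha=0.05):
    """Pick the row of the Independent Samples Test table to interpret."""
    if levene_sig < alpha:
        # Variances differ: homogeneity of variance assumption is broken.
        return "Equal variances not assumed"
    return "Equal variances assumed"


# Example Sig. values for Levene's test (illustrative only).
for sig in (0.01, 0.950):
    print(f"Levene Sig. = {sig}: read the row '{row_to_read(sig)}'")
```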

T-test: SPSS output

Group Statistics (coping strategies index)

| Beneficiary household as per CP records | N | Mean | Std. Deviation | Std. Error Mean |
|---|---|---|---|---|
| 1 | 581 | 40.9019 | 30.70829 | 1.27399 |
| 2 | 568 | 42.3750 | 32.38332 | 1.35877 |

Look at the Levene’s Test …

If the Sig. value of the test is less than .05, groups have different variance. Read the row “Equal variances not assumed”

If the Sig. value of the test is bigger than .05, read the row labelled “Equal variances assumed”

Independent Samples Test (coping strategies index)

| | Levene's Test: F | Levene's Test: Sig. | t | df | Sig. (2-tailed) | Mean Difference | Std. Error Difference | 95% CI of the Difference: Lower | 95% CI of the Difference: Upper |
|---|---|---|---|---|---|---|---|---|---|
| Equal variances assumed | .004 | .950 | -.791 | 1147 | .429 | -1.47311 | 1.86149 | -5.12542 | 2.17921 |
| Equal variances not assumed | | | -.791 | 1140.469 | .429 | -1.47311 | 1.86261 | -5.12764 | 2.18143 |
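As a cross-check, the t, df and standard error figures in both rows of the Independent Samples Test can be reproduced from the Group Statistics alone (N, mean and standard deviation per group). The sketch below uses the standard pooled-variance and Welch formulas; the variable names are illustrative, and the p-value is left out because Python's standard library has no t-distribution.

```python
from math import sqrt

# Group Statistics from the SPSS output (coping strategies index).
n1, mean1, sd1 = 581, 40.9019, 30.70829
n2, mean2, sd2 = 568, 42.3750, 32.38332
diff = mean1 - mean2  # mean difference

# Row "Equal variances assumed": pooled variance across both groups.
pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
se_pooled = sqrt(pooled_var * (1 / n1 + 1 / n2))
t_pooled = diff / se_pooled
df_pooled = n1 + n2 - 2

# Row "Equal variances not assumed": Welch's correction.
v1, v2 = sd1**2 / n1, sd2**2 / n2
se_welch = sqrt(v1 + v2)
t_welch = diff / se_welch
df_welch = (v1 + v2) ** 2 / (v1**2 / (n1 - 1) + v2**2 / (n2 - 1))

print(f"assumed:     t = {t_pooled:.3f}, df = {df_pooled}, SE = {se_pooled:.5f}")
print(f"not assumed: t = {t_welch:.3f}, df = {df_welch:.3f}, SE = {se_welch:.5f}")
```

Because the two groups have almost the same variance (Levene's Sig. = .950), the two rows give practically identical results here.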

What to do if we want to statistically compare differences in three or more means?

Analysis of variance (ANOVA)

Analysis of Variance (ANOVA)

An ANOVA tells us whether there are any differences among the means, but not how (or which) means differ.

ANOVAs are similar to t-tests and in fact an ANOVA conducted to compare two means will give the same answer as a t-test.
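The link between the two tests can be illustrated with a small sketch: for two groups, the F-statistic of a one-way ANOVA equals the square of the equal-variance t-statistic. The data and function names below are hypothetical.

```python
from math import sqrt
from statistics import mean


def one_way_anova_f(groups):
    """F = between-groups mean square / within-groups mean square."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


def pooled_t(a, b):
    """Independent-means t-statistic with equal variances assumed."""
    ss = sum((x - mean(a)) ** 2 for x in a) + sum((x - mean(b)) ** 2 for x in b)
    pooled_var = ss / (len(a) + len(b) - 2)
    return (mean(a) - mean(b)) / sqrt(pooled_var * (1 / len(a) + 1 / len(b)))


# Two hypothetical groups of four cases each.
group_a = [4.0, 5.0, 6.0, 5.0]
group_b = [7.0, 8.0, 6.0, 9.0]

f_stat = one_way_anova_f([group_a, group_b])
t_stat = pooled_t(group_a, group_b)
print(f"F = {f_stat:.4f}, t squared = {t_stat ** 2:.4f}")
```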

Calculating an ANOVA

ANOVA formulas: calculating an ANOVA by hand is complicated and knowing the formulas is not necessary…

Instead, we will rely on SPSS to calculate ANOVAs…

Example of One-Way ANOVAs

Research question: Do mean child malnutrition (GAM) rates differ according to mother’s educational level (none, primary, or secondary/ higher)?

Report (WAZNEW)

| Mother's education level | Mean | N | Std. Deviation |
|---|---|---|---|
| No education | -1.3147 | 736 | 1.32604 |
| Primary | -1.0176 | 3247 | 1.21521 |
| Secondary | -.5525 | 907 | 1.25238 |
| Higher | -.1921 | 172 | 1.33764 |
| Total | -.9494 | 5062 | 1.27035 |

ANOVA (WAZNEW)

| | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between Groups | 354.567 | 3 | 118.189 | 76.507 | .000 |
| Within Groups | 7812.148 | 5057 | 1.545 | | |
| Total | 8166.715 | 5060 | | | |
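The Mean Square and F columns of the ANOVA table follow directly from the Sum of Squares and df columns. The short sketch below redoes that arithmetic with the WAZNEW figures from the output above; the variable names are illustrative.

```python
# Sum of Squares and df taken from the WAZNEW ANOVA output.
ss_between, df_between = 354.567, 3
ss_within, df_within = 7812.148, 5057

# Mean square = sum of squares / degrees of freedom.
ms_between = ss_between / df_between
ms_within = ss_within / df_within

# F = between-groups mean square / within-groups mean square.
f_stat = ms_between / ms_within

print(f"Mean Square (between) = {ms_between:.3f}")
print(f"Mean Square (within)  = {ms_within:.3f}")
print(f"F = {f_stat:.3f}")
```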

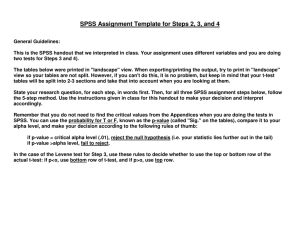

To calculate one-way ANOVAs in SPSS

In SPSS, one-way ANOVAs are run using the following steps:

1. Click on “Analyze” drop down menu

2. Click on “Compare Means”

3. Click on “One-Way ANOVA…”

4. Move the independent and dependent variable into proper boxes

5. Click “OK”

ANOVA: SPSS procedure

1. Analyze; Compare Means; One-Way ANOVA

2. Drag the independent and dependent variable into proper boxes

3. Ask for the descriptive statistics

4. Click on OK

ANOVA: SPSS output

Along with the mean for each group, ANOVA produces the F-statistic. It tells us if there are differences between the means. It does not tell us which means are different.

Look at the value of F and at the Sig. level

ANOVA (coping strategies index)

| | Sum of Squares | df | Mean Square | F | Sig. |
|---|---|---|---|---|---|
| Between Groups | 25600.110 | 10 | 2560.011 | 2.609 | .004 |
| Within Groups | 1116564 | 1138 | 981.163 | | |
| Total | 1142164 | 1148 | | | |

Determining where differences exist

In addition to determining that differences exist among the means, you may want to know which means differ.

One type of test for comparing means is the post hoc test:

Post hoc tests are run after the experiment has been conducted (if you don’t have a specific hypothesis).

ANOVA post hoc tests

Once you have determined that differences exist among the means, post hoc range tests and pairwise multiple comparisons can determine which means differ.

Tukey’s post hoc test is among the most popular and is adequate for our purposes, so we will focus on this test…

To calculate Tukey’s test in SPSS

In SPSS, Tukey’s post hoc tests are run using the following steps:

1. Click on “Analyze” drop down menu

2. Click on “Compare Means”

3. Click on “One-Way ANOVA…”

4. Move the independent and dependent variable into proper boxes

5. Click on “Post Hoc…”

6. Check box beside “Tukey”

7. Click “Continue”

8. Click “OK”

Determining where differences exist in SPSS

Once you have determined that differences exist among the means → you may want to know which means differ…

Different types of tests exist for pairwise multiple comparisons

Pairwise comparisons: SPSS output

Once you have decided which post hoc test is appropriate:

Look at the column “Mean Difference” to know the difference between each pair

Look at the column “Sig.”: if the value is less than .05, then the two means in that pair are significantly different

Multiple Comparisons

Dependent Variable: coping strategies index

Tukey HSD

| (I) asset wealth | (J) asset wealth | Mean Difference (I-J) | Std. Error | Sig. | 95% CI Lower Bound | 95% CI Upper Bound |
|---|---|---|---|---|---|---|
| asset poor | asset medium | 8.5403* | 1.6796 | .000 | 4.599 | 12.481 |
| asset poor | asset rich | 22.5906* | 2.7341 | .000 | 16.175 | 29.006 |
| asset medium | asset poor | -8.5403* | 1.6796 | .000 | -12.481 | -4.599 |
| asset medium | asset rich | 14.0503* | 2.5873 | .000 | 7.979 | 20.121 |
| asset rich | asset poor | -22.5906* | 2.7341 | .000 | -29.006 | -16.175 |
| asset rich | asset medium | -14.0503* | 2.5873 | .000 | -20.121 | -7.979 |

*. The mean difference is significant at the .05 level.
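Another way to read the Tukey output is through the confidence intervals: a pair of means differs significantly at the .05 level when its 95% confidence interval does not include zero. The sketch below applies that check to the values reported above. It does not recompute Tukey's test, and the names used are illustrative.

```python
# (I group, J group, mean difference I-J, CI lower bound, CI upper bound)
comparisons = [
    ("asset poor", "asset medium", 8.5403, 4.599, 12.481),
    ("asset poor", "asset rich", 22.5906, 16.175, 29.006),
    ("asset medium", "asset poor", -8.5403, -12.481, -4.599),
    ("asset medium", "asset rich", 14.0503, 7.979, 20.121),
    ("asset rich", "asset poor", -22.5906, -29.006, -16.175),
    ("asset rich", "asset medium", -14.0503, -20.121, -7.979),
]


def excludes_zero(lower, upper):
    """True when the whole interval lies on one side of zero."""
    return lower > 0 or upper < 0


significant_pairs = [
    (i, j) for i, j, diff, lower, upper in comparisons if excludes_zero(lower, upper)
]

for i, j, diff, lower, upper in comparisons:
    flag = "significant" if excludes_zero(lower, upper) else "not significant"
    print(f"{i} vs {j}: difference {diff:+.4f}, CI [{lower}, {upper}] -> {flag}")
```

Each pair appears twice in the table with the sign reversed, so the six rows describe only three distinct comparisons.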