Bias Essentials, Group 6 (Final)

advertisement

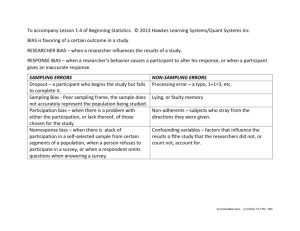

Essentials of Research Bias

I. How is ethics different from bias?

Two main points about research ethics:
1. Researchers have a responsibility to know and understand the codes of research ethics. No excuses.
2. Abiding by the codes of ethical research means that you, as the researcher, must not put the needs of your research above the well-being of the study participants (human or otherwise).

Tomal writes very little about research ethics. That is why we needed to read the articles and get the NIH certificates. A researcher can’t abide by the codes of ethics if he or she is unaware of what those codes contain. Researchers need to know about ethics before designing studies, not during or after them.

Two main points about research bias:
1. Bias is a product of the researcher, the participants, and/or the research design.
2. Bias makes the research study design, data, results, and conclusions suspect and likely invalid.

Tomal writes more about research bias, which he tackles in Chapter 3. This is an important chapter. Make sure you read it; I have read it a few times. For each type of data collection, there is some information about how bias could happen. For example, page 39 describes the many different kinds of bias that can occur when conducting observations. Educators need to be good observers, and being aware of the biases that affect observation is important in our work, whether or not formal action research is being conducted. It is simply part of being a professional.

Note that throughout these sections, data collection techniques and data collection instruments (tools) are mixed together. Techniques and instruments are two different things. For example, observation is a data collection technique, and a checklist is a data collection instrument used for observing.

II. Application

We will analyze our own research for bias.
The two research bias tables below, together with your textbook, will guide you in determining bias in your own research as we move into the next phase of this course: data collection methods in week 4. You will need to become familiar with them, since you will complete sections of the tables as you select techniques, design instruments, and collect data. Knowing the possible sources of bias is important for research design, procedures, analysis, understanding the limitations of the study, and more.

There are two tables. The task is to write a summary statement about the sources of bias in each one. A few sentences are all you will need, but make your sentences count! Suggestion: each group member becomes responsible for a few of the biases. Then each member makes a suggestion, and the others add to it or approve it, until you all agree on one final product. You might find Google Docs useful for this, so that everyone can work on the same document simultaneously, in real time.

BIAS IN DATA (INFORMATION) COLLECTION BY COLLECTION METHOD

BIAS: when data do not represent reality because of distortions in data collection methods or tools.

TEAM 6: Jodi Herold, Ronny Vargas, and Joy Sneff

Data collection techniques and the main elements that could contribute to bias:
Observation: observer; setting and conditions; observation instrument
Survey/Questionnaire: open-ended questionnaires; surveys (e.g., Likert scale); respondents

Summary statement of bias for each data collection technique

Observation
There are different forms of observation. Direct observation is when the researcher obtains firsthand information. Biases in this form include teachers and students acting differently when they know they are being observed. They also include an observer giving higher or lower ratings to all subjects even when real differences exist, giving only average scores, rating on the basis of a prejudice, or having a hard time rating at all because of lack of concentration or indecisiveness.
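When a second observer rates the same students, some of the rating errors described above can be checked roughly. These are rating everyone higher or lower (leniency or severity) and giving only average scores (central tendency). The Python sketch below illustrates this under our own assumptions. Ratings are on a 1 to 5 scale, and the second observer serves as the reference. The function name and both thresholds are hypothetical values, not figures from Tomal (2010). The check cannot detect rating on the basis of a prejudice against particular students.

```python
from statistics import mean, pstdev

# Hypothetical thresholds, chosen only for illustration (not from Tomal, 2010).
MEAN_SHIFT_LIMIT = 0.5    # rating points on an assumed 1-5 scale
SPREAD_RATIO_LIMIT = 0.6  # observer's spread as a fraction of the reference spread


def flag_rating_errors(observer, reference):
    """Compare one observer's ratings with a reference observer's ratings of
    the same students (same order) and return a list of suspected errors."""
    if len(observer) != len(reference) or len(observer) < 2:
        raise ValueError("need at least two paired ratings")
    flags = []
    shift = mean(observer) - mean(reference)
    if shift > MEAN_SHIFT_LIMIT:
        flags.append("leniency")  # rates everyone higher
    elif shift < -MEAN_SHIFT_LIMIT:
        flags.append("severity")  # rates everyone lower
    ref_spread = pstdev(reference)
    if ref_spread > 0 and pstdev(observer) / ref_spread < SPREAD_RATIO_LIMIT:
        flags.append("central tendency")  # ratings bunched around the middle
    return flags


reference = [1, 2, 3, 4, 5, 2, 4]
print(flag_rating_errors([3, 3, 3, 4, 3, 3, 3], reference))  # ['central tendency']
print(flag_rating_errors([2, 3, 4, 5, 5, 3, 5], reference))  # ['leniency']
```

A lenient or severe observer shifts every score, which changes the mean. An observer who gives only average scores compresses the scores toward the middle, which shows up as a much smaller standard deviation than the reference observer's.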
Taking field notes is another form of direct observation, used to record anything and everything. Checklists help researchers make timely and accurate observations. A bias in this form is that it is difficult to observe every behavior. The observer must therefore focus on what is listed on the checklist, and behaviors that are not listed may go unrecorded. Journals are a method of recording behaviors, feelings, and incidents, and they help obtain more detailed information.

Survey/Questionnaire
According to Tomal (2010), conducting a survey is, without a doubt, one of the most effective techniques for data collection in action research. Many different formats are used, but they all serve the same purpose: to ask direct questions to get information. Open-ended questionnaires (the instrument) allow respondents to give honest and candid responses in narrative form. They are structured so that the respondent must respond with one or more statements, although they can be ineffective and time-consuming. Example: Please describe the things you like best about …

Surveys using a Likert scale are very common and fall under the topic of designing the questionnaire. The Likert scale is a closed-ended format that lets the respondent select or rate a value for each question on a five-point scale. For younger respondents or respondents with limited comprehension, a facial pictorial scale could be a popular and easy option.

Respondents to surveys and questionnaires can be fickle at best. Tomal (2010) places much emphasis on stage five of this area: the cover letter. The cover letter can be the make-or-break component for respondents. If it is not written correctly, it will not accomplish its objective.

Interviewing (elements: protocol (interview questions); interviewer; interviewee)
Interviewing consists of asking questions of an individual or group to obtain their verbal responses. Tomal (2010, fig. 3.9) outlines the protocol for this process in a basic ten-step format.
The first step is to prepare the questions, and tailoring them to the information the researcher wishes to obtain is key. Step ten is thanking the interviewee for participating. One suggestion is that researchers should always give an estimated completion date for the study, so that participants do not develop unrealistic expectations.

Interviewers are most effective when they can quickly establish rapport with the interviewee to obtain relevant information. It is suggested that interviewers use personality styles/theories to communicate better with their interviewees. Chapter 3 presents four styles that grew out of the work of the Swiss psychologist Carl Gustav Jung (Tomal, 1999, 2010):
Intuitor: theoretical and abstract.
Feeler: values feelings and emotions.
Thinker: objective, logical, and analytical.
Doer: practical and results oriented.

Interviewees who exhibit these different styles can make the interviewing process quite challenging. Interviewees can also exhibit, or resort to, defense mechanisms. These are psychological crutches that people use to avoid experiencing negative feelings (Tomal, 2010). Possible defense mechanisms include denial, projection, reaction formation, fantasy and idealization, avoidance, aggressive behavior, and displacement. As I read this list, I recognized many of these defense mechanisms from my daily teaching, in students of all ages. They are learned behaviors that start at a very early age.

Assessments (such as tests, portfolios, performance assessments; elements: reliability, validity)
Assessing involves evaluating individuals’ work by examining tests, portfolios, and records, and through the direct observation of individual and group skills and behaviors (Tomal, 2010).
Testing is one of the major forms of assessment for which validity and reliability are a concern. The term validity refers to the question, “Does the test measure what it is supposed to measure?” Reliability refers to “the ability of the test to accurately measure consistently over time” (Tomal, 2010).
Validity – There are several types of validity: content, criterion, and construct validity.
Content validity – This is the ability of the test to measure the subject matter (content) it is meant to cover.
Criterion validity – This type of validity refers to the extent to which the test’s scores correlate with those of another measure (the criterion), such as a second test.
Construct validity – This type of validity refers to the ability of a test to actually assess the concept (construct) it claims to measure.
Reliability – Test reliability is usually established through a coefficient. A high coefficient indicates that the test is highly reliable, and a low coefficient indicates that it has low reliability. Reliability does not, however, establish validity.

BIAS IN DATA (INFORMATION) COLLECTION BY TYPE OF BIAS

Research bias can also be classified by type. Sometimes research bias is grouped into three larger categories: sample (selection), measurement, and intervention. Other times those larger categories are subdivided into smaller ones, as in the table below, which gives a summary statement for each type of bias.

Sample
Sampling is selecting people from a specific population. There are six different kinds of sampling techniques. Random sampling is just what it sounds like: every member of the population has an equal chance of being selected. Stratified sampling divides the population into subgroups and selects from each subgroup according to a set percentage. For example, suppose a researcher wants 50 subjects, 60% of them ESL students and 40% English-only speakers. S/he would select 30 ESL students and 20 English-only students. Cluster sampling is choosing groups (e.g., classrooms) rather than individuals. Systematic sampling is selecting people at a preset interval from a list (e.g., every fifth person). Convenience sampling is using subjects who are conveniently accessible (e.g., classrooms).
Finally, purposeful sampling is the technique most commonly used in action research: the researcher chooses the students s/he wants to help improve.

Measurement
There are two main areas of measurement: measures of central tendency and measures of variability. It is important for the researcher to use the most appropriate measure of central tendency when analyzing and interpreting data. The researcher must also remember that sometimes no measure will adequately describe a set of data (Tomal, 2010). Measures of variability, such as the range and standard deviation, describe how spread out the observations are, turning those observations into descriptive data. In any case, the researcher needs to keep in mind that common sense and inductive reasoning are extremely important in analyzing and interpreting data.

Research Design
Researchers need a basic understanding of tests of significance when conducting research. This understanding also helps them interpret scholarly research literature. The researcher needs to understand the concepts behind tests of significance in order to decide whether to accept or reject a hypothesis. He/she needs to know these basic concepts to avoid two kinds of error. A Type I error is rejecting a null hypothesis that is actually true. A Type II error is failing to reject a null hypothesis that is actually false.

Procedural
Procedural bias is a real concern. The entire action research process could be contaminated from the very start if it is not performed with the utmost confidentiality and good faith. Early unethical studies such as the Tuskegee Syphilis Experiment, the Rakai AIDS Study, and the Monster Study show what can go wrong. Procedural breakdown or outright deception can lead to devastating results in the areas of respect for persons, beneficence, and justice. Bias can also result from researchers selecting subjects who are more likely to generate the desired results.

Interviewer
The interviewer and the interviewee may have different thinking and communication styles, so the interviewer may view the interviewee in a particular way.
The interviewee may lie to avoid embarrassment or to deny feelings or behaviors. The interviewer then has to determine whether the information is valid and decide whether to use it. The interviewer may also misinterpret the interviewee’s body language, or guide the conversation to receive a particular response.

Response
There is some evidence that requiring a person to complete a questionnaire can cause him/her to construct an attitude where none existed before. The data to be collected, and how, where, and when they are collected, can be manipulated to the point of changing or limiting the study. A pilot test is strongly suggested to limit errors in any of these areas, so that they do not compromise the researcher’s actual study.

Reporting
Research is usually reported in a narrative report that contains statistics, charts, diagrams, and tables. The length of the report depends solely on the purpose of the study, and the report should be organized and succinct. Bias in the report can surface easily and may do the most damage. If the results are positive and live up to the expectations of the study, the researcher might write the document with more accuracy and feeling. The opposite could also happen if the results are not what the researcher(s) had hoped for. The statistics and other parts of the report can be crafted to present an illusion to an untrained eye with optimistic expectations. I’m certain that even experts have at some point been convinced that the truth lies within the numbers, when perhaps the numbers are not the truth at all.
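Returning to the Sample row, the procedure-based sampling techniques it describes (random, stratified, cluster, and systematic) can be sketched in Python. This is a minimal illustration under our own assumptions. The function names, the hypothetical roster of 80 ESL and 80 English-only students, and the fixed random seed are made up for the example. Convenience and purposeful sampling are left out because they rely on the researcher's access and judgment rather than on a procedure. The stratified call reproduces the 60% / 40% split of 50 subjects from that row.

```python
import random


def simple_random_sample(population, n, rng):
    """Random sampling: every member has an equal chance of being chosen."""
    return rng.sample(population, n)


def stratified_sample(strata, percentages, n, rng):
    """Stratified sampling: select from each subgroup (stratum) by a set percentage.

    strata maps a subgroup name to its members; percentages maps the same
    names to whole-number percents that must add up to 100.
    """
    if sum(percentages.values()) != 100:
        raise ValueError("percentages must add up to 100")
    # Integer division is exact for 60% and 40% of 50; other combinations
    # may leave a remainder that the researcher has to assign by hand.
    return {name: rng.sample(members, n * percentages[name] // 100)
            for name, members in strata.items()}


def cluster_sample(clusters, k, rng):
    """Cluster sampling: choose k whole groups (e.g., classrooms), not individuals."""
    chosen = rng.sample(sorted(clusters), k)
    return {name: clusters[name] for name in chosen}


def systematic_sample(population, interval, rng):
    """Systematic sampling: every `interval`-th person from a random starting point."""
    start = rng.randrange(interval)
    return population[start::interval]


# Hypothetical roster: 80 ESL and 80 English-only students.
rng = random.Random(6)  # fixed seed so the example repeats
esl = [f"ESL-{i}" for i in range(1, 81)]
english_only = [f"EO-{i}" for i in range(1, 81)]
picked = stratified_sample({"ESL": esl, "English only": english_only},
                           {"ESL": 60, "English only": 40}, 50, rng)
print({name: len(group) for name, group in picked.items()})
# {'ESL': 30, 'English only': 20}

roster = [f"student-{i}" for i in range(1, 26)]
print(len(systematic_sample(roster, 5, rng)))  # 5 (every fifth of 25 students)
```

Passing a seeded `random.Random` object into each function keeps a draw repeatable, which makes it easier to document exactly how a sample was chosen.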