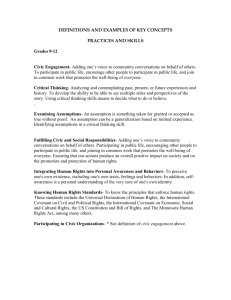

Using Trees to Depict a Forest
Bin Liu, H. V. Jagadish
EECS, University of Michigan, Ann Arbor
Presented by Sergey Shepshelvich

Motivation
- In interactive database querying, we often get more results than we can comprehend immediately
- When do you actually click past 2-3 pages of results?
  - 85% of users never go to the second page!
- What to display on the first page?

Standard solutions
- Sorting by attributes
  - Computationally expensive
  - Similar results can be distributed many pages apart
- Ranking
  - Hard to estimate the user's preference
  - In database queries, all tuples are equally relevant!
- What to do when there are millions of results?

Make the First Page Count
- Human beings are very capable of learning from examples
- Show the most "representative" results
  - Best help users learn what is in the result set
  - User can decide further actions based on representatives

The Proposal: MusiqLens Experience
(Model-driven Usable Systems for Information Querying)

Suppose a user wants a 2005 Civic but there are too many of them…

MusiqLens on the Car Data

| Id | Model | Price | Year | Mileage | Condition | |
|---|---|---|---|---|---|---|
| 872 | Civic | $12,000 | 2005 | 50,000 | Good | 122 more like this |
| 901 | Civic | $16,000 | 2005 | 40,000 | Excellent | 345 more like this |
| 725 | Civic | $18,500 | 2005 | 30,000 | Excellent | 86 more like this |
| 423 | Civic | $17,000 | 2005 | 42,000 | Good | 201 more like this |
| 132 | Civic | $9,500 | 2005 | 86,000 | Fair | 185 more like this |
| 322 | Civic | $14,000 | 2005 | 73,000 | Good | 55 more like this |

After Zooming in: 2005 Honda Civics ~ ID 132

| Id | Model | Price | Year | Mileage | Condition | |
|---|---|---|---|---|---|---|
| 342 | Civic | $9,800 | 2005 | 72,000 | Good | 25 more like this |
| 768 | Civic | $10,000 | 2005 | 60,000 | Good | 10 more like this |
| 132 | Civic | $9,500 | 2005 | 86,000 | Fair | 63 more like this |
| 122 | Civic | $9,500 | 2005 | 76,000 | Good | 5 more like this |
| 123 | Civic | $9,100 | 2005 | 81,000 | Fair | 40 more like this |
| 898 | Civic | $9,000 | 2005 | 69,000 | Fair | 42 more like this |

After Filtering by "Price < 9,500"

| Id | Model | Price | Year | Mileage | Condition | |
|---|---|---|---|---|---|---|
| 123 | Civic | $9,100 | 2005 | 81,000 | Fair | 40 more like this |
| 898 | Civic | $9,000 | 2005 | 69,000 | Fair | 42 more like this |
| 133 | Civic | $9,300 | 2005 | 87,000 | Fair | 33 more like this |
| 126 | Civic | $9,200 | 2005 | 89,000 | Good | 3 more like this |
| 129 | Civic | $8,900 | 2005 | 81,000 | Fair | 20 more like this |
| 999 | Civic | $9,000 | 2005 | 87,000 | Fair | 12 more like this |

Challenges
- Representation modeling: finding a suitable metric
  - What is the best set of representatives?
- Representative finding
  - How to find them efficiently?
- Query refinement
  - How to efficiently adapt to user's query operations?

Finding a Suitable Metric
- Users should be the ultimate judge
  - Which metric generates the representatives that I can learn the most from?
- User study to evaluate different representation modeling

Metric Candidates
- Sort by attributes
- Uniform random sampling
  - Small clusters are missed
- Density-biased sampling
  - Sample more from sparse regions, less from dense regions
- Sort by typicality
  - Based on probabilistic modeling
- K-medoids

Metric Candidates - K-medoids
- A medoid of a cluster is the object whose dissimilarity to others is smallest
  - Average medoid and max medoid
- K-medoids are k objects, each from a different cluster where the object is the medoid
- Why not K-means?
  - K-means cluster centers do not exist in database
  - We must present real objects to users

Plotting the Candidates
- Data: Yahoo! Autos, 3922 data points.
- Price and mileage are normalized to the range 0..1

[Figure: two scatter plots, "Random" and "Density Biased"; both axes run from 0 to 1]

Plotting the Candidates - Typicality

[Figure: scatter plot, "Typical"; both axes run from 0 to 1]

Plotting the Candidates - k-medoids

[Figure: two scatter plots, "Max-Medoids" and "Avg-Medoids"; both axes run from 0 to 1]

User Study Procedure
- Users are given 7 sets of data, generated using the 7 candidate methods
  - Each set consists of 8 representative points
- Users predict 4 more data points
  - That are most likely in the data set
  - Should not pick those already given
- Measure the prediction error

Verdict
- K-medoids is the winner
- In this paper, the authors choose average k-medoids
- The proposed algorithm can be extended to max-medoids with small changes

Cover Tree Based Algorithm
- Cover Tree was proposed by Beygelzimer, Kakade, and Langford in 2006
- Briefly discuss Cover Tree properties
- See Cover Tree based algorithms for computing k-medoids

Cover Tree Properties (1)
- Nesting: for all $i$, $C_i \subset C_{i+1}$

[Figure: levels $C_i$ and $C_{i+1}$ drawn over the points in the data (one dimension)]

Cover Tree Properties (2)
- Covering: a node in $C_i$ is within distance $1/2^i$ of its children in $C_{i+1}$
- The distance from a node to any descendant is less than $1/2^{i-1}$. This value is called the "span" of the node.

Cover Tree Properties (3)
- Separation: nodes in $C_i$ are separated by at least $1/2^i$
- Note: $i$ is allowed to be negative to satisfy the above conditions.
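
The three cover tree properties (nesting, covering, separation) can be checked mechanically. The following is a minimal sketch, not the authors' implementation. It assumes one-dimensional points and represents the tree implicitly as a hypothetical `levels` dictionary mapping each level index $i$ to the set $C_i$; the function name `check_cover_tree_levels` is also illustrative. Covering is tested as "some point of $C_i$ lies within $1/2^i$", which is the condition a parent link would have to satisfy.

```python
from itertools import combinations


def check_cover_tree_levels(levels):
    """Return a list of invariant violations for 1-D cover tree levels.

    levels: dict mapping level index i -> set of points in C_i (assumed layout).
    """
    violations = []
    for i in sorted(levels):
        radius = 1.0 / 2 ** i  # 1/2^i; also works for negative i
        c_i = levels[i]

        # Separation: distinct nodes in C_i are at least 1/2^i apart.
        for p, q in combinations(sorted(c_i), 2):
            if abs(p - q) < radius:
                violations.append(("separation", i, p, q))

        if i + 1 in levels:
            c_next = levels[i + 1]
            # Nesting: every node of C_i also appears in C_{i+1}.
            if not c_i <= c_next:
                violations.append(("nesting", i))
            # Covering: every node of C_{i+1} is within 1/2^i of some node in C_i.
            for q in sorted(c_next):
                if all(abs(p - q) > radius for p in c_i):
                    violations.append(("covering", i, q))
    return violations


# Hypothetical example: five points on [0, 1] arranged into three levels.
levels = {
    0: {0.5},
    1: {0.0, 0.5, 1.0},
    2: {0.0, 0.25, 0.5, 0.75, 1.0},
}
print(check_cover_tree_levels(levels))  # prints []
```

An empty list means all three properties hold for the given levels; each violation tuple names the property and the level at which it fails.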

Additional Stats for Cover Tree (2D Example)

[Figure: 2D points s1 to s10 and the cover tree built over them, with subtrees annotated DS = 10 and DS = 3]

- Density (DS): number of points in the subtree
- Centroid (CT): geometric center of points in the subtree

k-medoid Algorithm Outline
- Descend the cover tree to a level with more than $k$ nodes
- Choose an initial $k$ points as the first set of medoids (seeds)
  - Bad seeds can lead to local minima with a high distance cost
- Assign nodes and repeatedly update until the medoids converge

Cover Tree Based Seeding
- Descend the cover tree to a level with more than $k$ nodes (denote as level $m$)
- Use the parent level $m - 1$ as starting point for seeds
  - Each node has a weight, calculated as the product of span and density (the contribution of the subtree to the distance cost)
  - Expand nodes using a priority queue
  - Fetch the first $k$ nodes from the queue as seeds

A Simple Example: k = 4

[Figure: cover tree over s1 to s10 with levels of span 2, 1, 1/2, and 1/4; the final set of seeds is labeled]

- Priority queue on node weight (density * span):
  - S3 (5), S8 (3), S5 (2)
  - S8 (3/2), S5 (1), S3 (1), S7 (1), S2 (1/2)

Update Process
1. Initially, assign all nodes to the closest seed to form $k$ clusters
2. For each cluster, calculate the geometric center
   - Use centroid and density information to approximate the subtree
3. Find the node that is closest to the geometric center and designate it as a new medoid
4. Repeat from step 1 until the medoids converge
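
The four steps of the update process can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: each node is a hypothetical dictionary with `point`, `centroid`, and `density` keys, `update_medoids` is an illustrative name, distances are Euclidean, and node points are assumed distinct so that no cluster ends up empty. The geometric center of a cluster is approximated as the density-weighted mean of the member nodes' centroids.

```python
import math


def update_medoids(nodes, seeds, max_iter=100):
    """Refine seed medoids; returns a list of indices into `nodes`."""
    medoids = list(seeds)
    for _ in range(max_iter):
        # Step 1: assign every node to its closest current medoid.
        clusters = {m: [] for m in medoids}
        for idx, node in enumerate(nodes):
            closest = min(
                medoids,
                key=lambda m: math.dist(node["point"], nodes[m]["point"]),
            )
            clusters[closest].append(idx)

        new_medoids = []
        for m in medoids:
            members = clusters[m]
            # Step 2: geometric center, approximated from each member's
            # subtree centroid weighted by its density.
            total = sum(nodes[i]["density"] for i in members)
            dims = len(nodes[m]["point"])
            center = tuple(
                sum(nodes[i]["centroid"][d] * nodes[i]["density"] for i in members)
                / total
                for d in range(dims)
            )
            # Step 3: the member closest to the center becomes the new medoid.
            new_medoids.append(
                min(members, key=lambda i: math.dist(nodes[i]["point"], center))
            )

        # Step 4: stop once the medoids no longer change.
        if new_medoids == medoids:
            break
        medoids = new_medoids
    return medoids


# Hypothetical data: two groups of leaf nodes on a line, each with density 1.
xs = [0, 1, 2, 10, 11, 12]
nodes = [{"point": (x, 0), "centroid": (x, 0), "density": 1} for x in xs]
print(update_medoids(nodes, seeds=[0, 3]))  # prints [1, 4]
```

Starting from the poor seeds at the left edge of each group, one update moves each medoid to the middle node of its group, and the second pass confirms convergence.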

Query Adaptation
- Handle user actions
  - Zooming
  - Selection (filtering)

Zooming
- Expand all nodes assigned to the medoid
- Run the k-medoid algorithm on the new set of nodes

Effect of selection on a node
- Completely invalid
- Fully valid
- Partially valid
- Estimate the validity percentage (VG) of each node
- Multiply the VG with the weight of each node

[Figure: selection region A in the Price-Mileage plane, drawn over nodes S1 to S7]

Experiments - Initial Medoid Quality
- Compare with R-tree based method by M. Ester, H. Kriegel, and X. Xu
- Data sets
  - Synthetic dataset: 2D points with Zipf distribution
  - Real dataset: LA data set from R-tree Portal, 130k points
- Measurement
  - Time to compute the medoids
  - Average distance from a data point to its medoid

Results on Synthetic Data

[Figure: two plots, Distance and Time (seconds) versus Cardinality (256K to 4096K), comparing R-tree and Cover Tree]

- For various sizes of data, the cover-tree based method outperforms the R-tree based method

Results on Real Data

[Figure: two plots, Distance and Time (seconds) versus k (2 to 512), comparing R-tree and Cover Tree]

- For various k values, the cover-tree based method outperforms the R-tree based method on real data

Query Adaptation
- Compare with re-building the cover tree and running the k-medoid algorithm from scratch.

[Figure: two plots, "Synthetic Data" and "Real Data", Distance versus Selectivity (0.8 to 0.2), comparing Re-Compute and Incremental]

- Time cost of re-building is orders of magnitude higher than incremental computation.
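
The experiments report the average distance from a data point to its medoid as the quality measure. A minimal sketch of that measure follows, assuming Euclidean distance and that each point is charged to its nearest medoid; both are assumptions, and the function name is illustrative rather than taken from the paper.

```python
import math


def average_distance_to_medoid(points, medoids):
    """Mean Euclidean distance from each point to its nearest medoid."""
    if not points or not medoids:
        raise ValueError("points and medoids must be non-empty")
    total = sum(min(math.dist(p, m) for m in medoids) for p in points)
    return total / len(points)


# Hypothetical data: six points on a line, two medoids.
points = [(0, 0), (1, 0), (2, 0), (10, 0), (11, 0), (12, 0)]
medoids = [(1, 0), (11, 0)]
print(average_distance_to_medoid(points, medoids))
```

In this example four of the six points are at distance 1 from their medoid and the two medoids are at distance 0, so the value is 4/6. Lower values mean the chosen representatives sit closer to the data they stand for.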

Conclusion
- Authors proposed the MusiqLens framework for solving the many-answer problem
- Authors conducted a user study to select a metric for choosing representatives
- Authors proposed an efficient method for computing and maintaining the representatives under user actions
- Part of the database usability project at Univ. of Michigan
  - Led by Prof. H.V. Jagadish
  - http://www.eecs.umich.edu/db/usable/