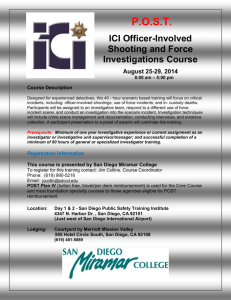

advertisement

Data Grid Management Systems (DGMS)
Arun Swaran Jagatheesan
San Diego Supercomputer Center, University of California, San Diego
Tutorial at the Fourth IEEE International Conference on Data Mining, Brighton, UK, November 01 - 04, 2004
San Diego Supercomputer Center · University of Florida · Grid Physics Network (GriPhyN)

Dynamic Calibration of Content
• Academic researchers and students ( )
• Business analysts & Office of the CTO ( )
• Software architects ( )
• Software developers ( )
• Savvy users ( )
Just to make sure all of us get the most out of this tutorial when we leave this room.

University of Florida · San Diego Supercomputer Center · ICDM 2004

Some Questions
• Is there a problem worth my (student’s) Ph.D. thesis?
• What is a data grid?
• Can data grid technologies help an IT department save cost?
• What are the concepts I should know to design a data grid product?
• What are the undocumented tricks of the trade that worked?
• Are there people using these, or is it still in research labs?
• Been hearing about it a lot. Can I see something for real?

Tutorial Outline
• Introduction to Data Grids
• Data Grid Design Philosophies
• SDSC Storage Resource Broker
• Gridflows
• DGMS Related Topics
• Q & A Session
• Hands-on/Demo Session

Tutorial Outline
• Introduction to Data Grids
  o The “Grid” Vision
  o Hype/Reality
  o Where: Data Grid Infrastructures in production
  o Why: Data Grids
• Data Grid Design Philosophies
• SDSC Storage Resource Broker
• Gridflows
• DGMS Related Topics
• Q & A Session
• Hands-on/Demo Session

We All Know the Story
• Information is growing …, information explosion
• In 2002, 5 exabytes of data were produced by mankind (the equivalent of all words ever spoken by human beings)
• In 2006, 62 billion e-mails in 800~1335 PB
• Mostly files, e-mails, multimedia
Source: “How Much Information?”, SIMS, UC Berkeley

Enterprise Data Storage
• Distributed in multiple locations and resources
  • Geographically or administratively distributed
• Multiple “autonomous administrative domains”
  • Independent control over operations
  • Business driven: collaborations, acquisitions, etc.
• Heterogeneous storage and data handling systems
  • File systems, databases, archives, e-mail/web servers…
Can we have a single logical namespace for data storage?
Can enterprises reduce TCO by treating data storage as a single utility or infrastructure?

Distributed Computing
© Images courtesy of Computer History Museum

The “Grid” Vision

Data Grids – Hype / Reality?
• Forrester Research
  • “Data and infrastructure are top of mind for grid at more than 50 percent of firms … the vision of data grids will become part of a greater vision of storage virtualization and information life cycle management” (May 2004)
• CIO magazine
  • “While most people think of computational grids, enterprises are looking into data grids” (May 2004)
• Why talk about Busine$$ in an IEEE conference?
  • Necessity drives business; business drives standards and technology evolution; …; grid is not just technology alone, but also standards evolution (which requires business participation)

Media Perspective
• CNN: The world's biggest brain: distributed [grid] computing
• BBC: Grid virtualises processing and storage resources and lets people use, or rent, the capacity they need for particular tasks
• Lots more

Visionaries’ Perspective
• Computing and storage
  • Shared amongst autonomous organizations [using a grid-enabled cyberinfrastructure]
  • As a utility, like commodities, used within inter/intra-organizational collaborations
• Government agencies and policy makers have subscribed to this and don’t want their country to be left behind

Academia Perspective
• “Same questions, different answers”
  • Parallel distributed computing (with multiple organizations)
  • Autonomous administrative domains
  • Heterogeneous infrastructure
  • Hide heterogeneity using a logical resource namespace
• Change in computing models to take advantage of grid?
  • Large bandwidth, large storage space, large computing power everywhere: does the “large” affect the models/algorithms?
• Old wine in a new bottle
  • It’s a solved problem. Nothing new
• “Greed Computing”: use it to get funding

User / Vendor Perspective
• Vendor
  • Yes, it's a brand-new paradigm.
  • We were ready for this a long time back. In fact, our product X had all the concepts. Do you want to test drive our product and give feedback?
• Users
  • Our resources (human, computer) are distributed worldwide
  • Collaborations that can span multiple resources from autonomous administrations of the same company
  • Reducing the Total Cost of Operation (TCO); flexibility to create or use logical resource pools
  • Automobile industry, biotech, electronics, … (mostly distributed teams with multiple locations and very large data/computing needs)

Reality?
• Navigating the hype wave
  • Touting immediate soft benefits
  • Just an application (“SETI@home”, …)
• Need for standards
  • Standards that can facilitate new algorithms that can take advantage of heterogeneous infrastructure
  • No use without standards on which interoperable products are developed
• How long is the wait?
  • Will grid computing deliver? How soon? Or is it just a hype that will fade away?
  • Some quite promising technologies are already available

Autonomous Administrative Domain
• A grid entity that:
  • Manages one or more grid resources
  • Can make its own policies
  • Might abide by a superior or global policy
  • Can act as a resource provider or requestor or both
• Examples:
  • A department or research lab in a university
  • An HR or finance department of a company (sub-organization)
  • Or simply a single computational or storage resource that manages itself, governed by some policies
• A grid/enterprise contains one or more autonomous administrative domains with distributed heterogeneous resources

Data Grid Resources
• Context (Information)
  • Information about digital entities (location, size, owners, …)
  • Relationships between digital entities (replicas, collections, …)
  • Behavior of the digital entities (services)
• Content (Data)
  • Structured and unstructured
  • Virtual or derived
• Commodity (Producers and consumers)
  • Storage resources
  • Also providers, brokers and requestors

Very Large Scale Data Storage
[Figure, built up over four slides: Grid Resource Providers (GRP) providing content and/or storage; an Autonomous Administrative Domain (e.g., a Research Lab) with one or more GRPs; three domains pooling data + storage, namely Research Lab (10), Storage-R-Us Resource Providers (50) and Finance Department (40), holding files such as /…/text1.txt, /…//text2.txt and /txt3.txt; finally one logical namespace over all of them, data + storage (100), in which the files appear as /home/arun.sdsc/exp1/text1.txt, /home/arun.sdsc/exp1/text2.txt and /home/arun.sdsc/exp1/text3.txt. The logical namespace need not be the same as the physical view of resources.]

Tutorial Outline
• Introduction to Data Grids
  o The “Grid” Vision
  o Hype/Reality
  o Where: Data Grid Infrastructures in production
  o Why: Data Grids
• Data Grid Design Philosophies
• SDSC Storage Resource Broker
• Gridflows
• DGMS Related Topics
• Q & A Session
• Hands-on/Demo Session

DGMS Technology Usage
• NSF Southern California Earthquake Center digital library
• Worldwide Universities Network data grid
• NASA Information Power Grid
• NASA Goddard Data Management System data grid
• DOE BaBar High Energy Physics data grid
• NSF National Virtual Observatory data grid
• NSF ROADnet real-time sensor collection data grid
• NIH Biomedical Informatics Research Network data grid
• NARA research prototype persistent archive
• NSF National Science Digital Library persistent archive
• NHPRC Persistent Archive Testbed

Southern California Earthquake Center

Southern California Earthquake Center
• Build a community digital library
  • Manage simulation and observational data
    • 60 TB, several million files
  • Provide a web-based interface
  • Support standard services on the digital library
• Manage data distributed across multiple sites
  • USC, SDSC, UCSB, SDSU, SIO
• Provide standard metadata
  • Community-based descriptive metadata
  • Administrative metadata
  • Application-specific metadata

SCEC Data Management Technologies
• Portals
  • Knowledge interface to the library, presenting a coherent view of the services
• Knowledge management systems
  • Organize relationships between SCEC concepts and semantic labels
• Process management systems
  • Data processing pipelines to create derived data products
• Web services
  • Uniform capabilities provided across SCEC collections
• Data grid
  • Management of collections of distributed data
• Computational grid
  • Access to distributed compute resources
• Persistent archive
  • Management of technology evolution

NASA Data Grids
• NASA Information Power Grid
  • NASA Ames, NASA Goddard
  • Distributed data collection using the SRB
• ESIP federation
  • Led by Joseph JaJa (U Md)
  • Federation of ESIP data resources using the SRB
• NASA Goddard Data Management System
  • Storage repository virtualization (Unix file system, UniTree archive, DMF archive) using the SRB
• NASA EOS Petabyte store
  • Storage repository virtualization for EMC persistent store using the Nirvana version of SRB

NIH BIRN SRB Data Grid
• Biomedical Informatics Research Network
  • Access and analyze biomedical image data
  • Data resources distributed throughout the country
  • Medical schools and research centers across the US
• Stable high-performance grid-based environment
  • Coordinate data sharing
  • Federate collections
  • Support data mining and analysis

BIRN: Inter-organizational Data

SRB Collections at SDSC
[Table: data size (GB), file count and number of users per SRB collection at SDSC, in snapshots dated between 12/22/2000 and 9/3/2004, grouped under Data Grid, Digital Library and Persistent Archive. Collections (funding agency; content): NPACI (NSF/PACI; NPACI users), Digsky (NSF/ITR; 2MASS, DPOSS, NVO), DigEmbryo (NLM; Visible Embryo), HyperLter (NSF/NPACI (ESS); hyperspectral images), Hayden (AMNH/Hayden; planetarium fly-through), Portal (NSF/NPACI; Grid Portal), SLAC (NSF/NPACI (Alpha); protein crystallography), LDAS/SALK (land and neuro), BIRN (NIH (NCRR); biomedical informatics), AfCS (NIH; cell signalling images/docs), SCEC (NSF/ITR; Southern California Earthquake Center), TeraGrid (NSF), NSDL/SIO Exp (NSF/NSDL; SIO Explorer documents), TRA (classroom videos), NSDL/CI (NSF/NSDL; K-12 curriculum web sites), NARA/Collection (NARA; archival documents), UCSDLib (UCSD). Reported totals include 168,380 GB in 38,962,966 files (4,569 users), 208,172 GB in 42,494,419 files (4,671 users), 223,132 GB in 44,859,111 files, and 256,575 GB in 49,454,310 files.]
** Does not cover data brokered by SRB spaces administered outside SDSC.
Does not cover databases; covers only files stored in file systems and archival storage systems.
Does not cover shadow-linked directories.

Tutorial Outline
• Introduction to Data Grids
  o The “Grid” Vision
  o Hype/Reality
  o Where: Data Grid Infrastructures in production
  o Why: Data Grids
• Data Grid Design Philosophies
• SDSC Storage Resource Broker
• Gridflows
• DGMS Related Topics
• Q & A Session
• Hands-on/Demo Session

Why They Require Data Grids
• Inter/intra-organizational sharing
• Inter/intra-organizational data storage utility
• Data storage resource plug-n-play provisioning
• Data preservation (technology migration)
• Information Lifecycle Management (ILM)

Inter/intra Organizational Sharing
[Figure: Research Lab 1: “You can use our storage for this project”; Research Lab 2: “We have relevant data for this project”; both contribute GRPs holding files such as /txt3.txt.]

Inter/intra Organizational Sharing
• Sharing of resources between autonomous domains
  • Within the same organization (intra) or across different organizations (inter)
• Shared resources
  • Data, storage, IT staff
• Logical namespace for collaboration
  • Shared data and physical resources available in the logical namespace for usage
• Inter-organizational digital libraries, personal digital libraries

Inter/intra Organizational Utility
[Figure, two build slides: a Data Center, West Coast Offices and an East Coast Office, each with GRPs, pooled into one logical utility; files such as /…/text1.txt and /txt3.txt are shared across sites. Caption on the second build: “That was so easy in slide show”.]

Inter/intra Organizational Utility
• Create a logical data storage utility
  • Virtualization of enterprise resources (data and storage)
• Manage resource usage based on demand and supply
  • Distribute the quantity and QoS of resources in an enterprise based on project demands, priorities and usage
• Saves a lot in TCO
  • Total Cost of Operation: managing logically unified distributed resources without losing flexibility and local autonomy

Data Storage Resource Plug-n-play
• Plug-n-play provisioning
  • Add a storage resource to the grid without major reconfiguration
• Logical resources
  • Logical namespace of all the resources, to which resources can be added (or removed gracefully) without affecting the applications
• Update resources based on demand and supply
  • Add resources to the storage pool from another inter/intra-organizational partner (another department or data center)

Data Preservation (Technology Migration)
• Facilitate “technology migration”
  • Flexible enterprise data architecture for technology evolution
• Update seamlessly to new file/storage system resources
  • Hardware changes, software changes
  • Change archival resource from digital tape to disks
  • Change from magnetic to optical
• The applications and users are not aware of any change
  • Significant saving by avoiding downtime
• Create a replicated resource of all or selected data

Information Lifecycle Management (ILM)
• Business-oriented management of data and resources
  • If data is in demand, replicate it or move it to higher QoS
  • Archive only the less accessed data
• Grid middleware facilitates ILM in the background
  • More than one physical resource as a single logical resource
  • Logical namespace of online and offline data
  • Irrespective of the number of replicas and resources added
• Compliance with federal regulations
  • Health, finance and many other domains now have regulations on digital backup of transactions and communication

Tutorial Outline
• Introduction to Data Grids
• Data Grid Design Philosophies
• SDSC Storage Resource Broker
• Gridflows
• DGMS Related Topics
• Q & A Session
• Hands-on/Demo Session

Using a Data Grid – in Abstract
• User asks for data from the data grid
• The data is found and returned
• Where & how details are managed by the data grid
• But access controls are specified by the owner

Real World: Physical Heterogeneities
• Multiple autonomous administrative domains
• Distributed digital entities in different domains
• Heterogeneous storage resources and systems
• Distributed users and authentication mechanisms
  • Different user preferences of usage
• Logical hierarchy
  • Users, groups, sub-organizations/departments, administrative domains, enterprises, virtual enterprises

Data Grid: Everything Is Logical
• Logical namespace of grid resources
• Collection hierarchy
• Logical resources
• Grid users and virtual organizations

Transparencies/Virtualizations (bits, data, information, ..)
[Figure: layers of transparency, from top to bottom: Semantic Data Organization (with behavior), e.g. myActiveNeuroCollection, patientRecordsCollection; Virtual Data Transparency, e.g. image.cgi, image.wsdl, image.sql; Data Replica Transparency, e.g. image_0.jpg…image_100.jpg; Data Identifier Transparency, e.g. E:\srbVault\image.jpg, /users/srbVault/image.jpg, Select … from srb.mdas.td where...; Storage Location Transparency; Storage Resource Transparency. Together these provide inter-organizational information storage management.]

Data Grid Transparencies
• Find data without knowing the identifier
  • Descriptive attributes
• Access data/storage without knowing the location
  • Logical name space
• Access data without knowing the type of storage
  • Storage repository abstraction
• Provide transformations for any data collection
  • Data behavior abstraction

Data Grid Abstractions
• Storage repository virtualization
  • Standard operations supported on storage systems
• Data virtualization
  • Logical name space for files: global persistent identifier
• Information repository virtualization
  • Standard operations to manage collections in databases
• Access virtualization
  • Standard interface to support alternate APIs
• Latency management mechanisms
  • Aggregation, parallel I/O, replication, caching
• Security interoperability
  • GSSAPI, inter-realm authentication, collection-based authorization

Data Organization
• Physical organization of the data
  • Distributed data
  • Heterogeneous resources
  • Multiple formats (structured and unstructured)
• Logical organization
  • Impose logical structure on data sets
  • Collections of semantically related data sets
  • Users create their own views (collections) of the data grid

Data Identifier Transparency
Four types of data identifiers:
• Unique name
  • OID or handle
• Descriptive name
  • Descriptive attributes (metadata)
  • Semantic access to data
• Collective name
  • Logical name space of a collection of data sets
  • Location independent
• Physical name
  • Physical location of resource and physical path of data

Mappings on Resource Name Space
• Define logical resource name
  • List of physical resources
• Replication
  • Write to a logical resource completes when all physical resources have a copy
• Load balancing
  • Write to a logical resource completes when a copy exists on the next physical resource in the list
• Fault tolerance
  • Write to a logical resource completes when copies exist on “k” of “n” physical resources

Data Replica Transparency
• Replication
  • Improve access time
  • Improve reliability
  • Provide disaster backup and preservation
  • Physically or semantically equivalent replicas
• Replica consistency
  • Synchronization across replicas on writes
  • Updates might use “m of n” or any other policy
  • Distributed locking across multiple sites
• Versions of files
  • Time-annotated snapshots of data

Latency Management – Bulk Operations
• Bulk register
  • Create a logical name for a file
• Bulk load
  • Create a copy of the file on a data grid storage repository
• Bulk unload
  • Provide containers to hold small files and pointers to each file location
• Bulk delete
  • Mark as deleted in the metadata catalog
  • After a specified interval, delete the file
• Bulk metadata load
• Requests for bulk operations for access control setting, …

In Short…
• The whole data grid infrastructure is logical
  • All physical heterogeneities and distribution are hidden
  • Flexibility needed for ever-changing business demands in enterprise data management
  • The presentation of the underlying physical infrastructure is controlled by the autonomous administrative domains in the grid
• Grid middleware has to be the “plumber”
  • Has to do a lot of “plumbing” to provide all these transparencies without any significant degradation in performance or QoS
  • Distributed data management: latency, replicas, logical namespace, metadata, P2P, database tuning, etc.

Tutorial Outline
• Introduction to Data Grids
• Data Grid Design Philosophies
• SDSC Storage Resource Broker
  o SRB Architecture
  o SRB Clients
  o SRB Demo
• Gridflows
• DGMS Related Topics
• Q & A Session
• Hands-on/Demo Session

Storage Resource Broker
• Distributed data management technology
• Developed at San Diego Supercomputer Center (Univ. of California, San Diego)
  • 1996 - DARPA Massive Data Analysis
  • 1998 - DARPA/USPTO Distributed Object Computation Testbed
  • 2000 to present - NSF, NASA, NARA, DOE, DOD, NIH, NLM, NHPRC
• Applications
  • Data grids - data sharing
  • Digital libraries - data publication
  • Persistent archives - data preservation
  • Used in national and international projects in support of Astronomy, Bio-Informatics, Biology, Earth Systems Science, Ecology, Education, Geology, Government records, High Energy Physics, Seismology

Acknowledgement: SDSC SRB Team
Arun Jagatheesan, George Kremenek, Sheau-Yen Chen, Arcot Rajasekar, Reagan Moore, Michael Wan, Roman Olschanowsky, Bing Zhu, Charlie Cowart
Not in picture: Wayne Schroeder, Tim Warnock (BIRN), Lucas Gilbert, Marcio Faerman (SCEC), Antoine De Torcy
Students: Jonathan Weinberg, Yufang Hu, Daniel Moore, Grace Lin, Allen Ding, Yi Li
Emeritus: Vicky Rowley (BIRN), Qiao Xin, Ethan Chen, Reena Mathew, Erik Vandekieft, Xi (Cynthia) Sheng

Three Tier Architecture
• Clients (any interface/API)
  • Your preferred access mechanism
• Servers (SRB Server)
Manage interactions with storage systems • Federated to support direct interactions between servers • Metadata catalog (MCAT) • Separation of metadata management from data storage • State persistence using a well-tuned database University of Florida 58 San Diego Supercomputer Center ICDM 2004 SDSC Storage Resource Broker & Meta-data Catalog Application Unix Shell C, C++, Linux Libraries I/O DLL / Python Java, NT Browsers GridFTP OAI WSDL Access APIs Consistency Management / Authorization-Authentication Logical Name Space Latency Management Catalog Abstraction Databases DB2, Oracle, Sybase, SQLServer Data Transport Metadata Transport Storage Abstraction Archives File Systems Databases HPSS, ADSM, HRM UniTree, DMF University of Florida 59 Unix, NT, Mac OSX DB2, Oracle, Postgres San Diego Supercomputer Center ICDM 2004 SRB Server Drivers Federated SRB server model Peer-to-peer Brokering Read Client Logical Name Or Attribute Condition Parallel Data Access 1 6 5/6 SRB server 3 4 5 SRB agent SRB server SRB agent 2 1.Logical-to-Physical mapping 2.Identification of Replicas 3.Access & Audit Control University of Florida MCAT Data Access R1 60 R2 San Diego Supercomputer Center ICDM 2004 Server(s) Spawning SRB Latency Management Remote Proxies, Staging Source Data Aggregation Containers Network Network Prefetch Destination Destination Replication Streaming Caching Server-initiated I/O Parallel I/O Client-initiated I/O University of Florida 61 San Diego Supercomputer Center ICDM 2004 SRB Name Spaces • Digital Entities (files, blobs, Structured data, …) • Logical name space for files for global identifiers • Resources • Logical names for managing collections of resources • User names (user-name / domain / SRB-zone) • Distinguished names for users to manage access controls • MCAT metadata • Standard metadata attributes, Dublin Core, administrative metadata University of Florida 62 San Diego Supercomputer Center ICDM 2004 Logical Name Space • Global, location-independent 
identifiers for digital entities • Organized as collection hierarchy • Attributes mapped to logical name space • Attributed managed in a database • Types of administrative metadata • Physical location of file • Owner, size, creation time, update time • Access controls University of Florida 63 San Diego Supercomputer Center ICDM 2004 Remote Proxies • Extract image cutout from Digital Palomar Sky Survey • Image size 1 Gbyte • Shipped image to server for extracting cutout took 2-4 minutes (5-10 Mbytes/sec) • Remote proxy performed cutout directly on storage repository • Extracted cutout by partial file reads • Image cutouts returned in 1-2 seconds • Remote proxies are a mechanism to aggregate I/O commands University of Florida 64 San Diego Supercomputer Center ICDM 2004 Virtual Data Abstraction • Virtual Data or “On Demand Data” • Created on demand is not already available • Recipe to create derived data • Grid based computation to create derived data product • Object based storage (extended data operations) • • • • Data subsetting at the remote storage repository Data formatting at the remote storage repository Metadata extraction at the remote storage repository Bulk data manipulation at the remote storage repository University of Florida 65 San Diego Supercomputer Center ICDM 2004 Grid Bricks • Integrate data management system, data processing system, and data storage system into a modular unit • • • • • Commodity based disk systems (1 TB) Memory (1 GB) CPU (1.7 Ghz) Network connection (Gig-E) Linux operating system • Data Grid technology to manage name spaces • User names (authentication, authorization) • File names • Collection hierarchy University of Florida 66 San Diego Supercomputer Center ICDM 2004 Grid Bricks at SDSC • Used to implement “picking” environments for 10-TB collections • Web-based access • Web services (WSDL/SOAP) for data subsetting • Implemented 15-TBs of storage • Astronomy sky surveys, NARA prototype persistent archive, NSDL web crawls • Must 
still apply Linux security patches to each Grid Brick • Grid bricks managed through SRB • Logical name space, User Ids, access controls • Load leveling of files across bricks University of Florida 68 San Diego Supercomputer Center ICDM 2004 Data Grid Federation • Data grids provide the ability to name, organize, and manage data on distributed storage resources • Federation provides a way to name, organize, and manage data on multiple data grids. University of Florida 69 San Diego Supercomputer Center ICDM 2004 SRB Zones • Each SRB zone uses a metadata catalog (MCAT) to manage the context associated with digital content • Context includes: • • • • Administrative, descriptive, authenticity attributes Users Resources Applications University of Florida 70 San Diego Supercomputer Center ICDM 2004 SRB Peer-to-Peer Federation • Mechanisms to impose consistency and access constraints on: • Resources • Controls on which zones may use a resource • User names (user-name / domain / SRB-zone) • Users may be registered into another domain, but retain their home zone, similar to Shibboleth • Data files • Controls on who specifies replication of data • MCAT metadata • Controls on who manages updates to metadata University of Florida 71 San Diego Supercomputer Center ICDM 2004 Peer-to-Peer Federation 1. 2. 3. 4. 5. 6. 7. 8. 9. 10. 
1. Occasional Interchange - for specified users
2. Replicated Catalogs - entire state information replication
3. Resource Interaction - data replication
4. Replicated Data Zones - no user interactions between zones
5. Master-Slave Zones - slaves replicate data from master zone
6. Snow-Flake Zones - hierarchy of data replication zones
7. User / Data Replica Zones - user access from remote to home zone
8. Nomadic Zones (“SRB in a Box”) - synchronize local zone to parent
9. Free-floating (“myZone”) - synchronize without a parent zone
10. Archival (“BackUp Zone”) - synchronize to an archive
SRB Version 3.0.1 released December 19, 2003

Comparison of Peer-to-Peer Federation Approaches
[Figure: the ten federation approaches (Free Floating, Occasional Interchange, Replicated Data, Resource Interaction, User and Data Replica, Nomadic, Snow Flake, Replicated Catalog, Master Slave, Archival) arranged by user-ID sharing (partial, complete, or one shared user-ID), resource sharing (none, partial, complete), metadata synchronization (none, partial, complete), system-set access controls and system-managed replication, and zone organization (hierarchical, connection from any zone, super-administrator zone control)]

Data Grid Federation: zoneSRB
[Figure: zoneSRB architecture, from top to bottom:
• Application access: C, C++, and Java libraries; Linux I/O; Unix shell; NT browsers; DLL / Python, Perl; Java; HTTP; OAI, WSDL, OGSA
• Federation management
• Consistency & metadata management / authorization-authentication audit
• Logical name space, latency management, data transport, metadata transport
• Catalog abstraction over databases: DB2, Oracle, Sybase, Postgres, mySQL, Informix
• Storage repository virtualization over databases (DB2, Oracle, Sybase, SQLserver, Postgres, mySQL, Informix), archives/tape (HPSS, ADSM, UniTree, DMF, CASTOR, ADS), file systems (Unix, NT, Mac OSX), and ORB]

SDSC SRB Clients
• C library calls
    • Provide access to all SRB functions
• Shell commands
    • Provide access to all SRB functions
• mySRB web browser
    • Provides a hierarchical collection view
• inQ Windows browser
    • Provides a Windows-style directory view
• Jargon Java API
    • Similar to the java.io API
• Matrix WSDL/SOAP interface
    • Aggregates multiple SRB requests into one SOAP request; has a Java API and GUI
• Python, Perl, C++, OAI, Windows DLL, Mac DLL, Linux I/O redirection, GridFTP (soon)

SDSC SRB Demo
• If possible from the venue
• Constraints: wireless, Murphy’s live-demo laws; WAN, SRB, storage, …

What we are familiar with …

What we are not familiar with, yet =)
inQ Windows Browser Interface

How do they differ?
• A folder does NOT mean a physical folder
• Files do NOT mean physical files
• Everything is logical
• Everything is distributed
• Permissions are NOT Unix-style rwxrwxrwx
• Permissions are set on an object-by-object basis

inQ
• Windows OS only
• User Guide at http://www.npaci.edu/dice/srb/inQ/inQ.html
• Download the .exe from http://www.npaci.edu/dice/srb/inQ/downloads.html

mySRB
• Web-based access to the SRB
• Secure HTTP
    • https://srb.npaci.edu/mySRB2v7.shtml
• Uses cookies for session control

mySRB Features
• Access to both data and metadata
• Data & file management
• Collection creation and management
• Metadata handling
• Browsing & querying interface
• Access control
• New file creation without upload

mySRB Interface to an SRB Collection

Provenance Metadata

SDSC SRB Information
• srb-chat@sdsc.edu
    • SRB user community posts problems and solutions
• srb@sdsc.edu
    • Request a copy of the source
• http://www.npaci.edu/DICE/SRB/
    • Access FAQ, installation instructions, papers
• http://srb.npaci.edu/bugs/
    • SRB-Zilla (Bugzilla)

SRB Availability
• SRB source distributed to academic and research institutions
• Commercial use access through the UCSD Technology Transfer Office
    • William Decker, WJDecker@ucsd.edu

Tutorial Outline
Introduction to Data Grids
Data Grid Design philosophies
SDSC Storage Resource Broker
Gridflows
    o Introduction
    o Matrix Project
    o Data Grid Language
DGMS Related Topics
Q & A Session
Hands-on/Demo Session
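The “How do they differ?” slide in the SRB section says that SRB folders and files are logical, that data is distributed, and that permissions are granted object by object instead of through Unix rwxrwxrwx bits. The following minimal Python sketch illustrates that model with a toy in-memory catalog. It is not the SRB or MCAT API: the class and method names, the resource names, and the permission strings are hypothetical, chosen only to show a logical path resolving to physical replicas after a per-object access check.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LogicalObject:
    """One logical file: a single name, any number of physical replicas, its own ACL."""
    logical_path: str
    replicas: list[tuple[str, str]] = field(default_factory=list)  # (resource, physical path)
    acl: dict[str, set[str]] = field(default_factory=dict)         # user -> permissions


class LogicalNamespace:
    """Hypothetical toy catalog; real SRB keeps this kind of state in MCAT."""

    def __init__(self) -> None:
        self._objects: dict[str, LogicalObject] = {}

    def register(self, logical_path: str, owner: str) -> None:
        # The owner gets full rights on this one object only.
        self._objects[logical_path] = LogicalObject(
            logical_path, acl={owner: {"read", "write", "own"}}
        )

    def add_replica(self, logical_path: str, resource: str, physical_path: str) -> None:
        self._objects[logical_path].replicas.append((resource, physical_path))

    def can(self, user: str, logical_path: str, permission: str) -> bool:
        return permission in self._objects[logical_path].acl.get(user, set())

    def grant(self, grantor: str, user: str, logical_path: str, permission: str) -> None:
        # Only an owner of this specific object may change its ACL.
        if not self.can(grantor, logical_path, "own"):
            raise PermissionError(f"{grantor} does not own {logical_path}")
        self._objects[logical_path].acl.setdefault(user, set()).add(permission)

    def resolve(self, user: str, logical_path: str) -> tuple[str, str]:
        """Check read access, then return the first registered replica."""
        if not self.can(user, logical_path, "read"):
            raise PermissionError(f"{user} may not read {logical_path}")
        replicas = self._objects[logical_path].replicas
        if not replicas:
            raise FileNotFoundError(f"no replica registered for {logical_path}")
        return replicas[0]

    def list_collection(self, collection: str) -> list[str]:
        """A 'folder' is only a logical-path prefix, not a physical directory."""
        prefix = collection.rstrip("/") + "/"
        return sorted(p for p in self._objects if p.startswith(prefix))


if __name__ == "__main__":
    ns = LogicalNamespace()
    path = "/home/demo.sdsc/run1/result.dat"
    ns.register(path, owner="demo")
    ns.add_replica(path, "unix-resource-a", "/vault/0042.dat")
    ns.add_replica(path, "archive-resource-b", "/tape/7/0042")
    ns.grant("demo", "alice", path, "read")
    print(ns.list_collection("/home/demo.sdsc/run1"))
    print(ns.resolve("alice", path))
    print(ns.can("bob", path, "read"))
```

Replica selection here simply takes the first registered entry; a fuller broker would also weigh resource availability and location before choosing a physical copy.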
Work in Progress
GfMS is a ‘Hard Hat Area’ (Research)

Data Handling Pipeline in SCEC (Data Information Pipeline)
• The pipeline could be triggered by input at the data source or by a data request from a user
• Stages: ingest data, ingest metadata, metadata derivation
• Determine the analysis pipeline
• Initiate automated analysis
• Use the optimal set of resources based on the task, on demand
• Organize result data into distributed data grid collections
• All gridflow activities stored for data flow provenance

Gridflows (Grid Workflow)
• Automation of an execution pipeline
    • Data and/or tasks processed by multiple autonomous grid resources
    • According to a set of procedural rules
• Confluence of multiple autonomous administrative domains
• Gridflow execution servers
    • Themselves belong to autonomous administrative domains
    • P2P (distributed) control

Need for Gridflows
• Data-intensive and/or compute-intensive processes
    • Long-running processes or pipelines on the Grid
    • (e.g.) If job A completes, execute jobs x, y, z; else execute job B.
• Self-organization/management of data
    • Semi-automation of data, storage distribution, and curation processes
    • (e.g.) After each data insert into a collection, update the metadata about the collection or replicate the collection
• Knowledge generation
    • Offline data analysis and knowledge generation pipelines
    • (e.g.) What inferences can be drawn from the new seismology graphs added to this collection? Which domain scientists will be interested in studying these new possible pre-results?
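The first “Need for Gridflows” example (if job A completes, execute jobs x, y, z; otherwise execute job B) is execution flow logic kept separate from the jobs themselves. A minimal Python sketch of that pattern follows, assuming each job is a local callable that returns True on success; the `Step`, `IfElse`, and `run` names are hypothetical. A real gridflow server would dispatch the steps to autonomous grid resources (and could run x, y, z in parallel); this sketch runs them sequentially and keeps a simple log as a stand-in for provenance.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Step:
    name: str
    action: Callable[[dict], bool]  # returns True if the job completed successfully


@dataclass
class IfElse:
    """If `condition` completes, run `then_steps`; otherwise run `else_steps`."""
    condition: Step
    then_steps: list[Step] = field(default_factory=list)
    else_steps: list[Step] = field(default_factory=list)


def run(flow: IfElse, context: dict) -> list[str]:
    """Execute the flow and return a 'step:outcome' log as minimal provenance."""
    log: list[str] = []

    def execute(step: Step) -> bool:
        ok = step.action(context)
        log.append(f"{step.name}:{'ok' if ok else 'failed'}")
        return ok

    # The branch is chosen only after the condition job has actually run.
    branch = flow.then_steps if execute(flow.condition) else flow.else_steps
    for step in branch:
        execute(step)
    return log


if __name__ == "__main__":
    def succeed(ctx: dict) -> bool:
        return True

    flow = IfElse(
        condition=Step("A", lambda ctx: ctx.get("input_ready", False)),
        then_steps=[Step("x", succeed), Step("y", succeed), Step("z", succeed)],
        else_steps=[Step("B", succeed)],
    )
    print(run(flow, {"input_ready": True}))
    print(run(flow, {"input_ready": False}))
```

Because the jobs are passed in as opaque callables, the same `IfElse` structure can be reused with different applications, which mirrors the slide's point that execution logic is described independently of application logic.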
SDSC Matrix Project
• CS research & development
    • Gridflow description, data grid administration rules
    • Gridflow P2P protocols for gridflow server communication
• Development
    • SRB data grid web services
    • SRB datagrid flow automation and provenance
• Theory to practice
    • Help in customized development & deployment of gridflow concepts in scientific / grid applications
    • Visibility for, and assistance with, standardization efforts at GGF

Advantages from a Data Grid Perspective
• Reduces client-server communication
    • The whole execution logic is sent to the server
    • Fewer WAN messages
    • Our experiments showed a significant increase in performance
• Datagrid information lifecycle management
    • Autonomic: “Move data at 9:00 PM on weekdays and on weekends”
• Data grid administration
    • Power users and sophisticated users
    • Data grid administrator (rules to manage the data grid)
    • Scientist or librarian (visual data flow programming)

What do they want?
• “We know the business (scientific) process”
• “Cyberinfrastructure is all we care about (why bother about atoms or DNA)”

What do they want?
• Use DGL to describe your process logic with abstract references to datagrid infrastructure dependencies

Why a Gridflow Language?
• Infrastructure-independent description
    • Abstract references to hardware and cyberinfrastructure
• Description of execution flow logic
    • Separate the execution flow logic from application logic
    • (e.g.) MonteCarlo is an application; executing it 10 times, or until a variable becomes zero, is execution logic
• Procedural rules associated with execution flow
• Provenance
    • What happened, when, who, how …?
      (and querying)

Gridflow Language Requirements
• High-level abstract descriptions
    • Abstract description of cyberinfrastructure dependencies
• Simple yet flexible
    • Flexible enough to describe complex requirements (no brute force)
• Gridflow dependency patterns
    • Based on execution structure and data semantics
    • (Parallel, sequential, fork-new), (milestones, for-each, switch-case), …
• Asynchronous execution
    • For long-running requests
• Querying using an existing standard
    • XQuery

Gridflow Language Requirements (continued)
• Process metadata and annotations
    • Runtime definition, update, and querying of metadata
• Runtime management of gridflows
    • Stop a gridflow at run time
• Partitioning
    • Facility in the language to divide a gridflow request into multiple requests (excellent research topic)
• Import descriptions
    • Refer to other gridflows in execution

Data Grid Language (DGL)
• XML-based gridflow description
    • Describes execution flow logic
• ECA-based rule description for execution
    • ECA = Event, Condition, Action
• Querying of gridflow status
    • XQuery / simple query of a gridflow execution
• Scoped variables and gridflow patterns
    • For control of execution flow logic

DGL Requests
• Data Grid Flow
    • An XML structure that describes the execution logic, associated procedural rules, and grid environment variables
• Status Query
    • An XML structure used to query the execution status of any gridflow or sub-flow at any level of granularity
• A DGL or Matrix client sends either of these to the Matrix Server

Data Grid Request
• Annotations about the Data Grid Request
• Can be either a Flow or a Status Query

Grid User
<GridUser>
    <userID>Matrix-demo</userID>
    <organization>
        <organizationName>sdsc</organizationName>
    </organization>
    <challenge-Response>******</challenge-Response>
    <homeDirectory>/home/Matrixdemo.sdsc</homeDirectory>
    <defaultStorageResource>sdscunix</defaultStorageResource>
    <phoneNumber>0</phoneNumber>
    <e-mail>arun@sdsc.edu</e-mail>
</GridUser>

Grid Ticket

VO Info

Flow
• Scoped variables that can control the flow
• Logic used by the sub-members
• Sub-members that are the real execution statements

Using DG-Modeler
• GUI for dataflow programming

Gridflow Process I
[Figure: an end user, using DGBuilder, writes a gridflow description in Data Grid Language]

Gridflow Process II
[Figure: abstract gridflow (Data Grid Language) → Planner → concrete gridflow (Data Grid Language)]

Gridflow Process III
[Figure: concrete gridflow (Data Grid Language) → Gridflow Processor → Gridflow P2P Network]

Other Gridflow Research Projects
• GriPhyN Pegasus, Sphinx, Matrix
• Taverna (myGrid)
• Kepler (also from SDSC)
• GridAnt
• …

Tutorial Outline
Introduction to Data Grids
Data Grid Design philosophies
SDSC Storage Resource Broker
Gridflows
DGMS Related Topics
Q & A Session
Hands-on/Demo Session

DGMS Philosophy
• Collective view of
    • Inter-organizational data
    • Operations on the datagrid space
    • Local autonomy and global state consistency
• Collaborative datagrid communities
    • Multiple administrative domains or “Grid Zones”
• Self-describing and self-manipulating data
    • Horizontal and vertical behavior
    • Loose (dynamic) coupling between data and behavior
• Relationships between a digital entity and its physical locations, logical names, metadata, access control, behavior, and “Grid Zones”

DGMS Research Issues
• Self-organization of datagrid communities
    • Using knowledge relationships across the datagrids
    • Inter-datagrid operations based on the semantics of data in the communities (different ontologies)
• High-speed data transfer
    • Terabytes to transfer
    • Protocols and routers needed
• Latency management
    • Data source speed >> data sink speed
• Datagrid constraints
    • Data placement and scheduling
    • How many replicas, where to place them…

Work Vision Ahead
Half-baked research ahead

Active Datagrid Collections
[Figure: resources (National Lab, SDSC, University of Gators), data sets (Thit.xml, Hits.sql, 121.Event), and behavior (getEvents(), addEvent())]

Active Datagrid Collections
[Figure: heterogeneous, distributed physical data (Thit.xml at National Lab, Hits.sql at SDSC) alongside dynamic or virtual data (121.Event, with getEvents() and addEvent())]

Active Datagrid Collections
Logical collection gives location and naming transparency
[Figure: logical collection myHEP-Collection, with metadata, spanning 121.Event, Thit.xml (National Lab), and Hits.sql (SDSC)]

Active Datagrid Collections
Now add behavior or services to this logical collection
[Figure: myHEP-Collection with metadata, collection state and services, and horizontal services (getEvents(), addEvent()) over the same data]

Active Datagrid Collections
ADC: logical view of data & operations
[Figure: ADC-specific operations plus model-view-controllers layered over myHEP-Collection's state, services, metadata, and horizontal services]

Active Datagrid Collections
• Digital entities: physical and virtual data present in the datagrid
• Metadata: standardized schema with domain-specific schema extensions
• Services: horizontal datagrid services and vertical domain-specific services (portType) or pipelines (DGL)
• State: events, collective state, mappings to domain services to be invoked

Related Technologies/Links
• A complete history of the Grid
• SDSC Storage Resource Broker
• Globus Data Grid
• The Legion Project

Global Grid Forum (GGF)
• Global forum for information exchange and collaboration
• Promotes and supports the development and deployment of Grid technologies
    • Creation and documentation of “best practices”, technical specifications (standards), user experiences, …
• Modeled after the Internet Standards Process (IETF, RFC 2026)
• http://www.ggf.org

Tutorial Outline
Introduction to Data Grids
Data Grid Design philosophies
SDSC Storage Resource Broker
Gridflows
DGMS Related Topics
Q & A Session
Hands-on/Demo Session

Data Grid Mining
• Distributed data mining
    • Underlying infrastructure is heterogeneous (not a uniform LAN, bandwidth, or memory)
    • Mining software (algorithms) should take advantage of the logical resource namespace to select the execution site
    • Can the mining software estimate and acquire the required
      cyberinfrastructure resources before it starts? Grid standards must evolve to communicate this infrastructure-dependent information
    • Co-location of dependent data or tasks; distribution (parallel execution) of independent tasks across different domains

Data Grid Mining
• Using the data grid to mine data
    • Replicating or selecting the right resources for mining
• Cost-based analysis for the best utilization of the heterogeneous infrastructure
    • Data, mining software, execution location
    • Move data, code, or the execution location to an alternative location based on QoS and available budget
• Co-locating or distributing appropriate data mining steps
    • (e.g.) NVO co-add at FNAL and SDSC (distribution + co-location)

Q & A Session; Feedback
Being here in this room today makes a significant difference compared with flipping through the slides on the internet later, so let's make sure we all benefit from the 3 hours we spent here.

Tutorial Outline
Introduction to Data Grids
Data Grid Design philosophies
SDSC Storage Resource Broker
Gridflows
DGMS Related Topics
Q & A Session
Hands-on/Demo Session

For More Information
Arun Swaran Jagatheesan
San Diego Supercomputer Center
University of California, San Diego
arun@sdsc.edu
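The cost-based analysis on the second “Data Grid Mining” slide (move data, code, or the execution location based on QoS and available budget) can be sketched as picking the site with the lowest estimated completion time whose charge fits the budget. The Python sketch below assumes a deliberately simple, hypothetical cost model: completion time is transfer time (data size divided by bandwidth to the site, zero when the site already holds the data) plus compute time, and the charge is compute time multiplied by a per-second rate. The `Site` fields, site names, and units are illustrative, not part of any SRB or grid API.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    bandwidth_mb_s: float  # MB/s from the data's current location; math.inf if co-located
    work_per_s: float      # mining work units processed per second
    charge_per_s: float    # hypothetical price per second of compute


def estimate(site: Site, data_mb: float, work_units: float) -> tuple[float, float]:
    """Return (completion time in seconds, charge) under the toy cost model."""
    transfer_s = 0.0 if math.isinf(site.bandwidth_mb_s) else data_mb / site.bandwidth_mb_s
    compute_s = work_units / site.work_per_s
    return transfer_s + compute_s, compute_s * site.charge_per_s


def choose_site(sites: list[Site], data_mb: float, work_units: float,
                budget: float) -> tuple[str, float]:
    """Pick the fastest site whose charge fits the budget."""
    best: tuple[str, float] | None = None
    for site in sites:
        seconds, charge = estimate(site, data_mb, work_units)
        if charge > budget:
            continue  # too expensive: skip regardless of speed
        if best is None or seconds < best[1]:
            best = (site.name, seconds)
    if best is None:
        raise ValueError("no site fits the budget")
    return best


if __name__ == "__main__":
    sites = [
        Site("data-site", math.inf, 1.0, 0.01),  # move the code to the data
        Site("fast-cluster", 100.0, 10.0, 0.5),  # move the data to faster compute
    ]
    print(choose_site(sites, data_mb=10_000, work_units=1_000, budget=100))
    print(choose_site(sites, data_mb=10_000, work_units=1_000, budget=20))
```

Co-location is expressed here as infinite bandwidth, which is one simple way to model “move the code to the data”; a fuller model would also account for QoS targets, queue waits, and replica choices.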