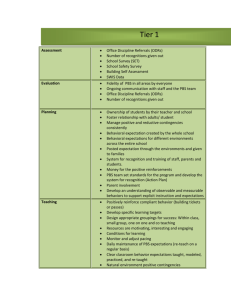

HPCL Cluster

Using Parallel Computing Resources at Marquette

HPC Resources

• Local Resources

– HPCL Cluster: hpcl.mscs.mu.edu

– PARIO Cluster: pario.eng.mu.edu

– PERE Cluster: pere.marquette.edu

– MU Grid

• Regional Resources

– Milwaukee Institute

– SeWhip

• National Resources

– NCSA: http://www.ncsa.illinois.edu/

– ANL: http://www.anl.gov/

– TeraGrid Resources: http://www.teragrid.org/

• Commercial Resources

– Amazon EC2: http://aws.amazon.com/ec2/

Pere Cluster

• 128 HP ProLiant BL280c G6 server blades

• 1024 Intel Xeon 5550 cores (Nehalem)

• 50 TB raw storage

• 3 TB main memory

[Figure: Pere cluster layout. The head node connects to MARQNET (134.48.) and to compute nodes #1 through #128.]

Steps to Run A Parallel Code

1. Get the source code

– Write it on your local computer and then transfer it to hpcl.mscs.mu.edu, or

– Use vi to create it directly on hpcl.mscs.mu.edu

2. Compile your source code using mpicc, mpicxx or mpif77

3. Write a submission script for your job

– vi myscript.sh

4. Use qsub to submit the script.

– qsub myscript.sh

hello.c

Getting Parallel Code

You can write the code on your development machine using an IDE and then transfer it to the cluster. (Recommended)

For small programs, you can also edit the code directly on the cluster.

Transfer Files to the Cluster

• Method 1: sftp (text or GUI)

sftp mscs6060@pere.marquette.edu

put simple.c

bye

• Method 2: scp

scp simple.c mscs6060@pere.marquette.edu:example/

• Method 3: rsync

rsync --rsh=ssh -av example mscs6060@pere.marquette.edu:

Compile MPI Programs

• Method 1: Using MPI compiler wrappers

– mpicc: for C code

– mpicxx/mpic++/mpiCC: for C++ code

– mpif77, mpif90: for Fortran code

Examples: mpicc -o hello hello.c

mpif90 -o hello hello.f

Compile MPI Programs (cont.)

• Method 2: Using standard compilers with the MPI library

Note: MPI is just a library, so you can link it into your code with a standard compiler to build the executable.

Examples: gcc -o ping ping.c \

-I/usr/mpi/gcc/openmpi-1.2.8/include \

-L/usr/mpi/gcc/openmpi-1.2.8/lib64 -lmpi
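
The include and library flags in this example both follow from the MPI installation prefix. The sketch below derives them from that prefix so the command is easier to adapt to another MPI build. The helper name mpi_flags and the MPI_HOME variable are hypothetical, and the lib64 subdirectory is assumed from the example above; other installations may use lib instead.

```shell
#!/bin/sh
# Hypothetical helper: print the compile and link flags for an MPI
# installation rooted at the given prefix (lib64 layout assumed).
mpi_flags() {
    prefix=$1
    printf '%s %s %s\n' "-I$prefix/include" "-L$prefix/lib64" "-lmpi"
}

# Prefix taken from the example above; change it for another MPI build.
MPI_HOME=/usr/mpi/gcc/openmpi-1.2.8

# Show the full command instead of running it, so it can be checked first.
echo "gcc -o ping ping.c $(mpi_flags "$MPI_HOME")"
```

With Open MPI, running mpicc --showme prints the flags the wrapper itself would pass to the underlying compiler, which is a useful cross-check.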

Compiling Parallel Code – Using Makefile

Job Scheduler

• Software that provides

– Job submission and automatic execution

– Job monitoring and control

– Resource management

– Priority management

– Checkpoint

– …

• Usually implemented with a master/slave architecture

Commonly used Job Schedulers

PBS: PBS Pro/TORQUE

SGE (Sun Grid Engine, Oracle)

LSF (Platform Computing)

Condor (UW Madison)

Access the Pere Cluster

• Access

– ssh <your-marquette-id>@pere.marquette.edu

• Account management

– Based on Active Directory: log in to Pere with the same username and password you use for your Marquette email.

– Ask your professor to help you sign up.

• Transfer files from/to Pere

Modules

• The Modules package is used to customize your environment settings.

– It controls which version of a software package is used when you compile or run a program.

• Using modules

– module avail: check which modules are available

– module load <module>: set up shell variables to use a module

– module unload <module>: remove a module

– module list: show all loaded modules

– module help: get help on using a module

Using MPI on Pere

• Multiple MPI compilers are available; each may need different syntax

– OpenMPI compiler (/usr/mpi/gcc/openmpi-1.2.8)

• mpicc -o prog prog.c

• mpif90 -o prog prog.f

– mvapich compiler (/usr/mpi/gcc/mvapich-1.1.0)

• mpicc -o prog prog.c

• mpif90 -o prog prog.f

– PGI compiler (/cluster/pgi/linux86-64/10.2)

• pgcc -Mmpi -o prog prog.c

• pgf90 -Mmpi -o prog prog.f

– Intel compiler

• icc -o prog prog.c -lmpi

• ifort -o prog prog.f -lmpi

Pere Batch Queues

• Pere currently runs PBS/TORQUE

• TORQUE usage

– qsub myjob.qsub: submit job scripts

– qstat: view job status

– qdel job-id: delete job

– pbsnodes: show nodes status

– pbstop: show queue status

Sample Job Scripts on Pere

• #!/bin/sh

• #PBS -N hpl

Assign a name to the job

• #PBS -l nodes=64:ppn=8,walltime=01:00:00

Request resources: 64 nodes, each with 8 processors, 1 hour

• #PBS -q batch

Submit to batch queue

• #PBS -j oe

Merge stdout and stderr output

• #PBS -o hpl-$PBS_JOBID.log

Redirect output to a file

• cd $PBS_O_WORKDIR

Change work dir to current dir

• cat $PBS_NODEFILE

Print allocated nodes (not required)

• mpirun -np 512 --hostfile `echo $PBS_NODEFILE` xhpl
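
The number passed to mpirun -np should equal nodes times processors per node (64 × 8 = 512 here), and the two are easy to get out of step when a script is edited by hand. The sketch below generates a job script from those values so the count is always computed. The function name make_pbs_job and the output file hpl.qsub are hypothetical, and the resource line uses the common TORQUE form -l nodes=N:ppn=P,walltime=HH:MM:SS, which is an assumption about how this cluster expects requests to be written.

```shell
#!/bin/sh
# Hypothetical helper: print a TORQUE job script for an MPI program.
# Usage: make_pbs_job NAME NODES PPN WALLTIME PROGRAM
make_pbs_job() {
    name=$1
    nodes=$2
    ppn=$3
    walltime=$4
    prog=$5
    # Total MPI ranks = nodes x processors per node.
    np=$((nodes * ppn))
    # \$ keeps the PBS variables literal so they expand when the job runs.
    cat <<EOF
#!/bin/sh
#PBS -N $name
#PBS -l nodes=$nodes:ppn=$ppn,walltime=$walltime
#PBS -q batch
#PBS -j oe
#PBS -o $name-\$PBS_JOBID.log
cd \$PBS_O_WORKDIR
mpirun -np $np --hostfile \$PBS_NODEFILE $prog
EOF
}

# Reproduce the sample job: 64 nodes, 8 processors each, 1 hour.
make_pbs_job hpl 64 8 01:00:00 ./xhpl > hpl.qsub
echo "wrote hpl.qsub; submit it with: qsub hpl.qsub"
```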

Extra Help For Accessing Pere

• Contact me.

• User’s guide for pere

Using Condor

• Resources:

– http://www.cs.wisc.edu/condor/tutorials/

Using Condor

1. Write a submit script – simple.job

Universe = vanilla

Executable = simple

Arguments = 4 10

Log = simple.log

Output = simple.out

Error = simple.error

Queue

2. Submit the script to the Condor pool

condor_submit simple.job

3. Watch the job run

condor_q

condor_q -sub <yourusername>

Doing a Parameter Sweep

You can put a collection of jobs in the same submit script to do a parameter sweep.

Universe = vanilla

Executable = simple

Arguments = 4 10

Log = simple.log

Output = simple.$(Process).out

Error = simple.$(Process).error

Queue

Arguments = 4 11

Queue

Arguments = 4 12

Queue

• Output and Error use $(Process) to tell Condor to use a different output file for each job.

• Each Queue statement submits one individual job with the Arguments set above it.

• The jobs can be run independently.
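
Writing one Arguments/Queue pair per job by hand gets tedious for larger sweeps. As a sketch, the submit script can be generated with a short loop. The function name make_sweep and the output file name sweep.job are hypothetical; the generated content follows the same pattern as the submit script above.

```shell
#!/bin/sh
# Hypothetical helper: print a Condor submit description that queues one
# job per value of the second program argument.
# Usage: make_sweep EXECUTABLE FIRST_ARG VALUE...
make_sweep() {
    exe=$1
    first=$2
    shift 2
    # \$(Process) stays literal so Condor, not the shell, expands it.
    cat <<EOF
Universe   = vanilla
Executable = $exe
Log        = $exe.log
Output     = $exe.\$(Process).out
Error      = $exe.\$(Process).error
EOF
    # One Arguments/Queue pair per remaining value.
    for value in "$@"; do
        printf 'Arguments  = %s %s\nQueue\n' "$first" "$value"
    done
}

# Same sweep as above: second argument takes the values 10, 11, 12.
make_sweep simple 4 10 11 12 > sweep.job
cat sweep.job
```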

Condor DAGMan

DAGMan lets you submit complex sequences of jobs, as long as they can be expressed as a directed acyclic graph (DAG).

Each job in the DAG can have only one Queue statement in its submit script.

Commands: condor_submit_dag simple.dag

./watch_condor_q
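
A DAG file names each job with a JOB line and states ordering with PARENT ... CHILD lines. The sketch below writes a small diamond-shaped DAG to simple.dag. All job names and submit-file names in it are hypothetical and would have to exist as ordinary one-Queue submit scripts before the DAG could be submitted.

```shell
#!/bin/sh
# Write a small DAG: one setup job, two independent workers, and a final
# job that runs only after both workers have finished.
# Job names and submit-file names are hypothetical placeholders.
cat > simple.dag <<'EOF'
JOB Setup   setup.job
JOB WorkA   work_a.job
JOB WorkB   work_b.job
JOB Collect collect.job
PARENT Setup CHILD WorkA WorkB
PARENT WorkA WorkB CHILD Collect
EOF

# Show the result; on a Condor machine it would be submitted with
#   condor_submit_dag simple.dag
cat simple.dag
```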

Submit MPI Jobs to Condor

Difference from serial jobs: use the MPI universe and set machine_count > 1.

When there is no shared file system, transfer executables and output from/to local systems by specifying should_transfer_files and when_to_transfer_output.

Questions

• How do you implement a parameter sweep using SGE/PBS?

• How do you implement a DAG on SGE/PBS?

• Are there better ways to run a large number of jobs on the cluster?

• Which resource should I use, and where can I find help?
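
As one possible starting point for the first question, both SGE and TORQUE support array jobs: qsub -t 1-3 runs the same script three times and gives each run a task number. The sketch below maps that task number to the second argument used in the Condor sweep. The script name sweep.sh and the helper arg_for_task are hypothetical, and whether array jobs are enabled on a given cluster is an assumption to check with its administrators.

```shell
#!/bin/sh
# Sketch of an array-job body. Submit with, for example:
#   qsub -t 1-3 sweep.sh
# SGE sets SGE_TASK_ID and TORQUE sets PBS_ARRAYID for each task.
# When run by hand with neither variable set, fall back to task 1.
task_id=${SGE_TASK_ID:-${PBS_ARRAYID:-1}}

# Hypothetical mapping: task 1, 2, 3 -> second argument 10, 11, 12.
arg_for_task() {
    echo $(( $1 + 9 ))
}

second_arg=$(arg_for_task "$task_id")
echo "task $task_id would run: ./simple 4 $second_arg"

# In a real job the program itself would be started here, for example:
#   ./simple 4 "$second_arg" > "simple.$task_id.out"
```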

HPCL Cluster

[Figure: HPCL cluster layout. The head node connects to MARQNET (134.48.) and to compute nodes #1 through #4.]

How to Access the HPCL Cluster

On Windows: use SSH Secure Shell or PuTTY

On Linux: use the ssh command

Developing & Running Parallel Code

[Figure: development workflow with the following stages]

• Identify Problem & Analyze Requirement

• Analyze Performance Bottleneck

• Designing Parallel Algorithm

• Coding: Writing Parallel Code

• Compiling: Building Binary Code

• Testing Code

• Running: Solving Realistic Problems (Running Production Release)

Steps to Run A Parallel Code

1. Get the source code

– Write it on your local computer and then transfer it to hpcl.mscs.mu.edu, or

– Use vi to create it directly on hpcl.mscs.mu.edu

2. Compile your source code using mpicc, mpicxx or mpif77

– They are located under /opt/openmpi/bin. Use the which command to find their location.

– If they are not in your path, add the following line to your shell initialization file (e.g., ~/.bash_profile):

export PATH=/opt/openmpi/bin:$PATH
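
If the initialization file is sourced more than once, a plain export line keeps prepending the same directory. A slightly more defensive sketch, using the same /opt/openmpi/bin location, adds the directory only when it is missing and then reports where mpicc resolves. The variable name MPI_BIN is hypothetical.

```shell
# Prepend the MPI bin directory to PATH only if it is not already there.
MPI_BIN=/opt/openmpi/bin
case ":$PATH:" in
    *":$MPI_BIN:"*)
        ;;                              # already present, nothing to do
    *)
        PATH="$MPI_BIN:$PATH"
        export PATH
        ;;
esac

# Report where the compiler wrapper resolves, or say it was not found.
command -v mpicc || echo "mpicc not found in PATH"
```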

3. Write a submission script for your job

– vi myscript.sh

4. Use qsub to submit the script.

– qsub myscript.sh


Transfer Files to the Cluster

• Method 1: sftp (text or GUI)

sftp mscs6060@hpcl.mscs.mu.edu

put simple.c

bye

• Method 2: scp

scp simple.c mscs6060@hpcl.mscs.mu.edu:example/

• Method 3: rsync

rsync --rsh=ssh -av example mscs6060@hpcl.mscs.mu.edu:

• Method 4: svn or cvs

svn co svn+ssh://hpcl.mscs.mu.edu/mscs6060/example

Compile MPI Programs

• Method 1: Using MPI compiler wrappers

– mpicc: for C code

– mpicxx/mpic++/mpiCC: for C++ code

– mpif77, mpif90: for Fortran code

Examples: mpicc -o hello hello.c

mpif90 -o hello hello.f

Check the cluster documentation or consult the system administrators to find out which compilers are available and where they are located.


Using SGE to Manage Jobs

• The HPCL cluster uses SGE as its job scheduler

• Basic commands

– qsub submit a job to the batch scheduler

– qstat examine the job queue

– qdel delete a job from the queue

• Other commands

– qconf SGE queue configuration

– qmon graphical user interface for SGE

– qhost show the status of SGE hosts, queues, jobs

simple.sh

Submit a Serial Job
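
A serial job script such as simple.sh might look like the sketch below. The #$ lines are standard SGE options, but the job name, the log file name, and the command the job runs are assumptions made for illustration. Because the directives are ordinary shell comments, the script also runs unchanged from the command line, which is a quick way to test it before submitting with qsub simple.sh.

```shell
#!/bin/bash
# Run the job under bash.
#$ -S /bin/bash
# Job name (hypothetical).
#$ -N simple
# Run in the directory the job was submitted from.
#$ -cwd
# Merge stderr into stdout.
#$ -j y
# Write the merged output to this file (hypothetical name).
#$ -o simple.log

# Placeholder workload: report which machine ran the job.
msg="Running on $(uname -n)"
echo "$msg"
```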

Submit Parallel Jobs to HPCL Cluster

• Force bash as the shell interpreter

• Request the parallel environment orte with 64 slots (or processors)

• Run the job in the specified directory

• Merge the two output files (stdout, stderr)

• Redirect output to a log file

• Run the MPI program

For your program, you may need to change the number of processors, the program name on the last line, and the job name.
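
The annotations above map onto SGE directives roughly as in the sketch below, which writes a job script to hello.sge. The job name, log file name, and program name are placeholders, and -cwd (run in the submission directory) is an assumption for the "specified directory" step. NSLOTS is set by SGE to the number of slots actually granted, so the mpirun line does not need to repeat the 64.

```shell
#!/bin/sh
# Write an SGE job script for an MPI program. The quoted 'EOF' keeps
# $NSLOTS literal so it is expanded when the job runs, not now.
cat > hello.sge <<'EOF'
#!/bin/bash
# Force bash as the shell interpreter
#$ -S /bin/bash
# Job name (placeholder)
#$ -N hello
# Request the parallel environment orte with 64 slots
#$ -pe orte 64
# Run the job in the directory it was submitted from
#$ -cwd
# Merge stdout and stderr
#$ -j y
# Redirect output to a log file (placeholder name)
#$ -o hello.log
# Run the MPI program on all granted slots
mpirun -np $NSLOTS ./hello
EOF

echo "wrote hello.sge; submit it with: qsub hello.sge"
```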

References

• SUN Grid Engine User’s Guide http://docs.sun.com/app/docs/doc/817-6117

• Commonly used commands

– Submit job: qsub

– Check status: qstat

– Delete job: qdel

– Check configuration: qconf

• Check the manual of a command

– man qsub