root

advertisement

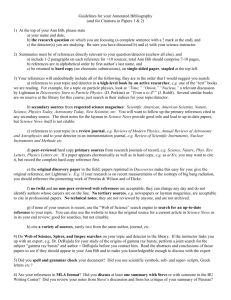

A Proposal for the MEG

Offline Systems

Corrado Gatto

Lecce 1/20/2004

Outlook

Choice of the framework: ROOT

–

–

–

–

–

Architecture of the offline systems

–

–

–

–

–

Offline Requirements for MEG

ROOT I/O Systems: is it suitable?

LAN/WAN file support

Tape Storage Support

Parallel Code Execution Support

Computing Model

General Architecture

Database Model

Montecarlo

Offline Organization

Offline

Sotfware

Framework

Services

Experiment

Independent

Already Discussed (phone meeting Jan 9th, 2004)

– Why ROOT?

Coming Soon…

Next Meeting (PSI Feb 9th, 2004)

– Data Model (Object Database vs ROOT+RDBMS)

– More on ROOT I/O

– UI and GUI

– GEANT3 compatibility

Dataflow and Reconstruction

Requirements

100 Hz L3 trigger

evt size : 1.2 MB

Raw Data throughput: (10+10)Hz 1.2Mb/Phys evt 0.1

+ 80Hz 0.01 Mb/bkg evt = 3.5 Mb/s

<evt size> : 35 kB

Total raw data storage: 3.5Mb/s 107s = 35 TB/yr

Framework Implementation

Constraints

• Geant3 compatible (at least at the beginning)

• Written and maintained by few people

• Low level of concurrent access to reco data

• Scalability (because of the uncertainty of the event rate)

Compare to BABAR

No. of Subsystems

No. of Channels

Event Rate

Raw Event Size

L3 to Reconstruction

Reco to HSS

Storage

Requirements

(including MC)

BABAR

MEG

5

3

~250,000

~1000

109 event/yr

109 event/yr

32 kB

1.2 MB

30 Hz+70Hz

(2.5 MB/s)

100 Hz (7.5 MB/s)

20 Hz+80 Hz

(3.5 MB/s)

100 Hz (3.6 MB/s)

300 TB/yr

70 TB/yr

(reprocessing not

included)

BaBar Offline Systems

>400 nodes (+320 in Pd)

>20 physicists/engineers

Experiments Using ROOT for the

Offline

Experiment

Max Evt size

Evt rate

DAQ out

Tape Storage

STAR

20 MB

1 Hz

20 MB/s

200 TB/yr

3

>400

Phobos

300 kB

100 Hz

30 MB/s

400 TB/yr

3

>100

Phenix

116 kB

200 Hz

17 MB/s

200 TB/yr

12

600

Hades

9 kB (compr.)

33 Hz

300 kB/s

1 TB/yr

5

17

Blast

0.5 kB

500 Hz

250 kB/s

5

55

Meg

1.2 MB

100 Hz

3.5 MB/s

70 TB/yr

Subdetectors Collaborators

3

Incidentally……

All the above experiments use the ROOT framework and I/O system

BABAR former Offline Coordinator now at STAR (T. Wenaus) moved to

ROOT

BABAR I/O is switching from Objy to ROOT

Adopted by Alice Online+Offline with the most demanding requirements

regarding raw data processing/storage.

– 1.25 GBytes/s

– 2 PBytes/yr

Large number of experiments (>30) using ROOT world-wide ensures opensource style support

Requirements for HEP software

architecture or framework

Easy interface with existing packages:

– Geant3 , Geant4, Event generators

Simple structure to be used by non-computing experts

Portability

Experiment-wide framework

Use a world-wide accepted framework, if possible

Collaboration-specific framework is less likely

to survive in the long term

ROOT I/O Benchmark

Phobos: 30 MB/s - 9 TB (2001)

– Event size: 300 kB

– Event rate: 100 Hz

NA57 MDC1: 14 MB/s - 7 TB (1999)

– Event size: 500kB

– Event rate: 28 Hz

Alice MDC2: 100 MB/s - 23 TB (2000)

– Event size: 72 Mbytes

CDF II: 20 MB/s - 200 TB (2003)

– Event size: 400kB

– Event Rate: 75 Hz

ROOT I/O Performance Check #1:

Phobos: 30 MB/s-9 TB (2001)

Event size: 300 kB

Event rate: 100 Hz

Real Detector

ROOT I/O used between Evt builder (ROOT code) and HPSS

Raid (2 disks * 2 SCSI ports only used to balance CPU load)

30 MB/sec data transfer not limited by ROOT streamer (CPU

limited)

With additional disk arrays estimated throughput > 80 MB/sec

Farm: 10 nodes running Linux

rootd File I/O Benchmark

2300 evt read in a loop (file access only, no reco, no selection)

Results: 17 evt/sec

rootd(aemon) transfers data to actual node with 4.5 MB/sec

Inefficient design: ideal situation for PROOF

Global throughput (MB/s)

Raw Performances (Alice MDC2)

140.0

120.0

100.0

80.0

60.0

40.0

20.0

0.0

1

2

3

Pure Linux setup

20 data sources

FastEthernet local connection

4

5

6

7

8

9

10 11 12 13 14 15 16 17 18 19 20

Number of event builders

Experiences with Root I/O

Many experiments are using Root I/O today or

planning to use it in the near future:

RHIC (started last summer)

– STAR

100 TB/year + MySQL

– PHENIX 100 TB/year + Objy

– PHOBOS 50 TB/year + Oracle

– BRAHMS 30 TB/year

JLAB (starting this year)

– Hall A,B,C, CLASS >100 TB/year

FNAL (starting this year)

– CDF 200 TB/year + Oracle

– MINOS

Experiences with Root I/O

DESY

– H1

moving from BOS to Root for DSTs and

microDSTs 30 TB/year DSTs + Oracle

– HERA-b extensive use of Root + RDBMS

– HERMES moving to Root

– TESLA Test beam facility have decided for Root, expect

many TB/year

GSI

– HADES Root everywhere + Oracle

PISA

– VIRGO > 100 TB/year in 2002 (under discussion)

Experiences with Root I/O

SLAC

– BABAR >5 TB microDSTs, upgrades under way + Objy

CERN

– NA49 > 1 TB microDSTs + MySQL

– ALICE MDC1 7 TB,

–

–

–

–

–

MDC2 23 TB + MySQL

ALICE MDC3 83 TB in 10 days 120 MB/s (DAQ->CASTOR)

NA6i starting

AMS Root + Oracle

ATLAS, CMS test beams

ATLAS,LHCb, Opera have chosen Root I/O against Objy

+ several thousand people using Root like PAW

LAN/WAN files

Files and Directories

– a directory holds a list of named objects

– a file may have a hierarchy of directories (a la Unix)

– ROOT files are machine independent

– built-in compression

Local file

Support for local, LAN and WAN files

– TFile f1("myfile.root")

– TFile f2("http://pcbrun.cern.ch/Renefile.root")

– TFile f3("root://cdfsga.fnal.gov/bigfile.root")

– TFile f4("rfio://alice/run678.root")

Remote file

access via

a Web server

Remote file

access via

the ROOT daemon

Access to a file

on a mass store

hpps, castor, via RFIO

Support for HSM Systems

Two popular HSM systems are supported:

– CASTOR

developed by CERN, file access via RFIO API and

remote rfiod

– dCache

developed by DESY, files access via dCache API and

remote dcached

TFile *rf = TFile::Open(“rfio://castor.cern.ch/alice/aap.root”)

TFile *df = TFile::Open(“dcache://main.desy.de/h1/run2001.root”)

Parallel ROOT Facility

Data Access Strategies

Each slave get assigned, as much as possible,

packets representing data in local files

If no (more) local data, get remote data via rootd

and rfio (needs good LAN, like GB eth)

The PROOF system allows:

– parallel analysis of trees in a set of files

– parallel analysis of objects in a set of files

– parallel execution of scripts

on clusters of heterogeneous machines

Parallel Script Execution

Local PC

root

#proof.conf

slave node1

slave node2

slave node3

slave node4

stdout/obj

ana.C

Remote PROOF Cluster

proof

proof

node1

ana.C

$ root

root [0] .x ana.C

root [1] gROOT->Proof(“remote”)

root [2] gProof->Exec(“.x ana.C”)

TFile

proof

node2

proof

*.root

*.root

TNetFile

*.root

node3

proof = master server

proof = slave server

proof

node4

*.root

A Proposal for the MEG Offline

Architecture

Computing Model

General Architecture

Database Model

Montecarlo

Offline Organization

Corrado Gatto

PSI 9/2/2004

Computing Model: Organization

Based on a distributed computing scheme with a hierarchical

architecture of sites

Necessary when software resources (like the software groups

working on the subdetector code) are deployed over several

geographic regions and need to share common data (like the

calibration).

Also important when a large MC production involves several

sites.

The hierarchy of sites is established according to the computing

resources and services the site provides.

Computing Model: MONARC

A central site, Tier-0

– will be hosted by PSI.

Regional centers, Tier-1

– will serve a large geographic region or a country.

– Might provide a mass-storage facility, all the GRID services, and an adequate

quantity of personnel to exploit the resources and assist users.

Tier-2 centers

–

–

–

–

–

Will serve part of a geographic region, i.e., typically about 50 active users.

Are the lowest level to be accessible by the whole Collaboration.

These centers will provide important CPU resources but limited personnel.

They will be backed by one or several Tier-1 centers for the mass storage.

In the case of small collaborations, Tier-1 and Tier-2 centers could be the

same.

Tier-3 Centers

– Correspond to the computing facilities available at different Institutes.

– Conceived as relatively small structures connected to a reference Tier-2 center.

Tier-4 centers

– Personal desktops are identified as Tier-4 centers

Data Processing Flow

STEP 1 (Tier-0)

– Prompt Calibration of Raw data (almost Real Time)

– Event Reconstruction of Raw data (within hours of PC)

– Enumeration of the reconstructed objects

– Production of three kinds of objects per each event:

ESD (Event Summary Data)

AOD (Analysis Object Data)

Tag objects

– Update of the database of calibration data, (calibration

constants, monitoring data and calibration runs for all the

MEG sub-detectors).

– Update the Run Catalogue

– Post the data for Tier-1 access

Data Processing Flow

STEP 2 (Tier-1)

– Some reconstruction (probably not needed at MEG)

– Eventual reprocessing

– Mirror locally the reconstructed objects.

– Provide a complete set of information on the

production (run #, tape #, filenames) and on the

reconstruction process (calibration constants, version

of reconstruction program, quality assessment, and

so on).

– Montecarlo production

– Update the Run catalogue.

Data Processing Flow

STEP 3 (Tier-2)

– Montecarlo production

– Creation of DPD (Derived Physics Data) objects.

– DPD objects will contain information specifically

needed for a particular analysis.

– DPD objects are stored locally or remotely and might

be made available to the collaboration.

Data Model

ESD (Event Summary Data)

–

contain the reconstructed tracks (for example, track pt, particle Id, pseudorapidity and phi,

and the like), the covariance matrix of the tacks, the list of track segments making a track

etc…

–

AOD (Analysis Object Data)

–

Tag objects

–

contain information on the event that will facilitate the analysis (for example, centrality,

multiplicity, number of electron/positrons, number of high pt particles, and the like).

identify the event by its physics signature (for example, a Higgs electromagnetic decay and

the like) and is much smaller than the other objects. Tag data would likely be stored into a

database and be used as the source for the event selection.

DPD ( Derived Physics Data)

–

–

–

–

are constructed from the physics analysis of AOD and Tag objects.

They will be specific to the selected type of physics analysis (ex: mu->e gamma, mu->e e e)

Typically consist of histograms or ntuple-like objects.

These objects will in general be stored locally on the workstation performing the analysis,

thus not add any constraint to the overall data-storage resources

Building a Modular System

Use ROOT’s

Folders

Folders

A Folder can contain:

other Folders

an Object or multiple Objects.

a collection or multiple collections

Folders Types

Tasks

Data

Folders Interoperate

Data Folders are filled by Tasks (producers)

Data Folders are used by Tasks (consumers)

Folders Type: Tasks

Reconstructioner

1…3

Reconstructioner

(per detector)

Digitizer

(per detector)

Clusterizer

(per detector)

DQM

1…3

Fast Reconstructioner

(per detector)

Analizer

Analizer

Alarmer

Digitizer

{User code}

Clusterizer

{User code}

Folders Type: Tasks

Calibrator

1…3

Calibrator

(per detector)

Histogrammer

(per detector)

Aligner

1…3

Aligner

(per detector)

Histogrammer

(per detector)

Folders Type: Tasks

Vertexer

1…3

Vertexer

(per detector)

Histogrammer

(per detector)

Trigger

1…3

Trigger

(per detector)

Histogrammer

(per detector)

Data Folder Structure

Main.root

Constants

Header

TreeeH

Run

Header

Conditions

Configur.

Event(i)

1…n

DCH.Hits.root

Event #1

Event #2

TreeeH

TreeH

Raw Data

DC

EMC

TOF

Hits

DCH.Digits.root

Hits

Event #1

Event #2

Hits

TreeeD

TreeD

Reco Data

DC

EMC

TOF

MC only

Kinematics

Particles

Track Ref.

Digi

Track

Particles

Kine.root

Event #1

Event #2

TreeK

TreeK

Files Structure of « raw » Data

1 common TFile + 1 TFile per detector +1 TTree per event

TTree0,…i,…,n : kinematics

main.root

DCH.Hits.root

TClonesArray

TParticles

MEG: Run Info

TTree0,…i,…,n : hits

TBranch : DCH

TClonesArray

TreeH0,…i,…,n : hits

TBranch : EMC

TClonesArray

•

•

•

EMC.Hits.root

Files Structure of « reco » Data

Detector wise splitting

DCH.Digits.root

•• •

•

•

•

DCH.Reco.root

SDigits.root

EMC.Digits.root

•

•

•

•• •

Reco.root

EMC.Reco.root

Each task generates one TFile

• 1 event per TTree

• task versioning in TBranch

TTree0,…i,…n

TBranchv1,…vn

TClonesArray

Run-time Data-Exchange

Post transient data to a white board

Structure the whiteboard according to detector substructure & tasks results

Each detector is responsible for posting its data

Tasks access data from the white board

Detectors cooperate through the white board

Whiteboard Data Communication

Class 1

Class 2

Class 8

Class 3

Class 7

Class 4

Class 6

Class 5

Coordinating Tasks & Data

Detector stand alone (Detector Objects)

– Each detector executes a list of detector actions/tasks

– On demand actions are possible but not the default

– Detector level trigger, simulation and reconstruction are implemented as clients of

the detector classes

Detectors collaborate (Global Objects)

– One or more Global objects execute a list of actions involving objects from several

detectors

The Run Manager

– executes the detector objects in the order of the list

– Global trigger, simulation and reconstruction are special services controlled by the

Run Manager class

The Offline configuration is built at run time by executing a

ROOT macro

Run manager Structure

GlobalTheOffline

executes

Run Manager

Run Class

the detector objects

in the order of the list

MC Run Manager

Run Class

Each detector

executes a list

of detector tasks

DCH

One or more

Global Objects

execute a list of tasks

involving objects

from several detectors

Detector Class

Detector

tasks

ROOT

Data Base

Tree

On demand actions

Branches

are possible but

not the default

Detector Class

Detector

tasks

Global Reco

EMC

Detector Class

Detector

tasks

TOF

Detector Class

Detector

tasks

Detector Level Structure

List of

detectors

DCH

Detector Class

DCH Simulation

Hits

Branches

of a Root Tree

DetectorTask Class

Digits

TrigInfo

DCH Digitization

Local tracks

DetectorTask Class

List of

detector

tasks

DCH Trigger

DetectorTask Class

DCH Reconstruction

DetectorTask Class

The Detector Class

Base class for MEG subdetectors

modules.

Both sensitive modules (detectors)

and non-sensitive ones are

described by this base class. This

class

supports the hit and digit trees

produced by the simulation

supports the the objects produced

by the reconstruction.

This class is also responsible for

building the geometry of the

detectors.

AliTPC

AliDetector

DCH

Detector

actions

Detector

tasks

Detector Class

AliTOF Class

Module

AliDetector

DCHGeometry

Detector

actions

Geometry Class

-CreateGeometry

AliTRD

AliDetector

-BuildGeometry

-CreateMaterials

AliFMD

AliDetector

MEG Montecarlo

Organization

The Virtual Montecarlo

Geant3/Geant4 Interface

Generator Interface

The Virtual MC Concept

Virtual MC provides a virtual interface to Monte Carlo

It enables the user to build a virtual Monte Carlo application

independent of any actual underlying Monte Carlo

implementation itself

The concrete Monte Carlo (Geant3, Geant4, Fluka) is

selected at run time

Ideal when switching from a fast to a full simulation: VMC

allows to run different simulation Monte Carlo from the same

user code

The Virtual MC Concept

User

Code

VMC

Reconstruction

Visualisation

Generators

G3

G3 transport

G4

G4 transport

FLUKA

FLUKA

transport

Virtual

Geometrical

Modeller

Geometrical

Modeller

Running a Virtual Montecarlo

Transport

engine

selected

at run time

Generators

Run Control

Fast MC

FLUKA

Geant3.2 Geant4

1

Root particle stack

Virtual

MC

Root

Output

File

hits structures

Geometry

Simplified

Root geometry

Database

Generator Interface

TGenerator is an abstract base class, that defines

the interface of ROOT and the various event

generators (thanks to inheritance)

Provide user with

– Easy and coherent way to study variety of physics signals

– Testing tools

– Background studies

Possibility to study

– Full events (event by event)

– Single processes

– Mixture of both (“Cocktail events”)

Data Access: ROOT + RDBMS Model

ROOT

files

Event Store

Oracle

MySQL

Calibrations

histograms

Run/File

Catalog

Trees

Geometries

Offline Organization

No chance to have enough people at one site to

develop the majority of the code of MEG.

Planning based on maximum decentralisation.

– All detector specific software developed at outside

institutes

– Few people directly involved today

Off-line team responsible for

– central coordination, software distribution and

framework development

– prototyping

Offline Team Tasks:

I/O Development:

–

–

–

–

Offline Framework:

–

–

–

–

Interface to DAQ

Distributed Computing (PROOF)

Interface to Tape

Data Challenges

ROOT Installation and Maintenance

Main program Implementation

Container Classes

Control Room User Interface (Run control, DQM, etc…)

Database Development (Catalogue and

Conditions):

– Installation

– Maintenance of constant

– Interface

Offline Team Tasks 2:

Offline Coordination:

– DAQ Integration

– Coordinate the work of detector software subgroups

Reconstruction

Calibration

Alignment

Geometry DB

Histogramming

– Montecarlo Integration

Data Structure

Geometry DB

– Supervise the production of collaborating classes

– Receive, test and commit the code from individual subgroups

Offline Team Tasks 3:

Event Display

Coordinates the Computing

– Hardware Installation

– Users and queue coordination

– Supervise Tier-1, Tier-2 and Tier-3 computing facilities

DQM

– At sub-event level responsibility is of subdetector

– Full event is responsability of the offline team

Documentation

Software Project Organisation

Representatives from

Detector Subgroups

DCH

reco, calib & histo)

EMC

reco, calib & histo

Representatives from

Institutions

TOF

reco, calib & histo

Offline

Team

Representative from

Montecarlo Team

Generator

GEANT

Digi

Production

Database

Geometry

Geometry DB

Manpower Estimate

Activity

Off-line Coordination

Off-line Framework Develop.

Global Reco Develop. (?)

Databases (Design & Maint)

QA & Documentation

I/O Development

MDC

Event Display & UI

Syst. + web support

Physics Tools

Production

Total Needed

Profile

PH

PH/CE

PH

PH

PH/CE

CE/PH

CE

CE

CT

2004

2005

0,8

1,2

1,0

1,0

0,5

1,0

0,5

0,5

2006

0,8

1,2

1,0

1,0

0,5

1,0

0,5

1,0

1,0

1,0

2007

0,8

1,2

1,0

1,0

0,7

1,0

0,5

1,0

1,0

1,0

PH/CE

6,5

9,0

9,2

0,8

1,2

1,0

1,0

1,0

0,5

0,5

2,0

1,0

9,0

Proposal

Migrate immediately to C++

– Immediately abandon PAW

– But accept GEANT3.21 (initially)

Adopt the ROOT framework

– Not worried of being dependent on ROOT

– Much more worried being dependent on G4, Objy....

Impose a single framework

– Provide central support, documentation and distribution

– Train users in the framework

Use Root I/O as basic data base in combination with an

RDBMS system (MySQL, Oracle?) for the run

catalogue.