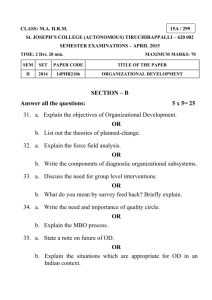

(SEE): Model background, methodology, results from first two years

advertisement