HartzWGISSoptiNets.v3

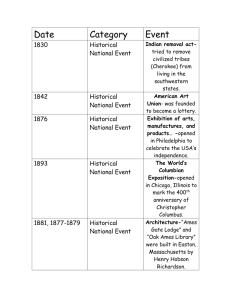

Columbia Supercomputer and the NASA Research & Education Network
WGISS 19: CONAE
March, 2005
David Hartzell
NASA Ames / CSC
dhartzell@arc.nasa.gov

Agenda
• Columbia
• NREN-NG
• Applications

NASA’s Columbia System
• NASA Ames has embarked on a joint SGI and Intel Linux supercomputer.
  – Initially twenty 512-processor Intel IA-64 SGI Altix nodes
  – NREN-NG: a supporting optical WAN
    • NLR will be the optical transport for this network, delivering high bandwidth to other NASA centers.
• Achieved 51.9 Teraflops with all 20 nodes in Nov 2004
• Currently 2nd on the Top500 list
  – Other systems have come on-line that are now faster.

Columbia
[Image-only slide]

Preliminary Columbia Uses

Space Weather Modeling Framework (SWMF)
SWMF has been developed at the University of Michigan under the NASA Earth Science Technology Office (ESTO) Computational Technologies (CT) Project to provide “plug and play” Sun-to-Earth simulation capabilities to the space physics modeling community.

Estimating the Circulation and Climate of the Ocean (ECCO)
Continued success in ocean modeling has improved the model, and the work continued during the very busy Return to Flight use of Columbia.

Finite-volume General Circulation Model (fvGCM)
Very promising results from 1/4° fvGCM runs encouraged its use for real-time weather prediction during hurricane seasons; one goal is to predict hurricanes accurately in advance.

Return to Flight (RTF)
Simulations of tumbling debris from foam and other sources are being used to assess the threat that shedding such debris poses to various elements of the Space Shuttle Launch Vehicle.

20 Nodes in Place
• Kalpana was on site at the beginning of the project.
• The first two new systems were received on 28 June and placed into service that week.
• As of late October 2004, all systems were in place.
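As a rough sanity check on the figures from the "NASA’s Columbia System" slide, the sketch below derives the total processor count and the achieved rate per processor. It assumes all 20 nodes hold exactly 512 processors each and that the 51.9 Teraflops figure applies to the full system; the function and constant names are illustrative only.

```python
# Back-of-the-envelope totals from the slide's own numbers.
# Assumption: every node is a full 512-processor system.
NODES = 20
PROCESSORS_PER_NODE = 512
ACHIEVED_TFLOPS = 51.9  # figure quoted for all 20 nodes, Nov 2004


def total_processors(nodes: int = NODES, per_node: int = PROCESSORS_PER_NODE) -> int:
    """Total processor count across the system."""
    return nodes * per_node


def gflops_per_processor(tflops: float = ACHIEVED_TFLOPS) -> float:
    """Achieved Gigaflops per processor, averaged over the whole system."""
    return tflops * 1000 / total_processors()


print(f"processors: {total_processors()}")
print(f"achieved per processor: {gflops_per_processor():.2f} GFLOPS")
```

Under these assumptions the system has 10,240 processors, averaging a little over 5 Gigaflops each.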

Power
• Ordered and received twenty 125 kW PDUs
• Upgrade / installation of power distribution panels

Cooling
• New floor tiles
• Site visits conducted
• Plumbing in HSPA and HSPB complete
• Heating problem contingency plans developed

Networking
• Each Columbia node has four 1 GigE interfaces
• And one 10 GigE interface
• Plus Fibre Channel and InfiniBand
• Required all new fiber and copper infrastructure, plus switches

Components
[Diagram labels: RTF 128p front end; 10GigE; InfiniBand; 12 × A512p and 8 × T512p nodes; 2 × FC Switch 128p; 8 × Fibre Channel 20TB; 8 × SATA 35TB]
• Front End
  – 128p Altix 3700 (RTF)
• Networking
  – 10GigE Switch, 32-port
  – 10GigE Cards (1 per 512p)
  – InfiniBand Switch (288-port)
  – InfiniBand Cards (6 per 512p)
  – Altix 3900 2048 Numalink Kits
• Compute Node
  – Altix 3700: 12 × 512p
  – “Altix 3900”: 8 × 512p
• Storage Area Network
  – Brocade Switch, 2 × 128-port
• Storage (440 TB)
  – FC RAID: 8 × 20 TB (8 racks)
  – SATA RAID: 8 × 35 TB (8 racks)

NREN Goals
• Provide a wide-area, high-speed network for large data distribution and real-time interactive applications
• Provide access to NASA research and engineering communities
  – Primary focus: supporting distributed data access to/from Columbia
• Provide access to federal and academic entities via peering with High Performance Research and Engineering Networks (HPRENs)
• Perform early prototyping and proofs-of-concept of new technologies that are not ready for the production network (NASA Integrated Services Network - NISN)

NREN-NG
• The NREN Next Generation (NG) wide-area network will be expanded from OC-12 to 10 GigE within the next 3-4 months to support Columbia applications.
• NREN will “ride” the National Lambda Rail (NLR) to reach the NASA research centers and major exchange locations.
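To put the OC-12 to 10 GigE upgrade in perspective, here is a minimal sketch comparing nominal line rates. It relies on the standard SONET relationship that an OC-n line runs at n × 51.84 Mbit/s and treats 10 GigE as a nominal 10,000 Mbit/s; usable payload rates are lower in both cases, and the helper name is illustrative.

```python
# Nominal line rates only; protocol overhead is ignored (assumption).
OC1_MBPS = 51.84          # SONET OC-1 line rate in Mbit/s
TEN_GIGE_MBPS = 10_000.0  # nominal 10 Gigabit Ethernet rate in Mbit/s


def oc_rate_mbps(n: int) -> float:
    """Nominal line rate of a SONET OC-n circuit in Mbit/s."""
    return n * OC1_MBPS


for n in (3, 12):
    rate = oc_rate_mbps(n)
    print(f"OC-{n}: {rate:.2f} Mbit/s, 10 GigE is {TEN_GIGE_MBPS / rate:.1f}x faster")
```

On nominal rates alone, moving from OC-12 to 10 GigE is roughly a sixteen-fold increase in raw capacity.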

Approach: Implementation Plan, Phase 1
[Map: NREN-NG Target. Labels: ARC/NGIX-West, NLR Sunnyvale, StarLight, NLR Chicago, GRC, NLR Cleveland, NGIX-East, GSFC, MATP, LRC, JPL, NLR Los Angeles, MSFC, NLR MSFC, JSC, NLR Houston. Legend: NREN Sites, Peering Points, 10 GigE]

NREN-NG Progress
• Equipment order has been finalized.
• Start construction of network from West to East
• Temporary 1 GigE connection to JPL is in place, moving to 10 GigE by end of summer.
• Current NREN paths to/from Columbia are seeing gigabit/s transfers
• NREN-NG will ride the National Lambda Rail network in the US

The NLR
• National Lambda Rail (NLR).
• NLR is a U.S. consortium of education institutions and research entities that partnered to build a nationwide fiber network for research activities.
  – NLR offers wavelengths and/or Ethernet transport services to members.
  – NLR is buying a 20-year right-to-use of the fiber.

NLR – Optical Infrastructure - Phase 1
[Map: Seattle, Portland, Boise, Chicago, Denver, Ogden/Salt Lake, Clev, Pitts, KC, Wash DC, Raleigh, LA, San Diego, Atlanta, Jacksonville. Legend: NLR Route, NLR Layer 1]

Some Current NLR Members
• CENIC
• Pacific Northwest GigaPOP
• Pittsburgh Supercomp. Center
• Duke (coalition of NC universities)
• Mid-Atlantic Terascale Partnership
• Cisco Systems
• Internet2
• Florida LambdaRail
• Georgia Institute of Technology
• Committee on Institutional Cooperation (CIC)
• Texas / LEARN
• Cornell
• Louisiana Board of Regents
• University of New Mexico
• Oklahoma State Regents
• UCAR/FRGP
Plus agreements with:
• SURA (AT&T fiber donation)
• Oak Ridge National Lab (ORNL)

NLR Applications
• Pure optical wavelength research
• Transport of research and education traffic (like Internet2/Abilene today)
• Private transport of member traffic
• Experience operating and managing an optical network
  – Development of new technologies to integrate optical networks into existing legacy networks

Columbia Applications
Distribution of Large Data Sets
– Finite Volume General Circulation Model (fvGCM): global atmospheric model
– Requirements (Goddard – Ames):
  • ~23 million points
  • 0.25 degree global grid
  • 1 Terabyte set for 5 day forecast

| Scenario | Bandwidth [Gigabits/sec] (LAN/WAN) | Data Transfer Time (hours) |
|---|---|---|
| Current GSFC - Ames Performance | 1.00 / 0.155 | 17 - 22 |
| GSFC - Ames Performance (1/10 Gig) | 1.00 / 10.00 | 3 - 5 |
| GSFC - Ames Performance (Full 10 Gig) | 10.00 / 10.00 | 0.4 - 1.1 |

– No data compression required prior to data transfer
– Assumes BBFTP for file transfers, instead of FTP or SCP

Columbia Applications
• Distribution of Large Data Sets
  ECCO: Estimating the Circulation and Climate of the Ocean. Joint activity among Scripps, JPL, MIT & others
• Run requirements are increasing as model scope and resolution are expanded:
  – November ’03 = 340 GBytes / day
  – February ’04 = 2000 GBytes / day
  – February ’05 = 4000 GBytes / day (est)

| Scenario | Bandwidth [Gigabits/sec] (LAN/WAN) | Data Transfer Time (hours) |
|---|---|---|
| Previous NREN Performance | 1.0 / 0.155 | 6 - 12 |
| NREN Feb 2005 (CENIC 1G) | 1.0 / 1.0 | 0.6 - 0.9 |
| Projected NREN (CENIC 10G) | 10.0 / 10.0 | 0.2 - 0.4 |

– Bandwidth can be the limiting factor for distributed data-intensive applications
– Need high-bandwidth alternatives and better file transfer options

hyperwall-1: large images

Columbia Applications
Disaster Recovery/Backup
– Transfer up to seven 200 gigabyte files per day between Ames and JPL
– Limiting factors
  • Bandwidth: recent upgrade from OC-3 POS to 1 Gigabit Ethernet
  • Compression: 4:1 compression utilized for WAN transfers at lower bandwidths. Compression limited bandwidth to 29 Mbps (end host constraint)

Projected Transfer Improvement

| Path | Data Compression Required | Bandwidth [Gigabits/sec] (LAN/WAN) | Data Transfer Time (hours) |
|---|---|---|---|
| JPL - Ames (OC-3 POS) | Yes (4:1) | 1.00 / 0.155 | 27 - 31 |
| JPL - Ames (CENIC 1 GigE) | No | 1.00 / 1.00 | 4.4 - 6.2 |
| JPL - Ames (CENIC 10 GigE) | No | 10.00 / 10.00 | 0.6 - 1.5 |

[Diagram labels: ARC, JPL]

Thanks.
David Hartzell
dhartzell@arc.nasa.gov
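The transfer-time tables in the Columbia Applications slides can be sanity-checked against an idealized lower bound. The sketch below is not the method used to produce the slide figures; it assumes decimal units (1 TB = 10^12 bytes), that the slower of the LAN and WAN links sets the rate, and that there is no protocol, disk, or end-host overhead. The function name and scenario list are illustrative.

```python
# Idealized lower bound on bulk transfer time (assumptions: decimal units,
# bottleneck = slower of LAN and WAN, zero protocol or end-host overhead).
TB = 1e12  # bytes
GB = 1e9   # bytes


def ideal_transfer_hours(data_bytes: float, lan_gbps: float, wan_gbps: float) -> float:
    """Hours needed to move data_bytes at the slower of the two link rates."""
    bottleneck_bps = min(lan_gbps, wan_gbps) * 1e9
    return data_bytes * 8 / bottleneck_bps / 3600


# (label, bytes, LAN Gbit/s, WAN Gbit/s); sizes and rates come from the slides
scenarios = [
    ("fvGCM 1 TB, 1.00 / 0.155", 1 * TB, 1.00, 0.155),
    ("fvGCM 1 TB, 1.00 / 10.00", 1 * TB, 1.00, 10.00),
    ("fvGCM 1 TB, 10.00 / 10.00", 1 * TB, 10.00, 10.00),
    ("Backup 7 x 200 GB, 1.00 / 1.00", 7 * 200 * GB, 1.00, 1.00),
    ("Backup 7 x 200 GB, 10.00 / 10.00", 7 * 200 * GB, 10.00, 10.00),
]

for label, size, lan, wan in scenarios:
    print(f"{label}: {ideal_transfer_hours(size, lan, wan):.2f} h")
```

Under these assumptions the 1 TB fvGCM set needs about 14.3 hours over a 0.155 Gbit/s WAN and about 2.2 hours when a 1 Gbit/s LAN is the bottleneck, against the 17 - 22 and 3 - 5 hours reported on the slides. The reported figures sit above the ideal bound, as expected once real-world overheads are included.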