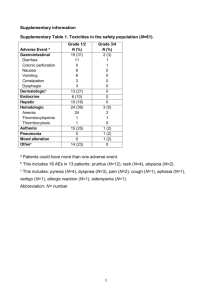

Layer 2 and Layer 3 Network Overview

National LambdaRail Layer 2 and 3 Networks: Update
17 July 2005
Joint Techs Meeting, Burnaby, BC
Caren Litvanyi
NLR Layer2/3 Service Center
Global Research NOC at Indiana University
litvanyi@grnoc.iu.edu

Layer 2

What’s already been Deployed (L2) 7/17/05
[Map of Cisco 6509 switch sites: SEA, CHI, CLE, PIT, DEN, SVL, NYC, KAN, WDC, RAL, LAX, ALB, TUL, ATL, ELP, JAC, BAT, HOU]

NLR L2 Services Summary
Goals
• “To provide a high speed, reliable, flexible, and economical layer 2 transport network offering to the NLR community, while remaining on the cutting-edge of technology and service offerings through aggressive development and upgrade cycles.”
• Provide circuit-like options for users who can’t use, can’t afford, or don’t need, a 10G Layer1 wave.

Experiment with and provide L2 capabilities
– Create distributed nationwide broadcast domain.
– Create tools and procedures for automated and user-controlled provisioning of L2 resources.
– Provide a level of openness not possible from ISPs, including visibility into the devices, measurement, and performance statistics.
– Refine services through member feedback so they are tailored to meet the needs of the research community.

NLR Layer 2 locations
Jacksonville: Level3, 814 Phillips Hwy
Atlanta: Level3, 345 Courtyard, Suite 9 (?)
Raleigh: Level3, 5301 Departure Drive
WashDC: Level3, 1755/1757 Old Meadow Rd, Suite 111, McLean VA
NYC: MANLAN, 32 Avenue of the Americas, 24th Floor
Pittsburgh: Level3, 143 South 25th
Cleveland: Level3, 4000 Chester Avenue
Chicago: Level3, 111 N. Canal, Suite 200
Kansas City: Level3, 1100 Walnut Street, MO
Denver: Level3, 1850 Pearl St, Suite 4
Seattle: PacWave, 1000 Denny Way (Westin)
Sunnyvale: Level3, 1360 Kifer Road, Suite 251
Los Angeles: Equinix, 818 W. 7th Street, 6th Floor
El Paso: Wiltel, 501 W. Overland
Houston: Wiltel, 1124 Hardy St.
Tulsa: Wiltel, 18 W. Archer
Baton Rouge: Wiltel, No Address Yet
Albuquerque: Level3, 104 Gold St.
(Blue means that site is installed and up, ready to take connections)

Layer 2 Initial Logical Topology
[Diagram of Cisco 6509 switches joined by 10GE waves. Site labels: SEA, CHI, SVL, DEN, NYC, PIT, CLE, KAN, WDC, RAL, LAX, ALB, TUL, ATL, ELP, JAC, BAT, HOU]

NLR L2 Hardware - Cisco Catalyst 6509-NEB-A
Dual Sup720-3BXL PFC3/MSFC3
one 4 x 10GE WS-X6704-10GE (initially)
one 24-port 1GE SFP WS-X6724-SFP
DC Power: 2 - 2500W DC power supplies
10GE will change to the 2-port 6802 cards when they become available. The 4-port cards will be retained for other connections.
SFP support - most anything we can get to work, but LX is the default. SX, ZX, CWDM upon request.
No copper blade by default at this time.

KSC!

Denver!

Generic NLR L1, L2, L3 PoP Layout
[Diagram labels: Colo; West and East DWDM; 15808; 15454; 6509; CRS-1; NLR demarc. Legend: 10G wave, link or port; 1G wave, link or port]

NLR L2 User/Network Interface
• Physical connection will initially be a 1 Gbps LX connection over singlemode fiber, which the member connects or arranges to connect.
• One 1GE connection to the layer 2 network is part of NLR membership. Another for L3 is optional.
• Additional connections/services will be available for some cost.

NLR L2 User/Network Interface
• Tagged or native (untagged) will be supported.
• Trunked (802.1q) is expected default configuration.
• VLAN numbers will be negotiated.
• LACP/etherchannel will be supported.
• Q-in-Q supported, vlan translation only if needed.

More good-to-know about L2 connections
• Mac learning limit - initially 256, negotiable.
• No CDP on the customer-facing edge.
• No VTP or GVRP.
• Rapid per-vlan spanning tree; root guard will be used.
• CoS will be rewritten, DSCP passed transparently.
• MTU can be standard, jumbo, or custom, but needs to allow the headroom for 802.1q and Q-in-Q.

More good-to-know about L2 connections
• Redundancy on point-to-point connections is optional via rapid spanning tree and a configured backup path.
• Some users may prefer deterministic performance (unchanging path) over having a backup path.
• Use Q-in-Q to tunnel BPDUs as requested.
• PIM snooping.

Initial Services
Dedicated Point to Point Ethernet – VLAN between 2 members with dedicated bandwidth from sub 1G to multiple 1G.
Best Effort Point to Multipoint – Multipoint VLAN with no dedicated bandwidth.
National Peering Fabric – Create a national distributed exchange point, with a single broadcast domain for all members. This can be run on the native vlan. This is experimental, and the service may morph.

Expected Near Term Services
Dedicated Multipoint: Dedicated bandwidth for multipoint connections (how to count?)
Scavenger: Support less-than-best-effort forwarding. This would be usable for all connections.
Connections: Support 10GE user ports. (Maybe this is right away rather than soon)

Possible Long-range Services
User-controlled Web-based provisioning and configuration – Allow users to automatically create new services, or reconfigure existing services on the network using a web-based tool.
Time-sensitive provisioning – Allow users to have L2 connections with bandwidth dedicated only at certain times of day, or certain days.

NLR L2 Monitoring & Measurement
• GRNOC will manage and monitor 24x7 for device, network, and connection health using existing tools as the baseline, including statistics gathering/reporting.
• Also maintains databases on equipment, connections, customer contacts, and configurations.
• Active measurement is currently the responsibility of the end users across their layer2 connections, but we are open to other ideas.
• A portal/proxy will be available to execute commands on the switches directly. Currently only non-service-disrupting commands are permitted.
https://ratt.uits.iu.edu/routerproxy/nlr/

Layer 3

What’s already been Deployed (L3) 6/22/05
[Map of Cisco CRS-1 8/S router sites. Site labels: SEA, CHI, DEN, NYC, CLE, PIT, WDC, RAL, LAX, ALB, TUL, ATL, BAT, JAC, HOU]

NLR L3 Services Summary
Goals: The purpose of the NLR Layer3 Network is to create a national routed infrastructure for network and application experiments, in a way that is not possible with current production commodity networks, network testbeds, or production R&E networks.
In addition to provided baseline services, network researchers will have the opportunity to make use of an advanced national “breakable” infrastructure to try out technologies and configurations.

NLR L3 Services Summary
Goals: A guiding design principle is flexibility. This is planned to be reflected in a more open AUP, willingness to prioritize some experimentation over baseline production services, and additional tools development.

NLR Layer 3 locations
Atlanta: Level3, 345 Courtyard, Suite 9
WashDC: Level3, 1755/7 Old Meadow Rd, McLean VA
NYC: MANLAN, 32 Avenue of the Americas, 24th floor
Chicago: Level3, 111 N. Canal, Suite 200
Denver: Level3, 1850 Pearl St, Suite 4
Seattle: PacWave, (Westin)
Los Angeles: Equinix, 818 W. 7th Street, 6th Floor
Houston: Wiltel, 1124 Hardy St.
(Blue means that site is installed and up)

Layer 3 Initial Logical Topology
[Diagram of Cisco CRS-1 routers joined by 10GE waves. Router labels: SEA, NYC, CHI, DEN, LAX, ATL, WDC, HOU. Other labels: NLR L2, PNWGP, ITN, Cornell, CIC, UCAR/FRGP, PSC, CENIC, MATP, Duke/NC, OK, UNM, LA, LEARN, GATech, FLR]

NLR Layer 3 Hardware
Cisco CRS-1 (half-rack, 8-slot)
2 route processors (RPs)
4 switch fabric cards
2 Power Entry Modules
2 control plane software bundle licenses (IOS-XR) with crypto
2 memory modules for each RP (required) – 2GB each
1 or 2 8x10GE line card(s)
1 multi-service card (MSC)
1 8x10GE PLIM
1 line card software license
1 extra MSC
1 extra line card software license
XENPAK 10G-LR optics (SC)

NLR Layer 3 Hardware
Things to note:
[Photo labels: FRONT (PLIM side), BACK (MSC side)]
• Providing 10GE only by default.
• 1GE is not yet available on the CRS.
• SONET is available but we did not purchase any (oc768, oc192, oc48).
• Redundant power and RPs.
• We will be participating in the beta program for the CRS.
• Midplane design.

CRS1 base HW configuration
Sites that had at least 7 of their 8 10GE interfaces assigned at initial installation receive a second 8x10GE, including the MSC and software license. These sites are Chicago, Denver, and Houston. These locations have a total of 12 XENPAK 10G-LR optics modules.
We have been calling the first configuration type “A”, and the configuration with the additional 8x10GE type “B”.
The NLR layer 3 network will initially consist of 5 type “A” routers and 3 type “B” routers.

Denver!

Generic NLR L1, L2, L3 PoP Layout
[Diagram labels: Colo; West and East DWDM; 15808; 15454; 6509; CRS-1; NLR demarc. Legend: 10G wave, link or port; 1G wave, link or port]

NLR L3 User/Network Interface
• Physical connection will be a 10 Gbps Ethernet (1310nm) connection over singlemode fiber, which the member connects or arranges to connect.
• One connection directly to the layer 3 network is part of NLR membership; a backup 1 Gbps VLAN through the layer 2 network is optional and included.
• Additional connections/services will be available for some cost.
• Trunked (802.1q) will be supported.
• Point-to-point connections (no 802.1q) are supported.

Connecting to NLR L3
[Diagram labels: Cisco CRS-1 router; Cisco 6509 switch; Regional Network router; NCLR router; LEARN router; FLR router. Sites: CHI, WDC, RAL, ATL, HOU, BAT, JAC. Legend: 10G wave, link or port; 1G wave, link or port]
HOU, RAL, and JAC are shown as examples; ALL other MetaPoPs get a dual backhauled connection as well.

NLR L3 Backbone Baseline Configuration
Dual IPv4 and IPv6
ISIS core IGP
BGP
IPv4 Multicast: PIM, MSDP, MBGP by default.
Investigating doing IPv6 multicast by default.
MPLS is not currently a planned default, but some can be supported upon request.

NLR L3 Services - Features
Day One
Connection - each member gets a 10GE connection and a VLAN backhauled over the L2 network to a second node if they desire.
General operations of the network, including base features (configuration with no experiment running), connections, and communication of experiments.
Future Possibilities:
Peering with other R&E networks.
Commodity Internet Connections or peering.
User-Controllable Resource Allocation.
Will be supported as experiments, and rolled into the base feature list if there is general use and interest.

NLR L3 Connection Interests
Private Test-lab Network Connections.
Route Advertiser Connections: Get active commodity routing table for experiments, but no actual commodity bandwidth drainage.
Pre-emptible Connections: Allow other types of connections to use unused ports on a temporary basis, such as for a conference or measurement project.

NLR L3 Services
“Layer 3 Services Document” - engineering subcommittee
Set user expectations for service on L3 network.
Make clear the experiment support model.
Service Expectations:
An SLA isn’t a good measure, since the network may appear “down” because of experiments.
The network may not have the same uptime as a production network, but it will have the same level of service and support as a production network.

NLR L3 Services - Experiment Support
Each experiment will have a representative from the L2/L3 Support Center and a representative from the ESC.
If necessary, the prospective experiment will be sent to the NNRC for review. (?)
L2/L3 Services staff will be responsible for scheduling network assets for experiments and will see the experiment through to completion.
In general, experiments will be scheduled on a first-come, first-served basis.

Layer 3 Network Conditions
Way of communicating the current state of the network to users.
Users may choose to automatically have their interfaces shut down under any Network Conditions they desire.
Users will receive notification of changes to Network Condition, with focused communication to those who will be turned on or off because of it.
Tools will be available for users to monitor and track Network Conditions.

Network Conditions
NetCon 7 - No Experiment Currently Active
NetCon 6 - Experiment Active, No Instability Expected
NetCon 5 - Possible Feature Instability/No General Instability Expected
NetCon 4 - Possible Network Instability
NetCon 3 - Congestion Expected
NetCon 2 - Probable Network Instability; Possible Impact on Connecting Networks
NetCon 1 - Network Completely Dedicated

NLR Layer 3 - discussion
What are members’ plans? Questions? Issues?
What do users want/need?
Tools?
Monitoring and measurement ability?
Direct access to login and configure routers?
A router “ghost” service?
Instruction/workshops?
Commodity access or ISP collaboration?
Collaboration with projects like PlanetLab?

Installation Overview
• Layer 2:
– 18 Cisco 6509s
• Layer 3:
– 8 Cisco CRS-1 (half-rack)
• General Strategy:
1. Focus on sites with main interest first
2. Grow network footprint linearly from there

A word about deployment in NLR Phase 2 Sites...
• In addition to installing Phase 2 optical hardware, Wiltel will ready sites for L2/L3.
• L2/L3 deployment in phase 2 and phase2/phase1 borders will depend on this.

Upcoming Schedule
[Columns: Site, L2, L3, Date. The L2 and L3 column marks are not preserved.]
Raleigh: June 20-22
Sunnyvale: June 28-29
Seattle: July 27-29
Atlanta: August 2-5
Washington: August 9-10
Jacksonville: August 1-2
LA: August 16-20
New York: September 6-9
Tulsa: August 9-10
Houston: August 23-25
El Paso: August 25-26
Baton Rouge: August 30-31
Albuquerque: October?

Thank you!
noc@nlr.net
Caren Litvanyi
litvanyi@grnoc.iu.edu
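
Supplementary sketch: Network Conditions bookkeeping

The Network Conditions slides list NetCon levels 1 through 7 and say that users may choose the conditions under which their interfaces are shut down, with focused notification to those turned on or off by a change. The Python sketch below is one possible way to model that bookkeeping, not a description of any NLR tool. The names `NetCon`, `interface_actions`, the member labels, and the `"shutdown"`/`"enable"` strings are all hypothetical; the code only computes who would be affected by a change and does not touch any router.

```python
from enum import IntEnum


class NetCon(IntEnum):
    """NetCon levels as listed on the Network Conditions slide."""
    NETWORK_COMPLETELY_DEDICATED = 1
    PROBABLE_NETWORK_INSTABILITY = 2
    CONGESTION_EXPECTED = 3
    POSSIBLE_NETWORK_INSTABILITY = 4
    POSSIBLE_FEATURE_INSTABILITY = 5
    EXPERIMENT_ACTIVE_NO_INSTABILITY = 6
    NO_EXPERIMENT_ACTIVE = 7


def interface_actions(old, new, shutdown_prefs):
    """Return {member: action} for members affected by a NetCon change.

    shutdown_prefs maps a (hypothetical) member name to the set of NetCon
    levels under which that member wants its interface shut down.
    Members whose interface state does not change are omitted, so the
    result is also the list of people needing focused notification.
    """
    actions = {}
    for member, levels in shutdown_prefs.items():
        was_down = old in levels
        now_down = new in levels
        if now_down and not was_down:
            actions[member] = "shutdown"
        elif was_down and not now_down:
            actions[member] = "enable"
    return actions


# Hypothetical member preferences, for illustration only.
example_prefs = {
    "member-a": {NetCon.NETWORK_COMPLETELY_DEDICATED,
                 NetCon.PROBABLE_NETWORK_INSTABILITY},
    "member-b": set(),  # never shut down automatically
    "member-c": {NetCon.PROBABLE_NETWORK_INSTABILITY},
}

if __name__ == "__main__":
    change = interface_actions(
        NetCon.NO_EXPERIMENT_ACTIVE,
        NetCon.PROBABLE_NETWORK_INSTABILITY,
        example_prefs,
    )
    for member, action in sorted(change.items()):
        print(member, action)
```

Preferences are stored as a set of levels rather than a single threshold because the slide says users may pick "any Network Conditions they desire", which need not be a contiguous range.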