Lecture 5

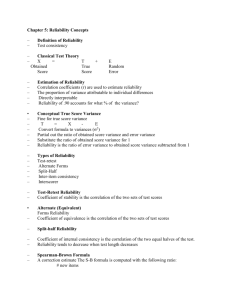

Reliability
Psych 395 - DeShon

How Do We Judge Psychological Measures?

Two concepts: Reliability and Validity

- Reliability: How consistent is the assessment over time, items, or raters? How reproducible are the measurements? How much measurement error is involved?
- Validity: How well does an assessment measure what it is supposed to measure? How accurate is our assessment? An assessment is valid if it measures what it purports to measure.

Correlation Review

Some Data from the 2005 Baseball Season

| Team | Payroll in $ millions (rank) | Win % | ERA | Attendance (millions) |
|---|---|---|---|---|
| Yankees | 208.31 (1st) | 58.6% | 4.52 | 4.09 |
| Red Sox | 123.51 (2nd) | 58.6% | 4.74 | 2.85 |
| White Sox | 75.18 (13th) | 61.1% | 3.61 | 2.34 |
| Tigers | 69.09 (15th) | 43.8% | 4.51 | 2.02 |
| Devil Rays | 29.68 (30th) | 41.4% | 5.39 | 1.14 |

Questions We Might Ask…

- How strongly is payroll associated with winning percentage?
- How strongly is payroll associated with making the playoffs?

How can we answer these?

- Option 1: Plot the data.
- Option 2: Quantify the association with the correlation coefficient.

The Correlation Coefficient

- Credited to Karl Pearson (1896).
- Measures the degree of linear association between two variables.
- Ranges from -1.0 to 1.0.
- The sign refers to direction:
  - Negative: As X increases, Y decreases.
  - Positive: As X increases, Y increases.

One Formula

Symbolized by r: the covariance of X and Y divided by the product of the SDs of X and Y.

$$ r = \frac{\mathrm{cov}_{XY}}{s_X s_Y} $$

Calculation of r for Payroll (X) and Winning Percentage (Y)

cov_XY = 1.13, s_X = 34.23, s_Y = .07

$$ r = \frac{\mathrm{cov}_{XY}}{s_X s_Y} = \frac{1.13}{(34.23)(0.07)} = \frac{1.13}{2.40} = .47 $$

Calculation of r for Payroll (X) and Making Post-Season (Y)

Y coded so that 1 = Playoffs, 0 = No.

cov_XY = 8.24, s_X = 34.23, s_Y = .45

$$ r = \frac{\mathrm{cov}_{XY}}{s_X s_Y} = \frac{8.24}{(34.23)(0.45)} = \frac{8.24}{15.42} = .53 $$

Examples of Correlations

Source: Meyer et al. (2001)

| Association | r |
|---|---|
| Test Anxiety and Grades | -.17 |
| SAT and Grades in College | .20 |
| GRE Quant. and Graduate School GPA | .22 |
| Quality of Marital Relationships and Quality of Parent-Child Relationships | .22 |
| Alcohol and Aggressive Behavior | .23 |
| Height and Weight | .44 |
| Gender and Height | .67 |

Commonly Used Rule of Thumb

- +/- .10 is Small
- +/- .30 is Medium
- +/- .50 is Large

Use these with care. These guidelines only provide a loose framework for thinking about the size of correlations.

Sources: Cohen (1988) and Kline (2004)

[Figure: a series of scatterplots of true scores (vertical axis) against observed scores (horizontal axis), both running from -4 to 4, one panel each for r = 0, .10, .20, .30, .40, .50, .60, .70, .80, .90, and 1.0]

Now Back to Reliability

Classical Test Theory

X = T + E, where X = Observed Score, T = True Score, and E = Error Score.

Consider the Construct of Self-Esteem

- Global self-esteem reflects a person’s overall evaluation of value and worth.
- William James (1890) argued that self-esteem was the result of an individual’s perceived successes divided by their pretensions.
- Rosenberg (1965) defined global self-esteem as an individual’s overall judgment of adequacy.
- We can’t directly observe self-esteem.

Measuring Self-Esteem

- We can ask people questions that reflect individual differences in self-esteem:
  - “I feel that I have a number of good qualities”
  - “I see myself as a person with high self-esteem”
- We assume that a “hidden” self-esteem variable causes people to respond to these questions.
- We do not want to assume that these items are perfect indicators of an individual’s level of self-esteem.

Classical Test Theory Assumptions

1. True scores and errors are uncorrelated (independent).
2. Errors across people average to zero.
3. Across repeated measurements, a person’s average score is approximately equal to his/her true score.

Thinking about Total Variability

If X = T + E, and true scores and errors are uncorrelated, then:

$$ \mathrm{var}(X) = \mathrm{var}(T) + \mathrm{var}(E) $$

Reliability Coefficients

Reliability coefficients reflect the proportion of true score variance to observed score variance:

$$ r_{xx} = \frac{\mathrm{var}(T)}{\mathrm{var}(X)} $$

Therefore reliabilities range from 0.0 (no true score variance) to 1.0 (all true score variance).

Classic Definition of Reliability

The ratio of true score variance to total score variance.

- Test 1: Total Variance = 10; True Score Variance = 9.
- Test 2: Total Variance = 20; True Score Variance = 15.

Which test is more reliable?

Reliability

- More technical: To what extent do observed scores reflect true scores?
- How consistent is the assessment?

Three Kinds of Reliability

- Internal Consistency (Content): Random error affects responses to items on an assessment.
- Test-Retest (Time): The construct stays the same. However, random errors vary from one occasion to the next.
- Inter-Rater (Observer Biases)

Internal Consistency

Use a 5-item measure of Self-Esteem.

1. I feel that I am a person of worth, at least on an equal basis with others.
2. I feel that I have a number of good qualities.
3. All in all, I am inclined to feel that I am a failure.
4. I am able to do things as well as most other people.
5. I feel I do not have much to be proud of.
Response Options (1 = Strongly Disagree to 5 = Strongly Agree)

Internal Consistency

Correlate All Items (N = 450)

| | Item 1 | Item 2 | Item 3 | Item 4 | Item 5 |
|---|---|---|---|---|---|
| Item 1 | - | | | | |
| Item 2 | .70 | - | | | |
| Item 3 | .38 | .45 | - | | |
| Item 4 | .50 | .51 | .41 | - | |
| Item 5 | .32 | .25 | .43 | .25 | - |

Summary Statistics of those Correlations

- Average: .42
- Standard Deviation: .13
- Minimum: .25 (Items 2 & 5 and Items 4 & 5)
- Maximum: .70 (Items 1 & 2)
- Standardized Alpha = .78

Alpha is an index of how strongly the items on a measure are associated with each other.

Coefficient Alpha

$$ \alpha = \frac{k \, \bar{r}_{ij}}{1 + (k - 1) \, \bar{r}_{ij}} $$

where you need (1) the number of items (called k) and (2) the average inter-item correlation ($\bar{r}_{ij}$). This formula yields the standardized alpha.

Coefficient Alpha versus Split-Half Reliability Estimates…

- Split-Half Reliability: Divide the items on the assessment into 2 halves and then correlate the two halves.
- Problem: Estimates fluctuate depending on which items get split into which halves.
- Alpha is the average of all possible split-half reliabilities.

Sample Matrix

| | Item 1 | Item 2 | Item 3 | Item 4 | Item 5 | Item 6 |
|---|---|---|---|---|---|---|
| John | 4 | 3 | 5 | 5 | 3 | 2 |
| Paul | 4 | 5 | 5 | 3 | 4 | 4 |
| Ringo | 2 | 2 | 2 | 1 | 2 | 3 |
| George | 4 | 4 | 3 | 2 | 5 | 4 |

Real Results

10-item measure of Self-Esteem for 451 women.

- Correlate the average of the odd-numbered items with the average of the even-numbered items: r = .79
- Correlate the average of the first five items with the average of the last five items: r = .67
- Average Inter-Item r = .46
- Standardized Alpha = .89

Caveats about Coefficient Alpha…

Recall what goes into the Alpha calculation:

- Number of items
- Average inter-item correlation

There are at least two things to think about when considering Coefficient Alpha…

- Length of the Assessment
- Dimensionality

Pay Attention to the Length of the Assessment

Hold the average inter-item correlation constant (e.g., .42) but increase the number of items…
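The standardized alpha formula above can be sketched in a few lines of Python. This is only an illustration: the function name `standardized_alpha` and its argument names are assumptions introduced here, not part of the lecture. It holds the average inter-item correlation at .42 and varies the number of items.

```python
def standardized_alpha(k: int, mean_r: float) -> float:
    """Standardized coefficient alpha from the number of items (k)
    and the average inter-item correlation (mean_r)."""
    if k < 2:
        raise ValueError("alpha needs at least two items")
    return (k * mean_r) / (1 + (k - 1) * mean_r)


# Same average inter-item correlation (.42), increasingly long scales.
alphas = {k: round(standardized_alpha(k, 0.42), 2) for k in (5, 10, 100)}
print(alphas)  # {5: 0.78, 10: 0.88, 100: 0.99}
```

Because k appears in both the numerator and the denominator, alpha keeps creeping toward 1.0 as items are added, even though the items are no more strongly related to one another.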
| Items | Standardized Alpha |
|---|---|
| 5 | .78 |
| 6 | .81 |
| 7 | .84 |
| 8 | .85 |
| 9 | .87 |
| 10 | .88 |
| 100 | .99 |

Now let’s use something like an average inter-item correlation of .15

| Items | Standardized Alpha |
|---|---|
| 5 | .47 |
| 10 | .64 |
| 15 | .73 |
| 20 | .78 |
| 25 | .82 |
| 30 | .84 |
| 100 | .95 |

With enough items it is possible to achieve very high alpha coefficients…

Dimensionality of the Measure

Let’s Get To The Same Average Inter-Item Correlation in Two Ways

Example from Schmitt (1996)

Item Pool #1 (Average inter-item r = .5)

| Item | 1. | 2. | 3. | 4. | 5. | 6. |
|---|---|---|---|---|---|---|
| 1. | 1.0 | | | | | |
| 2. | .8 | 1.0 | | | | |
| 3. | .8 | .8 | 1.0 | | | |
| 4. | .3 | .3 | .3 | 1.0 | | |
| 5. | .3 | .3 | .3 | .8 | 1.0 | |
| 6. | .3 | .3 | .3 | .8 | .8 | 1.0 |

Item Pool #2 (Average inter-item r = .5)

| Item | 1. | 2. | 3. | 4. | 5. | 6. |
|---|---|---|---|---|---|---|
| 1. | 1.0 | | | | | |
| 2. | .5 | 1.0 | | | | |
| 3. | .5 | .5 | 1.0 | | | |
| 4. | .5 | .5 | .5 | 1.0 | | |
| 5. | .5 | .5 | .5 | .5 | 1.0 | |
| 6. | .5 | .5 | .5 | .5 | .5 | 1.0 |

Let’s Calculate Alphas…

- Item Pool #1: Average inter-item r = .50, number of items = 6.
- Item Pool #2: Average inter-item r = .50, number of items = 6.

Standardized Alpha for Item Pool #1 = Standardized Alpha for Item Pool #2 = .86

Same alphas, but the underlying correlation matrices are quite different…

Alpha does NOT index unidimensionality.

What is unidimensionality?

- Unidimensionality can be rigorously defined as the existence of one latent trait underlying the set of items (Hattie, 1985, p. 152).
- Simply put, all of the items forming the instrument measure just one thing.
- It turns out that 100% “pure” unidimensionality is hard to achieve for personality and attitude measures.
- Try to get items that are as close as possible to a unidimensional set.

A Few Tips

- Think about the construct.
- Pay attention to the number of items on a scale and the average inter-item correlation.
- Always look at the inter-item correlation matrix.
- Motto: An essential ingredient in the research process is the judgment of the scientist. (Jacob Cohen, 1923-1998).

Question: What is a good alpha level?

Answer: It depends….

Reliability Standards

- .7 for research
- .9 for actual decisions

But… “Does a .50 reliability coefficient stink?
To answer this question, no authoritative source will do. Rather, it is for the user to determine what amount of error variance he or she is willing to tolerate, given the specific circumstances of the study.” Pedhazur and Schmelkin (1991, p. 110)

Test-Retest Reliability

- The extent to which scores at one time point do not perfectly correlate with scores at another time point is an indicator of error.
- The correlation is an estimate of the reliability ratio.
- This assumes the underlying construct is stable.

Test-Retest Reliability: What Time Interval?

- Long enough that memory biases are not present but short enough that there is no expectation of true change.
- Cattell et al. (1970, p. 320): “When the lapse of time is insufficient for people themselves to change.”
- Watson (2004) suggested 2 weeks.

Inter-Rater Reliability

- Just like test-retest reliability
- Correlation of ratings from 2 or more judges
- The correlation is an estimate of the reliability ratio

Question: What is one undesirable consequence of measurement error?

Researchers are often concerned about attenuation in predictor-criterion associations due to measurement error. Assume that measures of X and Y have alphas of .60 and .70, respectively.
An estimate of the upper limit on the observed correlation between X and Y is .65. Take the square root of the product of the two reliabilities:

$$ \sqrt{r_{xx} \, r_{yy}} = \sqrt{(.60)(.70)} = \sqrt{.42} \approx .65 $$

| Reliability of Measure 1 | Reliability of Measure 2 | Upper Limit |
|---|---|---|
| .50 | .85 | .65 |
| .60 | .85 | .71 |
| .70 | .85 | .77 |
| .80 | .85 | .82 |
| .90 | .85 | .87 |

Correcting Correlations for Attenuation

$$ r_c = \frac{r_{xy}}{\sqrt{r_{xx} \, r_{yy}}} $$

- r_xy = observed correlation between x and y
- r_xx and r_yy = reliability coefficients of x and y

Applying the Formula

| Reliability Measure 1 | Reliability Measure 2 | Observed Correlation | Corrected |
|---|---|---|---|
| .50 | .60 | .40 | .73 |
| .60 | .70 | .40 | .62 |
| .70 | .80 | .40 | .53 |
| .80 | .90 | .40 | .47 |
| .90 | .90 | .40 | .44 |

Standard Error of Measurement

- Estimating the precision of individual scores
- Standard Error of Measurement = standard deviation of the error around any individual’s true score
- ±2 SEM captures 95% of the error

Calculation of the Standard Error of Measurement (SEM)

$$ SEM = s_x \sqrt{1 - r_{xx}} $$

- s_x = SD of test scores
- r_xx = test reliability

Good reliability, low SEM:

$$ SEM = 10 \sqrt{1 - .84} = 4 $$

Poor reliability, high SEM:

$$ SEM = 10 \sqrt{1 - .19} = 9 $$

Standard Error of Measurement Assumptions

The formula SEM = s_x √(1 - r_xx) assumes:

- a reliability coefficient based on an appropriate measure
- the sample appropriately represents the population

Confidence Bands

There are additional complexities involved in setting confidence bands around observed scores, but we won’t cover them in PSY 395 (see Nunnally & Bernstein, 1994, p. 259).

SEM Confidence Interval

- 95% Confidence: Z = 1.96 (often rounded to 2)
- 68% Confidence: Z = 1.0

$$ CI = \text{Observed Score} \pm Z_{\text{Confidence}} \times SEM $$

Consider 2 Tests

Case 1

- The CAT (Creative Analogies Test) has 100 items.
- Assume the SEM of this test is 10.
- Amy scored 75.
- The 95% Band = Score ± (2 × SEM)
- So the 95% Confidence Band around her score is 55 to 95.

Case 2

- The CAT-2 (Creative Analogies Test V.2) also has 100 items.
- Assume the SEM of this test is 2.
- Amy scored 75.
- The 95% Confidence Band around her score is 71 to 79.
- Why? Recall the 95% Band = Score ± (2 × SEM)

Which test should be used to make decisions about Graduate School Admission? Why?
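The four formulas in this part of the lecture (upper limit on an observed correlation, correction for attenuation, SEM, and the confidence band) can be sketched together in Python. This is a minimal sketch for checking the worked numbers. All function names are assumptions made for illustration, and Z is rounded to 2 as in the slides.

```python
import math


def attenuation_ceiling(rxx: float, ryy: float) -> float:
    """Upper limit on the observed correlation given two reliabilities."""
    return math.sqrt(rxx * ryy)


def correct_for_attenuation(rxy: float, rxx: float, ryy: float) -> float:
    """Observed correlation divided by the square root of the product of reliabilities."""
    return rxy / math.sqrt(rxx * ryy)


def standard_error_of_measurement(sd: float, rxx: float) -> float:
    """SEM = SD of test scores times sqrt(1 - reliability)."""
    return sd * math.sqrt(1.0 - rxx)


def confidence_band(score: float, sem: float, z: float = 2.0) -> tuple:
    """Observed score plus or minus z * SEM (z = 2 approximates 95%)."""
    half_width = z * sem
    return (score - half_width, score + half_width)


print(round(attenuation_ceiling(0.60, 0.70), 2))            # 0.65
print(round(correct_for_attenuation(0.40, 0.50, 0.60), 2))  # 0.73
print(round(standard_error_of_measurement(10, 0.84), 2))    # 4.0
print(round(standard_error_of_measurement(10, 0.19), 2))    # 9.0
print(confidence_band(75, 10))  # Amy on the CAT:   (55.0, 95.0)
print(confidence_band(75, 2))   # Amy on the CAT-2: (71.0, 79.0)
```

The two bands for Amy's score of 75 differ only because of the SEM, which is why the same observed score says much less about her true score on the CAT than on the CAT-2.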
Decisions… Decisions…

| Test Decision | TRUTH: Doesn’t Have “it” | TRUTH: Has “it” |
|---|---|---|
| Doesn’t Have “it” | Correct | False Negative |
| Has “it” | False Positive | Correct |

Cut Scores

- Cut scores are set values that determine who “passes” and who “fails” the test.
  - Commonly used for licensure or certification (bar exam, medical licensure, civil service)
- What is the impact of the standard error of measurement on interpreting cut scores?
- The smaller the SEM the better. Why?
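One way to think about the closing question is to compare a score's 95% band with the cut score. The sketch below is an illustration only: the cut score of 70 is hypothetical, the function name `compare_to_cut` is an assumption, and the three-way labeling is not a procedure from the lecture. It reuses Amy's score of 75 with the two SEM values from the CAT and CAT-2 examples.

```python
def compare_to_cut(score: float, sem: float, cut: float, z: float = 2.0) -> str:
    """Label a score by where its band (score +/- z * SEM) falls relative to a cut score."""
    low = score - z * sem
    high = score + z * sem
    if low >= cut:
        return "above"      # the whole band is at or above the cut
    if high < cut:
        return "below"      # the whole band is under the cut
    return "uncertain"      # the band straddles the cut


HYPOTHETICAL_CUT = 70  # not from the lecture; chosen only for illustration

print(compare_to_cut(75, 10, HYPOTHETICAL_CUT))  # band 55 to 95 -> "uncertain"
print(compare_to_cut(75, 2, HYPOTHETICAL_CUT))   # band 71 to 79 -> "above"
```

With the larger SEM, Amy's band spans both sides of the cut, so a pass could easily be a false positive. With the smaller SEM the band sits entirely above the cut.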