Reliability Concepts: Test Theory & Measurement

advertisement

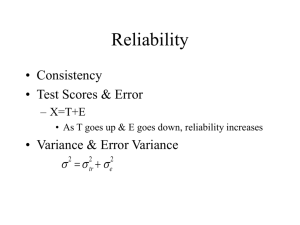

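Chapter 5: Reliability Concepts

Definition of Reliability
– Reliability is the consistency of a test.

Classical Test Theory
– $X = T + E$, where $X$ is the obtained score, $T$ is the true score, and $E$ is random error.

Estimation of Reliability
– Correlation coefficients ($r$) are used to estimate reliability.
– The coefficient is the proportion of obtained score variance attributable to true individual differences.
– It is directly interpretable as that proportion; it does not need to be squared.
– What percentage of the variance does a reliability of .90 account for? 90% of the obtained score variance is true score variance, and the remaining 10% is error variance.

Conceptual True Score Variance
– Solve for the true score: $T = X - E$
– Convert the formula to variances (assuming true scores and errors are uncorrelated): $\sigma_T^2 = \sigma_X^2 - \sigma_E^2$
– Divide through by the obtained score variance: $\frac{\sigma_T^2}{\sigma_X^2} = \frac{\sigma_X^2}{\sigma_X^2} - \frac{\sigma_E^2}{\sigma_X^2}$
– Substitute 1 for the ratio $\sigma_X^2 / \sigma_X^2$, and note that $\sigma_T^2 / \sigma_X^2$ is the reliability $r_{xx}$: $r_{xx} = 1 - \frac{\sigma_E^2}{\sigma_X^2}$
– Reliability is therefore 1 minus the ratio of error variance to obtained score variance.

Types of Reliability
– Test-retest
– Alternate forms
– Split-half
– Inter-item consistency
– Interscorer

Test-Retest Reliability
– The coefficient of stability is the correlation between the two sets of scores from two administrations of the same test.

Alternate (Equivalent) Forms Reliability
– The coefficient of equivalence is the correlation between the two sets of scores from the two forms.

Split-Half Reliability
– The coefficient of internal consistency is the correlation between two equal halves of the test.
– Reliability tends to decrease when test length decreases, so the half-test correlation underestimates the reliability of the full test.

Spearman-Brown Formula
– A correction that estimates reliability when test length changes:
  $r_{new} = \frac{n\,r_{xx}}{1 + (n - 1)\,r_{xx}}$, where $n = \frac{\text{number of new items}}{\text{number of original items}}$
– For the split-half correction, $n = 2$.

Spearman-Brown Example
– Reducing the test length reduces reliability.
– What is the new estimated reliability for a 100-item test with a reliability of .90 that is reduced to 50 items?
  $n = 50/100 = .5$, so $r_{new} = \frac{(.5)(.90)}{1 + (.5 - 1)(.90)} = \frac{.45}{.55} \approx .82$

Inter-Item Consistency
– The degree to which test items correlate with each other.
– Two special formulas look at all possible splits of a test:
  a) Kuder-Richardson 20 (KR-20)
  b) Coefficient alpha

Inter-Scorer Reliability
– Tests (or performances) are scored by two independent judges, and the two sets of scores are correlated.
– To what are fluctuations in scores attributed? To differences between the scorers.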
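The coefficients described above can be sketched in Python. This is a minimal illustration, not a reference implementation. The function names (`pearson_r`, `reliability_from_variances`, `spearman_brown`, `coefficient_alpha`, `kr20`) are hypothetical. The sketch assumes complete data, with examinees as rows and items as columns. Population variances (`statistics.pvariance`) are used throughout so that coefficient alpha and KR-20 agree exactly on items scored 0/1.

```python
import math
from statistics import mean, pvariance


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between two paired lists of scores.

    Depending on where the two lists come from, this is the coefficient of
    stability (test-retest), equivalence (alternate forms), a split-half
    correlation, or an inter-scorer correlation.
    """
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def reliability_from_variances(obtained_var: float, error_var: float) -> float:
    """r_xx = 1 - (error variance / obtained score variance)."""
    return 1 - error_var / obtained_var


def spearman_brown(r: float, new_items: int, original_items: int) -> float:
    """Estimated reliability after changing test length by n = new / original."""
    n = new_items / original_items
    return n * r / (1 + (n - 1) * r)


def coefficient_alpha(item_scores: list[list[float]]) -> float:
    """Coefficient alpha from a persons x items matrix of scores."""
    k = len(item_scores[0])                      # number of items
    item_var_sum = sum(pvariance(col) for col in zip(*item_scores))
    total_var = pvariance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - item_var_sum / total_var)


def kr20(item_scores: list[list[int]]) -> float:
    """Kuder-Richardson 20 for items scored 0/1 (item variance = p * q)."""
    k = len(item_scores[0])
    n_people = len(item_scores)
    pq_sum = 0.0
    for col in zip(*item_scores):
        p = sum(col) / n_people                  # proportion answering correctly
        pq_sum += p * (1 - p)
    total_var = pvariance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - pq_sum / total_var)


if __name__ == "__main__":
    # 100-item test with r = .90 shortened to 50 items
    print(round(spearman_brown(0.90, 50, 100), 2))   # 0.82
    # Hypothetical 0/1 responses: 4 examinees x 3 items
    responses = [[1, 0, 1], [1, 1, 1], [0, 0, 1], [0, 0, 0]]
    print(kr20(responses), coefficient_alpha(responses))  # 0.75 0.75
```

The same `pearson_r` call serves for test-retest, alternate-forms, split-half, and inter-scorer coefficients; only the source of the two score lists changes. To step a split-half correlation up to full length, call `spearman_brown(r_half, 2, 1)`.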
Possible Sources of Error Variance
– Sources of error associated with differences in test scores:
  – Time sampling
  – Item sampling
  – Inter-scorer differences

Time Sampling
– Conditions associated with administering a test on two different occasions.

Item Sampling
– Conditions associated with item content.
– Content heterogeneity vs. homogeneity.

Inter-Scorer Differences
– Error associated with differences among raters.

Factors Affecting the Reliability Coefficient
– Test length
  – The greater the number of reliable test items, the higher the reliability coefficient.
  – A longer test increases the probability of obtaining reliable items that accurately measure the behavior domain.
– Heterogeneity of scores
– Item difficulty
– Speeded tests (timed tests)
  – Scores are based on speed of work, not on the consistency of the test.
  – For example, the coefficient reflects consistency of speed, not of performance.
– Test situation: conditions associated with test administration.
– Examinee-related: conditions associated with the test taker.
– Examiner-related: conditions associated with scoring and interpretation.
– Stability of the construct: dynamic vs. stable variables; stable variables are measured more reliably.
– Homogeneity of the items: the more homogeneous the items, the higher the reliability.

Interpreting Reliability
– A test is never perfectly reliable.
– A method for interpreting individual test scores must take random error into account.
– We may never obtain a test taker's true score.

Standard Error of Measurement (SEM)
– Provides an index of test measurement error.
– The SEM is interpreted as the standard deviation of a normal curve: the distribution of obtained scores around the true score.
– The SEM is used to estimate the true score by constructing a range (confidence interval) within which the examinee's true score is likely to fall, given the obtained score.

SEM Formula
– $SEM = S_t\sqrt{1 - r_{tt}}$, where $S_t$ is the standard deviation of the test scores and $r_{tt}$ is the reliability.

SEM Example
– For example, $X = 86$, $S_t = 10$, $r_{tt} = .84$.
– What is the SEM? $SEM = 10\sqrt{1 - .84} = 10(.4) = 4$
– What is the confidence interval (CI)? At ±1 SEM, $86 \pm 4$, or 82 to 90.
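The SEM example ($X = 86$, $S_t = 10$, $r_{tt} = .84$) can be worked in a few lines of Python. This is a minimal sketch. The helper names `sem`, `confidence_band`, and `band_coverage` are hypothetical. The coverage figures assume, as the slides do, that obtained scores are normally distributed around the true score.

```python
import math
from statistics import NormalDist


def sem(sd: float, reliability: float) -> float:
    """Standard error of measurement: SEM = S_t * sqrt(1 - r_tt)."""
    return sd * math.sqrt(1 - reliability)


def confidence_band(obtained: float, sd: float, reliability: float,
                    n_sem: float = 1.0) -> tuple[float, float]:
    """Obtained score plus or minus n_sem standard errors of measurement."""
    margin = n_sem * sem(sd, reliability)
    return obtained - margin, obtained + margin


def band_coverage(n_sem: float) -> float:
    """Probability within +/- n_sem standard deviations of a normal mean."""
    return 2 * NormalDist().cdf(n_sem) - 1


if __name__ == "__main__":
    print(f"SEM = {sem(10, 0.84):.2f}")
    for k in (1, 2, 3):
        low, high = confidence_band(86, 10, 0.84, n_sem=k)
        print(f"+/-{k} SEM: {low:.1f} to {high:.1f} ({band_coverage(k):.1%})")
```

With these inputs the bands are 82 to 90, 78 to 94, and 74 to 98. The exact normal-curve coverage for 1, 2, and 3 SEMs is about 68.3%, 95.4%, and 99.7%.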
– Within 1 standard deviation (±1 SEM), there is a 68% chance that the true score falls within the confidence interval.
– ±2 SEM ≈ 95%
– ±3 SEM ≈ 99.7%

Generalizability Theory
– An extension of classical test theory.
– Based on domain sampling theory (Tryon, 1957).
– Classical theory emphasizes test error; generalizability theory emphasizes test circumstances, conditions, and content.
– A test score is considered relatively stable.
– Estimates the sources of error that contribute to test scores.
– Score variability is attributed to variables (conditions) in the test situation as well as to error.

Importance of Reliability
– Estimates the accuracy and consistency of a test.
– Recognizes that error plays a role in testing.
– Understanding reliability helps a test administrator decide which test to use.
– Strong reliability contributes to the validity of a test.
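To make "estimates sources of error" concrete, here is a minimal sketch of a generalizability (G) study. It assumes the simplest design: one facet (raters) fully crossed with persons, with one score per cell. This design, the function names `g_study_p_by_r` and `g_coefficient`, and the example ratings are illustrative assumptions, not anything specified above. Variance components are estimated from ANOVA mean squares. The relative G coefficient then plays the role that the reliability coefficient plays in classical test theory.

```python
from statistics import mean


def g_study_p_by_r(scores: list[list[float]]) -> dict[str, float]:
    """Variance components for a persons x raters design (hypothetical helper).

    scores[p][r] is the score rater r gave person p; one score per cell.
    """
    n_p, n_r = len(scores), len(scores[0])
    grand = mean(x for row in scores for x in row)
    person_means = [mean(row) for row in scores]
    rater_means = [mean(col) for col in zip(*scores)]

    ss_p = n_r * sum((m - grand) ** 2 for m in person_means)
    ss_r = n_p * sum((m - grand) ** 2 for m in rater_means)
    ss_tot = sum((x - grand) ** 2 for row in scores for x in row)
    ss_res = ss_tot - ss_p - ss_r  # person x rater interaction, confounded with error

    ms_p = ss_p / (n_p - 1)
    ms_r = ss_r / (n_r - 1)
    ms_res = ss_res / ((n_p - 1) * (n_r - 1))

    # Solve the expected mean squares; negative estimates are set to zero by convention.
    return {
        "person": max((ms_p - ms_res) / n_r, 0.0),
        "rater": max((ms_r - ms_res) / n_p, 0.0),
        "residual": max(ms_res, 0.0),
    }


def g_coefficient(components: dict[str, float], n_raters: int) -> float:
    """Relative G coefficient when scores are averaged over n_raters raters."""
    person = components["person"]
    return person / (person + components["residual"] / n_raters)


if __name__ == "__main__":
    # Hypothetical ratings: 4 persons scored by the same 3 raters
    ratings = [[4, 5, 4], [2, 3, 3], [5, 5, 4], [1, 2, 2]]
    comps = g_study_p_by_r(ratings)
    print(comps)
    for n in (1, 2, 3):
        print(n, round(g_coefficient(comps, n), 3))
```

Raising `n_raters` in `g_coefficient` shows how averaging over more raters shrinks the person-by-rater error term. This is the kind of design question generalizability theory is meant to answer.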