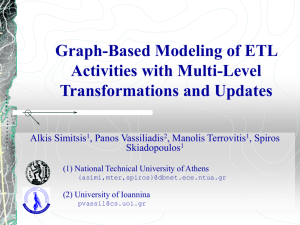

Formal Specification and Optimization of ETL Workflows

advertisement

Data Provenance in ETL Scenarios

Panos Vassiliadis

University of Ioannina

(joint work with Alkis Simitsis, IBM Almaden Research Center,

Timos Sellis and Dimitrios Skoutas, NTUA & ICCS)

Outline

Introduction

Conceptual Level

Logical Level

Physical Level

Provenance &ETL

PrOPr 2007

2

Outline

Introduction

Conceptual Level

Logical Level

Physical Level

Provenance &ETL

PrOPr 2007

3

Data Warehouse Environment

PrOPr 2007

4

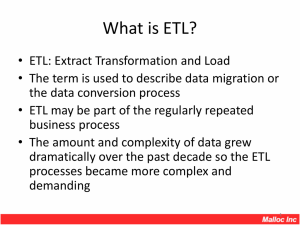

Extract-Transform-Load (ETL)

Extract

Sources

Transform

& Clean

Load

DSA

DW

PrOPr 2007

5

ETL: importance

ETL and Data Cleaning tools cost

ETL market: a multi-million market

IBM paid $1.1 billion dollars for Ascential

ETL tools in the market

30% of effort and expenses in the budget of the DW

55% of the total costs of DW runtime

80% of the development time in a DW project

software packages

in-house development

No standard, no common model

most vendors implement a core set of operators and provide GUI to

create a data flow

PrOPr 2007

6

Fundamental research question

Now: currently, ETL designers work directly at the

physical level (typically, via libraries of physicallevel templates)

Challenge: can we design ETL flows as declaratively

as possible?

Detail independence:

no care for the algorithmic choices

no care about the order of the transformations

(hopefully) no care for the details of the inter-attribute

mappings

PrOPr 2007

7

Now:

DW

Involved

data stores +

Physical

templates

Physical

scenario

Engine

PrOPr 2007

8

Vision:

Schema

mappings

ETL tool

Involved

data stores +

Conceptual to logical

mapping

Conceptual to

logical mapper

DW

DW

Physical

templates

Logical

templates

Logical

scenario

Optimizer

Physical

scenario

Physical

templates

Physical

scenario

Engine

Engine

PrOPr 2007

9

Detail independence

Schema

mappings

ETL tool

Automate

(as much as possible)

Conceptual: the

details of the interattribute mappings

Conceptual to logical

mapping

Conceptual to

logical mapper

Logical

templates

Logical

scenario

Optimizer

Logical: the order of

the transformations

Physical: the

algorithmic choices

DW

Physical

templates

Physical

scenario

Engine

PrOPr 2007

10

Outline

Introduction

Conceptual Level

Logical Level

Physical Level

Provenance &ETL

PrOPr 2007

11

Conceptual Model: first attempts

Necessary providers:

S1 and S2

Due to acccuracy

and small size

(< update window)

{Duration<4h}

PS1

U

Annual

PartSupp’s

PKey

DW.PARTSUPP

S2.PARTSUPP

Recent

PartSupp’s

PK

S1.PARTSUPP

SuppKey

{XOR}

Qty

PKey

PKey

SK

SuppKey

γ

Qty

Date

f

Cost

f

y

Ke

y

.P

Ke

2

S Supp

.

S2

S2.Date

SU

SU M(S2.Q

ty)

M

(S

2.

Co

st)

SK

SuppKey

Date

PKey

Dept

SuppKey

+

f

Qty

Qty

Cost

NN

Cost

Dept

$€

American to

European Date

PS2

Date = SysDate()

PrOPr 2007

PS1.Pkey+=PS2.PKey

PS1.SuppKey+=PS2.SuppKey

PS1.Dept+=PS2.Dept

PKey

SuppKey

Cost

Dept

12

Conceptual Model: The Data Mapping Diagram

Extension of UML to handle inter-attribute mappings

PrOPr 2007

13

Conceptual Model: The Data Mapping Diagram

Aggregating computes the quarterly sales for each product.

PrOPr 2007

14

Conceptual Model: Skoutas’ annotations

Application vocabulary

Datastore mappings

Datastore annotation

VC = {product, store}

VPproduct = {pid, pName, quantity, price,

type, storage}

VPstore = {sid, sName, city, street}

VFpid = {source_pid, dw_pid}

VFsid = {source_sid, dw_sid}

VFprice = {dollars, euros}

VTtype = {software, hardware}

VTcity = {paris, rome, athens}

PrOPr 2007

15

Conceptual Model: Skoutas’ annotations

The class hierarchy

Definition for class

DS1_Products

PrOPr 2007

16

Outline

Introduction

Conceptual Level

Logical Level

Physical Level

Provenance &ETL

PrOPr 2007

17

Logical Model

DS.PSNEW2.PKEY,

DS.PSOLD2.PKEY

SOURCE

DS.PSNEW2

DIFF2

DS.PS2

DS.PS1.PKEY,

LOOKUP_PS.SKEY,

SOURCE

AddAttr2

rejected

DS.PSOLD2

Log

DS.PS2.PKEY,

LOOKUP_PS.SKEY,

SOURCE

DS.PSNEW1.PKEY,

DS.PSOLD1.PKEY

DS.PSNEW1

DIFF1

DS.PS1

COST

SK2

rejected

A2EDate

$2€

rejected

rejected

Log

Log

COST

γ

Log

PKEY,DATE

U

AddDate

rejected

PK

rejected

DS.PSOLD1

Log

DSA

QTY,COST

rejected

DATE=SYSDATE

NotNULL

SK1

DATE

Log

Log

PKEY, DAY

MIN(COST)

S2.PARTS

FTP2

Aggregate1

DW.PARTS

DW.PARTSUPP.DATE,

DAY

S1.PARTS

PKEY, MONTH

AVG(COST)

FTP1

TIME

Sources

V1

Aggregate2

V2

DW

PrOPr 2007

18

Logical Model

Main question:

What information should we put inside a metadata

repository to be able to answer questions like:

what is the architecture of my DW back stage?

which attributes/tables are involved in the population of

an attribute?

what part of the scenario is affected if we delete an

attribute?

PrOPr 2007

19

Architecture Graph

DS.PSNEW2.PKEY,

DS.PSOLD2.PKEY

SOURCE

DS.PSNEW2

DIFF2

DS.PS2

DS.PS1.PKEY,

LOOKUP_PS.SKEY,

SOURCE

AddAttr2

rejected

DS.PSOLD2

Log

DS.PS2.PKEY,

LOOKUP_PS.SKEY,

SOURCE

DS.PSNEW1.PKEY,

DS.PSOLD1.PKEY

DS.PSNEW1

DIFF1

DS.PS1

COST

rejected

A2EDate

$2€

SK2

rejected

rejected

Log

Log

COST

γ

Log

PKEY,DATE

U

AddDate

rejected

PK

rejected

DS.PSOLD1

Log

DSA

QTY,COST

rejected

DATE=SYSDATE

NotNULL

SK1

DATE

Log

Log

PKEY, DAY

MIN(COST)

S2.PARTS

FTP2

Aggregate1

DW.PARTS

DW.PARTSUPP.DATE,

DAY

S1.PARTS

PKEY, MONTH

AVG(COST)

FTP1

TIME

Sources

V1

Aggregate2

V2

DW

PrOPr 2007

20

Architecture Graph

Example

DS.PS2

OUT

IN

Add_Attr2

OUT

IN

PAR

OUT

SK2

IN

PAR

PKEY

PKEY

PKEY

PKEY

PKEY

PKEY

SUPPKEY

SUPPKEY

SUPPKEY

SUPPKEY

SUPPKEY

SUPPKEY

QTY

QTY

QTY

QTY

QTY

QTY

COST

COST

COST

COST

COST

COST

DATE

DATE

DATE

DATE

DATE

DATE

SOURCE

SOURCE

SOURCE

SOURCE

SKEY

AddConst2

in

TMP_STOR.

PARTSUPP

PKEY

out

SOURCE

1

2

LOOKUP2

OUT

PKEY

LPKEY

SOURCE

LSOURCE

SKEY

LSKEY

PrOPr 2007

21

Architecture Graph

Example

DS.PS2

OUT

IN

Add_Attr2

OUT

input

schema

PAR

IN

OUT

SK2

IN

output

schema

PAR

PKEY

PKEY

PKEY

PKEY

PKEY

PKEY

SUPPKEY

SUPPKEY

SUPPKEY

SUPPKEY

SUPPKEY

SUPPKEY

QTY

QTY

QTY

QTY

QTY

QTY

COST

COST

COST

COST

COST

COST

DATE

DATE

DATE

DATE

DATE

DATE

SOURCE

SOURCE

SOURCE

SOURCE

SKEY

AddConst2

in

TMP_STOR.

PARTSUPP

PKEY

out

SOURCE

1

2

LOOKUP2

OUT

PKEY

LPKEY

SOURCE

LSOURCE

SKEY

LSKEY

PrOPr 2007

projected-out

schema

generated

schema

functionality

schema

22

Optimization

Execution order…

S2.PARTSUPP

PKey

DW.PARTSUPP

PKey

SK

SuppKey

γ

Qty

Date

f1

Cost

f2

PK

y

Ke

P

.

y

S2

pKe

Sup

.

2

S

S2.Date

SUM

SU (S2.Q

ty)

M(

S2

.C

os

t)

SuppKey

Date

Qty

Cost

which is the proper

execution order?

PrOPr 2007

23

Optimization

Execution order…

S2.PART

SUPP

SK

f1

γ

f2

PK

DW.PART

SUPP

order equivalence?

SK,f1,f2 or SK,f2,f1 or ... ?

PrOPr 2007

24

Logical Optimization

1

PARTS1

2

PARTS2

3

7

NN

8

9

σ

U

(€COST)

4

5

$2€

A2E

($COST)

(DATE)

6

γ(DATE)

1

8_1

PARTS1

σ(€COST)

3

NN

(€COST)

7

U

2

PARTS2

4

8_2

$2€

σ

($COST)

(€COST)

Can we push selection

early enough?

Can we aggregate

before $2€ takes place?

PARTS

(€COST)

6

γ(DATE)

9

PARTS

5

A2E

(DATE)

PrOPr 2007

25

Outline

Introduction

Conceptual Level

Logical Level

Physical Level

Provenance &ETL

PrOPr 2007

26

Logical to Physical

ETL tool

Schema

mappings

Conceptual to

logical mapper

Conceptual to logical

mapping

Logical

templates

Logical

scenario

Optimizer

Physical

templates

DW

Physical

scenario

“identify the best

possible physical

implementation for a

given logical ETL

workflow”

Engine

PrOPr 2007

27

Problem formulation

Given

a logical-level ETL workflow GL

Compute a physical-level ETL workflow GP

Such that

the semantics of the workflow do not change

all constraints are met

the cost is minimal

PrOPr 2007

28

Solution

We model the problem of finding the physical implementation of an ETL

process as a state-space search problem.

States. A state is a graph GP that represents a physical-level ETL workflow.

Transitions. Given a state GP, a new state GP’ is generated by replacing the

implementation of a physical activity aP of GP with another valid

implementation for the same activity.

The initial state G0P is produced after the random assignment of physical

implementations to logical activities w.r.t. preconditions and constraints.

Extension: introduction of a sorter activity (at the physical-level) as a new

node in the graph.

Sorter introduction

Intentionally introduce sorters to reduce execution & resumption costs

PrOPr 2007

29

Sorters: impact

We intentionally introduce orderings, (via appropriate physical-level sorter

activities) towards obtaining physical plans of lower cost.

Semantics: unaffected

Price to pay:

cost of sorting the stream of processed data

Gain:

it is possible to employ order-aware algorithms that significantly reduce

processing cost

It is possible to amortize the cost over activities that utilize common useful

orderings

PrOPr 2007

30

Sorter gains

3

500

γA

100000

R

σA<600

sel3=0.1

2

1

10000

sel1=0.1

Z

5000

σA>300

sel2=0.5

4 1000

V

γA,Β

W

sel4=0.2

5

1000

γB

Y

sel5=0.2

Without order

cost(σi) = n

costSO(γ) = n*log2(n)+n

With appropriate order

cost(σi) = seli * n

costSO(γ) = n

Cost(G) = 100.000+10.000

+3*[5.000*log2(5.000)+5.000] = 309.316

If sorter SA,B is added to V:

Cost(G’) = 100.000+10.000

+2*5.000+[5.000*log2(5.000)+5.000] =

247.877

PrOPr 2007

31

Interesting orders

3

500

γA

100000

R

sel3=0.1

2

1

10000

σA<600

sel1=0.1

Z

5000

σA>300

4 1000

V

γA,Β

sel2=0.5

W

sel4=0.2

5

A asc

A desc

{A,B, [A,B]}

1000

γB

Y

sel5=0.2

PrOPr 2007

32

Outline

Introduction

Conceptual Level

Logical Level

Physical Level

Provenance &ETL

PrOPr 2007

33

A principled architecture for ETL

ETL tool

Schema

mappings

Conceptual to

logical mapper

Conceptual to logical

mapping

Logical

templates

DW

WHY

Logical

scenario

WHAT

Optimizer

Physical

templates

Physical

scenario

HOW

Engine

PrOPr 2007

34

Logical Model: Questions revisited

What information should we put inside a metadata

repository to be able to answer questions like:

what is the architecture of my DW back stage?

it is described as the Architecture Graph

which attributes/tables are involved in the population of

an attribute?

what part of the scenario is affected if we delete an

attribute?

follow the appropriate path in the Architecture Graph

PrOPr 2007

35

Fundamental questions on provenance & ETL

Why do we have a certain record in the DW?

Because there is a process (described by the Architecture

Graph at the logical level + the conceptual model) that

produces this kind of tuples

Where did this record come from in my DW?

Hard! If there is a way to derive an “inverse” workflow

that links the DW tuples to their sources you can answer

it.

Not always possible: transformations are not invertible,

and a DW is supposed to progressively summarize data…

Widom’s work on record lineage…

PrOPr 2007

36

Fundamental questions on provenance & ETL

How are updates to the sources managed?

(update takes place at the source, DW+data marts must be

updated)

Done, although in a tedious way: log sniffing, mainly.

Also, “diff” comparison of extracted snapshots

When errors are discovered during the ETL process,

how are they handled?

(update takes place at the data staging area, sources must

be updated)

Too hard to “back-fuse” data into the sources, both for

political and workload issues. Currently, this is not

automated.

PrOPr 2007

37

Fundamental questions on provenance & ETL

What happens if there are updates to the schema of

the involved data sources?

What happens if we must update the workflow

structure and semantics?

Currently this is not automated, although the automation

of the task is part of the detail independence vision

Nothing is versioned back – still, not really any user

requests for this to be supported

What is the equivalent of citations in ETL?

… nothing really …

PrOPr 2007

38

Thank you!

PrOPr 2007

39