Daily Behavior Cards RtI/PBS


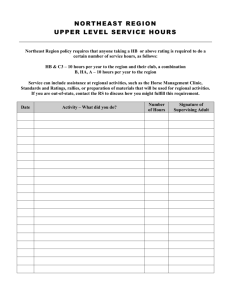

Direct Behavior Ratings and Daily Behavior Cards
Amy Jablonski, A.T. Allen Elementary School, Assistant Principal for Instruction
Leah Mills, A.T. Allen Elementary School, Second Grade Teacher

Direct Behavior Rating and Daily Behavior Cards: Objectives
• What is a Direct Behavior Rating?
• Why use Direct Behavior Ratings?
• How can Direct Behavior Ratings be used with PBIS and RtI?
• Explore the effectiveness of a whole-school Daily Behavior Card
• School site example of Daily Behavior Cards

Direct Behavior Ratings
Definition: Assessment tool that combines characteristics of systematic direct observation (SDO) and behavior rating scales.
– SDO: a method of behavioral assessment that requires a trained observer to identify and operationally define a behavior of interest, use a system of observation in a specific time and place, and then score and summarize the data in a consistent manner (Salvia & Ysseldyke, 2004; Riley-Tillman, Kalberer, & Chafouleas, 2006)
• Rate 1 target behavior (ex: degree to which a student is engaged in activity)
• Use a scale to rate the degree to which that behavior was displayed during a specified time
• Target for a short amount of time

Direct Behavior Ratings: Background
CURRENT/TRADITIONAL
• Most behavioral data has been collected from office referrals
– Not able to capture all behaviors
– Not sensitive to individual student needs
– Compiled after a long set period of time (month, semester, year)
• Formative data has been used to progress monitor academics (curriculum-based measurements)
DBRs
• Rating a target on a behavior scale for one behavior (ex: off-task behavior during class)
• DBRs are designed to be used formatively and for a specific amount of time

Example Standard DBR

Direct Behavior Ratings: Overall Purpose
• Used to assess the effectiveness of an intervention
• Document student progress
• Communication within the school
• Home-school consistency and communication

Direct Behavior Ratings: Characteristics
• DBRs are designed to be used formatively (repeated), for a specific amount of time (3 weeks), and to rate a specific behavior
– Specified behavior
– Data is shared with team members
– Card serves as progress monitoring tool for effectiveness of intervention
• Flexibility to design actual rating and procedures based on student need (Chafouleas, Riley-Tillman & McDougal, 2002)

DBRs Must Have…
• Behavior must be operationally defined
• Observations conducted using standard procedures
• Used at predetermined specific time, place, and frequency
• Data must be scored and summarized in a consistent manner
When put together, these equal a ‘systematic’ DBR

When to use…
When should you use DBRs?
• Guiding questions:
– Why do you need the data?
– Which tools are the best match to assess the behavior of interest?
– What decisions will be made using the data?
– What resources are available to collect the data?
• When multiple data are needed on the same student(s) and/or behavior(s)

When to use…
• Limited resources
• Low-priority situations
• Educators are willing to use
• Answering the following questions
– “Is a class-wide intervention effective for changing a particular student’s problematic behavior?”
– “Does a child continue to display a behavior when this intervention is put in place?”
• Frequent data is needed

Guiding Questions for Creating a DBR
• What is the target behavior and goal? Focus on a specific behavior
• What is the focus of the rating? Individual, small-group, or class-wide
• What is the period of rating? Specific school period, daily, or other
• What is the setting of observation? Classroom or other location

Guiding Questions for Creating a DBR
• How often will data be collected? Multiple times a day, daily, weekly
• What is the scale for rating that will be used? Checklist, Likert-type scale, continuous line
• Who will be conducting the rating? Classroom teacher, aide, or other educational professional
• Will ratings be tied to consequences? Consequences must be consistently delivered by the person responsible

Points to consider when creating DBRs…

Designing the Card: What and Who
• Define the target behavior and who is the focus of the rating
– Increase positive behaviors
– Decrease negative behaviors
– Individual student/small group

Designing the Card: Scale
• Decide what scale will be used
– Maturity of the individual being rated
– Smiley faces
– Likert-type scale
  • Recommended to use 1-10 vs 1-5
  • Continuous line
  • Checklist

Direct Behavior Ratings
• Example of rating scales
– 1-10 (1 being no behavior observed)
– Faces (happy, neutral, sad)
– Continuous line
– Check mark
• Must be ‘rater friendly’ and easy to implement across all settings

Options for DBR Scales

Designing the Card: When, Where, and How Often
• Frequency of collection
– Specific period of time
– Entire day
– Record immediately
• Frequency of summary
– Daily
– Weekly
• Location
– Where behavior is noticed

Designing the Card: Who Will Conduct Rating
• Classroom teacher or adult with student most of the day
– Word of caution: Profiling the attributes of a student
• Increased efficiency
• Willingness to rate
• Same rater avoids inconsistencies
(Chafouleas, Christ, et al., 2007; Chafouleas, Riley-Tillman, et al., 2007)

Designing the Card: Who Will Conduct Rating
• Caution: DBR data is the rater’s perception of student behavior
H. Walker has found: “Teachers universally endorse a similar profile of attributes, yet differ significantly in their tolerance levels for deviant behavior.”

Designing the Card: Who Will Conduct Rating
• Student Self-Monitoring
– Intervention for teaching behavior
– Effective for a variety of students
• Success
– Teaching to accuracy
– Initially compare
– Positively reinforce

Designing the Card: Will there be consequences?
• Will consequences be involved with the DBR?
– Individual basis
– Positive reinforcements
• Communication between school and home
– Consequences at home as result of ratings on DBR
– Same language/same expectation

After Implementation
• Fidelity
– Does the rater complete the DBR as specified?
– Completed at the right time of day?
• Periodically check in with rater
– Integrity checklist
• If fidelity is an issue
– Discussion with feedback
– Modify plan
• Review acceptability of DBR with rater

Matching Data
• Does the DBR data correspond with other sources?
• Situation: Teacher’s perception of student’s behavior and the student’s behavior do not correspond.
Hypotheses:
1. The student (or teacher) behaves differently when the school psychologist is present
2. Teacher is measuring something different than the target data
3. Teacher does not perceive a positive effect that the intervention has
• Solution: dialogue and discussion

Summarizing Data
• Summarize relevant to the scale being used
• Averages per week
• High or low ratings
• Bar chart
• Line graph

[Bar chart: Frequency of Behavior, Number of Marks this Year. y-axis: # of Marks; x-axis: Time, hourly from 8-9am to 1-2pm]

[Bar chart: Marks on Cards by Behavior (failure to meet the expected behavior), Frequency of Behavior (this year). Categories: Not Following Directions, Not Using Self-Control, Not Behaving in Connect]

Strengths of DBRs

High Flexibility
• Preschool through high school
• Wide range of behaviors
• Individual or large group
• Effective to monitor ‘hard to notice’ behaviors
– Outbursts and obvious behaviors easily noticed in short observation

High Feasibility, Acceptable, Familiar
• Teachers are accepting of DBR as tool and intervention
• School psychologists accept DBR as intervention monitoring tool
• Familiar language for teachers
• Becomes part of daily routine

Progress Monitoring
• Constructed in a way to be connected to behavioral expectations
• Administered quickly
• Available in multiple forms
• Inexpensive
• Completed directly following specific rating time
• Set goals and progress monitor
• Increase communication between home and school

Reduced Risk of Reactivity
• Reactivity effect: teacher and students will behave in atypical ways
• Research findings
– Increase the rate of prompt or positive feedback to the target student (Hay, Nelson, & Hay, 1977, 1980)
• Behavior can be documented entire day
• One observer interrupts classroom space

Weaknesses of DBRs

Rater Influence
• Influence of raters not fully understood
• May be a less accurate estimate of student’s actual behavior during rating period
• History with student
• Sattler (2002) Research:
– Low reliability
– Scale issues
– Time delay between observation and recording

Limited Response Format
• Less sensitivity to change compared to systematic direct observation
• Same score given to student not displaying the behavior and student displaying behavior at a low frequency

Is this really new?
• No. Other names for DBRs include:
– Home-School Note
– Behavior Report Card
– Daily Progress Report
– Good Behavior Note
– Check-In Check-Out Card
– Performance-based behavioral recording

Whole-School Based Assessment Approach

School-Based Behavioral Assessment
• Tier I (primary level): assessment efforts are preventive and proactive indicators of performance
• Tier II (secondary level): assessment efforts focused on select group of students deemed at risk; progress monitoring
• Tier III (tertiary level): assessment focused on individual student; progress monitoring

Behavioral Assessment: Whole School Approach
• Use whole-school data to determine what, how, where, and when behaviors are occurring
• Proactive approach to determining potential problem areas and student concerns
• Assists with Special Education
– Behavior goals
– Progress monitoring

Behavioral Assessment: Whole School Approach
• Productive and effective school environment
– Clear expectations
– Common language
– Immediate conversations
– Communication within the school

School Site Example: Whole School Approach and DBRs

A.T. Allen Elementary School
• Cabarrus County
• K-5 Elementary School
• Full Title I school
• Demographics
– 54% free and reduced lunch
– 27% Hispanic population
– EC population: resource, speech, self-contained classroom (previous years)

Behavior Cards
• Created over 15 years ago
• Track student behavior
• Communication in school and with home
• Movement to ‘Positive Discipline’
• Based on administration and staff expectations (SIT team)

Early Version of Behavior Card
• Sent home weekly
• Students were to earn a point each hour of the day
• Different card at each grade level

Behavior Cards
• Adjusted over time
• Same card for entire school
• Creation of daily cards as an option
– Carbon copy

Behavior Cards to Responsibility Cards
• Need for change
– Change in staff
– ‘Buy in’ not present
– Inconsistent use of card in school
• Implementation of Positive Behavior Intervention & Support

Responsibility Cards

Responsibility Cards
• Matched expectations with PBIS expectations:
– Be Safe, Be Responsible, Be Respectful
• Added location column
– Assist with communication
– Data collection to choose intervention
  • Ex: bathroom vs. classroom
• Focus on area of need: more targeted
• Sent home daily for all students vs. weekly
• Communication
• Teacher ‘remembering’ incident

Classroom Application
[Video]

Daily Behavior Cards
• Increases in-school communication
– Student accountability in common areas and all classes
• Parent communication
– Track behavior for each hour
– Specific behavior noted
• Data driven decisions
– Individual student plans made
– Time of day
– Location
– Determine effectiveness of intervention

Parent Perspective
[Video]
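
The data-driven decisions listed for Daily Behavior Cards (time of day, location) come down to tallying the marks recorded on the cards. Below is a minimal Python sketch of that tally, assuming each mark is stored as an (hour block, location, expectation) record. The record layout, the `tally` helper, and every data value are invented for illustration; they are not A.T. Allen data or the school's actual procedure.

```python
from collections import Counter

# Hypothetical card marks: (hour block, location, expectation not met).
# These records are made up for illustration only.
marks = [
    ("8-9am", "classroom", "Be Respectful"),
    ("10-11am", "bathroom", "Be Safe"),
    ("10-11am", "classroom", "Be Responsible"),
    ("12-1pm", "cafeteria", "Be Safe"),
    ("10-11am", "bathroom", "Be Safe"),
]

def tally(records, field):
    """Count marks by one field: 0 = hour block, 1 = location, 2 = expectation."""
    return Counter(record[field] for record in records)

by_hour = tally(marks, 0)
by_location = tally(marks, 1)

# The hour block with the most marks is a candidate time for targeted support.
busiest_hour, busiest_count = by_hour.most_common(1)[0]

print(by_hour)
print(by_location)
print(busiest_hour, busiest_count)
```

Counts like these could feed the bar charts mentioned under Summarizing Data, for example marks per hour or marks per location such as bathroom vs. classroom.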
Daily Behavior Cards and DBRs Together
• Use data on card to target behavior
• Choose Direct Behavior Rating scale
– Match student needs
– Ease of teacher use
• Implement intervention
• Keep data on that one target behavior using DBR

Daily Behavior Cards and Direct Behavior Ratings Together
• Progress monitor on behavior
• Graph data
– Use data to make decision:
  • Discontinue intervention
  • Change intervention
  • Move to next Tier/Level
• Continue to use Daily Behavior Card throughout day

Key Points
• Clear definition of behavior
• Training/information for staff members involved
– Same language
– Same policy for rating
• Choices for re-teaching opportunities

Writing IEP Objectives
[Video]

Student Example
• Found target behavior
• Created DBR
• Implemented intervention
• Collected data
• Progress monitored
• Used data to make decision
• Continued progress monitoring

Example
• Target behavior: tantruming
• Clear definitions of mild, moderate, severe
• Tracking in all areas of the school
• Training on ratings given to needed staff members

[Bar chart: Beginning Data Collection. y-axis: Number of Incidents; x-axis: Target Behaviors (Non-Compliance, Left area, Tantruming, Crawling/hiding, Verbal Disruptive, Hanging onto object, Physical Aggressive)]

Graph DBR
[Bar chart: Frequency of Tantrums. y-axis: Number of Incidents; x-axis: Type of Tantrum (Mild, Medium, Severe); series: No Intervention, Intervention 1, Intervention 1 Continued]
Mild: Grunting, stomping on floor, swaying in class that results in being off-task and/or disrupting class
Medium: Refusal, screaming/yelling, crawling under desk and tables
Severe: Threats to safety: kicking, hitting, throwing objects, running, darting, physical aggression

What do the students say?
[Video]

Staff Insight
[Video]
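
The "averages per week" summary used for progress monitoring in the student example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the ratings use the 1-10 scale mentioned earlier (1 being no behavior observed), each week is labeled with the phase in effect, and all numbers are hypothetical rather than taken from the student's graph. The `weekly_averages` helper is likewise made up for this sketch.

```python
from statistics import mean

# Hypothetical daily DBR ratings on a 1-10 scale (1 = no behavior observed),
# one list per week, labeled with the phase in effect that week.
weeks = [
    ("No Intervention", [8, 7, 9, 8, 7]),
    ("Intervention 1", [6, 6, 5, 7, 5]),
    ("Intervention 1 Continued", [4, 5, 3, 4, 3]),
]

def weekly_averages(data):
    """Return (phase, average rating) per week, rounded to one decimal."""
    return [(phase, round(mean(ratings), 1)) for phase, ratings in data]

summary = weekly_averages(weeks)
for phase, average in summary:
    print(f"{phase}: {average}")

# Change from the first week to the latest week; a negative value means
# the target behavior was rated lower (displayed less) in the latest week.
change = round(summary[-1][1] - summary[0][1], 1)
print(change)
```

The team would still decide what size of change justifies discontinuing, changing, or continuing an intervention; the sketch only produces the numbers to graph and discuss.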
Administrative Support
[Video]

Questions/Comments…

Resources
• www.interventioncentral.org: This website offers an extensive resource on using behavior ratings in the Classroom Behavior Report Card Manual.
• Chafouleas, S.M., Riley-Tillman, T.C., & Sugai, G. (in press). Behavior Assessment and Monitoring in Schools. New York: Guilford Press.
• Crone, D.A., Horner, R.H., & Hawken, L.S. (2004). Responding to problem behavior in schools: The behavior education program. New York: Guilford Press.
• Jenson, W.R., Rhode, G., & Reavis, H.K. (1994). The Tough Kid Tool Box. Longmont, CO: Sopris West.
• Kelley, M.L. (1990). School Home Notes: Promoting Children’s Classroom Success. New York: Guilford Press.
• Shapiro, E.S., & Cole, C.L. (1994). Behavior change in the classroom: Self management interventions. New York: Guilford Press.

For More Information
• Amy Jablonski, A.T. Allen Elementary School, ajablons@cabarrus.k12.nc.us
• Leah Mills, A.T. Allen Elementary School, lmills@cabarrus.k12.nc.us
• Chafouleas, S., Riley-Tillman, T.C., & Sugai, G. (2007). School-Based Behavioral Assessment: Informing intervention and instruction. RILEYTILLMANT@ecu.edu