Appendix O

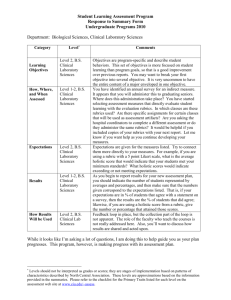

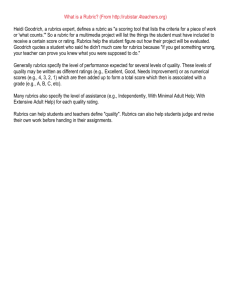

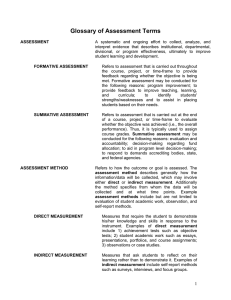

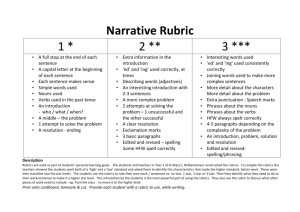

Assessment: Developing Learning Outcomes

Teaching Learning Assessment Cycle
• Establish Learning Goals
• Provide Learning Opportunities
• Assess Student Learning
• Use the Results

Establish Learning Goals
Meaningful learning goals should lead to the improvement of teaching and learning. Goals are set at three levels:
• Institution
• Program
• Course

Institution: UCCC’s Mission Statement
SUNY Ulster is a vibrant community of learners distinguished by academic excellence, collaboration, innovation, service and responsible use of resources. As a public, comprehensive two-year institution dedicated to providing affordable, accessible education, we work in an ever-changing environment to:
- Prepare students to transfer to four-year institutions
- Prepare students for success in college and in the workforce
- Provide enrichment and lifelong learning opportunities
- Augment learning through the integration and application of emerging technologies
- Prepare students to live and work in a global society
- Play an active role in economic development, and
- Enhance the quality of life for residents in Ulster County

Benefits of Learning Outcomes
• Help students understand the nature of the program or course and what skills or knowledge will be expected of them.
• Provide a guide to students so they are assured they have not missed any major content.
• Give structure to the instructor in lecture development: what to teach.
• Give structure to the instructor in exam or assignment development: link to goals.
• Ensure the student learning outcomes are linked to the college mission.
• Enable the public and four-year schools to understand what the program or course intends to accomplish.
• Most importantly, lead to improvement of teaching and curriculum development.

Developing Learning Goals:
• Meaningful learning goals will lead to improvement of teaching and learning.
• Goals in programs and courses should be interconnected.

Developing Learning Outcomes
• Learning outcomes should be developed collaboratively from research and reflection.

Collaborate:
• This ensures the integration of course outcomes with program outcomes.
• As many faculty as possible should be involved.

Research
• Internal Sources:
  – Mission statement
  – Current syllabi
  – Previous program reviews and surveys
• External Sources:
  – Associations
  – Advisory Councils
  – Other colleges
  – Program descriptions (websites)

Reflection:
• Key word: WHY.
• Why do you want your students to know what you put down as a learning outcome?

Middle States Recommended Discussion Topics:
• What is this course or discipline all about?
• What does the department aim to achieve with this program?
• What do we value about our discipline?
• What are the most important things students learn in this discipline?
• Why are those things important?
• What does our disciplinary association think students should learn?
• Why does the college offer this program?
• Why is it important that students study it?
• How will this experience prepare them for life after graduation?
• What do we expect of students in this program?
• What do we want students to get out of this program?
• How does this program relate to the college mission?
• How does it relate to other programs?
• What do our students do after graduation?
• How will students be different after completing the program?
• What will the student be able to perform after completing the program?
• What skills will the students acquire after completing the program?
• What indicators will be used to measure the student’s performance?

• Knowledge
• Thinking
• Attitudes

Knowledge
• Duplication of procedure, learning definitions, summarizing characteristics, understanding components.
• Memorization
• Not as stressed today as in previous decades

Examples of Knowledge Goals
• Explain the three goals of a macro economy and how they are measured.
• Summarize distinctive characteristics of various novelists
• Describe the … scientific model

Thinking
• Application of knowledge in a new context
• Analysis, or the ability to understand the relationships among the components of a complex concept
• Evaluation, or making informed judgments on new material through research and problem solving
• Synthesis, or the ability to theorize, generalize, reflect, and generate new hypotheses or new ideas
• Critical thinking, or seeking truth and the ability to question

Examples of Thinking Goals
• Explain a reaction
• Explain the impact
• Analyze errors
• Compare and contrast
• Explain why
• Judge the effectiveness
• Evaluate the validity
• Research and identify
• Choose the appropriate procedure
• Write a poem using..
• Theorize design
• Recognize
• Design and conduct…
• Identify a problem…

Attitudes
• Dispositions and values
• Appreciation of the subject
• Enjoyment of learning
• Metacognition
• Formation of opinions and values
• Development of a sense of ethics

Example of Attitude Outcomes
• Be intellectually curious
• Choose an ethical course of action
• Appreciate the merits of …
• Identify the strengths and weaknesses of others’ work
• Work independently
• Work in groups
• Develop leadership skills

Writing Learning Outcomes
• Avoid broad, nebulous terms. Students will:
  – Learn
  – Know
  – Understand
  – Become aware
  – Appreciate
  – Think critically

Action words help students understand what we want them to learn, and they make assessment easier:
• Describe the key characteristics of each planet in the solar system
• Make appropriate inferences and deductions from biological information
• Develop a graphic spreadsheet to support a decision
• Design a chemical experiment to test a hypothesis
• Systematically analyze and solve problems…
• Defend one’s views
• Present original interpretations…
• Critically evaluate the effectiveness…
• Apply basic problem solving skills…
• Conduct research

Assessment of the Learning Outcomes

Two Categories of Measures
• Direct measures provide evidence of whether or not the student has command of the subject matter.
• Indirect measures provide data related to the act of learning. They imply that the student has learned, for example through how the student perceives whether or not he or she has learned. Questionnaires and surveys are indirect.

Direct vs Indirect
• Direct evidence should answer the questions: What did the student learn? What did he or she not learn? It does not answer the questions: Why did the student learn it? Why didn’t the student learn it?
• Indirect evidence focuses on the learning process, for example comments about homework, lectures, or group work.

Choosing Evaluation Instruments: QUESTIONS TO ASK:
1. Is the evidence provided by the method linked to learning outcomes?
2. Is a standardized instrument appropriate for the learning goals of the institution?
3. Is the evaluation method comprehensive enough?
4. Are important learning outcomes measured by multiple means?
5. Do the test questions test what is desired to be learned?
6. Are the questions clear?
7. Do the questions elicit information useful for making improvements?
8. Does everyone interpret the response the same way?
9. Do the results make sense? Are the results corroborated by evidence?

Tools and techniques: Tests
Standardized vs Home grown
Quote from Middle States: Although a test created locally may not have the same statistical validity and reliability as a standardized instrument, its relevance to the specific learning goals in question may make it a more appropriate and more effective instrument.
A test blueprint is an outline that matches test items to the intended learning outcomes.

Rubrics
• A set of criteria used to assess papers, projects, performances, field experiences.
• Four Types:
  – Checklist
  – Rating Scale
  – Descriptive
  – Holistic

Checklist Rubrics
• A list of yes or no, or Did or Didn’t do, items
• Used primarily in lab or clinical settings

Rating Scale Rubric
• This is a checklist with a rating scale added to show the degree to which the things you’re looking for are present
• Uses terms like Outstanding, Very Good, Adequate, or Strongly Agree, Somewhat Agree, etc.

Descriptive Rubrics
• These give a description of what constitutes deficient, adequate, or exemplary work
• Or unacceptable, fair, or proficient work

Holistic Rubrics
• For larger-scale assessments, rating scale and descriptive rubrics may not be feasible, e.g. reading 1000 essays for placement.
• Holistic rubrics have short narrative descriptions of the characteristics of outstanding work, acceptable work, and unacceptable work

Other Assessment Tools
• Self reflection: engage in metacognition. Ask students to reflect on what they have learned. This gives insight into the learning process and helps students integrate what they have learned.
• Ratings and comments from internship supervisors
• Placement Rates
• Ad Hoc Surveys
• Focus Groups
• Portfolios

What is Middle States Looking For?
They will ask the fundamental questions:
• Is the Institution fulfilling its mission?
• Are institutional and program level goals clear to the public, students, faculty and staff?
• Are Institutional programs and resources organized and coordinated to achieve these goals?
• Is the Institution using assessment results to improve student learning and advance the institution?

Characteristics of Assessment Processes that meet Middle States Expectations
• Useful: lead to improvement
• Cost Effective: no need to start from scratch; use what is there
• Reasonably Accurate: is evidence clear and visible, both direct and indirect?
• Planned: assessment must be interrelated with the strategic plan and academic plan
• Organized, systemized and sustained: implement an ongoing system and review it

What Should Be Documented?
• Learning outcomes in programs and courses
• An assessment plan
• Assessment results
• Uses of results to show what is being changed towards improvement

Assessment: Using the Results To Improve Teaching and Learning

Three steps:
1. Choose a benchmark
2. Set a standard for exemplary, adequate and inadequate work
3. Set targets for the collective class or program

Choosing a Benchmark
What is a Benchmark?
• Benchmarking is the process of comparing the results of your test, essay, paper or other assignment against something.

Local Standards: Department members agree on what is a good grade on a specific exam. Having faculty involved in courses and exams leads to more attention paid to assessment.

External Standards: This is the use of disciplinary association standards. Usually there are tests required by the association. Certifications have required exams with established benchmarks, e.g. NCLEX.

Internal peer benchmark: The bell curve, or looking at how Psychology majors do on the economics test vs business majors. Or looking at all the students in the program: which ones succeed and why?

External peer benchmark: We compare Jane Doe’s 65 to other instructors’ or colleges’ 65.

Setting Standards:
• Choose the benchmark most appropriate for your course or program and set a specific, appropriate performance standard.
• Ask: what is an example of exemplary work?
• How does this differ from adequate and inadequate work?

Setting Targets for Students’ Collective Performance
What do you want the class, or the students in a program, to achieve as a whole?
• Express targets as a percentage. Ex: 90 percent of students earn at least a minimally adequate rating
• Or 95 percent of the students will earn a 65% on all exams.

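
A percentage target of this kind is easy to check once scores are collected. The following is a minimal sketch, not a prescribed method: the function names and the sample scores are hypothetical, and it assumes "earn a 65%" means a score of 65 or higher.

```python
def share_meeting_standard(scores, standard):
    """Return the fraction of scores at or above the standard."""
    if not scores:
        raise ValueError("scores must not be empty")
    return sum(1 for s in scores if s >= standard) / len(scores)


def target_met(scores, standard, target_share):
    """True when at least target_share of students reach the standard."""
    return share_meeting_standard(scores, standard) >= target_share


# Hypothetical exam scores for one class section (illustrative, not real data).
exam_scores = [72, 65, 58, 90, 81, 67, 49, 77, 88, 65]

share = share_meeting_standard(exam_scores, standard=65)
print(f"{share:.0%} of students scored at least 65")
print("95% target met:", target_met(exam_scores, standard=65, target_share=0.95))
```

In this made-up section, 8 of 10 students reach the standard, so the 95 percent target is not met. The same two functions work for rubric ratings if the ratings are first mapped to numbers.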
Summarizing the Results
Five ways to summarize results:
• Tallies
• Percentages
• Aggregates: Scores
• Averages
• Qualitative Summaries

Using The Results
• Identify areas of strengths and weaknesses
• Compare results with what you wanted to hear
• Create strategy for improving the learning experience
• Base changes on real data
• Empower faculty to make those changes
• Articulate exactly who is responsible for the changes
• Share the results and actions with the whole institution
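
Three of the summaries listed under Summarizing the Results (tallies, percentages, and averages) can be sketched in a few lines. This is an illustrative example only: the rating labels, the sample data, and the helper names are assumptions, not part of any required procedure.

```python
from collections import Counter
from statistics import mean

# Hypothetical rubric ratings and exam scores (illustrative, not real data).
ratings = ["exemplary", "adequate", "adequate", "inadequate",
           "exemplary", "adequate", "adequate", "adequate"]
scores = [88, 71, 65, 52, 93, 70, 68, 75]


def tally(items):
    """Count how many students received each rating."""
    return Counter(items)


def percentages(items):
    """Express each rating's count as a percentage of all students."""
    total = len(items)
    return {level: 100 * count / total for level, count in Counter(items).items()}


print("Tallies:", dict(tally(ratings)))
print("Percentages:", percentages(ratings))
print("Average score:", mean(scores))
```

Tallies and percentages suit rubric ratings, while averages only make sense for numeric scores. Qualitative summaries still need a human reader.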