Basic Statistics II - Asian School of Business

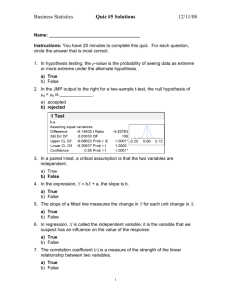

Basic Statistics II
Biostatistics, MHA, CDC, Jul 09
Prof. KG Satheesh Kumar
Asian School of Business

Frequency Distribution and Probability Distribution
• Frequency Distribution: Plot of frequency along the y-axis and the variable along the x-axis
• A histogram is an example
• Probability Distribution: Plot of probability along the y-axis and the variable along the x-axis
• Both have the same shape
• Properties of probability distributions
• Probability is always between 0 and 1
• Sum of probabilities must be 1

Theoretical Probability Distributions
• For a discrete variable we have a discrete probability distribution
  – Binomial Distribution
  – Poisson Distribution
  – Geometric Distribution
  – Hypergeometric Distribution
• For a continuous variable we have a continuous probability distribution
  – Uniform (rectangular) Distribution
  – Exponential Distribution
  – Normal Distribution

The Normal Distribution
• If a random variable X is affected by many independent causes, none of which is overwhelmingly large, the probability distribution of X closely follows the normal distribution. Then X is called a normal variate and we write X ~ N(μ, σ²), where μ is the mean and σ² is the variance
• A normal pdf is completely defined by its mean, μ, and variance, σ². The square root of the variance is called the standard deviation, σ.
• If several independent random variables are normally distributed, their sum will also be normally distributed with mean equal to the sum of the individual means and variance equal to the sum of the individual variances.

The Normal pdf
The area under any pdf between two given values of X is the probability that X falls between these two values

Standard Normal Variate, Z
• SNV, Z is the normal random variable with mean 0 and standard deviation 1
• Tables are available for Standard Normal Probabilities
• X and Z are connected by: Z = (X - μ) / σ and X = μ + σZ
• The area under the X curve between X1 and X2 is equal to the area under the Z curve between Z1 and Z2.
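
The link between X and Z can be checked numerically. The following is a minimal sketch, not part of the original slides: the mean, standard deviation and interval endpoints are made-up illustrative values, and it relies on `statistics.NormalDist` from the Python standard library (Python 3.8 or later).

```python
from statistics import NormalDist

# Illustrative (made-up) parameters for a normal variate X ~ N(mu, sigma^2).
mu, sigma = 50.0, 10.0
x1, x2 = 45.0, 65.0

# Standardise the endpoints: Z = (X - mu) / sigma.
z1 = (x1 - mu) / sigma
z2 = (x2 - mu) / sigma

# Area under the X curve between x1 and x2.
dist_x = NormalDist(mu, sigma)
area_x = dist_x.cdf(x2) - dist_x.cdf(x1)

# Area under the standard normal (Z) curve between z1 and z2.
dist_z = NormalDist(0.0, 1.0)
area_z = dist_z.cdf(z2) - dist_z.cdf(z1)

print(f"z1 = {z1:.2f}, z2 = {z2:.2f}")
print(f"P({x1} < X < {x2}) = {area_x:.4f}")
print(f"P({z1:.2f} < Z < {z2:.2f}) = {area_z:.4f}")
```

Both areas agree, and they match what a table lookup gives for these assumed values: the standard normal table entries for z = 0.50 and z = 1.50 are 0.1915 and 0.4332, which add up to 0.6247.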

Standard Normal Probabilities (Table of z distribution)
The z-value is on the left and top margins and the probability (shaded area in the diagram, i.e. the area between 0 and z) is in the body of the table

| z | 0.00 | 0.01 | 0.02 | 0.03 | 0.04 | 0.05 | 0.06 | 0.07 | 0.08 | 0.09 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0.0 | 0.0000 | 0.0040 | 0.0080 | 0.0120 | 0.0160 | 0.0199 | 0.0239 | 0.0279 | 0.0319 | 0.0359 |
| 0.1 | 0.0398 | 0.0438 | 0.0478 | 0.0517 | 0.0557 | 0.0596 | 0.0636 | 0.0675 | 0.0714 | 0.0753 |
| 0.2 | 0.0793 | 0.0832 | 0.0871 | 0.0910 | 0.0948 | 0.0987 | 0.1026 | 0.1064 | 0.1103 | 0.1141 |
| 0.3 | 0.1179 | 0.1217 | 0.1255 | 0.1293 | 0.1331 | 0.1368 | 0.1406 | 0.1443 | 0.1480 | 0.1517 |
| 0.4 | 0.1554 | 0.1591 | 0.1628 | 0.1664 | 0.1700 | 0.1736 | 0.1772 | 0.1808 | 0.1844 | 0.1879 |
| 0.5 | 0.1915 | 0.1950 | 0.1985 | 0.2019 | 0.2054 | 0.2088 | 0.2123 | 0.2157 | 0.2190 | 0.2224 |
| 0.6 | 0.2257 | 0.2291 | 0.2324 | 0.2357 | 0.2389 | 0.2422 | 0.2454 | 0.2486 | 0.2517 | 0.2549 |
| 0.7 | 0.2580 | 0.2611 | 0.2642 | 0.2673 | 0.2704 | 0.2734 | 0.2764 | 0.2794 | 0.2823 | 0.2852 |
| 0.8 | 0.2881 | 0.2910 | 0.2939 | 0.2967 | 0.2995 | 0.3023 | 0.3051 | 0.3078 | 0.3106 | 0.3133 |
| 0.9 | 0.3159 | 0.3186 | 0.3212 | 0.3238 | 0.3264 | 0.3289 | 0.3315 | 0.3340 | 0.3365 | 0.3389 |
| 1.0 | 0.3413 | 0.3438 | 0.3461 | 0.3485 | 0.3508 | 0.3531 | 0.3554 | 0.3577 | 0.3599 | 0.3621 |
| 1.1 | 0.3643 | 0.3665 | 0.3686 | 0.3708 | 0.3729 | 0.3749 | 0.3770 | 0.3790 | 0.3810 | 0.3830 |
| 1.2 | 0.3849 | 0.3869 | 0.3888 | 0.3907 | 0.3925 | 0.3944 | 0.3962 | 0.3980 | 0.3997 | 0.4015 |
| 1.3 | 0.4032 | 0.4049 | 0.4066 | 0.4082 | 0.4099 | 0.4115 | 0.4131 | 0.4147 | 0.4162 | 0.4177 |
| 1.4 | 0.4192 | 0.4207 | 0.4222 | 0.4236 | 0.4251 | 0.4265 | 0.4279 | 0.4292 | 0.4306 | 0.4319 |
| 1.5 | 0.4332 | 0.4345 | 0.4357 | 0.4370 | 0.4382 | 0.4394 | 0.4406 | 0.4418 | 0.4429 | 0.4441 |
| 1.6 | 0.4452 | 0.4463 | 0.4474 | 0.4484 | 0.4495 | 0.4505 | 0.4515 | 0.4525 | 0.4535 | 0.4545 |
| 1.7 | 0.4554 | 0.4564 | 0.4573 | 0.4582 | 0.4591 | 0.4599 | 0.4608 | 0.4616 | 0.4625 | 0.4633 |
| 1.8 | 0.4641 | 0.4649 | 0.4656 | 0.4664 | 0.4671 | 0.4678 | 0.4686 | 0.4693 | 0.4699 | 0.4706 |
| 1.9 | 0.4713 | 0.4719 | 0.4726 | 0.4732 | 0.4738 | 0.4744 | 0.4750 | 0.4756 | 0.4761 | 0.4767 |
| 2.0 | 0.4772 | 0.4778 | 0.4783 | 0.4788 | 0.4793 | 0.4798 | 0.4803 | 0.4808 | 0.4812 | 0.4817 |
| 2.1 | 0.4821 | 0.4826 | 0.4830 | 0.4834 | 0.4838 | 0.4842 | 0.4846 | 0.4850 | 0.4854 | 0.4857 |
| 2.2 | 0.4861 | 0.4864 | 0.4868 | 0.4871 | 0.4875 | 0.4878 | 0.4881 | 0.4884 | 0.4887 | 0.4890 |
| 2.3 | 0.4893 | 0.4896 | 0.4898 | 0.4901 | 0.4904 | 0.4906 | 0.4909 | 0.4911 | 0.4913 | 0.4916 |
| 2.4 | 0.4918 | 0.4920 | 0.4922 | 0.4925 | 0.4927 | 0.4929 | 0.4931 | 0.4932 | 0.4934 | 0.4936 |
| 2.5 | 0.4938 | 0.4940 | 0.4941 | 0.4943 | 0.4945 | 0.4946 | 0.4948 | 0.4949 | 0.4951 | 0.4952 |
| 2.6 | 0.4953 | 0.4955 | 0.4956 | 0.4957 | 0.4959 | 0.4960 | 0.4961 | 0.4962 | 0.4963 | 0.4964 |
| 2.7 | 0.4965 | 0.4966 | 0.4967 | 0.4968 | 0.4969 | 0.4970 | 0.4971 | 0.4972 | 0.4973 | 0.4974 |
| 2.8 | 0.4974 | 0.4975 | 0.4976 | 0.4977 | 0.4977 | 0.4978 | 0.4979 | 0.4979 | 0.4980 | 0.4981 |
| 2.9 | 0.4981 | 0.4982 | 0.4982 | 0.4983 | 0.4984 | 0.4984 | 0.4985 | 0.4985 | 0.4986 | 0.4986 |
| 3.0 | 0.4987 | 0.4987 | 0.4987 | 0.4988 | 0.4988 | 0.4989 | 0.4989 | 0.4989 | 0.4990 | 0.4990 |
| 3.1 | 0.4990 | 0.4991 | 0.4991 | 0.4991 | 0.4992 | 0.4992 | 0.4992 | 0.4992 | 0.4993 | 0.4993 |
| 3.2 | 0.4993 | 0.4993 | 0.4994 | 0.4994 | 0.4994 | 0.4994 | 0.4994 | 0.4995 | 0.4995 | 0.4995 |
| 3.3 | 0.4995 | 0.4995 | 0.4995 | 0.4996 | 0.4996 | 0.4996 | 0.4996 | 0.4996 | 0.4996 | 0.4997 |
| 3.4 | 0.4997 | 0.4997 | 0.4997 | 0.4997 | 0.4997 | 0.4997 | 0.4997 | 0.4997 | 0.4997 | 0.4998 |

Illustration
Q. A tube light has a mean life of 4500 hours with a standard deviation of 1500 hours. In a lot of 1000 tubes, estimate the number of tubes lasting between 4000 and 6000 hours.
A. P(4000 < X < 6000) = P(-1/3 < Z < 1) = 0.1306 + 0.3413 = 0.4719. Hence the probable number of tubes in a lot of 1000 lasting 4000 to 6000 hours is 472.

Illustration
Q. Cost of a certain procedure is estimated to average Rs.25,000 per patient. Assuming normal distribution and standard deviation of Rs.5000, find a value such that 95% of the patients pay less than that.
A. Using tables, P(Z < Z1) = 0.95 gives Z1 = 1.645. Hence X1 = 25000 + 1.645 x 5000 = Rs.33,225. 95% of the patients pay less than Rs.33,225.

Sampling Basics
• Population or Universe is the collection of all units of interest. E.g.: Households of a specific type in a given city at a certain time.
  Population may be finite or infinite
• Sampling Frame is the list of all the units in the population with identifications like serial numbers, house numbers, telephone numbers etc.
• Sample is a set of units drawn from the population according to some specified procedure
• Unit is an element or group of elements on which observations are made. E.g. a person, a family, a school, a book, a piece of furniture etc.

Census Vs Sampling
• Census
  – Thought to be accurate and reliable, but often not so if the population is large
  – More resources (money, time, manpower)
  – Unsuitable for destructive tests
• Sampling
  – Less resources
  – Highly qualified and skilled persons can be used
  – Sampling error, which can be reduced using a large and representative sample

Sampling Methods
• Probability Sampling (Random Sampling)
  – Simple Random Sampling
  – Systematic Random Sampling
  – Stratified Random Sampling
  – Cluster Sampling (Single-stage, Multi-stage)
• Non-probability Sampling
  – Convenience Sampling
  – Judgment Sampling
  – Quota Sampling

Limitations of Non-Random Sampling
• Selection does not ensure a known chance that a unit will be selected (i.e. non-representative)
• Inaccurate in view of the selection bias
• Results cannot be used for generalisation because inferential statistics requires probability sampling for valid conclusions
• Useful for pilot studies and exploratory research

Sampling Distribution and Standard Error of the Mean
• The sampling distribution of x̄ is the probability distribution of all possible values of x̄ for a given sample size n taken from the population.
• According to the Central Limit Theorem, for large enough sample size n, the sampling distribution is approximately normal with mean μ and standard deviation σ/√n. This standard deviation is called the standard error of the mean.
• CLT holds for non-normal populations also and states: For large enough n, x̄ ~ N(μ, σ²/n)

Illustration
Q. When sampling from a population with SD 55, using a sample size of 150, what is the probability that the sample mean will be at least 8 units away from the population mean?
A. Standard Error of the mean, SE = 55/sqrt(150) = 4.4907. Hence 8 units = 1.7815 SE. Area within 1.7815 SE on both sides of the mean = 2 * 0.4625 = 0.925. Hence required probability = 1 - 0.925 = 0.075

Illustration
Q. An economist wishes to estimate the average family income in a certain population. The population SD is known to be $4,500 and the economist uses a random sample of size 225. What is the probability that the sample mean will fall within $800 of the population mean?

Point and Interval Estimation
• The value of an estimator (see next slide) obtained from a sample can be used to estimate the value of the population parameter. Such an estimate is called a point estimate.
• This is a 50:50 estimate, in the sense that the actual parameter value is equally likely to be on either side of the point estimate.
• A more useful estimate is the interval estimate, where an interval is specified along with a measure of confidence (90%, 95%, 99% etc.)
• The interval estimate with its associated measure of confidence is called a confidence interval.
• A confidence interval is a range of numbers believed to include the unknown population parameter, with a certain level of confidence

Estimators
• Population parameters (μ, σ², p) and Sample Statistics (x̄, s², ps)
• An estimator of a population parameter is a sample statistic used to estimate the parameter
• Statistic x̄ is an estimator of parameter μ
• Statistic s² is an estimator of parameter σ²
• Statistic ps is an estimator of parameter p

Illustration
Q. A wine importer needs to report the average percentage of alcohol in bottles of French wine. From experience with previous kinds of wine, the importer believes the population SD is 1.2%. The importer randomly samples 60 bottles of the new wine and obtains a sample mean of 9.3%.
Find the 90% confidence interval for the average percentage of alcohol in the population.
Answer: Standard Error = 1.2%/sqrt(60) = 0.1549%. For a 90% confidence interval, Z = 1.645. Hence the margin of error = 1.645 * 0.1549% = 0.2548%. Hence the 90% confidence interval is 9.3% +/- 0.25%, i.e. about (9.05%, 9.55%).

More Sampling Distributions
• Sampling Distribution is the probability distribution of a given test statistic (e.g. Z), which is a numerical quantity calculated from a sample statistic
• Sampling distribution depends on the distribution of the population, the statistic being considered and the sample size
• Distribution of Sample Mean: Z or t distribution
• Distribution of Sample Proportion: Z (large sample)
• Distribution of Sample Variance: Chi-square distribution

The t-distribution
• The t-distribution is also bell-shaped and very similar to the Z(0,1) distribution
• Its mean is 0 and variance is df/(df-2), for df > 2
• df = degrees of freedom = n-1, where n = sample size
• For large sample size, t and Z are nearly identical
• For small n, the variance of t is larger than that of Z and hence the tails are wider, indicating the uncertainty introduced by the unknown population SD or smaller sample size n

Illustration
Q. A large drugstore wants to estimate the average weekly sales for a brand of soap. A random sample of 13 weeks gives the following numbers: 123, 110, 95, 120, 87, 89, 100, 105, 98, 88, 75, 125, 101. Determine the 90% confidence interval for average weekly sales.
A. Sample mean = 101.23 and Sample SD = 15.13. From the t-table, the value for 90% confidence at df = 12 is t = 1.782. Hence Margin of Error = 1.782 * 15.13/sqrt(13) = 7.48. The 90% confidence interval is (93.75, 108.71)

Chi-Square Distribution
• Chi-square distribution is the probability distribution of the sum of several independent squared Z variables
• It has a df parameter associated with it (like the t distribution).
  The mean is df and the variance is 2df
• Being a sum of squares, chi-square cannot be negative and hence the distribution curve is entirely on the positive side, skewed to the right.

Confidence Interval for population variance using chi-square distribution
Q. A random sample of 30 gives a sample variance of 18,540 for a certain variable. Give a 95% confidence interval for the population variance.
A. Point estimate for population variance = 18,540. Given df = 29, Excel gives chi-square values of 45.7 for a right-tail area of 2.5% and 16.0 for a right-tail area of 97.5%. Hence for the population variance, the lower limit of the confidence interval = 18540 * 29/45.7 = 11,765 and the upper limit of the confidence interval = 18540 * 29/16.0 = 33,604

Chi-Square Test for Goodness of Fit
• A goodness-of-fit test is a statistical test of how well sample data support an assumption about the distribution of a population
• The chi-square statistic used is Χ² = ∑(O-E)²/E, where O is the observed value and E the expected value
• The above value is then compared with the critical value (obtained from a table or using Excel) for the given df and the required level of significance, α (1% or 5%)

Illustration
Q. A company comes out with a new watch and wants to find out whether people have special preferences for colour or whether all four colours under consideration are equally preferred. A random sample of 80 prospective buyers indicated preferences as follows: 12, 40, 8, 20. Is there a colour preference at 1% significance?
A. Assuming no preference, the expected values would all be 20.
Hence the chi-square value is 64/20 + 400/20 + 144/20 + 0 = 30.4. For df = 3 and 1% significance (right-tail area), the critical value is 11.3. The computed value of 30.4 is far greater than 11.3 and hence deep in the rejection region. So we reject the assumption of no colour preference.

Q. The following data is about the births of newborn babies on various days of the week during the past one year in a hospital. Can we assume that birth is independent of the day of the week? Sun: 116, Mon: 184, Tue: 148, Wed: 145, Thu: 153, Fri: 150, Sat: 154 (Total: 1050)
Ans: Assuming independence, the expected values would all be 1050/7 = 150. Hence the chi-square value is 34²/150 + 34²/150 + 2²/150 + 5²/150 + 3²/150 + 0²/150 + 4²/150 = 2366/150 = 15.77. For df = 6 and 5% significance (right-tail area), the critical value is 12.6. The computed value of 15.77 is greater than the critical value of 12.6 and hence falls in the rejection region. So we reject the assumption of independence.

Correlation
• Correlation refers to the concomitant variation between two variables in such a way that change in one is associated with a change in the other
• The statistical technique used to analyse the strength and direction of the above association between two variables is called correlation analysis

Correlation and Causation
• Even if an association is established between two variables, no cause-effect relationship is implied
• Association between x and y may be looked upon as:
  – x causes y
  – y causes x
  – x and y influence each other (mutual influence)
  – x and y are both influenced by z, v (influence of third variable)
  – due to chance (spurious association)
• Hence caution is needed while interpreting correlation

Types of Correlations
• Positive (direct) and negative (inverse)
  – Positive: direction of change is the same
  – Negative: direction of change is opposite
• Linear and non-linear
  – Linear: changes are in a constant ratio
  – Non-linear: ratio of change is varying
• Simple, Partial and Multiple
  – Simple: Only two variables are involved
  – Partial: There may be third and other variables, but they are kept constant
  – Multiple: Association of multiple variables considered simultaneously

Scatter Diagrams
[Figure: scatter diagrams illustrating correlation coefficients r = 1, r = -0.94, r = -0.54, r = 0.42, r = 0.85 and r = 0.17]

Correlation Coefficient
• Correlation coefficient (r) indicates the strength and direction of association
• The value of r is between -1 and +1
  – -1: perfect negative correlation
  – +1: perfect positive correlation
  – Above 0.75: Very high correlation
  – 0.50 to 0.75: High correlation
  – 0.25 to 0.50: Low correlation
  – Below 0.25: Very low correlation

Methods of Correlation Analysis
• Scatter Diagram
  – A quick approximate visual idea of association
• Karl Pearson’s Coefficient of Correlation
  – For numeric data measured on interval or ratio scale
  – r = Cov(x,y) / (SDx SDy)
• Spearman’s Rank Correlation
  – For ordinal (rank) data
  – R = 1 – 6 * Sum of Squared Differences of Ranks / [n(n² - 1)]
• Method of Least Squares
  – r² = bxy * byx, i.e. product of regression coefficients

Karl Pearson Correlation Coefficient (Product-Moment Correlation)
• r = Covariance(x,y) / (SD of x * SD of y)
• Recall: n Var(X) = SSxx, n Var(Y) = SSyy and n Cov(X,Y) = SSxy
• Thus r² = Cov²(X,Y) / [Var(X) Var(Y)] = SSxy² / (SSxx * SSyy)
Note: r² is called the coefficient of determination

Sample Problem
The following data refers to two variables, promotional expense (Rs. Lakhs) and sales (‘000 units), collected in the context of a promotional study. Calculate the correlation coefficient.
Promo: 7, 10, 9, 4, 11, 5, 3
Sales: 12, 14, 13, 5, 15, 7, 4

| Promo (X) | Sales (Y) | X - Ave(X) | Y - Ave(Y) | Sxy | Sxx | Syy |
|---|---|---|---|---|---|---|
| 7 | 12 | 0 | 2 | 0 | 0 | 4 |
| 10 | 14 | 3 | 4 | 12 | 9 | 16 |
| 9 | 13 | 2 | 3 | 6 | 4 | 9 |
| 4 | 5 | -3 | -5 | 15 | 9 | 25 |
| 11 | 15 | 4 | 5 | 20 | 16 | 25 |
| 5 | 7 | -2 | -3 | 6 | 4 | 9 |
| 3 | 4 | -4 | -6 | 24 | 16 | 36 |
| Ave(X) = 7 | Ave(Y) = 10 | | | SSxy = 83 | SSxx = 58 | SSyy = 124 |

Coefficient of Determination, r-squared = 83*83 / (58*124) = 0.95787
Coefficient of Correlation, r = square root of 0.95787 = 0.978708

Spearman’s Rank Correlation Coefficient
• The ranks of 15 students in two subjects A and B are given below.
Find Spearman’s Rank Correlation Coefficient: (1,10); (2,7); (3,2); (4,6); (5,4); (6,8); (7,3); (8,1); (9,11); (10,15); (11,9); (12,5); (13,14); (14,12) and (15,13)
Solution: SSD of Ranks = 81+25+1+4+1+4+16+49+4+25+4+49+1+4+4 = 272
R = 1 – 6*272/(14*15*16) = 0.5143, where 14*15*16 = n(n² - 1) for n = 15
Hence there is a moderate degree of positive correlation between the ranks of students in the two subjects

Regression Analysis
• Statistical technique for expressing the relationship between two (or more) variables in the form of an equation (regression equation)
• Dependent or response or predicted variable
• Independent or regressor or predictor variable
• Used for prediction or forecasting

Types of Regression Models
• Simple and Multiple Regression Models
  – Simple: Only one independent variable
  – Multiple: More than one independent variable
• Linear and Nonlinear Regression Models
  – Linear: Value of response variable changes in proportion to the change in predictor so that Y = a + bX

Simple Linear Regression Model
Y = a + bX, where a and b are constants to be determined using the given data
Note: More strictly, we may say: Y = ayx + byx X
To determine a and b, solve the following two equations (called “normal equations”):
∑Y = a n + b ∑X ------- (1)
∑XY = a ∑X + b ∑X² ------- (2)

Calculating Regression Coeff
• Instead of solving the simultaneous equations one may directly use formulae
• For Y = a + bX, i.e. regression of Y on X
  – byx = SSxy / SSxx
  – ayx = Ave(Y) – byx * Ave(X)
• For X = a + bY form (regression of X on Y)
  – bxy = SSxy / SSyy
  – axy = Ave(X) – bxy * Ave(Y)

Example
For the earlier problem of Sales (dependent variable) Vs Promotional expenses (independent variable), set up the simple linear regression model and predict the sales when promotional spending is Rs.13 lakhs
Solution: We need to find a and b in Y = a + bX
b = SSxy / SSxx = 83/58 = 1.4310
a = Ave(Y) - b * Ave(X) = 10 – 1.4310*7 = -0.017
Hence the regression equation is Y = -0.017 + 1.4310X
For X = 13 Lakhs, we get Y = 18.59, i.e. 18,590 units of predicted sales

Linear Regression using Excel
[Chart: Excel scatter plot of the promo-sales data with fitted trendline y = 1.431x - 0.0172, R² = 0.9579]

Properties of Regression Coeff
• Coefficient of determination r² = byx * bxy
• If one regression coefficient is greater than one in magnitude, the other is less than one, because r² lies between 0 and 1
• Both regression coeff must have the same sign, which is also the sign of the correlation coeff r
• The regression lines intersect at the means of X and Y
• Each regression coefficient gives the slope of the respective regression line

Coefficient of Determination
• Recall:
  – SSyy = Sum of squared deviations of Y from the mean
• Let us define
  – SSR as the sum of squared deviations of estimated (using the regression equation) values of Y from the mean
  – SSE as the sum of squared errors (error means actual Y - estimated Y)
• It can be shown that:
  – SSyy = SSR + SSE, i.e. Total Variation = Explained Variation + Unexplained (error) Variation
• r² = SSR/SSyy = Explained Variation / Total Variation
• Thus r² represents the proportion of the total variability of the dependent variable y that is accounted for or explained by the independent variable x

Coefficient of Determination for Statistical Validity of Promo-Sales Regression Model

| Promo (X) | Sales (Y) | Ye = -0.017 + 1.4310X | Squared deviation of Ye from Mean | Squared deviation of Ye from Y | Squared deviation of Y from Mean |
|---|---|---|---|---|---|
| 7 | 12 | 10.00 | 0.00 | 4.00 | 4 |
| 10 | 14 | 14.29 | 18.43 | 0.09 | 16 |
| 9 | 13 | 12.86 | 8.19 | 0.02 | 9 |
| 4 | 5 | 5.71 | 18.43 | 0.50 | 25 |
| 11 | 15 | 15.72 | 32.76 | 0.52 | 25 |
| 5 | 7 | 7.14 | 8.19 | 0.02 | 9 |
| 3 | 4 | 4.28 | 32.76 | 0.08 | 36 |
| Ave(X) = 7 | Ave(Y) = 10 | | SSR = 118.77 | SSE = 5.22 | SSyy = 124 |

Coefficient of determination, r-squared = 118.77/124 = 0.957824
Thus 96% of the variation in sales is explained by promo expenses
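
The decomposition SSyy = SSR + SSE can be reproduced for the promo-sales data with a few lines of code. This is a minimal sketch in plain Python rather than the Excel workflow used in the slides; the variable names are illustrative choices, not from the original.

```python
# Promo-sales data from the worked example.
promo = [7, 10, 9, 4, 11, 5, 3]      # X: promotional expense (Rs. lakhs)
sales = [12, 14, 13, 5, 15, 7, 4]    # Y: sales ('000 units)

n = len(promo)
mean_x = sum(promo) / n
mean_y = sum(sales) / n

# Sums of squares and cross-products about the means.
ss_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(promo, sales))
ss_xx = sum((x - mean_x) ** 2 for x in promo)
ss_yy = sum((y - mean_y) ** 2 for y in sales)

# Regression of Y on X: slope and intercept.
b = ss_xy / ss_xx
a = mean_y - b * mean_x

# Estimated Y values, explained variation (SSR) and error variation (SSE).
fitted = [a + b * x for x in promo]
ssr = sum((ye - mean_y) ** 2 for ye in fitted)
sse = sum((y - ye) ** 2 for y, ye in zip(sales, fitted))

r_squared = ssr / ss_yy

print(f"Y = {a:.4f} + {b:.4f} X")
print(f"SSR = {ssr:.2f}, SSE = {sse:.2f}, SSyy = {ss_yy:.0f}")
print(f"r-squared = {r_squared:.5f}")
print(f"Predicted sales at X = 13: {a + b * 13:.2f}")
```

Without intermediate rounding, r-squared comes out at about 0.95787, the same value obtained earlier from SSxy² / (SSxx * SSyy); the slide's 0.957824 differs slightly only because it divides the rounded SSR of 118.77 by 124.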