Data Mining - BYU Computer Science

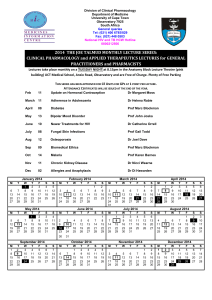

advertisement

Olfa Nasraoui

Dept. of Computer Engineering & Computer Science

Speed School of Engineering, University of Louisville

Contact e-mail: olfa.nasraoui@louisville.edu

This work is supported by NSF CAREER Award IIS-0431128, NASA Grant No. AISR-03-0077-0139 issued through the Office of Space Sciences, NSF Grant IIS-0431128, Kentucky Science & Engr. Foundation, and a grant from NSTC via the US Navy.

Outline of talk

• Data Mining Background
• Mining Footprints Left Behind by Surfers on the Web
  • Web Usage Mining: WebKDD process, Profiling & Personalization
• Mining Illegal Contraband Exchanges on Peer-to-Peer Networks
• Mining Coronal Loops Created from Hot Plasma Eruptions on the Surface of the Sun

Too Much Data!

There is often information “hidden” in the data that is not always evident.
Human analysts may take weeks to discover useful information.
Much of the data is never analyzed at all.

[Figure: The Data Gap. Total new disk storage (TB) since 1995 versus the number of analysts, 1995 to 1999; the vertical axis runs from 0 to 4,000,000.]

From: R. Grossman, C. Kamath, V. Kumar, “Data Mining for Scientific and Engineering Applications”

Data Mining

Many definitions:
• Non-trivial extraction of implicit, previously unknown, and potentially useful information from data
• Exploration & analysis, by automatic or semi-automatic means, of large quantities of data in order to discover meaningful patterns

Typical DM tasks: clustering, classification, association rule mining

Applications: wherever there is data: business, World Wide Web (content, structure, usage), biology, medicine, astronomy, social networks, e-learning, images, etc.

Introduction

Information overload: there is too much information to sift or browse through in order to find the desired information.
Most information on the Web is actually irrelevant to a particular user.
This is what motivated interest in techniques for Web personalization.
As they surf a website, users leave a wealth of historic data about which pages they have viewed, which choices they have made, etc.
Web Usage Mining: a branch of Web Mining (itself a branch of data mining) that aims to discover interesting patterns from Web usage data (typically Web access log data/clickstreams).

Classical Knowledge Discovery Process For Web Usage Mining

Source of data: Web clickstreams that get recorded in Web log files (date, time, IP address/cookie, URL accessed, etc.)

Goal: extract interesting user profiles by categorizing user sessions into groups or clusters:
• Profile 1: URLs a, b, c (sessions in profile 1)
• Profile 2: URLs x, y, z, w (sessions in profile 2)
• …etc
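Before sessions can be clustered into profiles, raw log records must be grouped into sessions. The following is a minimal sketch of that step, not the preprocessing used in this work: it assumes each record has already been parsed into a `(timestamp, client_id, url)` tuple, where `client_id` stands for the IP address or cookie, and it assumes a 30-minute inactivity timeout. The function name `sessionize` and the timeout value are illustrative.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Assumed inactivity gap that closes a session (illustrative value).
TIMEOUT = timedelta(minutes=30)

def sessionize(records, timeout=TIMEOUT):
    """Split (timestamp, client_id, url) records into per-client sessions.

    A new session starts whenever the same client is idle for longer
    than `timeout`. Returns a list of URL lists, one per session.
    """
    by_client = defaultdict(list)
    for ts, client, url in records:
        by_client[client].append((ts, url))

    sessions = []
    for hits in by_client.values():
        hits.sort()  # chronological order for this client
        current = [hits[0][1]]
        for (prev_ts, _), (ts, url) in zip(hits, hits[1:]):
            if ts - prev_ts > timeout:  # idle too long: close the session
                sessions.append(current)
                current = []
            current.append(url)
        sessions.append(current)
    return sessions

t0 = datetime(2005, 1, 1, 9, 0)
log = [
    (t0, "1.2.3.4", "/a"),
    (t0 + timedelta(minutes=5), "1.2.3.4", "/b"),
    (t0 + timedelta(hours=2), "1.2.3.4", "/c"),
    (t0, "5.6.7.8", "/x"),
]
print(sessionize(log))  # [['/a', '/b'], ['/c'], ['/x']]
```

Each resulting URL list can then be encoded as a binary session vector for clustering.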


Classical Knowledge Discovery Process For Web Usage Mining

Complete KDD process:
1. Preprocessing: selecting and cleaning data (result = session data)
2. Data Mining (Learning Phase):
   • Clustering algorithm to categorize sessions into usage categories/modes/profiles
   • Frequent Itemset Mining algorithms to discover frequent usage patterns/profiles
3. Derivation and Interpretation of results:
   • Computing profiles
   • Evaluating results
   • Analyzing profiles

Web Personalization

Web Personalization: aims to adapt the website according to the user’s activity or interests.
Intelligent Web Personalization: often relies on Web Usage Mining (for user modeling).
Recommender Systems: recommend items of interest to users based on their interests.
• Content-based filtering: recommend items similar to the items liked by the current user. No notion of a community of users (specializes to one user only).
• Collaborative filtering: recommend items liked by “similar” users. Combines the history of a community of users: explicit (ratings) or implicit (clickstreams).
• Hybrids: combine the above (and others).

Focus of our research
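To make the collaborative-filtering idea concrete, here is a minimal user-based K-nearest-neighbor sketch over implicit (clickstream) data. It is an illustration under assumptions, not the system described in this talk: sessions are sets of URLs, neighbors are chosen by cosine similarity, and each unseen URL is scored by the summed similarity of the neighbors that visited it. The names `recommend_cf`, `k`, and `n` are hypothetical.

```python
import math

def cosine(a, b):
    """Cosine similarity between two URL sets treated as binary vectors."""
    if not a or not b:
        return 0.0
    return len(a & b) / math.sqrt(len(a) * len(b))

def recommend_cf(active, history, k=2, n=3):
    """Recommend up to n URLs for the active session from its k most similar past sessions."""
    neighbors = sorted(history, key=lambda s: cosine(active, s), reverse=True)[:k]
    scores = {}
    for s in neighbors:
        sim = cosine(active, s)
        for url in s - active:  # only suggest URLs not yet visited
            scores[url] = scores.get(url, 0.0) + sim
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [url for url, _ in ranked[:n]]

history = [{"a", "b", "c"}, {"a", "b", "d"}, {"x", "y"}]
print(recommend_cf({"a", "b"}, history))  # ['c', 'd']
```

A content-based filter would instead compare item descriptions with what the current user already liked, without consulting other users' sessions.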

Different Steps of Our Web Personalization System

STEP 1: OFFLINE PROFILE DISCOVERY
Server logs and site files → Preprocessing → User sessions → Data mining (transaction clustering, association rule discovery, pattern discovery) → Post processing / derivation of user profiles → User profiles / user model

STEP 2: ACTIVE RECOMMENDATION
Active session + user profiles / user model → Recommendation engine → Recommendations

Challenges & Questions in Web Usage Mining


Dealing with Ambiguity: Semantics?
• Implicit taxonomy? (Nasraoui, Krishnapuram, Joshi, 1999)
  • Website hierarchy (can help disambiguation, but limited)
• Explicit taxonomy? (Nasraoui, Soliman, Badia, 2005)
  • From DB associated w/ dynamic URLs
  • Content taxonomy or ontology (can help disambiguation, powerful)
• Concept hierarchy generalization / URL compression / concept abstraction (Saka & Nasraoui, 2006):
  • How does abstraction affect the quality of user models?

Nasraoui: Web Usage Mining & Personalization in Noisy, Dynamic, and Ambiguous Environments

Challenges & Questions in Web Usage Mining


User Profile Post-processing Criteria? (Saka & Nasraoui, 2006)
• Aggregated profiles (frequency average)?
• Robust profiles (discounting noisy data)?
• How do they really perform?
• How to validate? (Nasraoui & Goswami, SDM 2006)
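The two post-processing options can be sketched side by side. The exact definitions used by Saka & Nasraoui are not given here, so this sketch rests on assumptions. The aggregated profile weights each URL by the fraction of a cluster's sessions that contain it (a frequency average). The "robust" variant computes the same average over only those sessions whose membership weight exceeds a threshold, so that noisy sessions are discounted. The membership weights, the threshold, and both function names are hypothetical.

```python
from collections import Counter

def aggregated_profile(sessions):
    """URL weight = fraction of the cluster's sessions containing the URL."""
    counts = Counter(url for s in sessions for url in set(s))
    return {url: c / len(sessions) for url, c in counts.items()}

def robust_profile(sessions, memberships, threshold=0.5):
    """Frequency average over only the sessions whose (assumed) robust
    membership weight exceeds `threshold`; low-weight sessions are treated as noise."""
    core = [s for s, w in zip(sessions, memberships) if w > threshold]
    return aggregated_profile(core) if core else {}

sessions = [{"/a", "/b"}, {"/a"}, {"/z"}]
memberships = [0.9, 0.8, 0.1]  # hypothetical weights from a robust clustering step
print(aggregated_profile(sessions))             # '/z' gets weight 1/3
print(robust_profile(sessions, memberships))    # '/z' is discounted away
```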

Challenges & Questions in Web Usage Mining


Evolution: (Nasraoui, Cerwinske, Rojas, Gonzalez. CIKM 2006)

Detecting & characterizing profile evolution & change?

Challenges & Questions in Web Usage Mining


In case of massive evolving data streams:

•Need stream data mining (Nasraoui et al. ICDM’03, WebKDD 2003)

•Need stream-based recommender systems? (Nasraoui et al. CIKM 2006)

•How do stream-based recommender systems perform under evolution?

•How to validate above? (Nasraoui et al. CIKM 2006)

Clustering: HUNC Methodology

• Hierarchical Unsupervised Niche Clustering (HUNC) algorithm: a robust genetic clustering approach.
• Hierarchical: clusters the data recursively and discovers profiles at increasing resolutions, which allows finding even relatively small profiles/user segments.
• Unsupervised: determines the number of clusters automatically.
• Niching: maintains a diverse population in the GA, with members distributed among niches corresponding to multiple solutions, so that smaller profiles can survive alongside bigger profiles.
• Genetic optimization: evolves a population of candidate solutions through generations of competition and reproduction until convergence to one solution.

Hierarchical Unsupervised Niche Clustering Algorithm (H-UNC):
• Encode binary session vectors (e.g., 0 1 1 0 1 1 0)
• Perform UNC in hierarchical mode (HUNC):
  All data → Cluster 1, Cluster 2, …, Cluster K
  Cluster 1 → Cluster 1.1, …, Cluster 1.N
  Cluster K → Cluster K.1, …, Cluster K.M

Unsupervised Niche Clustering (UNC)
• Evolutionary algorithm
• Niching strategy
• “Hill climbing” performed in parallel with the evolution for estimating the niche sizes accurately and automatically

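Each candidate cluster center i (encoded as a binary chromosome) is evaluated with a density-based fitness over the N data points:

$$ f_i = \frac{\sum_{j=1}^{N} w_{ij}}{\sigma_i^2}, \qquad w_{ij} = \exp\left(-\frac{d_{ij}^2}{2\sigma_i^2}\right) $$

where d_ij is the distance from data point j to candidate i, and σ_i² is the candidate's scale (niche size).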
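UNC's density fitness, f_i = Σ_j w_ij / σ_i² with Gaussian weights w_ij = exp(−d_ij² / (2σ_i²)), rewards a candidate center that has many nearby points relative to its scale. The following minimal sketch assumes Euclidean distances and a fixed, hand-picked σ². UNC itself estimates σ² by hill climbing during the evolution, and that step is not reproduced here. The function name `unc_fitness` and the toy data are hypothetical.

```python
import math

def unc_fitness(center, data, sigma2):
    """Density fitness f_i = sum_j w_ij / sigma_i^2, with Gaussian weights
    w_ij = exp(-d_ij^2 / (2 sigma_i^2)) and Euclidean distances d_ij."""
    total = 0.0
    for x in data:
        d2 = sum((c - v) ** 2 for c, v in zip(center, x))
        total += math.exp(-d2 / (2.0 * sigma2))
    return total / sigma2

data = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1), (5.0, 5.0)]
dense = unc_fitness((0.0, 0.0), data, sigma2=0.5)
sparse = unc_fitness((5.0, 5.0), data, sigma2=0.5)
print(dense > sparse)  # True: the candidate inside the dense group is fitter
```

Because each niche is rewarded for its own local density, several well-separated centers can all have high fitness at once. This is what allows small profiles to survive alongside large ones.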

Role of Similarity Measure: Adding Semantics

We exploit both:
• an implicit taxonomy, as inferred from the website directory structure (obtained by tokenizing the URLs), and
• an explicit taxonomy, as inferred from external data (relations such as “object 1 is parent of object 2”, etc.).

Both implicit and explicit taxonomy information are seamlessly incorporated into clustering via a specialized Web session similarity measure.

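Similarity Measure

• Map the N_U URLs on the site to indices.
• User session vector s^(i): a temporally compact sequence of Web accesses by a user:

$$ s_j^{(i)} = \begin{cases} 1 & \text{if the user accessed the } j\text{th URL} \\ 0 & \text{otherwise} \end{cases} $$

• If the site structure is ignored, use the cosine similarity:

$$ S_{1,kl} = \frac{\sum_{i=1}^{N_U} s_i^{(k)} s_i^{(l)}}{\sqrt{\sum_{i=1}^{N_U} s_i^{(k)}}\,\sqrt{\sum_{i=1}^{N_U} s_i^{(l)}}} $$

• Taking the site structure into account relates distinct URLs:

$$ S_u(i,j) = \min\left(1, \frac{|p_i \cap p_j|}{\max\left(1, \max(|p_i|, |p_j|) - 1\right)}\right) $$

• p_i: path from the root to the ith URL’s node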
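Both similarity measures can be computed directly. The first is the cosine between binary session vectors, S_1,kl = Σ_i s_i^(k) s_i^(l) / (√Σ_i s_i^(k) · √Σ_i s_i^(l)). The second is the URL structural similarity, S_u(i, j) = min(1, |p_i ∩ p_j| / max(1, max(|p_i|, |p_j|) − 1)). This sketch makes two assumptions: a URL's path p_i is obtained by splitting it on "/", and the root node itself is not counted. Because two root paths in a tree share exactly their common prefix, |p_i ∩ p_j| is computed as the common-prefix length. The function names are hypothetical.

```python
import math

def cosine_sessions(sk, sl):
    """S_{1,kl}: cosine similarity of two binary session vectors."""
    dot = sum(a * b for a, b in zip(sk, sl))
    norm = math.sqrt(sum(sk)) * math.sqrt(sum(sl))  # sum(s) == sum(s^2) for binary s
    return dot / norm if norm else 0.0

def url_path(url):
    """Directory tokens from the site root (assumed tokenization, root excluded)."""
    return [tok for tok in url.strip("/").split("/") if tok]

def url_similarity(ui, uj):
    """S_u(i, j) = min(1, |p_i ∩ p_j| / max(1, max(|p_i|, |p_j|) - 1))."""
    pi, pj = url_path(ui), url_path(uj)
    common = 0
    for a, b in zip(pi, pj):  # nodes shared by two root paths = common prefix
        if a != b:
            break
        common += 1
    return min(1.0, common / max(1, max(len(pi), len(pj)) - 1))

print(cosine_sessions([1, 1, 0, 1], [1, 0, 0, 1]))  # about 0.816
print(url_similarity("/a/b/c", "/a/d/e"))           # 0.5
print(url_similarity("/a/x", "/b/y"))               # 0.0
```

With S_u, two sessions that visit different but closely related pages (for example, sibling pages in the same directory) are no longer treated as completely dissimilar. The plain cosine measure ignores that relationship.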

Multi-faceted Web user profiles

Web logs and server content DB → Pre-processing → Data mining → Post-processing → profiles that answer: viewed Web pages? search queries? about which companies? from which companies?

Example: Web Usage Mining of January 05 Log Data

26 profiles discovered from the Jan. 05 Web logs (total number of users: 3333). Number of users per profile:

Prof. 0: 295, Prof. 1: 88, Prof. 2: 60, Prof. 3: 75, Prof. 4: 455, Prof. 5: 46, Prof. 6: 51
Prof. 7: 152, Prof. 8: 323, Prof. 9: 94, Prof. 10: 56, Prof. 11: 55, Prof. 12: 21, Prof. 13: 88
Prof. 14: 308, Prof. 15: 44, Prof. 16: 280, Prof. 17: 188, Prof. 18: 89, Prof. 19: 128, Prof. 20: 94
Prof. 21: 68, Prof. 22: 26, Prof. 23: 42, Prof. 24: 102, Prof. 25: 105

Web Usage Mining of January 05 (cont.)

[Figure: the 26 profiles from the Jan. 05 Web logs (3333 users), annotated with the questions below.]

Who are these users? Some are:
• Highway Customers/Austria Facility
• Naval Education And Training Program
• Magna International Inc/Canada
• Norfolk Naval Shipyard (NNSY)

What web pages did these users visit? Some are:
• /FRAMES.ASPX/MENU_FILE=.JS&MAIN=/UNIVERSAL.ASPX
• /TOP_FRAME.ASPX
• /LEFT1.HTM
• /MAINPAGE_FRAMESET.ASPX/MENU_FILE=.JS&MAIN=/UNIVERSAL.ASPX
• /MENU.ASPX/MENU_FILE=.JS
• /UNIVERSAL.ASPX/ID=Company Connection/Manufacturers / Suppliers/Soda Blasting
• /UNIVERSAL.ASPX/ID= Surface Treatment/Surface Preparation/Abrasive Blasting Management/United States

What page did they visit before starting their session? Some are:
• http://www.eniro.se/query?q=www.nns.com&what=se&hpp=&ax=

What did they search for before visiting our website? Some are:
• http://www.mamma.com/Mamma?qtype=0&query=storage+tanks+in+water+treatment+plant%2Bcorrosion
• http://www.comcast.net/qry/websearch?cmd=qry&safe=on&query=mesa+cathodic+protection&searchChoice=google
• http://msxml.infospace.com/_1_2O1PUPH04B2YN
• “shell42blasting”
• “Gavlon Industries”
• “obrien paints”
• “Induron Coatings”
• “epoxy polyamide”
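The search terms in the last list can be recovered automatically from the referrer URLs recorded in the log. Here is a minimal sketch using Python's standard `urllib.parse`. It assumes the search terms sit in a query-string parameter named `q` or `query`, which covers the referrers listed above but is not a general rule for all search engines. The function name `search_terms` is hypothetical.

```python
from urllib.parse import urlsplit, parse_qs

# Query-string keys assumed to hold the search terms (covers the examples above only).
QUERY_KEYS = ("q", "query")

def search_terms(referrer):
    """Return the decoded search terms in a referrer URL, or None if none are found."""
    params = parse_qs(urlsplit(referrer).query)  # decodes '+' and %-escapes
    for key in QUERY_KEYS:
        if key in params:
            return params[key][0]
    return None

print(search_terms("http://www.comcast.net/qry/websearch?cmd=qry&safe=on"
                   "&query=mesa+cathodic+protection&searchChoice=google"))
# mesa cathodic protection
```

Referrers whose terms are hidden in the path rather than the query string, such as the infospace example, return `None` under this approach.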

Two-Step Recommender Systems Based on a Committee of Profile-Specific URL-Predictor Neural Networks


Current session → Step 1: find the closest profile (discovered by HUNC) → Step 2: choose that profile's specialized network (NN #1, NN #2, NN #3, …, NN #n) → Recommendations

Train each neural net to complete missing puzzles (sessions from that profile/cluster):
• Striped = input session (incomplete) to the neural network
• “?” = output that is predicted to complete the puzzle (complete session)
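The two-step flow can be sketched as follows. To keep the example self-contained, each profile-specific predictor is a simple stand-in that ranks its profile's URLs by weight. It is not a trained neural network. The profile format (a dictionary mapping URL to weight), the stand-in predictor, and all function names are hypothetical.

```python
import math

def cosine(session, profile):
    """Cosine between a binary session (set of URLs) and a weighted profile (URL -> weight)."""
    dot = sum(profile.get(u, 0.0) for u in session)
    norm = math.sqrt(len(session)) * math.sqrt(sum(w * w for w in profile.values()))
    return dot / norm if norm else 0.0

def make_predictor(profile):
    """Stand-in for a profile-specific neural network: suggest the profile's
    highest-weight URLs that are not already in the session."""
    def predict(session, n):
        ranked = sorted(profile, key=lambda u: (-profile[u], u))
        return [u for u in ranked if u not in session][:n]
    return predict

def two_step_recommend(session, profiles, predictors, n=2):
    # Step 1: find the closest profile.
    best = max(range(len(profiles)), key=lambda i: cosine(session, profiles[i]))
    # Step 2: delegate to that profile's specialized predictor.
    return predictors[best](session, n)

profiles = [
    {"/a": 1.0, "/b": 0.9, "/c": 0.6},
    {"/x": 1.0, "/y": 0.8, "/z": 0.5},
]
predictors = [make_predictor(p) for p in profiles]
print(two_step_recommend({"/x"}, profiles, predictors))  # ['/y', '/z']
```

Routing each session to one specialized model means that each model only has to learn the completion patterns of its own profile, instead of a single model learning every profile at once.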

Precision: Comparison of 2-step, 1-step, & K-NN (better for longer sessions)

[Figure: precision (0 to 1) versus subsession size (0 to 10) for the 2-step profile-specific NN, K-NN (K=50, N=10), 1-step decision tree, 1-step nearest profile-cosine, 1-step nearest profile-WebSession, and 1-step neural network.]

Coverage: Comparison of 2-step, 1-step, & K-NN (better for longer sessions)

[Figure: coverage (0 to 0.9) versus subsession size (0 to 10) for the 2-step profile-specific NN, K-NN (K=50, N=10), 1-step decision tree, 1-step neural network, 1-step nearest profile-cosine, and 1-step nearest profile-WebSession.]

E-Learning HyperManyMedia Resources

http://www.collegedegree.com/library/college-life/the_ultimate_guide_to_using_open_courseware

Architecture
1. Semantic representation (knowledge representation),
2. Algorithms (core software), and
3. Personalization interface.

Semantic Search Engine

Experimental Evaluation

A total of 1,406 lectures (documents) represented the profiles, with the size of each profile varying from one learner to another, as follows: Learner1 (English) = 86 lectures, Learner2 (Consumer and Family Sciences) = 74 lectures, Learner3 (Communication Disorders) = 160 lectures, Learner4 (Engineering) = 210 lectures, Learner5 (Architecture and Manufacturing Sciences) = 119 lectures, Learner6 (Math) = 374 lectures, Learner7 (Social Work) = 86 lectures, Learner8 (Chemistry) = 58 lectures, Learner9 (Accounting) = 107 lectures, and Learner10 (History) = 132 lectures.

Concept drift / Data Streams
• TECNO-Streams: inspired by the immune system [ICDM 2003, WebKDD 2003, Computer Networks 2006]
• CIKM results: collaborative filtering recommendation in evolving streams
• TRAC-Streams: extends a robust clustering algorithm to streams [SDM 2006]

Recommender Systems in Dynamic Usage Environments

For massive data streams, we must use a stream mining framework.
Furthermore, we must be able to continuously mine evolving data streams.

TECNO-Streams: Tracking Evolving Clusters in Noisy Streams

Inspired by the immune system:
• Immune system: interaction between external agents (antigens) and immune memory (B-cells)
• Artificial immune system:
  • Antigens = data stream
  • B-cells = cluster/profile stream synopsis = evolving memory
  • B-cells have an age (since their creation)
  • Gradual forgetting of older B-cells
  • B-cells compete to survive by cloning multiple copies of themselves
  • Cloning is proportional to the B-cell stimulation
  • B-cell stimulation: defined as a density criterion of the data around a profile (this is what is being optimized!)

O. Nasraoui, C. Cardona, C. Rojas, and F. Gonzalez. Mining Evolving User Profiles in Noisy Web Clickstream Data with a Scalable Immune System Clustering Algorithm, in Proc. of WebKDD 2003, Washington DC, Aug. 2003, 71-81.
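The B-cell dynamics described above can be illustrated with a deliberately simplified sketch. Each B-cell keeps a center, an age, and a stimulation level. On every new antigen, all cells age, their old stimulation decays (gradual forgetting), and each cell gains the activation it receives from that antigen. The number of clones is then proportional to each cell's share of the total stimulation. The Gaussian activation, the decay factor, and the cloning rule are illustrative assumptions, not the actual TECNO-Streams update equations.

```python
import math
from dataclasses import dataclass

@dataclass
class BCell:
    center: tuple
    age: int = 0
    stimulation: float = 0.0

def activation(cell, antigen, sigma2=1.0):
    """Gaussian activation of a B-cell by an antigen (assumed form)."""
    d2 = sum((c - a) ** 2 for c, a in zip(cell.center, antigen))
    return math.exp(-d2 / (2.0 * sigma2))

def present(cells, antigen, decay=0.9):
    """Present one antigen: age every cell, decay old stimulation, add new activation."""
    for cell in cells:
        cell.age += 1
        cell.stimulation = decay * cell.stimulation + activation(cell, antigen)

def clone_counts(cells, max_clones=10):
    """Clones per cell, proportional to its share of the total stimulation."""
    total = sum(c.stimulation for c in cells) or 1.0
    return [round(max_clones * c.stimulation / total) for c in cells]

cells = [BCell((0.0, 0.0)), BCell((10.0, 10.0))]
for antigen in [(0.1, 0.0), (0.0, 0.2), (-0.1, 0.1)]:
    present(cells, antigen)
print(clone_counts(cells))  # [10, 0]
```

In this sketch, the cell that sits where the data actually arrives accumulates stimulation and claims the clones. The distant cell's stimulation stays near zero, and if the stream later moved to the distant cell's region, the decay would let the first cell's stimulation fade.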

The Immune Network Memory

External antigen (RED) stimulates the binding B-cell (GREEN), which clones copies of itself (PINK).
Stimulation breeds survival.
Even after the external antigen disappears, B-cells co-stimulate each other, thus sustaining each other: memory!

General Architecture of Proposed Approach

Evolving data stream → 1-pass adaptive immune learning → evolving immune network (compressed into subnetworks) → immune network information system
Driven by: stimulation (competition & memory), age (old vs. new), outliers (based on activation)

Flowchart (subject to memory constraints and domain knowledge constraints):
1. Start/Reset: initialize ImmuNet and MaxLimit; trap initial data; compress ImmuNet into K subNets.
2. Present NEW antigen data.
3. Identify the nearest subNet*.
4. Compute soft activations in subNet*.
5. Update subNet*’s ARB influence range/scale.
6. Update subNet*’s ARBs’ stimulations.
7. Does the antigen activate ImmuNet?
   • Yes: clone and mutate ARBs.
   • No: outlier test; clone antigen.
8. Kill lethal ARBs.
9. #ARBs > MaxLimit?
   • Yes: compress ImmuNet and kill extra ARBs (based on age/stimulation strategy) OR increase acuteness of competition OR move the oldest patterns to auxiliary (secondary) storage.
   • No: present the next antigen.
ImmuNet stats & visualization are produced from the evolving network.

Summarizing noisy data in 1 pass

[Figure: different levels of noise.]

Validation for clustering streams in 1 pass:
- Detect all clusters (miss no cluster)
- Do not discover spurious clusters
Validation measures (averaged over 10 runs): hits, spurious clusters

Experimental Results: Arbitrary Shaped Clusters


Validation Methodology in Dynamic Environments

Limit the working capacity (memory) for the profile synopsis in TECNO-Streams (or the instance base for K-NN) to 30 cells/instances.
Perform 1-pass mining + validation:
• First, present all combination subset(s) of a real ground-truth session to the recommender.
• Determine the closest neighborhood of profiles from TECNO-Streams’ synopsis (or instances for K-NN).
• Accumulate the URLs in the neighborhood.
• Sort and select the top N URLs as recommendations.
• Then validate against the ground-truth/complete session (precision, coverage, F1).
• Finally, present the complete session to TECNO-Streams (and K-NN).
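The recommend-then-validate step above can be sketched for a single test case. The sketch makes four assumptions that the slides do not state. Profiles in the synopsis are dictionaries mapping URLs to weights. The neighborhood is the k profiles with the highest cosine similarity to the input subsession. URL scores are accumulated as similarity times weight. The ground truth used for scoring is the complete session minus the URLs already given as input. Precision is taken as hits over recommendations, coverage as hits over ground-truth URLs, and F1 as their harmonic mean.

```python
import math

def cosine(session, profile):
    dot = sum(profile.get(u, 0.0) for u in session)
    norm = math.sqrt(len(session)) * math.sqrt(sum(w * w for w in profile.values()))
    return dot / norm if norm else 0.0

def top_n(subsession, synopsis, k=2, n=3):
    """Accumulate URL scores over the k closest profiles; return the top-N URLs."""
    neighbors = sorted(synopsis, key=lambda p: cosine(subsession, p), reverse=True)[:k]
    scores = {}
    for p in neighbors:
        sim = cosine(subsession, p)
        for url, w in p.items():
            if url not in subsession:
                scores[url] = scores.get(url, 0.0) + sim * w
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [u for u, _ in ranked[:n]]

def precision_coverage_f1(recommended, truth):
    hits = len(set(recommended) & truth)
    p = hits / len(recommended) if recommended else 0.0
    c = hits / len(truth) if truth else 0.0
    f1 = 2 * p * c / (p + c) if p + c else 0.0
    return p, c, f1

synopsis = [{"/a": 1.0, "/b": 0.8, "/c": 0.5}, {"/x": 1.0, "/y": 0.7}]
complete = {"/a", "/b", "/d"}
sub = {"/a"}                       # one subset of the complete session
rec = top_n(sub, synopsis, k=2, n=2)
print(rec, precision_coverage_f1(rec, complete - sub))  # ['/b', '/c'] (0.5, 0.5, 0.5)
```

In the streaming protocol, the complete session would be fed to the learner only after this scoring step, so each session is used for testing before it is used for training.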

Mild Changes: F1 versus session number, 1.7K sessions

[Figure: F1 versus session number for TECNO-Streams and K-NN.]

TECNO-Streams achieves higher F1 (noisy, naturally occurring but unexpected fluctuations in user access patterns).
Memory capacity limited to 30 nodes in TECNO-Streams’ synopsis and 30 K-NN instances.


TRAC-STREAMS:

• Robust weights: decrease with distance and with the time elapsed since the data arrived
• Adaptive robust weights with gradual forgetting of old patterns
• Objective function (a density-like criterion), where w_ij is the robust weight of data point j in cluster i, d_ij its distance to the center c_i, and σ_i² the cluster scale:

$$ J_i = \sum_{j} w_{ij}\,\frac{d_{ij}^2}{\sigma_i^2} - \sum_{j} w_{ij} $$

  • First term: robust sum of normalized errors
  • Second term: robust soft count of inliers (good points that are not noise)
  • Total effect: minimize the robust error while including as many non-noise points as possible, to maximize robustness & efficiency from a robust estimation point of view

Details:
- Incremental updates: ∂J/∂c = 0, ∂J/∂σ = 0
- Centers & scale: updated with every new data point from the stream
- Cluster parameters (c, σ) slowly forget older data and track new data
- Chebyshev test:
  - to determine whether a new data record is an outlier
  - to determine whether two clusters should be merged
Incremental location (center) update:
Incremental scale update:

Results in 1 pass over a noisy data stream:
- Number of clusters determined automatically
- Clusters vary in size and density
- Noisy data
- Scales are automatically estimated in 1 pass

P2P

Information is exchanged in a decentralized manner.
P2P is an attractive platform for participants in contraband information exchange due to the sense of “anonymity” that prevails while performing P2P transactions.
P2P networks vary in how well they preserve the anonymity of their users.

Information exchange in broadcast-based (unstructured) P2P retrieval:
• A query is initiated at a starting node,
• then it is relayed from this node to all nodes in its neighborhood,
• and so on…
• until matching information is found on a peer node.
• Finally, a direct connection is established between the initiating node and that last node to transfer the content.

As the query propagates, no node knows whether its neighboring node:
• is asking for the information for itself,
• or is simply relaying (broadcasting) another node’s query.

This anonymity facilitates illegal exchanges, e.g., child pornography material.
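The broadcast-based retrieval described above behaves like a breadth-first flood. The sketch below rests on three assumptions. The network is given as an adjacency dictionary. Each query carries a hop limit (a time-to-live, TTL), a common way to bound flooding that the slides do not mention. Nodes relay a given query only once. The function returns the node holding the content and the relay path. The graph and all names are hypothetical.

```python
from collections import deque

def flood_query(graph, start, has_content, ttl=4):
    """Breadth-first flooding of a query from `start`.

    graph: dict node -> list of neighbor nodes
    has_content: predicate telling whether a node holds matching content
    Returns (found_node, path) or (None, None) if the hop limit runs out.
    """
    queue = deque([(start, [start], ttl)])
    seen = {start}
    while queue:
        node, path, hops_left = queue.popleft()
        if node != start and has_content(node):
            return node, path  # the initiator then connects directly to this node
        if hops_left == 0:
            continue
        for nb in graph.get(node, []):
            if nb not in seen:  # each node relays a given query only once
                seen.add(nb)
                queue.append((nb, path + [nb], hops_left - 1))
    return None, None

# R = recipient issuing the query, B* = relaying nodes, A = distributor.
graph = {"R": ["B1", "B2"], "B1": ["R", "B3"], "B2": ["R"],
         "B3": ["B1", "A"], "A": ["B3"]}
print(flood_query(graph, "R", lambda n: n == "A"))  # ('A', ['R', 'B1', 'B3', 'A'])
```

A node such as B3 only sees a query arriving from B1, so it cannot tell whether B1 originated the query or is relaying it for R. This is the source of the anonymity described above.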

Possible Roles of Nodes

• Creator/Top Level Distributor (A)
• Connection (B)
• Recipient (C)

[Figure: a distributor (A) linked through several connection nodes (B) to recipients (C).]

Steps in Mining P2P Networks

1. Undercover node-based probing and monitoring (next slide), to build an approximate model of network activity
2. Flagging contraband content: key words, hashes, other patterns
3. Evaluation against different scenarios: recipient querying, distribution, and routing cases
4. Using the evaluation results to fine-tune the node positioning strategy

[Figure: a query initiated by a normal or undercover recipient is relayed from node to node, passing undercover nodes, until the content is found at a distributor.]

Reconstructing Illegal Exchanges

Each undercover node captures a local part of the exchange.
The nodes’ “collective” information is used to attempt to reconstruct a bigger picture of the exchange:
• Infer the most likely contraband query initiators (or recipients, thus type C) and content distributors (thus type A)
• ==> Suspect rankings of the nodes

How to perform the above “inference”?
• Relational probabilistic inference, or
• Network influence propagation mechanisms, where evidence is gradually propagated in several iterations via the links from node to node
• Belief propagation algorithms that rely on message passing from each node to its neighbors

Challenges:
• Keeping up to date with the dynamic nature of the network (as nodes leave and new nodes join)
• Aiming for a close (yet approximate) snapshot of reality in a statistical sense, particularly using a large number of concurrent crawlers in parallel
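One simple form of the "network influence propagation" listed above can be sketched as follows. Suspicion scores spread from nodes that undercover monitors flagged directly. At each iteration, a node's score mixes its own evidence with the average score of its neighbors. This damped averaging scheme (similar in spirit to personalized PageRank) is a stand-in chosen for clarity. It is not belief propagation proper, and the damping factor `alpha`, the iteration count, and the example graph are assumptions.

```python
def propagate_suspicion(graph, evidence, alpha=0.5, iterations=20):
    """Iteratively propagate evidence over an undirected graph.

    graph: dict node -> list of neighbors
    evidence: dict node -> initial evidence in [0, 1] (0 if unobserved)
    score_{t+1}(v) = (1 - alpha) * evidence(v) + alpha * mean(score_t(u) for u in N(v))
    Returns the nodes ranked from most to least suspect.
    """
    score = {v: evidence.get(v, 0.0) for v in graph}
    for _ in range(iterations):
        new = {}
        for v, nbrs in graph.items():
            nb_mean = sum(score[u] for u in nbrs) / len(nbrs) if nbrs else 0.0
            new[v] = (1 - alpha) * evidence.get(v, 0.0) + alpha * nb_mean
        score = new
    return sorted(score, key=lambda v: (-score[v], v))

# Nodes A and C were observed exchanging flagged content; B, D, E were not.
graph = {"A": ["B"], "B": ["A", "C", "D"], "C": ["B"], "D": ["B", "E"], "E": ["D"]}
print(propagate_suspicion(graph, {"A": 1.0, "C": 1.0}))  # ['A', 'C', 'B', 'D', 'E']
```

Node B, which links both observed nodes, ends up ranked above nodes further from the evidence. This is the kind of suspect ranking the slide describes.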

Undercover Node-based Probing: Positioning Nodes…

Distributed crawling of P2P networks to collect:
• the logical topology (i.e., virtual connections between nodes that determine which neighbors are accessible to each node when passing a message)
• the network topology (i.e., actual network identifiers such as the IP address of a node)

Analysis of correlations between the logical and network topologies:
• by treating network topologies (e.g., top-level domain) as class information,
• by clustering the logical topology,
• and then comparing the class versus cluster entropies, using, e.g., the information gain measure.

Using the above results to validate assumptions about the relationships between logical and network topologies.
Using the results to refine node positioning in our proposed node-based probing and monitoring algorithm in the next step.
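The class-versus-cluster comparison can be made concrete with entropy. Here is a minimal sketch, assuming each crawled node has a class label taken from its network topology (for example, its top-level domain) and a cluster id from clustering the logical topology. Information gain is computed as H(class) minus the size-weighted class entropy within the clusters. The labels below are hypothetical.

```python
import math
from collections import Counter, defaultdict

def entropy(labels):
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def information_gain(classes, clusters):
    """H(class) - sum_k (|cluster k| / n) * H(class within cluster k)."""
    groups = defaultdict(list)
    for cls, k in zip(classes, clusters):
        groups[k].append(cls)
    n = len(classes)
    conditional = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(classes) - conditional

# Hypothetical example: top-level domains vs. logical-topology clusters.
tld = ["edu", "edu", "com", "com"]
print(information_gain(tld, [0, 0, 1, 1]))  # 1.0: clusters align with domains
print(information_gain(tld, [0, 1, 0, 1]))  # 0.0: clusters ignore domains
```

A high gain would suggest that the logical neighborhoods follow the physical network, which matters when choosing where to place undercover nodes.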

Olfa Nasraoui

Computer Engineering & Computer Science

University of Louisville

Olfa.nasraoui@louisville.edu

In collaboration with

Joan Schmelz

Department of Physics

University of Memphis

jschmelz@memphis.edu

Acknowledgement: team members who worked on this project:
• Nurcan Durak, Sofiane Sellah, Heba Elgazzar, Carlos Rojas (Univ. of Louisville)
• Jonatan Gomez and Fabio Gonzalez (National Univ. of Colombia)
• Jennifer Roames, Kaouther Nasraoui (Univ. of Memphis)

NASA-AISRP PI Meeting, Univ. Maryland, Oct. 3-5 2006

Nasraoui & Schmelz: Mining Solar Images to Support Astrophysics Research

Motivations (1): The Coronal Heating Problem

The question of why the solar corona is so hot has remained one of the most exciting astronomy puzzles of the last 60 years.
• The temperature increases very steeply, from 6000 degrees in the photosphere (the visible surface of the Sun) to a few million degrees in the corona (the region 500 kilometers above the photosphere).
• Even though the Sun is hotter on the inside than on the outside, its outer atmosphere (the corona) is indeed hotter than the underlying photosphere!

Measurements of the temperature distribution along the coronal loop length can be used to support or eliminate various classes of coronal temperature models.
Scientific analysis requires data observed by instruments such as EIT, TRACE, and SXT.

Motivations (2): Finding Needles in Haystacks (manually)

The biggest obstacle to completing the coronal temperature analysis task is collecting the right data (manually).
• The search for interesting images (with coronal loops) is by far the most time-consuming aspect of this coronal temperature analysis.
• Currently, this process is performed manually.
• It is therefore extremely tedious and hinders the progress of science in this field.

The next-generation "EIT", called MAGRITE, scheduled for launch in a few years on NASA's Solar Dynamics Observatory, should be able to take as many images in about four days as EIT took over 6 years, and will no doubt need state-of-the-art techniques to sift through the massive data to support scientific discoveries.

Goals of the project: Finding Needles in Haystacks (automatically)

• Develop an image retrieval system based on data mining to quickly sift through data sets downloaded from online solar image databases, and to automatically discover the rare but interesting images containing solar loops, which are essential in studies of the Coronal Heating Problem
• Publish mined knowledge on the web in an easily exchangeable format for astronomers

Sources of Data

• EIT: Extreme UV Imaging Telescope aboard the NASA/European Space Agency spacecraft called SOHO (Solar and Heliospheric Observatory): http://umbra.nascom.nasa.gov/eit
• TRACE: NASA’s Transition Region And Coronal Explorer: http://vestige/lmsal.com/TRACE.SXT
• SXT: Soft X-ray Telescope database on the Japanese spacecraft Yohkoh: http://ydac.mssl.ucl.ac.uk/ydac/sxt/sfm-cal-top.html

Samples of Data: EIT


Steps

1. Sample image acquisition and labeling: images with and without solar loops, 1020 × 1022 pixels, ~2 MB/image
2. Image preprocessing, block extraction, and feature extraction
3. Building & evaluating classification models:
   • At block level (is a block a loop or no-loop block?): 10-fold cross-validation (train, then test on an independent set, 10 times; average the results)
   • At image level (does an image contain a loop block?): use the model learned from the training data (one global model, or 1 model per solar cycle); test on an independent set of images from different solar cycles

Step 2. Image Preprocessing and Block Extraction

• Despeckling (to clean noise) and gradient transformation (to bring out the edges)
• Phase I (loops outside the solar disk): divide the area outside the solar disk into blocks of an optimal size (chosen to maximize overlap with the marked areas over all training images)
• Use each block as one data record from which individual data attributes are extracted for learning and testing

Difficult classification problem

• Loops come in different sizes, shapes, intensities, etc.
• Regions without interesting loops are hard to distinguish from loop regions
• Inconsistencies in labeling are common (subjectivity, quality of data)

Even at edge level: challenging. Which block is NOT a loop block?

Defective and asymmetric nature of loop shapes

Features inside each block: applied on the original intensity levels

Statistical features:
• Mean
• Standard deviation
• Smoothness
• Third moment
• Uniformity
• Entropy
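The six features listed above are commonly defined from a block's normalized intensity histogram p(z_i), following the statistical-texture treatment in Gonzalez and Woods' Digital Image Processing:
- mean m = Σ z_i p(z_i)
- standard deviation σ
- smoothness R = 1 − 1/(1 + σ²), with σ² normalized by (L − 1)²
- third moment μ3 = Σ (z_i − m)³ p(z_i)
- uniformity U = Σ p(z_i)²
- entropy e = −Σ p(z_i) log2 p(z_i)

The following sketch computes these for one block. That the original work used exactly these definitions and normalizations is an assumption, as is the 8-bit intensity range (L = 256).

```python
import numpy as np

def block_features(block, levels=256):
    """Histogram-based statistical features of one image block.

    Assumes integer intensities in [0, levels).
    """
    z = np.arange(levels, dtype=float)
    counts = np.bincount(np.asarray(block, dtype=np.int64).ravel(), minlength=levels)
    p = counts / counts.sum()                # normalized histogram p(z_i)
    mean = np.sum(z * p)
    var = np.sum((z - mean) ** 2 * p)
    var_norm = var / (levels - 1) ** 2       # variance normalized by (L - 1)^2
    nonzero = p[p > 0]                       # avoid log2(0) in the entropy
    return {
        "mean": float(mean),
        "std": float(np.sqrt(var)),
        "smoothness": float(1.0 - 1.0 / (1.0 + var_norm)),  # R = 1 - 1/(1 + sigma^2)
        "third_moment": float(np.sum((z - mean) ** 3 * p)),
        "uniformity": float(np.sum(p ** 2)),
        "entropy": float(-np.sum(nonzero * np.log2(nonzero))),
    }

print(block_features([[0, 255], [0, 255]]))
```

For the two-level example block, the mean and standard deviation are both 127.5. The third moment is 0 because the histogram is symmetric, uniformity is 0.5, and entropy is 1 bit.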

Features inside each block: applied on edges

Hough-based features:
• First apply the Hough transform: image space → Hough space (H.S.); each pixel maps to parameter combinations for a given shape, and all pixels vote for several parameter combinations
• Extract peaks from the H.S.
• Then construct features based on the H.S.

Peak detection is very challenging:
• Many false peaks (noise)
• Bin splitting (peaks are split)
• Biggest problem: the size of the Hough accumulator array. Every pixel votes for all possible curves that go through this pixel, causing a combinatorial explosion as we add more parameters

Solution: we feed the Hough space into a stream clustering algorithm to detect peaks.

TRAC-Stream clustering
Eliminates the need to “store” the Hough accumulator array by processing it in 1 pass.

Input: initial scale σ0, max. no. of clusters
Output: running (real-time) synopsis of clusters in the input stream

Repeat until end of stream {
    Input next data point x
    For each cluster in current synopsis {
        Perform Chebyshev test (a test for compatibility without any assumptions on distributions, but requiring robust scale estimates)
        If x passes Chebyshev test Then
            Update cluster parameters: centroid, scale
    }
    If no cluster exists or x fails all Chebyshev tests Then
        Create new cluster (c = x, σ = σ0)
    Perform pairwise Chebyshev tests to merge compatible clusters:
        densest cluster absorbs merged cluster; centroid updated
    Eliminate clusters with low density
}
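Here is a runnable sketch of the loop above under simplifying assumptions; it does not reproduce the published TRAC-Streams equations. If σ² is a cluster's expected squared distance to its centroid, Markov's inequality applied to d² (a multivariate form of Chebyshev's inequality) gives P(d² ≥ σ²/α) ≤ α. A point is therefore accepted when d² < σ²/α. The remaining rules are also assumptions:
- Centroid and scale are updated as running averages with a forgetting factor.
- Two clusters merge when each centroid passes the other's test.
- Clusters whose decayed weight falls below a threshold are dropped.

The parameters `ALPHA`, `FORGET`, and `MIN_DENSITY` are assumed, as are all the names.

```python
# Assumed parameters (illustrative, not from the original work).
ALPHA = 0.05       # Chebyshev significance level
FORGET = 0.99      # forgetting factor applied to every cluster's weight per new point
MIN_DENSITY = 0.5  # clusters whose decayed weight falls below this are eliminated


class Cluster:
    def __init__(self, x, sigma2):
        self.c = list(x)       # centroid
        self.sigma2 = sigma2   # scale: running mean of squared distances
        self.weight = 1.0      # decayed count of absorbed points (density proxy)

    def dist2(self, x):
        return sum((a - b) ** 2 for a, b in zip(self.c, x))

    def chebyshev_ok(self, x):
        # Markov/Chebyshev bound: P(d^2 >= sigma^2 / ALPHA) <= ALPHA.
        return self.dist2(x) < self.sigma2 / ALPHA

    def absorb(self, x):
        d2 = self.dist2(x)
        self.weight += 1.0
        rate = 1.0 / self.weight
        self.c = [a + rate * (b - a) for a, b in zip(self.c, x)]
        self.sigma2 += rate * (d2 - self.sigma2)


def trac_like_stream(points, sigma0_sq=1.0, max_clusters=10):
    """One pass over `points`; returns the surviving cluster synopsis."""
    clusters = []
    for x in points:
        for cl in clusters:
            cl.weight *= FORGET  # gradual forgetting of older data
        matched = [cl for cl in clusters if cl.chebyshev_ok(x)]
        for cl in matched:
            cl.absorb(x)
        if not matched and len(clusters) < max_clusters:
            clusters.append(Cluster(x, sigma0_sq))  # otherwise x is dropped as an outlier
        # Pairwise merging: the denser cluster absorbs a mutually compatible one.
        merged = []
        for cl in sorted(clusters, key=lambda k: -k.weight):
            host = next((m for m in merged
                         if m.chebyshev_ok(cl.c) and cl.chebyshev_ok(m.c)), None)
            if host is None:
                merged.append(cl)
            else:
                total = host.weight + cl.weight
                host.c = [(host.weight * a + cl.weight * b) / total
                          for a, b in zip(host.c, cl.c)]
                host.weight = total  # host keeps its own scale (simplification)
        # Eliminate clusters with low density.
        clusters = [cl for cl in merged if cl.weight >= MIN_DENSITY]
    return clusters


points = [(0.0, 0.0), (10.0, 10.0), (0.2, 0.1),
          (9.9, 10.1), (0.1, -0.1), (10.1, 9.8)] * 5
for cl in trac_like_stream(points):
    print([round(v, 2) for v in cl.c], round(cl.weight, 1))
```

For Hough-space peak detection, each point x would be a parameter combination voted for by an edge pixel. The surviving dense clusters then play the role of accumulator peaks, without the full accumulator array ever being stored.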

Examples of clustering 2-D Hough space

Curvature Features
[Figure: original image and automatically detected curves.]

Step 3. Classification

Classification algorithm: type of classifier
• Decision Stump: Decision Tree Induction & Pruning
• C4.5: Decision Tree Induction
• Adaboost: Decision Tree w/ Boosting
• RepTree: Tree Induction
• Conjunctive Rule: Rule Generation
• Decision Table: Rule Generation
• PART: Rule Generation
• JRip: Rule Generation
• 1-NN: Lazy
• 3-NN: Lazy
• SVM (Support Vector Machines): Function based
• Multi-Layer Perceptron (Neural Network): Function based
• Naive Bayes (Bayesian classifier): Probabilistic

[Table: manually viewed EIT images for nine sampled months (Aug. 96, Mar. 00, Dec. 00, Feb. 04, Jan. 05, Jun. 05, Jun. 96, Dec. 96, Mar. 97), with columns Month, Total Viewed Images, Loop Images, # Loops on Limb, # Loops on Disk, Wavelength (171 Å for seven months; 171, 195, 284, 304 Å for two months), Ratio of Limb Loops / Viewed Images, and Ratio of Limb Loops / All Loops.]

Ratio pairs reported (limb loops / viewed images; limb loops / all loops):
• 3/37 = 8%; 3/6 = 50%
• 1/115 = 0.8%; 1/6 = 16%
• 3/123 = 2%; 3/18 = 16%
• 1/111 = 0.9%; 1/17 = 5%
• 15/372 = 4%; 15/48 = 31%
• 17/360 = 4%; 17/18 = 94%
• 2/113 = 1%; 2/14 = 14%
• 23/167 = 13%; 23/162 = 14%
• 3/154 = 2%; 3/31 = 9%

Block-based results

[Table: block-level precision (Pre.) and recall (Rec.) of the AdaBoost, NB, MLP, C4.5, RIPPER, and K-NN (k=5) classifiers using statistical, Hough-based, spatial, curvature, and all features; reported values range from 0.057 to 0.801.]

Data: 150 solar images from 1996, 1997, 2000, 2001, 2004, and 2005; 403 loop blocks; 7950 no-loop blocks.

Loop Mining Tool

Image Based Testing Results

[Table: confusion matrix of actual vs. predicted loop/no-loop images over 100 test images, with cell counts 40, 11, 10, and 39 (marginal totals 51, 49, 50, and 50).]

Precision / recall per solar cycle:
• Minimum cycle: 0.5 / 0.9
• Medium cycle: 0.8 / 0.7
• Maximum cycle: 0.8 / 1
• All cycles: 0.88 / 0.83

• More DATA → Data MINING
• FASTER data arrival rates (massive data) → STREAM data mining
• Many BENEFITS:
  • From DATA to KNOWLEDGE!!!
  • Better KNOWLEDGE → better DECISIONS → help society: e.g., scientists, businesses, students, casual Web surfers, cancer patients, children…
• Many RISKS: privacy, ethics, legal implications:
  • Might anything that we do harm certain people?
  • Who will use a certain data mining tool?
  • What if certain governments use it to catch political dissidents?
  • Who will benefit from DM?
  • FAIRNESS: e.g., shouldn’t businesses/websites reward Web surfers in return for the wealth of data/user profiles that their clickstreams provide?
• Questions/Comments/Discussion?