Rumba: An Online Quality Management System for

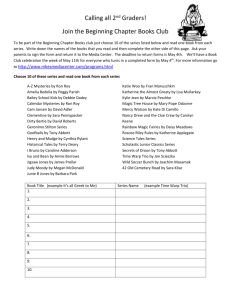

advertisement

WarpPool: Sharing Requests with Inter-Warp Coalescing for Throughput Processors
John Kloosterman, Jonathan Beaumont, Mick Wollman, Ankit Sethia, Ron Dreslinski, Trevor Mudge, Scott Mahlke
Computer Engineering Laboratory, University of Michigan

Introduction
• GPUs have high peak performance
• For many benchmarks, memory throughput limits performance
[Figure: % of benchmarks vs. % of cycles stalled (<12%, 12-33%, 33-66%, 66%+)]

GPU Architecture
• 32 threads are grouped into SIMD warps
• The warp scheduler sends ready warps to the functional units (FUs)
[Diagram: warps 0-47 → warp scheduler → ALUs and load/store unit; example instructions add r1, r2, r3 and load [r1], r2]

GPU Memory System
[Diagram: warp scheduler → load → load/store unit → intra-warp coalescer (groups addresses by cache line) → L1 → MSHR → L2, DRAM]

Problem: Divergence
[Diagram: same pipeline; a divergent load leaves the intra-warp coalescer as many cache-line requests]

Problem: Bottleneck at L1
[Diagram: loads from warps 0-5 queue at the load/store unit and intra-warp coalescer, waiting for the L1]

Hazards in Benchmarks
[Figure: cache lines per load/store and waiting loads/stores (0-30) for memory-divergent, bandwidth-limited, and cache-limited benchmarks]

Inter-Warp Spatial Locality
• Spatial locality is not just within a warp
[Diagram: accesses from warps 0-4, divergent inside each warp but sharing cache lines across warps]
• Key insight: use this locality to address throughput bottlenecks

Inter-Warp Window
[Diagram: baseline: warp scheduler → intra-warp coalescer (32 addresses) → L1, which receives 1 cache line from one warp. WarpPool: warp scheduler → several intra-warp coalescers (32 addresses each) → inter-warp coalescer (many cache lines from many warps) → L1, which receives 1 cache line from many warps]

Design Overview
[Diagram: warp scheduler → intra-warp coalescers → inter-warp coalescer (inter-warp queues, selection logic) → L1]

Intra-Warp Coalescers
[Diagram: warp scheduler → queued memory instructions → address generation → intra-warp coalescers → to inter-warp coalescer]
• Queue load instructions before address generation
• Intra-warp coalescers are the same as in the baseline
• One request, for one cache line, exits per cycle

Inter-Warp Coalescer
[Diagram: requests from the intra-warp coalescers (W0, W1, ...) are sorted by address into coalescing queues; each entry holds a cache line address and a list of (warp ID, thread mapping) pairs]
• Many coalescing queues, with a small number of tags each
• Requests are mapped to coalescing queues by address
• Insertion: tag lookup, at most 1 per cycle per queue

Selection Logic
• Select a cache line from the inter-warp queues to send to L1
[Diagram: inter-warp queues → selection logic → L1 cache]
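The intra-warp and inter-warp coalescing steps above can be sketched as a small behavioral model in Python. This is an illustration, not the authors' hardware design: the 128-byte line size, 4 tags per queue, the modulo mapping from line address to queue, and all names (`intra_warp_coalesce`, `InterWarpCoalescer`, `insert`, `insert_cycle`) are assumptions. Only the default of 32 inter-warp queues comes from the WarpPool configuration on the Methodology slide.

```python
from collections import OrderedDict
from dataclasses import dataclass, field

LINE_BYTES = 128  # assumed L1 cache line size in bytes


@dataclass
class Request:
    """One inter-warp queue entry: a cache line plus every warp waiting on it."""
    line_addr: int
    sharers: list = field(default_factory=list)  # (warp_id, thread_ids) pairs


def intra_warp_coalesce(warp_id, addresses, line_bytes=LINE_BYTES):
    """Group one warp's per-thread byte addresses by cache line (baseline coalescer)."""
    lines = OrderedDict()
    for tid, addr in enumerate(addresses):
        lines.setdefault(addr // line_bytes, []).append(tid)
    return [(line, warp_id, tids) for line, tids in lines.items()]


class InterWarpCoalescer:
    """Many small coalescing queues; a request's queue is chosen by its line address."""

    def __init__(self, num_queues=32, tags_per_queue=4):
        self.num_queues = num_queues
        self.tags_per_queue = tags_per_queue
        # Each queue maps line address (tag) -> Request, in order of first arrival.
        self.queues = [OrderedDict() for _ in range(num_queues)]

    def insert(self, line, warp_id, tids):
        """Tag lookup and insert; returns False when the target queue has no free tag."""
        queue = self.queues[line % self.num_queues]
        if line in queue:  # tag hit: share the pending request with another warp
            queue[line].sharers.append((warp_id, tids))
            return True
        if len(queue) >= self.tags_per_queue:
            return False  # queue full: the request must retry in a later cycle
        queue[line] = Request(line, [(warp_id, tids)])
        return True

    def insert_cycle(self, pending):
        """Allow at most one tag lookup per queue this cycle; return requests that must wait."""
        used, waiting = set(), []
        for req in pending:
            qi = req[0] % self.num_queues
            if qi in used:
                waiting.append(req)  # this queue already did its one lookup this cycle
                continue
            used.add(qi)
            if not self.insert(*req):
                waiting.append(req)
        return waiting


if __name__ == "__main__":
    coalescer = InterWarpCoalescer()
    for w in range(4):
        # Warp w, thread t reads column w of row t in a row-major 32x32 float array.
        addrs = [(t * 32 + w) * 4 for t in range(32)]
        for req in intra_warp_coalesce(w, addrs):
            coalescer.insert(*req)
    entries = [r for q in coalescer.queues for r in q.values()]
    print(len(entries), len(entries[0].sharers))  # prints: 32 4
```

In the example each warp is fully divergent (every thread touches a different 128-byte row), so the intra-warp coalescer emits 32 requests per warp. Warps 0-3 touch the same 32 lines, so the inter-warp queues end up with 32 entries shared by 4 warps each: 32 L1 accesses instead of 128 in this model.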
Selection Logic
• 2 strategies:
  • Default: pick the oldest request
  • Cache-sensitive: prioritize one warp
• Switch between them based on the miss rate over a quantum

Methodology
• Implemented in GPGPU-Sim 3.2.2
  • GTX480 baseline
  • 32 MSHRs
  • 32 kB cache
  • GTO (greedy-then-oldest) scheduler
• Verilog implementation for power and area
• Benchmark criteria
  • Parboil, PolyBench, and Rodinia benchmark suites
  • Memory throughput limited: memory requests waiting for more than 90% of execution time
• WarpPool configuration
  • 2 intra-warp coalescers
  • 32 inter-warp queues
  • 100,000-cycle quantum for the request selector
  • Up to 4 inter-warp coalesces per L1 access

Results: Speedup
[Figure: speedup (x) of an 8-way banked cache, MRPB [1], and WarpPool on memory-divergent, bandwidth-limited, and cache-limited benchmarks; WarpPool annotated 1.38x; off-scale bars labeled 5.16, 2.35, and 3.17]
[1] MRPB: Memory Request Prioritization for Massively Parallel Processors, HPCA 2014

Results: L1 Throughput
[Figure: requests serviced per L1 access (0-1.5) for an 8-way banked cache and WarpPool on memory-divergent, bandwidth-limited, and cache-limited benchmarks]
• The banked cache exploits divergence, not locality
• WarpPool merges requests even when they are not divergent
• No speedup for the banked cache: it still handles only 1 miss/cycle

Results: L1 Misses
[Figure: L1 MPKI as % of baseline (0-100%) for MRPB and WarpPool on memory-divergent, bandwidth-limited, and cache-limited benchmarks]
• MRPB has larger queues
• The oldest-first policy sometimes preserves cross-warp temporal locality
[1] MRPB: Memory Request Prioritization for Massively Parallel Processors, HPCA 2014

Conclusion
• Many kernels are limited by memory throughput
• Key insight: use inter-warp spatial locality to merge requests
• WarpPool improves performance by 1.38x:
  • Merging requests increases L1 throughput by 8%
  • Prioritizing requests decreases L1 misses by 23%
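The two request-selection strategies from the Selection Logic slides can be sketched as a small Python policy object. The slides do not say how the prioritized warp is chosen or what miss-rate rule triggers the switch, so this sketch assumes that the caller passes `priority_warp`, that selection falls back to oldest-first when that warp has nothing queued, and that cache-sensitive mode is enabled for the next quantum whenever the L1 miss rate over the previous quantum exceeds a hypothetical `miss_threshold` of 0.5. The 100,000-cycle default quantum follows the Methodology slide; `Entry`, `SelectionLogic`, `select`, and `tick` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    """A pending inter-warp queue entry, as seen by the selection logic."""
    arrival: int      # cycle the request entered the inter-warp queues
    line_addr: int
    warps: frozenset  # IDs of the warps merged into this request


class SelectionLogic:
    """Oldest-first by default; prioritizes one warp in cache-sensitive mode."""

    def __init__(self, quantum=100_000, miss_threshold=0.5):
        self.quantum = quantum                # cycles per sampling quantum
        self.miss_threshold = miss_threshold  # hypothetical switching threshold
        self.cache_sensitive = False
        self.cycles = self.accesses = self.misses = 0

    def select(self, entries, priority_warp):
        """Return the entry to send to L1 this cycle, or None if nothing is queued."""
        if not entries:
            return None
        candidates = entries
        if self.cache_sensitive:
            favored = [e for e in entries if priority_warp in e.warps]
            if favored:  # otherwise fall back to oldest-first over all entries
                candidates = favored
        return min(candidates, key=lambda e: e.arrival)

    def tick(self, hit=None):
        """Advance one cycle; `hit` is True/False for an L1 access, None for no access."""
        if hit is not None:
            self.accesses += 1
            if not hit:
                self.misses += 1
        self.cycles += 1
        if self.cycles == self.quantum:  # end of quantum: choose the next mode
            rate = self.misses / self.accesses if self.accesses else 0.0
            self.cache_sensitive = rate > self.miss_threshold
            self.cycles = self.accesses = self.misses = 0


if __name__ == "__main__":
    queued = [Entry(5, 0x10, frozenset({2})), Entry(3, 0x20, frozenset({1})),
              Entry(7, 0x30, frozenset({1, 2}))]
    sel = SelectionLogic(quantum=4)
    print(hex(sel.select(queued, priority_warp=2).line_addr))  # oldest entry; prints: 0x20
    for hit in (False, False, False, True):  # 75% miss rate over the quantum
        sel.tick(hit)
    print(hex(sel.select(queued, priority_warp=2).line_addr))  # prints: 0x10
```

Because the cache-sensitive mode only narrows the candidate set, both modes share one age-based comparison, which keeps the per-cycle selection path the same in either mode.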