WS06AFSR_summary_for_CSTR

advertisement

Articulatory Feature-Based Speech Recognition

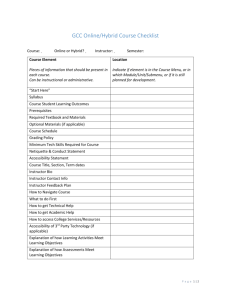

word

word

ind1

ind1

U1

U1

sync1,2

sync1,2

S1

S1

ind2

ind2

U2

U2

sync2,3

sync2,3

S2

S2

ind3

ind3

U3

U3

S3

S3

JHU WS06 Final team presentation

August 17, 2006

Project Participants

Team members:

Karen Livescu (MIT)

Özgür Çetin (ICSI)

Mark Hasegawa-Johnson (UIUC)

Simon King (Edinburgh)

Nash Borges (DoD, JHU)

Chris Bartels (UW)

Arthur Kantor (UIUC)

Partha Lal (Edinburgh)

Lisa Yung (JHU)

Ari Bezman (Dartmouth)

Stephen Dawson-Haggerty (Harvard)

Bronwyn Woods (Swarthmore)

Advisors/satellite members:

Jeff Bilmes (UW), Nancy Chen (MIT), Xuemin Chi (MIT), Trevor Darrell (MIT),

Edward Flemming (MIT), Eric Fosler-Lussier (OSU), Joe Frankel

(Edinburgh/ICSI), Jim Glass (MIT), Katrin Kirchhoff (UW), Lisa Lavoie

(Elizacorp, Emerson), Mathew Magimai (ICSI), Daryush Mehta (MIT), Kate

Saenko (MIT), Janet Slifka (MIT), Stefanie Shattuck-Hufnagel (MIT), Amar

Subramanya (UW)

Why are we here?

Why articulatory feature-based ASR?

Improved modeling of co-articulation

Potential savings in training data

Compatibility with more recent theories of phonology (autosegmental

phonology, articulatory phonology)

Application to audio-visual and multilingual ASR

Improved ASR performance with feature-based observation models in

some conditions [e.g. Kirchhoff ‘02, Soltau et al. ‘02]

Improved lexical access in experiments with oracle feature

transcriptions [Livescu & Glass ’04, Livescu ‘05]

Why now?

A number of sites working on complementary aspects of this idea: U.

Edinburgh (King et al.), UIUC (Hasegawa-Johnson et al.), (Livescu et al.)

Recently developed tools (e.g. GMTK) for systematic exploration of the

model space

Definitions: Pronunciation and observation modeling

language model

P(w)

w = “makes sense...”

pronunciation

model

P(q|w)

q = [ m m m ey1 ey1 ey2 k1 k1 k1 k2 k2 s ... ]

observation

model

P(o|q)

o =

Feature set for observation modeling

pl 1

dg 1

nas

glo

rd

vow

LAB, LAB-DEN, DEN, ALV, POST-ALV, VEL, GLO,

RHO, LAT, NONE, SIL

VOW, APP, FLAP, FRIC, CLO, SIL

+, ST, IRR, VOI, VL, ASP, A+VO

+, aa, ae, ah, ao, aw1, aw2, ax, axr, ay1, ay2, eh, el,

em, en, er, ey1, ey2, ih, ix, iy, ow1, ow2, oy1, oy2,

uh, uw, ux, N/A

SVitchboard

Data: SVitchboard - Small Vocabulary Switchboard

SVitchboard [King, Bartels & Bilmes, 2005] is a collection of

small-vocabulary tasks extracted from Switchboard 1

Closed vocabulary: no OOV issues

Various tasks of increasing vocabulary sizes: 10, … 500 words

Pre-defined train/validation/test sets

and 5-fold cross-validation scheme

Utterance fragments extracted from SWB 1

always surrounded by silence

Word alignments available (msstate)

Whole word HMM baselines already built

SVitchboard = SVB

SVitchboard: amount of data

Vocabulary

size

Utterances

Word tokens

Duration

Duration

(total, hours) (speech, hours)

10

6775

7792

3.2

0.9

25

9778

13324

4.7

1.4

50

12442

20914

6.2

1.9

100

14602

28611

7.5

2.5

250

18933

51950

10.5

4.0

500

23670

89420

14.6

6.4

SVitchboard: example utterances

10 word task

oh

right

oh really

so

well the

500 word task

oh how funny

oh no

i feel like they need a big home a nice place where someone can

have the time to play with them and things but i can't give them

up

oh

oh i know it's like the end of the world

i know i love mine too

SVitchboard: isn’t it too easy (or too hard)?

No (no).

Results on the 500 word task test set using a recent SRI

system:

First pass

42.4% WER

After adaptation

26.8% WER

SVitchboard data included in the training set for this system

SRI system has 50k vocab

System not tuned to SVB in any way

SVitchboard: what is the point of a 10 word task?

Originally designed for debugging purposes

However, results on the 10 and 500 word tasks obtained in this

workshop show good correlation between WERs on the two

tasks:

WER on 500 word task vs 10 word task

WER (%) 500 word task

85

80

75

70

65

60

55

50

15

17

19

21

23

25

WER (%) 10 word task

27

29

SVitchboard: pre-existing baseline word error

rates

Whole word HMMs trained on SVitchboard

these results are from [King, Bartels & Bilmes, 2005]

Built with HTK

Use MFCC observations

Vocabulary

Full validation

set

Test set

10 word

20.2

20.8

500 word

69.8

70.8

SVitchboard: experimental technique

We only perfomed task 1 of SVitchboard (the first of 5 crossfold sets)

Training set is known as “ABC”

Validation set is known as “D”

Test set is known as “E”

SVitchboard defines cross-validation sets

But these were too big for the very large number of

experiments we ran

We mainly used a fixed 500 utterance randomly-chosen

subset of “D” which we call the small validation set

All validation set results reported today are on this set,

unless stated otherwise

SVitchboard: experimental technique

SVitchboard includes word alignments.

We found that using these made training significantly

faster, and gave improved results in most cases

Word alignments are only ever used during training

Word

alignments?

Validation set

Test set

without

65.1

67.7

with

62.1

65.0

Results above is for a monophone HMM with PLP

observations

SVitchboard: workshop baseline word error rates

Monophone HMMs trained on SVitchboard

PLP observations

Vocabulary

Small

validation set

Full validation

set

Test set

10 word

16.7

18.7

19.6

500 word

62.1

-

65.0

SVitchboard: workshop baseline word error rates

Triphone HMMs trained on SVitchboard

PLP observations

500 word task only

System

Small

validation set

Validation set

Test set

HTK

-

56.4

61.2

GMTK /

gmtkTie

56.1

-

59.2

(GMTK system was trained without word alignments)

SVitchboard: baseline word error rates summary

Test set word error rates

Model

10 word

500 word

Whole word

20.8

70.8

Monophone

19.6

65.0

HTK triphone

61.2

GMTK triphone

59.2

gmtkTie

gmtkTie

General parameter clustering and tying tool for GMTK

Written for this workshop

Currently most developed parts:

Decision-tree clustering of Gaussians, using same

technique as HTK

Bottom-up agglomerative clustering

Decision-tree tying was tested in this workshop on various

observation models using Gaussians

Conventional triphone models

Tandem models, including with factored observation

streams

Feature based models

Can tie based on values of any variables in the graph,

not just the phone state (e.g. feature values)

gmtkTie

gmtkTie is more general than HTK HHEd

HTK asks questions about previous/next phone identity

HTK clusters states only within the same phone

gmtkTie can ask user-supplied questions about usersupplied features: no assumptions about states,

triphones, or anything else

gmtkTie clusters user-defined groups of parameters, not

just states

gmtkTie can compute cluster sizes and centroids in lots

of different ways

GMTK/gmtkTie triphone system built in this workshop is at

least as good as HTK system

gmtkTie: conclusions

It works!

Triphone performance at least as good as HTK

Can cluster arbitrary groups of parameters, asking questions

about any feature the user can supply

Later in this presentation, we will see an example of

separately clustering the Gaussians for two observation

streams

Opens up new possibilities for clustering

Much to explore:

Building different decision trees for various factorings of the

acoustic observation vector

Asking questions about other contextual factors

Hybrid models

Hybrid models: introduction

Motivation

Want to use feature-based representation

In previous work, we have successfully recovered feature values

from continuous speech using neural networks (MLPs)

MLPs alone are just frame-by-frame classifiers

Need some “back end” model to decode their output into words

Ways to use such classifiers

Hybrid models

Tandem observations

Hybrid models: introduction

Conventional HMMs generate observations via a likelihood

p(O|state) or p(O|class) using a mixture of Gaussians

Hybrid models use another classifier (typically an MLP) to

obtain the posterior P(class|O)

Dividing by the prior gives the likelihood, which can be used

directly in the HMM: no Gaussians required

Hybrid models: introduction

Advantages of hybrid models include:

Can easily train the classifier discriminatively

Once trained, MLPs will compute P(class|O) relatively fast

MLPs can use a long window of acoustic input frames

MLPs don’t require input feature distribution to have

diagonal covariance (e.g. can use filterbank outputs from

computational auditory scene analysis front-ends)

Hybrid models: standard method

Standard phone-based hybrid

Train an MLP to classify phonemes, frame by frame

Decode the MLP output using simple HMMs for smoothing

(transition probabilities easily derived from phone duration

statistics – don’t even need to train them)

Hybrid models: our method

Feature-based hybrid

Use ANNs to classify articulatory features instead of phones

8 MLPs, classifying pl1, dg1, etc frame-by-frame

One of the motivations for using features is that

it should be easier to build a multi-lingual /

cross-language system this way

Hybrid models: using feature-classifying MLPs

p(dg1 | phoneState) = Non-deterministic CPT (learned)

phoneState

dg1

...

pl1

rd

dummy

variable

MLPs provide “virtual evidence” here

Hybrid models: training the MLPs

We use MLPs to classify speech into AFs, frame-by-frame

Must obtain targets for training

These are derived from phone labels

obtained by forced alignment using the SRI recogniser

this is less than ideal, but embedded training might

help (results later)

MLPs were trained by Joe Frankel (Edinburgh/ICSI) & Mathew

Magimai (ICSI)

Standard feedforward MLPs

Trained using Quicknet

Input to nets is a 9-frame window of PLPs (with VTLN

and per-speaker mean and variance normalisation)

Hybrid models: training the MLPs

Two versions of MLPs were initially trained

Fisher

Trained on all of Fisher but not on any data from

Switchboard 1

SVitchboard

Trained only on the training set of SVB

The Fisher nets performed better, so were used in all hybrid

experiments

Hybrid models: MLP details

MLP architecture is:

input units x hidden units x output units

Feature

MLP architecture

glo

351 x 1400 x 4

dg1

351 x 1600 x 6

nas

351 x 1200 x 3

pl1

351 x 1900 x 10

rou

351 x 1200 x 3

vow

351 x 2400 x 23

fro

351 x 1700 x 7

ht

351 x 1800 x 8

Hybrid models: MLP overall accuracies

Frame-level accuracies

MLPs trained on Fisher

Accuracy computed with

respect to SVB test set

Silence frames

excluded from this

calculation

More detailed analysis

coming up later…

Feature

Accuracy (%)

glo

85.3

dg1

73.6

nas

92.7

pl1

72.6

rou

84.7

vow

65.6

fro

69.2

ht

68.0

Hybrid models: experiments

Using MLPs trained on Fisher using original phone-derived

targets

vs.

Using MLPs retrained on SVB data, which has been aligned

using one of our models

Hybrid model

vs

Hybrid model plus PLP observation

Hybrid models: experiments – basic model

Basic model is trained on

activations from original

MLPs (Fisher-trained)

The only parameters in

this DBN are the

conditional probability

tables (CPTS) describing

how each feature

depends on phone state

Embedded training

Use the model to realign

the SVB data (500 word

task)

Starting from the Fishertrained nets, retrain on

these new targets

Retrain the DBN on the

new net activations

phoneState

dg1

...

pl1

rd

Model

Small

validation

set

Test set

Hybrid

26.0

30.1

Hybrid,

embedded

training

23.1

24.3

Hybrid models: 500 word results

Small validation set

hybrid

66.6

hybrid, embedded

training

62.6

Hybrid models: adding in PLPs

To improve accuracy, we combined the “pure” hybrid model

with a standard monophone model

Can/must weight contribution of PLPs

Used a global weight on each of the 8 virtual evidences,

and a fixed weight on PLPs of 1.0

Weight tuning worked best if done both during training

and decoding

Computationally expensive: must train and cross-validate

many different systems

Hybrid models: adding PLPs

p(dg1 | phoneState) = Non-deterministic CPT (learned)

phoneState

dg1

...

pl1

rd

PLPs

dummy

variable

MLP likelihoods (implemented via virtual evidence in GMTK)

Hybrid models: weighting virtual evidence vs PLP

Word error rate (%)

19

WER (%)

18.5

18

17.5

17

16.5

0

0.2

0.4

0.6

0.8

1

Weight on virtual evidence

1.2

1.4

1.6

Hybrid models: experiments – basic model + PLP

Basic model is augmented

with PLP observations

Generated from

mixtures of Gaussians,

initialised from a

conventional

monophone model

phoneState

dg1

...

pl1

rd

PLPs

A big improvement over

hybrid-only model

A small improvement over

the PLP-only monophone

model

Model

Small

validation

set

Test set

Hybrid

26.0

30.1

PLP only

16.9

20.0

Hybrid +

PLP

16.2

19.6

Hybrid experiments: conclusions

Hybrid models perform reasonably well, but not yet as well

as conventional models

But they have fewer parameters to be trained

So may be a viable approach for small databases:

Train MLPs on large database (e.g. Fisher)

Train hybrid model on small database

Cross-language??

Embedded training gives good improvements for the “pure”

hybrid model

Hybrid models augmented with PLPs perform better than

baseline PLP-only models

But improvement is only small

The best way to use the MLPs trained on Fisher might be to

construct tandem observation vectors…

Using MLPs to transfer knowledge from larger

databases

Scenario

we need to build a system for a domain/accent/language

for which we have only a small amount of data

We have lots of data from other

domains/accents/languages

Method

Train MLP on large database

Use it in either a hybrid or a tandem system in target

domain

Using MLPs to transfer knowledge from larger

databases

Articulatory features

It is plausible that training MLPs to be AF classifiers could

be more accent/language independent than phones

Tandem results coming up shortly will show that, across

very similar domains (Fisher & SVB), AF nets perform as

well or better than phone nets

Hybrid models vs Tandem observations

Standard hybrid

Train an MLP to classify phonemes, frame by frame

Decode the MLP output using simple HMMs (transition

probabilities easily derived from phone duration statistics –

don’t even need to train them)

Standard tandem

Instead of using MLP output to directly obtain the likelihood,

just use it as a feature vector, after some transformations

(e.g. taking logs) and dimensionality reduction

Append the resulting features to standard features, e.g. PLPs

or MFCCs

Use this vector as the observation for a standard HMM with a

mixture-of-Gaussians observation model

Currently used in state-of-art systems such as from SRI

but first a look at structural modifications . . .

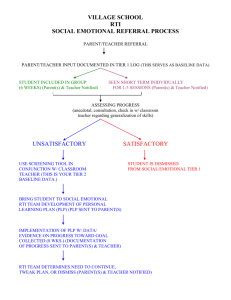

Adding dependencies

Consider the set of random variables constant

Take an existing model and augment it with edges to

improve performance on a particular task

Choose edges greedily, or using a discriminative metric like

the Explaining Away Residue [EAR, Blimes 1998]

One goal is to compare these approaches on our models

EAR(X,Y)=I(X,Y|Q) – I(X,Y)

For instance, let

X = word random variable

Y = degree 1 random variable

Q = {“right”, “another word”, “silence”}

Provides an indication of how valuable it is to model X and Y

jointly

Models

Monophone hybrid + PLP

Monophone hybrid

word

word

phoneState

dg1

pl1 rou

...

MLP likelihoods

(implemented via virtual

evidence in GMTK)

phoneState

pl1 rou

...

PLPs

Which edges?

Learn connections from

classifier outputs to

word

Intuition that the word

will be able to use the

classifier output to

correct other mistakes

being made

word

phoneState

pl1 rou

dg1

...

Results (10 word monophone hybrid)

Baseline monophone hybrid: 26.0% WER on CV, 30.0% Test

Choose edge with best CV score: ROU (25.1%)

Test result: 29.7% WER

Choose two single best edges on CV: VOW + ROU

Test result: 29.9%

Choose edge with highest EAR: GLO

Test result: 30.1%

Choose highest EAR between MLP features: DG1 ↦ PL1

Test result: 31.6% (CV: 26.0%)

In monophone + PLP model , the best result is obtained with

original model.

In Conclusion

The EAR measure would not have chosen the best possible

edges

These models may already be optimized

Once PLPs are added to the model, changing the structure

has little effect

Tandem observation

models

Introduction

Tandem is a method to use the predictions of a MLP as

observation vectors in generative models, e..g. HMMs

Extensively used in the ICSI/SRI systems: 10-20 %

improvement for English, Arabic, and Mandarin

Most previous work used phone MLPs for deriving tandem

(e.g., Hermansky et al. ’00, and Morgan et al. ‘05 )

We explore tandem based on articulatory MLPs

Similar to the approach in Kirchhoff ’99

Questions

Are articulatory tandems better than the phonetic ones?

Are factored observation models for tandem and

acoustic (e.g. PLP) observations better than the

observation concatenation approaches?

Tandem Processing Steps

MLP OUTPUTS

LOGARITHM

PRINCIPAL

COMPONENT ANALYSIS

SPEAKER MEAN/VAR

NORMALIZATION

TANDEM FEATURE

MLP posteriors are processed to

make them Gaussian like

There are 8 articulatory MLPs;

their outputs are joined

together at the input (64 dims)

PCA reduces dimensionality to

26 (95% of the total variance)

Use this 26-dimensional vector

as acoustic observations in an

HMM or some other model

The tandem features are usually

used in combination w/ a

standard feature, e.g. PLP

Tandem Observation Models

Feature concatenation: Simply append tandems to PLPs

- All of the standard modeling methods applicable to this meta

observation vector (e.g., MLLR, MMIE, and HLDA)

Factored models: Tandem and PLP distributions are

factored at the HMM state output distributions

- Potentially more efficient use of free parameters, especially if

streams are conditionally independent

- Can use e.g., separate triphone clusters for each observation

Concatenated Observations

State

PLP

Tandem

Factored Observations

State

PLP

Tandem

p(X, Y|Q) = p(X|Q) p(Y|Q)

Articulatory vs. Phone Tandems

Model

PLP

PLP/Phone Tandem (SVBD)

PLP/Articulatory Tandem (SVBD)

Test WER (%)

67.7

63.0

62.3

PLP/Articulatory Tandem (Fisher)

59.7

Monophones on 500 vocabulary task w/o alignments;

feature concatenated PLP/tandem models

All tandem systems are significantly better than PLP

alone

Articulatory tandems are as good as phone tandems

Articulatory tandems from Fisher (1776 hrs) trained

MLPs outperform those from SVB (3 hrs) trained MLPs

Concatenation vs. Factoring

Model

PLP

PLP / Tandem Concatenation

PLP x Tandem Factoring

PLP

PLP / Tandem Concatenation

PLP x Tandem Factoring

Task

10

500

Test WER (%)

24.5

21.1

19.7

67.7

59.7

59.1

Monophone models w/o alignments

All tandem results are significant over PLP baseline

Consistent improvements from factoring; statistically

significant on the 500 task

Triphone Experiments

Model

# of Clusters

PLP

477

PLP / Tandem Concatenation

880

PLP x Tandem Factoring

467x641

Test WER %

59.2

55.0

53.8

500 vocabulary task w/o alignments

PLP x Tandem factoring uses separate decision trees

for PLP and Tandem, as well as factored pdf’s

A significant improvement from factoring over the

feature concatenation approach

All pairs of results are statistically significant

Summary

Tandem features w/ PLPs outperform PLPs alone for both

monophones and triphones

8-13 % relative improvements (statistically significant)

Articulatory tandems are as good as phone tandems

- Further comparisons w/ phone MLPs trained on Fisher

Factored models look promising (significant results on

the 500 vocabulary task)

- Further experiments w/ tying, initialization

- Judiciously selected dependencies between the factored

vectors, instead of complete independence

Manual feature transcriptions

Main transcription guideline: The output should correspond to

what we would like our AF classifiers to detect

Details

2 transcribers: phonetician (Lisa Lavoie), PhD student in speech

group (Xuemin Chi)

78 SVitchboard utterances

9 utterances from Switchboard Transcription Project for comparison

Multipass transcription using WaveSurfer (KTH)

1st pass: Phone-feature hybrid

2nd pass: All-feature

3rd pass: Discussion, error-correction

Some basic statistics

Overall speed ~1000 x real-time

High inter-transcriber agreement (93% avg. agreement, 85% avg.

string accuracy)

First use to date of human-labeled articulatory feature data for

classifier/recognizer testing

GMTKtoWavesurfer Debugging/Visualization Tool

Input

Output

Per-utterance files

containing Viterbidecoded variables

List of variables

Optional map between

integer values and

labels

Optional reference

transcriptions for

comparison

Per-utterance, perfeature Wavesurfer(KTH)

transcription files

Wavesurfer

configuration for

viewing the decoded

variables, and optionally

comparing to a

reference transcription

General debugging/visualization

for any GMTK model

Summary

Analysis

Improved forced AF alignments obtained using MSState word

alignments combined with new AF-based models

MLP performance analysis shows that retrained classifiers move

closer to human alignments, farther from forced phonetic alignments

Data

Manual transcriptions

PLPs and MLP outputs for all of SVitchboard

New, improved SVitchboard baselines (monophone & triphone)

Tools

gmtkTie

Viterbi path analysis tool

Site-independent parallel GMTK training and decoding scripts

Acknowledgments

Jeff Bilmes (UW), Nancy Chen (MIT), Xuemin Chi (MIT), Trevor Darrell

(MIT), Edward Flemming (MIT), Eric Fosler-Lussier (OSU), Joe Frankel

(Edinburgh/ICSI), Jim Glass (MIT), Katrin Kirchhoff (UW), Lisa Lavoie

(Elizacorp, Emerson), Mathew Magimai (ICSI), Daryush Mehta (MIT),

Florian Metze (Deutsche Telekom), Kate Saenko (MIT), Janet Slifka (MIT),

Stefanie Shattuck-Hufnagel (MIT), Amar Subramanya (UW)

Support staff at

SSLI Lab, U. Washington

IFP, U. Illinois, Urbana-Champaign

CSTR, U. Edinburgh

ICSI

SRI

NSF

DARPA

DoD

Fred Jelinek

Laura Graham

Sanjeev Khudanpur

Jason Eisner

CLSP