Finding Musical Information - Indiana University Computer Science


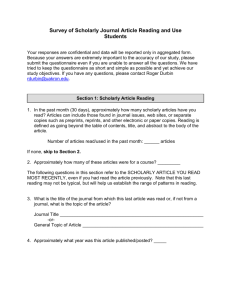

Finding Musical Information
Donald Byrd
School of Informatics and School of Music, Indiana University
7 November 2006
Copyright © 2006, Donald Byrd

Review: Basic Representations of Music & Audio
| | Audio | Time-stamped Events | Music Notation |
|---|---|---|---|
| Common examples | CD, MP3 file | Standard MIDI File | Sheet music |
| Unit | Sample | Event | Note, clef, lyric, etc. |
| Explicit structure | None | Little (partial voicing information) | Much (complete voicing information) |
| Avg. relative storage | 2000 | 1 | 10 |
| Convert to left | - | OK job: easy; good job: hard | OK job: easy; good job: hard |
| Convert to right | 1 note: fairly hard; other: very hard | OK job: hard; good job: very hard | - |
| Ideal for | Music, bird/animal sounds, sound effects, speech | Music | Music |
rev. 4 Oct. 2006

Review: Basic & Specific Representations vs. Encodings
[Diagram. Basic and specific representations (above the line): Audio (waveform); Time-stamped Events (time-stamped MIDI, Csound score, time-stamped expMIDI); Music Notation (Gamelan notation, tablature, CMN, mensural notation). Encodings (below the line): .WAV, Red Book (CD); SMF, Csound score, expMIDI File; Notelist, MusicXML, Finale ETF.]
rev. 15 Feb.

Ways of Finding Music (1)
• How can you identify information/music you’re interested in?
  – You know some of it
  – You know something about it
  – “Someone else” knows something about your tastes
  – => Content, Metadata, and “Collaboration”
• Metadata
  – “Data about data”: information about a thing, not the thing itself (or part of it)
  – Includes the standard library idea of bibliographic information, plus information about the structure of the content
  – The traditional library way
  – Also the basis for iTunes, etc.
  – iTunes, Winamp, etc., use ID3 tags in MP3s
• Content (as in content-based retrieval)
  – What most people think “information retrieval” means
  – The Google, etc. way
• Collaborative
  – “People who bought this also bought…”
rev. 20 Oct. 06

Ways of Finding Music (2)
• Do you just want to find the music now, or do you want to put in a “standing order”?
• => Searching and Filtering
• Searching: data stays the same; information need changes
• Filtering: information need stays the same; data changes
  – Closely related to recommender systems
  – Sometimes called “routing”
• Collaborative approach to identifying music makes sense for filtering, but maybe not for searching(?)
8 Mar. 06

Ways of Finding Music (3)
• Most combinations of searching/filtering and the three ways of identifying desired music make sense and seem useful
• Examples
| | Searching | Filtering |
|---|---|---|
| By content | Shazam, NightingaleSearch, Themefinder | FOAFing the Music, Pandora |
| By metadata | iTunes, Amazon.com, Variations2, etc. | iTunes RSS feed generator, FOAFing the Music |
| Collaboratively | N/A(?) | Amazon.com, MusicStrands |
6 Mar. 06

Searching: Metadata vs. Content
• To librarians, “searching” means searching metadata
  – Has been around as long as library catalogs (c. 300 B.C.?)
• To IR experts, it means searching content
  – Only since the advent of IR: started with experiments in the 1950s
• Ordinary people don’t distinguish
  – Expert estimate: 50% of real-life information needs involve both
    • “I think it’s the Beatles, and it goes like this: da-da-dum…”
• Both approaches are slowly coming together
  – Examples: Variations2 w/ MusArt VocalSearch; FOAFing the Music
  – Need ways to manage both together
20 Oct. 06

Audio-to-Audio Music “Retrieval” (1)
• “Shazam - just hit 2580 on your mobile phone and identify music” (U.K. slogan in 2003)
• Query (music & voices): [audio example]
• Match: [audio example]
• Query (7 simultaneous music streams!): [audio example]
• Avery Wang’s ISMIR 2003 paper
  – http://ismir2003.ismir.net/presentations/Wang.html
• Example of audio fingerprinting
• Uses combinatorial hashing
• Other systems developed by Fraunhofer, Philips
20 Mar. 06

Audio-to-Audio Music “Retrieval” (2)
• Shazam is fantastically impressive to many people
• Have they solved all the problems of music IR? No, (almost) none!
  – Reason: intended signal & match are identical => no time warping, let alone higher-level problems (perception/cognition)
  – Compare Wang’s original attitude (“this problem is impossible”) with Chris Raphael’s (“they did the obvious thing and it worked”)
• Applications
  – Consumer mobile recognition service
  – Media monitoring (for royalties: ASCAP, BMI, etc.)
20 Mar. 06

A Similarity Scale for Content-Based Music IR
• “Relationships” describe what’s in common between two items (audio recordings, scores, etc.) whose similarity is to be evaluated (from closest to most distant)
  – For material in notation form, categories (1) and (2) don’t apply: it’s just “Same music, arrangement”
1. Same music, arrangement, performance, & recording
2. Same music, arrangement, performance; different recording
3. Same music, arrangement; different performance, recording
4. Same music, different arrangement; or different but closely related music, e.g., conservative variations (Mozart, etc.), many covers, minor revisions
5. Different & less closely related music: freer variations (Brahms, much jazz, etc.), wilder covers, extensive revisions
6. Music in same genre, style, etc.
7. Music influenced by other music
9. No similarity whatever
21 Mar. 06

Searching vs. Browsing
• What’s the difference? What is browsing?
  – Managing Gigabytes doesn’t have an index entry for either
  – Lesk’s Practical Digital Libraries (1997) does, but no definition
  – Clear-cut examples of browsing: in a book; in a library
  – In browsing, user finds everything; the computer just helps
• Browsing is obviously good because it gives user control => reduces luck, but few systems have it. Why?
  – “Users are not likely to be pleasantly surprised to find that the library has something but that it has to be obtained in a slow or inconvenient way. Nearly all items will come from a search, and we do not know well how to browse in a remote library.” (Lesk, p. 163)
• OK, but for “and”, read “as long as”!
• Searching is more natural on a computer, browsing in the real world
  – Effective browsing takes very fast computers, widely available now
  – Effective browsing has subtle UI demands
22 Mar. 06

How People Find Text Information
[Diagram: the query and the database each pass through understanding, yielding query concepts and database concepts; matching the concepts produces the results.]

How Computers Find Text Information
[Diagram: the query and the database are processed by stemming, stopping, query expansion, etc., with no understanding on either side; matching produces the results.]
• But in browsing, a person is really doing all the finding
• => The diagram shows (computer) searching, not browsing

Why is Musical Information Hard to Handle?
1. Units of meaning: not clear that anything in music is analogous to words (all representations)
2. Polyphony: “parallel” independent voices, something like characters in a play (all representations)
3. Recognizing notes (audio only)
4. Other reasons
  – Musician-friendly I/O is difficult
  – Diversity: of styles of music, of people interested in music

Units of Meaning (Problem 1)
• Not clear that anything in music is analogous to words
  – No explicit delimiters (like Chinese)
  – Experts don’t agree on “word” boundaries (unlike Chinese)
• Are notes like words? No. Relative, not absolute, pitch is important
• Are pitch intervals like words? No. They’re too low level: more like characters
• Are pitch-interval sequences like words? In some ways, but they
  – Ignore note durations
  – Ignore relationships between voices (harmony)
  – Probably have little correlation with semantics

Independent Voices in Music (Problem 2)
J.S. Bach: “St. Anne” Fugue, beginning [score excerpt]

Independent Voices in Text
MARLENE. What I fancy is a rare steak. Gret?
ISABELLA. I am of course a member of the / Church of England.*
GRET. Potatoes.
MARLENE. *I haven’t been to church for years. / I like Christmas carols.
ISABELLA. Good works matter more than church attendance.
--Caryl Churchill: “Top Girls” (1982), Act 1, Scene 1
Performance (time goes from left to right):
M: What I fancy is a rare steak. Gret?                                                 I haven’t been...
I:                                     I am of course a member of the Church of England.
G:                                                                    Potatoes.

Text vs. Music: Art & Performance
• Text is usually for conveying specific information
  – Even in fiction
  – Exception: poetry
• Music is (almost always) an art
  – Like poetry: not intended to convey hard-edged information
  – Like abstract (visual) art: not “representational”
  – In the arts, connotation is more important than denotation
  – Composer (like poet) usually trying to express things in a new way
• Music is a performing art
  – Like drama, especially drama in verse!
  – Musical work is abstract; many versions exist
  – Sometimes created in score form…
  – Sometimes in the form of a performance
23 Oct. 06

Finding Themes Manually: Indices, Catalogs, Dictionaries
• Manual retrieval of themes via thematic catalogs, indices, & dictionaries has been around for a long time
• Parsons’ index: intended for those w/o musical training
  – Parsons, Denys (1975). The Directory of Tunes and Musical Themes
  – Alphabetical; uses contour only (Up, Down, Repeat)
    • Example: *RUURD DDDRU URDR
• Barlow and Morgenstern’s catalog & index
  – Barlow, Harold & Morgenstern, Sam (1948). A Dictionary of Musical Themes
  – Main part: 10,000 classical themes in CMN
  – Alphabetical index of over 100 pages
    • Gives only pitch classes, ignores octaves (=> also ignores melodic direction)
    • Ignores duration, etc.
    • Example: EFAG#CG
  – They did another volume with vocal themes
rev. 25 Oct. 06

Even Monophonic Music is Difficult
• Melodic confounds: repeated notes, rests, grace notes, etc.
  – Example: repeated notes in the “Ode to Joy” (Beethoven’s 9th, IV)
  – Look in Barlow & Morgenstern index => misses Beethoven…
  – …but finds Dvorak!
• Pitch alone (without rhythm) is misleading
  – Dvorak starts with the same pitch intervals, very different rhythm
  – Another example: from Clifford et al., ISMIR 2006
25 Oct. 06

Finding Themes Automatically: Themefinder
• Themefinder (www.themefinder.org)
• Repertories: classical, folksong, renaissance
  – Classical is probably a superset of Barlow & Morgenstern’s
  – They won’t say because of IPR concerns!
• Allows searching by
  – Pitch letter names (= Barlow & Morgenstern Dictionary)
  – Scale degrees
  – Detailed contour
  – Rough contour (= Parsons Directory)
  – Etc.
• Fixes repeated-note problem with “Ode to Joy”
• …but melodic confounds are still a problem in general
rev. 25 Oct. 06

How Well Does Content-based Retrieval Work?
• Methods for giving content-based queries
  – Better for musically trained people(?)
    • Letter name, scale degree, detailed contour, etc.
    • Playing on MIDI keyboard
  – Better for musically untrained people(?)
    • Rough contour (= Parsons Directory)
    • Humming (MusArt)
    • Tapping rhythm (SongTapper)
  – Etc.
• How well does it work for the musically untrained?
  – MusArt: query by humming is very difficult
  – Uitdenbogerd: Parsons’ method fails
    • “Was Parsons right? An experiment in usability of music representations for melody-based music retrieval”
  – Cf. “Even Monophonic Music is Difficult”
27 Oct. 06

Music IR in the Real World
• Byrd & Crawford (2002). Problems of Music Information Retrieval in the Real World
  – Music perception (psychoacoustics) is subtle
  – Polyphony => salience much harder
  – Realistic (= huge!) databases demand much more efficiency
  – Multiple params: need to consider durations, etc. as well as pitch
  – Melodic confounds, etc. etc.
  – Problems more widely understood than in 2002
• Why is Music IR Hard?
  – Segmentation and units of meaning
  – Polyphony complicates segmentation, voice tracking, salience
  – Recognizing notes in audio
  – A new factor: what aspects does the user care about?
30 Oct. 06

Music Similarity, Covers & Variations
• Covers & Variations in the Similarity Scale
• Mozart “Twinkle” Variations
  – How similar is each variation to the theme?
  – How similar is the Swingle Singers’ set of variations to the original?
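Parsons’ rough contour (the Up/Down/Repeat code of his Directory, and Themefinder’s “rough contour” search) is simple to compute, and comparing two tunes’ contour codes is one crude way to ask “how similar?”. The sketch below is an illustration, not Parsons’ or Themefinder’s own implementation; it assumes melodies are given as MIDI note numbers, and the constant names are hypothetical. Applied to the opening of the “Ode to Joy”, it reproduces the Parsons example *RUURD DDDRU URDR (without the spaces); the second tune is the “Twinkle” theme Mozart varied.

```python
def parsons_code(pitches):
    """Return the Parsons (rough contour) code for a melody.

    pitches: sequence of MIDI note numbers (an assumed input format).
    The first note is written '*'; every later note is compared with the
    note before it: U = up, D = down, R = repeat. Rhythm is ignored.
    """
    if not pitches:
        return ""
    letters = ["*"]
    for prev, cur in zip(pitches, pitches[1:]):
        if cur > prev:
            letters.append("U")
        elif cur < prev:
            letters.append("D")
        else:
            letters.append("R")
    return "".join(letters)


# Opening of the "Ode to Joy": E E F G G F E D C C D E E D D
ODE_TO_JOY = [64, 64, 65, 67, 67, 65, 64, 62, 60, 60, 62, 64, 64, 62, 62]
# Theme of Mozart's "Twinkle" variations: C C G G A A G F F E E D D C
TWINKLE = [60, 60, 67, 67, 69, 69, 67, 65, 65, 64, 64, 62, 62, 60]

print(parsons_code(ODE_TO_JOY))  # *RUURDDDDRUURDR
print(parsons_code(TWINKLE))     # *RURURDDRDRDRD
```

Because the code keeps only direction, any two melodies with the same ups and downs get the same code regardless of interval size or rhythm, which is exactly why contour alone can be misleading.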
• The Doors’ “Light My Fire”
  – Form: vocal (ca. 1’), instrumental (4’ 30”), vocal (1’ 30”) = 7’ 10”
  – Cf. George Winston’s piano solo version (10’)…
  – …& The Guess Who’s Version Two (3’)
  – Which is more similar to the original?
• It depends on what you want!
• Cf. MIREX 2006 Cover Song task
  – 30 songs, 11 versions each
30 Oct. 06

Relevance, Queries, & Information Needs
• Information need: information a user wants or needs
• To convey it to an IR system of any kind, the need must be expressed as a (concrete) query
• Relevance
  – Strict definition: a relevant document (or passage) helps satisfy a user’s query
  – A pertinent document helps satisfy the information need
  – Relevant documents may not be pertinent, and vice versa
  – Looser definition: a relevant document helps satisfy the information need. Relevant documents make the user happy; irrelevant ones don’t
  – Aboutness: related to concepts and meaning
• OK, but what does “relevance” mean in music?
  – In text, it relates to concepts expressed by the words in the query
  – Cf. topicality, utility, & Pickens’ evocativeness
rev. 3 March

Content-based Retrieval Systems: Exact Match
• Exact match (also called Boolean) searching
  – Query terms combined with connectives “AND”, “OR”, “NOT”
  – Add AND terms => narrower search; add OR terms => broader
  – “dog OR galaxy” would find lots of documents; “dog AND galaxy” not many
  – Documents retrieved are those that exactly satisfy the conditions
• Older method, designed for (and liked by) professional searchers: librarians, intelligence analysts
• Still standard in OPACs: IUCAT, etc.
• …and now (again) in web-search systems (not “engines”!)
• Connectives can be implied: an implied connective usually means AND
30 Oct. 06

Content-based Retrieval Systems: Best Match
• “Good” Boolean queries are difficult to construct, especially with large databases
  – Problem is vocabulary mismatch: synonyms, etc.
  – Boston Globe’s “elderly black Americans” example: wrong words for government docs.
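Exact-match (Boolean) searching as defined above reduces to set operations on the lists of documents that contain each term: AND is intersection, OR is union, and AND NOT is set difference. The following is a minimal sketch assuming a toy in-memory inverted index and naive whitespace tokenization; the document collection and helper names are hypothetical, and real OPAC or web-search systems do much more (stemming, phrase search, field restrictions).

```python
from collections import defaultdict


def build_index(docs):
    """Map each lower-cased word to the set of IDs of documents containing it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for word in text.lower().split():
            index[word].add(doc_id)
    return index


def term(index, word):
    """Documents containing the word (empty set if it never occurs)."""
    return index.get(word.lower(), set())


def implied_and(index, query):
    """Treat a bare string of terms as an implicit AND of all of them."""
    result = None
    for word in query.split():
        docs = term(index, word)
        result = docs if result is None else result & docs
    return result or set()


# Hypothetical toy collection.
DOCS = {
    1: "the dog chased the cat",
    2: "a spiral galaxy far away",
    3: "a dog named after a galaxy",
    4: "cat videos",
}
INDEX = build_index(DOCS)

dog_or_galaxy = term(INDEX, "dog") | term(INDEX, "galaxy")   # OR broadens
dog_and_galaxy = term(INDEX, "dog") & term(INDEX, "galaxy")  # AND narrows
dog_not_galaxy = term(INDEX, "dog") - term(INDEX, "galaxy")  # AND NOT excludes

print(sorted(dog_or_galaxy))                     # [1, 2, 3]
print(sorted(dog_and_galaxy))                    # [3]
print(sorted(dog_not_galaxy))                    # [1]
print(sorted(implied_and(INDEX, "dog galaxy")))  # [3]
```

Adding an AND term can only shrink the result set and adding an OR term can only grow it, which is the narrower/broader behavior described above; every returned document satisfies the conditions exactly, with no ranking.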
• New approach: best match searching
  – Query terms just strung together
  – Add terms => broader & differently-focused search
  – “dog galaxy”
22 March 06

Luck in Searching (1)
• Jamie Callan showed friends (ca. 1997) how easy it was to search the Web for info on his family
  – No synonyms for family names => few false negatives (recall is very good)
  – Callan is a very unusual name => few false positives (precision is great)
  – But Byrd (for my family) gets lots of false positives
  – So does “Donald Byrd”…and “Donald Byrd” music, and “Donald Byrd” computer
• Some information needs are easy to satisfy; some very similar ones are difficult
• Conclusion: luck is a big factor
21 Mar. 06

Luck in Searching (2)
• Another real-life example: find information on…
  – Book weights (product for holding books open)
• Query (AltaVista, ca. 1999): “book weights” got 60 hits, none relevant. Examples:
  1. HOW MUCH WILL MY BOOK WEIGH ? Calculating Approximate Book weight...
  2. [A book ad] ... No. of Pages: 372, Paperback Approx. Book Weight: 24oz.
  7. “My personal favorite...is the college sports medicine text book Weight Training: A scientific Approach...”
• Query (Google, 2006): “book weights” got 783 hits; 6 of the first 10 relevant
• => With text, luck is not nearly as big a factor as it was
• Relevant because music metadata is usually text
• With music, luck is undoubtedly still a big factor
  – Probable reason: IR technology crude compared to Google
  – Definite reason: databases (content limited; metadata poor quality)
22 Mar. 06

IR Evaluation: Precision and Recall (1)
• Precision: number of relevant documents retrieved, divided by the total number of documents retrieved.
  – The higher the better; 1.0 is a perfect score.
  – Example: 6 of 10 retrieved documents relevant; precision = 0.6
  – Related concept: “false positives”: all retrieved documents that are not relevant are false positives.
• Recall: number of relevant documents retrieved, divided by the total number of relevant documents.
  – The higher the better; 1.0 is a perfect score.
  – Example: 6 of 20 relevant documents retrieved; recall = 0.3
  – Related concept: “false negatives”: all relevant documents that are not retrieved are false negatives.
• Fundamental to all IR, including text and music
• Applies to passage- as well as document-level retrieval
24 Feb.

Precision and Recall (2)
• Precision and recall apply to any Boolean (yes/no, etc.) classification
• Precision = avoiding false positives; recall = avoiding false negatives
• Venn diagram of relevant vs. retrieved documents
  [Diagram: overlapping “Retrieved” and “Relevant” sets, dividing all documents into four regions]
  1: relevant, not retrieved
  2: relevant, retrieved
  3: not relevant, retrieved
  4: not relevant, not retrieved
20 Mar. 06

NightingaleSearch: Searching Complex Music Notation
• Dialog options (too many for real users!) in groups
• Main groups:
  – Match pitch (via MIDI note number)
  – Match duration (notated, ignoring tuplets)
  – In chords, consider...
• Search for Notes/Rests searches the score in the front window: one at a time (find next) or “batch” (find all)
• Search in Files versions: (1) search all Nightingale scores in a given folder, (2) search a database in our own format
• Does passage-level retrieval
• Result list displayed in a scrolling-text window; “hot linked” via double-click to documents

Nightingale Search Dialog
[Screenshot of the search dialog]

Bach: “St. Anne” Fugue, with Search Pattern
[Score excerpt]
26 Feb.

Result Lists for Search of the “St. Anne” Fugue
Exact Match (pitch tolerance = 0, match durations)
1: BachStAnne_65: m.1 (Exposition 1), voice 3 of Manual
2: BachStAnne_65: m.7 (Exposition 1), voice 1 of Manual
3: BachStAnne_65: m.14 (Exposition 1), voice 1 of Pedal
4: BachStAnne_65: m.22 (Episode 1), voice 2 of Manual
5: BachStAnne_65: m.31 (Episode 1), voice 1 of Pedal
Best Match (pitch tolerance = 2, match durations)
1: BachStAnne_65: m.1 (Exposition 1), voice 3 of Manual, err=p0 (100%)
2: BachStAnne_65: m.7 (Exposition 1), voice 1 of Manual, err=p0 (100%)
3: BachStAnne_65: m.14 (Exposition 1), voice 1 of Pedal, err=p0 (100%)
4: BachStAnne_65: m.22 (Episode 1), voice 2 of Manual, err=p0 (100%)
5: BachStAnne_65: m.31 (Episode 1), voice 1 of Pedal, err=p0 (100%)
6: BachStAnne_65: m.26 (Episode 1), voice 1 of Manual, err=p2 (85%)
7: BachStAnne_65: m.3 (Exposition 1), voice 2 of Manual, err=p6 (54%)
8: BachStAnne_65: m.9 (Exposition 1), voice 4 of Manual, err=p6 (54%)
26 Feb.

Precision and Recall with a Fugue Subject
• “St. Anne” Fugue has 8 occurrences of the subject
  – 5 are real (exact), 3 tonal (slightly modified)
• Exact-match search for pitch and duration finds 5 passages, all relevant => precision 5/5 = 1.0, recall 5/8 = .625
• Best-match search for pitch (tolerance 2) and exact-match for duration finds all 8 => precision and recall both 1.0
  – Perfect results, but why possible with such a simple technique?
  – Luck!
• Exact-match search for pitch, ignoring duration, finds 10, 5 relevant => precision 5/10 = .5, recall 5/8 = .625
20 Mar. 06

Precision and Recall (3)
• In text, what we want is concepts; but what we have is words
• Comment by Morris Hirsch (1996)
  – “If you use any text search system, you will soon encounter two language-related problems: (1) low recall: multiple words are used for the same meaning, causing you to miss documents that are of interest; (2) low precision: the same word is used for multiple meanings, causing you to find documents that are not of interest.”
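The precision and recall figures for the “St. Anne” fugue searches above can be checked with a few lines of code. This is a sketch under stated assumptions: passages are identified by their starting measure numbers from the result lists, and the five non-relevant passages returned by the pitch-only search are not identified in the slides, so they appear here as hypothetical placeholder labels.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for collections of retrieved and relevant items."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)  # relevant AND retrieved (region 2 of the Venn diagram)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Starting measures of the 8 subject entries, taken from the result lists.
REAL = {1, 7, 14, 22, 31}  # real (exact) entries
TONAL = {26, 3, 9}         # tonal (slightly modified) entries
RELEVANT = REAL | TONAL

exact_match = REAL          # pitch tolerance 0, match durations
best_match = REAL | TONAL   # pitch tolerance 2, match durations
# Pitch only, ignoring durations: 5 relevant + 5 false positives.
# The false positives are hypothetical placeholders, not real passages.
pitch_only = REAL | {"fp1", "fp2", "fp3", "fp4", "fp5"}

for name, result in [("exact", exact_match), ("best", best_match), ("pitch only", pitch_only)]:
    p, r = precision_recall(result, RELEVANT)
    print(f"{name}: precision={p:.3f}, recall={r:.3f}")
# exact: precision=1.000, recall=0.625
# best: precision=1.000, recall=1.000
# pitch only: precision=0.500, recall=0.625
```

Returning 0.0 for an empty retrieved set is a convention chosen for this sketch; some evaluations treat precision as undefined in that case.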
• Precision = avoid false positives; recall = avoid false negatives
• Music doesn’t have words, and it’s not clear if it has concepts, but that doesn’t help :-)
20 March 06

Precision and Recall (4)
• Depend on relevance judgments
• Difficult to measure in real-world situations
• Precision in real world (ranking systems)
  – Cutoff, R-precision
• Recall in real world: no easy way to compute
  – Collection may not be well-defined
  – Even if it is, practical problems for large collections
  – Worst case (too bad): the World Wide Web
rev. 26 Feb.

Efficiency in Simple Music Searches
• For acceptable efficiency, must avoid exhaustive searching
  – Design goal of UltraSeek (late 1990s): 1000 queries/sec.
• Indexing is standard for text IR
  – Like the index of a book: says where to find each occurrence of the units indexed
  – Requires segmentation into units
  – Natural units (e.g., words) are great if you can identify them!
  – For polyphonic music, very difficult
  – Artificial units (e.g., n-grams) are better than nothing
  – W/ monophonic music, 1 param at a time, not hard
• Downie (1999) adapted a standard text-IR system (w/ indexing) to music, using n-grams
  – Good results with 10,000 folksongs
  – But not a lot of music, & not polyphonic
29 March 06

Example: Indexing Monophonic Music
• Text index entry (words): “Music: 3, 17, 142”
• Text index entry (character 3-grams): “usi: 3, 14, 17, 44, 56, 142, 151”
Kern and Fields: The Way You Look Tonight [score excerpt]
  Pitch (interval, with index code): -7 (18), +2 (27), +2 (27), +1 (26), -1 (24), -2 (23), +2 (27)
  Duration: H, H, E, E, E, E, H, E
• Cf. Downie (1999) and Pickens (2000)
• Assume the above song is no. 99
• Music index entry (pitch 1-grams): “18: 38, 45, 67, 71, 99, 132, 166”
• Music index entry (pitch 2-grams): “1827: 38, 99, 132”
• Music index entry (duration 2-grams): “HH: 67, 99”
11 March

Efficiency in More Complex Music Searches (1)
• More than one parameter at a time (pitch & duration is the obvious combination)
• Polyphony makes indexing much harder
  – Byrd & Crawford (2002): “polyphony will prove to be the source of the most intractable problems [in music IR].”
• Polyphony plus multiple parameters is particularly nasty
  – Techniques are very different from text
  – First published research only 10 years ago
• Indexing polyphonic music discussed
  – speculatively by Crawford & Byrd (1997)
  – in implementation by Doraisamy & Rüger (2001)
    • Used n-grams for pitch alone; duration alone; both together
30 Oct. 06

Efficiency in More Complex Music Searches (2)
• Alternative to indexing: signature files
• A signature is a string of bits that “summarizes” a document (or passage)
• For text IR, inferior to inverted lists in nearly all real-world situations (Witten et al., 1999)
• For music IR, tradeoffs may be very different
• Audio fingerprinting systems (at least some) use signatures
  – Special case: always a known-item search
  – Very fast, e.g., Shazam…and new MusicDNS (16M tracks!)
• No other research yet on signatures for music (as far as I know)
29 March 06

Hofstadter on indexing (and “aboutness”!) in text
• Idea: a document is relevant to a query if it’s about what the query is about
  – “Aboutness” = underlying concepts
  – Applies to any searching method, with indexing or not
• Hofstadter wrote (in Le Ton Beau de Marot):
  – “My feeling is that only the author (and certainly not a computer program) can do this job well.
    Only the author, looking at a given page, sees all the way to the bottom of the pool of ideas of which the words are the mere surface, and only the author can answer the question, ‘What am I really talking about here, in this paragraph, this page, this section, this chapter?’”
• Hard for representational material: prose, realistic art…
• But what about poetry, music, non-representational work?
• For music, cf. musical ideas
• Pickens: the music equivalent of relevance is evocativeness
rev. 31 Oct. 06

Digital Libraries & Finding via Metadata
• A digital library isn’t just a library with an OPAC!
• DL as collection/information system
  – “a collection of information that is both digitized and organized” -- Michael Lesk, National Science Foundation
  – “networked collections of digital text, documents, images, sounds, scientific data, and software” -- President’s Information Technology Advisory Council report
• DL as organization
  – “an organization that provides the resources, including the specialized staff, to select, structure, offer intellectual access to, interpret, distribute, preserve the integrity of, and ensure the persistence over time of collections of digital works...” -- Digital Library Federation

Variations2 Digital Library Scenario
• From Dunn et al. article, CACM 2005
• Music graduate student wants to compare performances of Brahms’ Piano Concerto #2
• With music search tool, searches for works for which composer’s name contains “brahms” & title contains “concerto”
• Scanning results, sees the work he wants & clicks => list of available recordings & scores
• Selects recordings of 3 performances & encoded score; creates bookmarks for each
• Instructs system to synchronize each recording with the score & then uses a set of controls that allow him to play back the piece & view the score, cycling among performances on the fly
• To help navigate, creates form diagrams for each movement by dividing a timeline into sections & grouping those sections into higher-level structures
• Uses timeline to move within the piece, comparing performances & storing his notes as text annotations attached to individual time spans
• At one point, to find a particular section, plays a sequence of notes on a MIDI keyboard attached to his computer, & system locates the sequence in the score
• When finished, he exports the timeline as an interactive web page & e-mails it to his professor for comment
3 Nov. 2006

Variations Project
• Digital library of music sound recordings and scores
• Original concept 1990; online since 1996
• Accessible in Music Library and other select locations; restricted for IPR reasons
• Used daily by large student population
• In 2003: 6900 titles, 8000 hours of audio
  – 5.6 TB uncompressed
  – 1.6 TB compressed
• Opera, songs, instrumental music, jazz, rock, world music

Focus on Audio
• High-demand portion of collection
• Fragile formats
• Lack of previous work; uniqueness
• …Especially Audio Reserves
  – Half of sound-recording use is from reserves
  – Problems with existing practices
    • Cassette tape dubs, analog distribution systems
  – Concentrated use of a few items at any given time

Functional Requirements for Bibliographic Records (FRBR)
• Represents much more complex relationships than MARC
  – Work concept is new!
• Much of the following is from a presentation by Barbara Tillett (2002), “The FRBR Model (Functional Requirements for Bibliographic Records)”
25 April

FRBR Entities
• Group 1: Products of intellectual & artistic endeavor
  1. Work (completely abstract)
  2. Expression
  3. Manifestation
  4. Item (completely concrete: you can touch one)
  – Almost hierarchical; “almost” since works can include other works
• Group 2: Those responsible for the intellectual & artistic content
  – Person
  – Corporate body
• Group 3: Subjects of works
  – Groups 1 & 2, plus
  – Concept
  – Object
  – Event
  – Place
25 April

Relationships of Group 1 Entities: Example
w1  J.S. Bach’s Goldberg Variations
  e1  Performance by Glenn Gould in 1981
    m1  Recording released on 33-1/3 rpm sound disc in 1982 by CBS Records
      i1a, i1b, i1c  Copies of that 33-1/3 rpm disc acquired in 1984-87 by the Cook Music Library
    m2  Recording re-released on compact disc in 1993 by Sony
      i2a, i2b  Copies of that compact disc acquired in 1996 by the Cook Music Library
    m3  Digitization of the Sony re-release as MP3 in 2000
25 April

Relationships of Group 1 Entities
Work → (is realized through) → Expression → (is embodied in) → Manifestation → (is exemplified by) → Item
[Diagram legend: recursive; one; many]
25 April

FRBR vs. Variations2 Data Models
| FRBR | Variations2 |
|---|---|
| Group 1: Work, Expression, Manifestation, Item | Work, Instantiation, Media object |
| Group 2: Person, Corporate body (any named organization?) | Contributor (person or organization), Container |
Items in blue are close, though not exact, equivalents.
25 April; rev. 26 April

The Elephant in the Room: Getting Catalog Information into FRBR (or Variations2)
• Recent MLA discussion by Ralph Papakhian, etc.
  – Cataloging even to current standards (MARC) is expensive
  – FRBR & Variations2 are both much more demanding
  – Michael Lesk/NSF: didn’t like funding metadata projects because “they always said every other project should be more expensive”!
• Partial solution 1: collaborative cataloging (à la OCLC)
• Partial solution 2: take advantage of existing cataloging
  – Variations2: simple “Import MARC” feature
  – VTLS: converting MARC => FRBR is much more ambitious
• Why not join forces and save everyone a lot of trouble?
• Good idea; under discussion now
25 April

Metadata Standards & Usage
• In libraries
  – MARC (standard, in use since the 1960s)
  – FRBR (new proposed standard, w/ “works”, etc.)
  – Variations2 (IU only, but like FRBR w/ “works”)
• Elsewhere
  – General purpose: Dublin Core (for XML)
  – Specialized: MP3 ID3 tags
• Examples of use
  – MusicXML: subset of Dublin Core(?)
  – iTunes: subset of ID3
6 Nov. 06

Review: Searching vs. Browsing
• What’s the difference? What is browsing?
  – Managing Gigabytes doesn’t have an index entry for either
  – Lesk’s Practical Digital Libraries (1997) does, but no definition
  – Clear-cut examples of browsing: in a book; in a library
  – In browsing, user finds everything; the computer just helps
• Browsing is obviously good because it gives user control => reduces luck, but few systems have it. Why?
  – “Users are not likely to be pleasantly surprised to find that the library has something but that it has to be obtained in a slow or inconvenient way. Nearly all items will come from a search, and we do not know well how to browse in a remote library.” (Lesk, p. 163)
• OK, but for “and”, read “as long as”!
• Searching is more natural on a computer, browsing in the real world
  – Effective browsing takes very fast computers, widely available now
  – Effective browsing has subtle UI demands
22 Mar. 06

Browsing and Visualization
• Pampalk et al. on “Browsing different Views”
• “Browsing is obviously good because it gives user control”
• Pampalk’s way does that(?)
• High-dimensional spaces often useful to model documents
  – Features become dimensions
  – A point in high-dimensional space represents a document
  – Pandora: each “gene” is a feature => 400 dimensions
  – MusicStrands: each song is a feature => 4,000,000 dimensions!
• Visualization is limited to 2 (or sometimes 3) dimensions => must reduce from high-dimensional space
• Pampalk uses Self-Organizing Maps (SOMs)
• Other methods: MDS, spring embedding, PCA, etc.
3 Nov. 2006
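As a concrete illustration of reducing a high-dimensional feature space to two dimensions for display, here is a minimal principal component analysis (PCA) sketch, one of the “other methods” listed above; it is not Pampalk’s SOM approach. It uses only the Python standard library (power iteration on the covariance matrix, keeping the second component orthogonal to the first), so it is meant for small toy examples; the function name and the song feature vectors are hypothetical.

```python
import math
import random


def pca_2d(vectors, iterations=200, seed=0):
    """Project feature vectors (one per song/document) onto their top two
    principal components, returning one (x, y) pair per vector.
    Assumes at least two features per vector."""
    n, dim = len(vectors), len(vectors[0])
    if dim < 2:
        raise ValueError("need at least two features")
    # Center the data so each feature has zero mean.
    mean = [sum(v[j] for v in vectors) / n for j in range(dim)]
    centered = [[v[j] - mean[j] for j in range(dim)] for v in vectors]
    # Covariance matrix (dim x dim).
    cov = [[sum(row[a] * row[b] for row in centered) / n for b in range(dim)]
           for a in range(dim)]

    def orthogonalize(vec, basis):
        # Remove components along already-found (unit-length) principal axes.
        for c in basis:
            dot = sum(x * y for x, y in zip(vec, c))
            vec = [x - dot * y for x, y in zip(vec, c)]
        return vec

    rng = random.Random(seed)
    components = []
    for _ in range(2):
        vec = orthogonalize([rng.random() + 0.1 for _ in range(dim)], components)
        norm = math.sqrt(sum(x * x for x in vec))
        vec = [x / norm for x in vec]
        # Power iteration: repeatedly multiply by the covariance matrix.
        for _ in range(iterations):
            w = [sum(cov[a][b] * vec[b] for b in range(dim)) for a in range(dim)]
            w = orthogonalize(w, components)
            norm = math.sqrt(sum(x * x for x in w))
            if norm < 1e-12:  # no variance left in the remaining directions
                break
            vec = [x / norm for x in w]
        components.append(vec)

    # Coordinates of each centered vector along the two components.
    return [tuple(sum(x * y for x, y in zip(row, c)) for c in components)
            for row in centered]


# Hypothetical 4-feature descriptions of five songs.
songs = [[120, 0.8, 0.1, 0.3], [118, 0.7, 0.2, 0.3], [70, 0.2, 0.9, 0.6],
         [72, 0.3, 0.8, 0.7], [95, 0.5, 0.5, 0.5]]
for x, y in pca_2d(songs):
    print(f"({x:7.2f}, {y:7.2f})")
```

PCA is linear and keeps the directions of greatest variance, so a feature with a large numeric range (here the first one) dominates unless features are normalized first; nonlinear methods such as SOMs or MDS preserve neighborhoods or distances in other ways.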