pptx - Daniel Wong

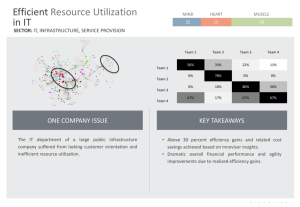

Implications of High Energy Proportional Servers on Cluster-wide Energy Proportionality
Daniel Wong, Murali Annavaram
Ming Hsieh Department of Electrical Engineering, University of Southern California
HPCA-2014

Overview
| Background
| Part I – Effect of High EP Server on Cluster-wide EP
  ❖ Cluster-level Packing may hinder Cluster EP
| Part II – Server-level Low Power Mode Scaling
  ❖ Active Low Power Mode can overcome multi-core scaling challenges
| Conclusion

BACKGROUND
Energy Proportionality and Cluster-level Techniques

Background – EP Trends
| Poor Server EP prompted Cluster-level techniques to mask Server EP and improve cluster-wide EP
  ❖ Ex. Packing techniques
[Figure: Energy Proportionality (0 to 1.2) vs. published SPECpower date (Nov-07 to Nov-13)]
How do Cluster-level techniques impact the perceived Energy Proportionality of Clusters?

Background
| Uniform Load Balancing
  ❖ Minimize response time
[Figure: four servers, each labeled with its utilization]

Background
| Uniform Load Balancing
  ❖ Cluster-wide EP tracks server EP
[Figure: Server EP Curve and Cluster EP Curve; power (% of server or cluster peak power) vs. utilization]
If the Server's EP is poor, then the Cluster's EP will be poor.

Background
| When server EP is poor, we need to mask the poor EP with Dynamic Capacity Management
  ❖ Uses Packing algorithms to minimize the number of active servers
[Figure: Server EP Curve and Cluster EP Curve; power (% of server or cluster peak power) vs. utilization]
Turn off servers when capacity is not needed; turn on servers when needed.
Active servers run at higher utilization.
Packing enables near-perfect cluster proportionality in the presence of low server EP.

Part I – TODAY SERVER EP IS GETTING BETTER.
WHAT DOES THIS MEAN FOR CLUSTER-WIDE EP?

Cluster-wide EP Methodology
| 9-day utilization traces from various USC data center servers
  ❖ Significant low utilization periods
| Evaluated 3 servers
  ❖ LowEP: EP = 0.24
  ❖ MidEP: EP = 0.73
  ❖ HighEP: EP = 1.05
  ❖ EP curve used as power model
  ❖ Modeled on/off power penalty
| Cluster w/ 20 servers
| Measured Util & Power @ 1 min

Measuring Energy Proportionality
$$\mathrm{EP} = 1 - \frac{\mathrm{Area}_{\mathrm{actual}} - \mathrm{Area}_{\mathrm{ideal}}}{\mathrm{Area}_{\mathrm{ideal}}}$$
[Figure: Actual and Ideal power curves; power (% of peak power) vs. utilization]
| Ideal EP = 1.0
| Cluster-wide EP
  ❖ Take average at each util.
  ❖ 3rd degree poly. best fit

Cluster-wide EP Methodology
| G/G/k queuing model based simulator
  ❖ Based on concept of Capability
[Figure: Utilization Trace, Time-varying Arrival Rate, Load Balancing (Uniform L.B. & Packing (Autoscale)), Servers 1 to n, each with slots 1 to k]
Utilization trace as proxy for time-varying arrival rate: at 30% utilization, 30n requests are generated.
k represents capability of server. Baseline, k = 100.
Lack of service request time in traces. Service rate: exponential with mean of 1 s.

Packing techniques are highly effective
| Uniform: Cluster-wide EP tracks Server EP
| When server EP is poor, packing improves Cluster EP
  ❖ Turning off idle servers, higher util. for active servers
[Figure: LowEP (0.24) Server. Uniform: Cluster EP = 0.24. Packing: Cluster EP = 0.69.]

With server EP improvements?
| Packing benefits diminish
[Figure: MidEP (0.73) Server. Uniform: Cluster EP = 0.73. Packing: Cluster EP = 0.79.]

With near-perfect EP?
| It may be favorable to forego cluster-level packing techniques
[Figure: HighEP (1.05) Server. Uniform: Cluster EP = 1.05. Packing: Cluster EP = 0.82.]
We may have now reached a turning point where servers alone may offer more energy proportionality than cluster-level packing techniques can achieve.

Why Packing hinders Cluster-Wide EP
| Servers have wake-up delays
| Standby servers are required to meet QoS levels
[Figure: power (% of cluster peak power) vs. utilization]

Cluster-level Packing may hinder Cluster-Wide EP
| Servers have wake-up delays
| Standby servers are required to meet QoS levels
[Figure: power (% of cluster peak power) vs. utilization]
By foregoing Cluster-level Packing, Uniform Load Balancing can expose the underlying Server's EP.
This enables Server-level EP improvements to translate to Cluster-wide EP improvements.

KnightShift – Improving Server-level EP
| Basic Idea: front a high-power primary server with a low-power compute node, called the Knight
| During low utilization, work shifts to the Knight node
| During high utilization, work shifts back to the primary
[Figure: block diagram of the Knight Node and Server Node; labeled components: Motherboard, CPU, Memory, Chipset, LAN, SATA, Disk, Power, Simple Router]

Cluster EP with KnightShift
| Previously, Cluster-level packing masked Server EP
| If we forego Cluster-level packing, EP improvements by server-level low power modes can now translate into cluster-wide EP improvements!
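The EP metric from the "Measuring Energy Proportionality" slide and the two load-balancing policies compared in Part I can be sketched in a few lines of Python. This is only a minimal illustration, not the simulator used in the talk. The linear server power curve, all function names, and the packing rule (run the fewest servers that cover the load, with the rest drawing no power) are assumptions, and wake-up delays, standby servers, and on/off penalties are not modeled.

```python
import math

def trapezoid_area(xs, ys):
    """Area under the piecewise-linear curve through the points (xs, ys)."""
    return sum((xs[i + 1] - xs[i]) * (ys[i] + ys[i + 1]) / 2.0
               for i in range(len(xs) - 1))

def energy_proportionality(utils, powers):
    """EP = 1 - (area_actual - area_ideal) / area_ideal.

    utils runs from 0.0 to 1.0 and powers is normalized to peak power,
    so the ideal curve is simply power == utilization.
    """
    area_actual = trapezoid_area(utils, powers)
    area_ideal = trapezoid_area(utils, utils)
    return 1.0 - (area_actual - area_ideal) / area_ideal

def server_power(util, idle_fraction=0.5):
    """Hypothetical linear server: idle_fraction of peak at idle, 1.0 at peak."""
    return idle_fraction + (1.0 - idle_fraction) * util

def cluster_power_uniform(cluster_util, n_servers):
    """Uniform load balancing: every server runs at the cluster utilization."""
    return n_servers * server_power(cluster_util)

def cluster_power_packing(cluster_util, n_servers):
    """Idealized packing: fewest active servers, all others off (zero power)."""
    load = cluster_util * n_servers        # load in units of whole servers
    active = math.ceil(load - 1e-9)        # tolerance guards float round-off
    if active == 0:
        return 0.0
    return active * server_power(load / active)

N = 20                                     # cluster size used in the slides
utils = [i / 100 for i in range(101)]
peak = N * server_power(1.0)               # cluster peak power
ep_uniform = energy_proportionality(
    utils, [cluster_power_uniform(u, N) / peak for u in utils])
ep_packing = energy_proportionality(
    utils, [cluster_power_packing(u, N) / peak for u in utils])
print(f"uniform EP = {ep_uniform:.2f}, packing EP = {ep_packing:.2f}")
```

Under these assumptions the uniform cluster inherits the server's EP of 0.5, while idealized packing lands close to 1. This mirrors the low-EP case only. Reproducing the HighEP result would need the measured server curves and the standby and wake-up costs that this sketch leaves out.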
[Figure: average power = 890 W and average power = 505 W; labels: Uniform, w/ KnightShift]

Part I – Take away
| Cluster-level Packing techniques may hinder Cluster-wide EP by masking the underlying Server's EP
| Server-level low power modes make it even more attractive to use Uniform Load Balancing

Part II – SERVER-LEVEL LOW POWER MODE SCALING

Server-level Inactive Low Power Modes
| PowerNap – Sleep for fine-grain idle periods
| PowerNap w/ Multi-Core
[Figure: request timelines for a single core and for Cores 1 to 4, with idle periods marked; axis: time]
Naturally occurring idle periods are disappearing with multi-core scaling.

Server-level Inactive Low Power Modes
| Idleness scheduling algorithms used to create artificial idle periods
| Batching – Timeout-based queuing of requests
| Dreamweaver – Batching + Preemption
[Figure: request timelines for Cores 1 to 4, with batch and idle periods marked; axis: time]

Core count vs Idle Periods
| Apache workload @ 30% Utilization
[Figure: % time at idle (0% to 40%) vs. number of cores (1, 2, 4, 8, 16, 32, 64)]
| As core count increases, idleness scheduling algorithms require greater latency penalty to remain effective
| Possible Solution – Active Low Power Mode

Server-level Low Power Mode Challenges
| Require tradeoff of response time
  ❖ Latency Slack: 99th percentile response time impact required before low power mode can be effective
| As server core count increases, so does the required latency slack due to disappearing idle periods
How does increasing core count impact latency slack of various server-level low power modes?
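To make the latency-slack idea concrete, the sketch below simulates one core with timeout-based batching and reports the 99th percentile latency normalized to a no-batching baseline. It is a toy model, not the simulator or the DreamWeaver algorithm from the talk. The function names, the fixed service time, the arrival pattern, and the rule "a request that finds the core asleep waits `batch_timeout` seconds before the core wakes" are all assumptions made for illustration.

```python
import math

def simulate(arrivals, service_time, batch_timeout=0.0):
    """Return (latencies, wakeups) for one FIFO core.

    The core sleeps whenever its queue is empty. A request that finds the
    core asleep starts a batch: the core wakes batch_timeout seconds later,
    and requests arriving in the meantime queue behind the first one.
    """
    latencies = []
    wakeups = 0
    busy_until = 0.0                  # when the core finishes all queued work
    for t in arrivals:
        if t >= busy_until:           # core is asleep: start a new batch
            start = t + batch_timeout
            wakeups += 1
        else:                         # core is awake or about to wake: queue up
            start = busy_until
        busy_until = start + service_time
        latencies.append(busy_until - t)
    return latencies, wakeups

def percentile(values, q):
    """Nearest-rank percentile for an integer q between 1 and 100."""
    ordered = sorted(values)
    rank = math.ceil(len(ordered) * q / 100)
    return ordered[rank - 1]

def normalized_p99(baseline, candidate):
    """99th percentile latency of candidate relative to baseline."""
    return percentile(candidate, 99) / percentile(baseline, 99)

# Hypothetical workload: pairs of requests 0.4 s apart, one pair every 2 s,
# 0.3 s of service each, which works out to 30% utilization.
arrivals = sorted([2.0 * i for i in range(100)] +
                  [2.0 * i + 0.4 for i in range(100)])
base_lat, base_wakeups = simulate(arrivals, service_time=0.3)
batch_lat, batch_wakeups = simulate(arrivals, service_time=0.3,
                                    batch_timeout=0.5)
slack = normalized_p99(base_lat, batch_lat)
print(f"wake-ups: {base_wakeups} -> {batch_wakeups}, "
      f"normalized p99 latency = {slack:.2f}")
```

In this example batching halves the number of wake-ups, so the idle time is consolidated into fewer, longer periods, and the price is a 99th percentile latency well above the baseline. That latency ratio is the quantity the latency-slack definition above refers to.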
Methodology
| BigHouse simulator
  ❖ Stochastic queuing simulation
  ❖ Synthetic arrival/service traces
  ❖ 4 workloads (Apache, DNS, Mail, Shell) derived from departmental servers

Effect of core count on energy savings
| Latency slack vs Energy Savings tradeoff curves
| Dreamweaver running Apache @ 30% Utilization
[Figure: Energy Savings (0 to 0.5) vs. Normalized 99th percentile Latency (1 to 3); one curve per core count: 2, 4, 8, 16, 32, 64, 128]
As core count increases, the latency slack required to achieve energy savings similar to those at low core counts increases.
At higher core counts, inactive low power modes become increasingly ineffective.

Case Study w/ 32-core Server
| Active Low Power Modes are dependent on low utilization periods
[Figure: Apache; Energy Savings (0 to 1) vs. Normalized 99% Latency (1 to 3) for KnightShift, DreamWeaver, and Batch; annotations: 1.3x, 3x]
KnightShift consistently performs best.
DreamWeaver consistently outperforms Batching.
Batching provides a linear tradeoff.

32-core Server
[Figure: Energy Savings (0 to 1) vs. Normalized 99% Latency (1 to 3) for KnightShift, DreamWeaver, and Batch; four panels: Apache, DNS, Mail, Shell]
Server-level active low power modes outperform inactive low power modes at every latency slack.

Summary
| Server-level low power modes challenges
  ❖ Multi-core scaling reduces idle cycles
  ❖ Server-level Active low power modes remain effective with multi-core scaling

Conclusion
| Revisit effectiveness of Cluster-level Packing techniques
  ❖ Packing techniques are highly effective at masking server EP
  ❖ With improving server EP, it may be favorable to forego cluster-level packing techniques
  ❖ Enable server-level low power mode improvements to translate into cluster-wide improvements
| Server-level low power modes challenges
  ❖ Multi-core scaling requires larger latency slack
  ❖ Server-level active low power modes outperform inactive low power modes at every latency slack

Thank you! Questions?